Steven Peters

Improving AEBS Validation Through Objective Intervention Classification Leveraging the Prediction Divergence Principle

Jul 10, 2025

Abstract:The safety validation of automatic emergency braking systems (AEBS) requires accurately distinguishing between false positive (FP) and true positive (TP) system activations. While simulations allow straightforward differentiation by comparing scenarios with and without interventions, analyzing activations from open-loop resimulations - such as those from field operational testing (FOT) - is more complex. This complexity arises from scenario parameter uncertainty and the influence of driver interventions in the recorded data. Human labeling is frequently used to address these challenges, relying on subjective assessments of intervention necessity or situational criticality, potentially introducing biases and limitations. This work proposes a rule-based classification approach leveraging the Prediction Divergence Principle (PDP) to address those issues. Applied to a simplified AEBS, the proposed method reveals key strengths, limitations, and system requirements for effective implementation. The findings suggest that combining this approach with human labeling may enhance the transparency and consistency of classification, thereby improving the overall validation process. While the rule set for classification derived in this work adopts a conservative approach, the paper outlines future directions for refinement and broader applicability. Finally, this work highlights the potential of such methods to complement existing practices, paving the way for more reliable and reproducible AEBS validation frameworks.
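The abstract notes that, in closed-loop simulation, TP and FP activations can be separated by comparing a scenario with and without the intervention. The sketch below illustrates only that baseline idea, not the PDP rule set proposed in the paper. The `Scenario` fields, the constant-speed ego model, and the 10 s horizon are all assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass
class Scenario:
    """Hypothetical one-dimensional car-following scenario."""
    gap_m: float                  # initial distance to the lead object
    ego_speed_mps: float          # ego speed, held constant without AEBS
    lead_speed_mps: float         # initial speed of the lead object
    lead_decel_mps2: float = 0.0  # lead braking, given as a positive value


def collides_without_intervention(s, horizon_s=10.0, dt=0.01):
    """Roll the scenario forward with the intervention removed."""
    gap = s.gap_m
    v_lead = s.lead_speed_mps
    for _ in range(int(round(horizon_s / dt))):
        # The lead object brakes until standstill; the ego keeps its speed.
        v_lead = max(0.0, v_lead - s.lead_decel_mps2 * dt)
        gap += (v_lead - s.ego_speed_mps) * dt
        if gap <= 0.0:
            return True
    return False


def classify_activation(s):
    """Label an activation TP if a collision occurs without it, else FP."""
    return "TP" if collides_without_intervention(s) else "FP"
```

For example, `classify_activation(Scenario(20.0, 20.0, 0.0))` describes an ego closing on a stationary object and yields a TP under these assumptions. For recorded FOT data this counterfactual is not directly available, which is the gap the abstract describes.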

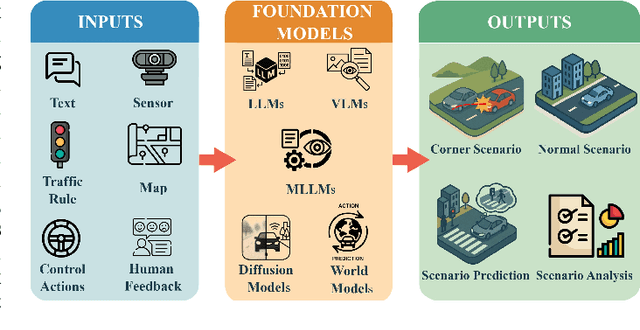

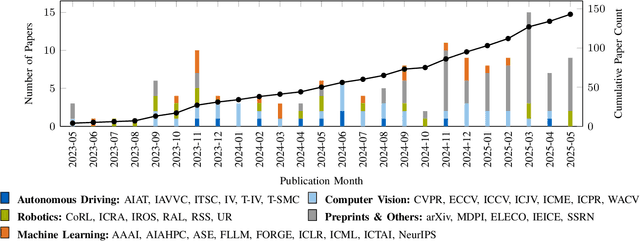

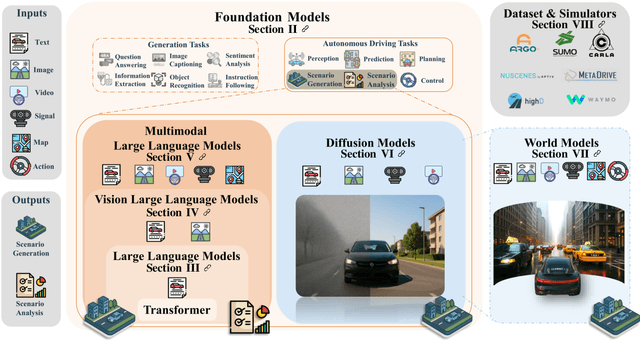

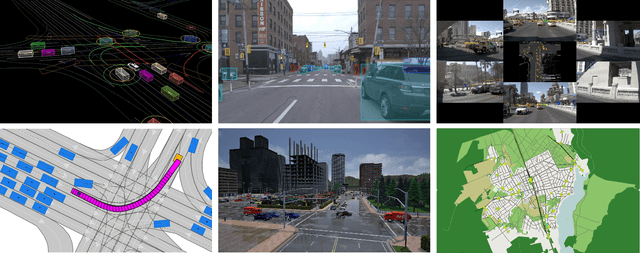

Foundation Models in Autonomous Driving: A Survey on Scenario Generation and Scenario Analysis

Jun 13, 2025

Abstract:For autonomous vehicles, safe navigation in complex environments depends on handling a broad range of diverse and rare driving scenarios. Simulation- and scenario-based testing have emerged as key approaches to development and validation of autonomous driving systems. Traditional scenario generation relies on rule-based systems, knowledge-driven models, and data-driven synthesis, often producing limited diversity and unrealistic safety-critical cases. With the emergence of foundation models, which represent a new generation of pre-trained, general-purpose AI models, developers can process heterogeneous inputs (e.g., natural language, sensor data, HD maps, and control actions), enabling the synthesis and interpretation of complex driving scenarios. In this paper, we conduct a survey about the application of foundation models for scenario generation and scenario analysis in autonomous driving (as of May 2025). Our survey presents a unified taxonomy that includes large language models, vision-language models, multimodal large language models, diffusion models, and world models for the generation and analysis of autonomous driving scenarios. In addition, we review the methodologies, open-source datasets, simulation platforms, and benchmark challenges, and we examine the evaluation metrics tailored explicitly to scenario generation and analysis. Finally, the survey concludes by highlighting the open challenges and research questions, and outlining promising future research directions. All reviewed papers are listed in a continuously maintained repository, which contains supplementary materials and is available at https://github.com/TUM-AVS/FM-for-Scenario-Generation-Analysis.

Towards more efficient quantitative safety validation of residual risk for assisted and automated driving

Jun 12, 2025

Abstract:The safety validation of Advanced Driver Assistance Systems (ADAS) and Automated Driving Systems (ADS) increasingly demands efficient and reliable methods to quantify residual risk while adhering to international standards such as ISO 21448. Traditionally, Field Operational Testing (FOT) has been pivotal for macroscopic safety validation of automotive driving functions up to SAE automation level 2. However, state-of-the-art derivations for empirical safety demonstrations using FOT often result in impractical testing efforts, particularly at higher automation levels. Even at lower automation levels, this limitation - coupled with the substantial costs associated with FOT - motivates the exploration of approaches to enhance the efficiency of FOT-based macroscopic safety validation. Therefore, this publication systematically identifies and evaluates state-of-the-art Reduction Approaches (RAs) for FOT, including novel methods reported in the literature. Based on an analysis of ISO 21448, two models are derived: a generic model capturing the argumentation components of the standard, and a base model, exemplarily applied to Automatic Emergency Braking (AEB) systems, establishing a baseline for the real-world driving requirement for a Quantitative Safety Validation of Residual Risk (QSVRR). Subsequently, the RAs are assessed using four criteria: quantifiability, threats to validity, missing links, and black box compatibility, highlighting potential benefits, inherent limitations, and identifying key areas for further research. Our evaluation reveals that, while several approaches offer potential, none are free from missing links or other substantial shortcomings. Moreover, no identified alternative can fully replace FOT, reflecting its crucial role in the safety validation of ADAS and ADS.
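To illustrate why empirical demonstrations via FOT can demand impractical testing effort, the sketch below uses a common zero-failure argument. It assumes that critical events follow a Poisson process with a constant rate per kilometre. This is a generic statistical illustration and not necessarily the base model derived in the paper; the function name and parameters are hypothetical.

```python
import math


def required_failure_free_distance(max_rate_per_km, confidence=0.95):
    """Distance that must be driven without any event to claim, at the given
    confidence, that the event rate does not exceed max_rate_per_km.

    Assumption: events are Poisson distributed, so the probability of zero
    events over distance d is exp(-rate * d). Requiring this probability to
    be at most (1 - confidence) gives d >= -ln(1 - confidence) / rate.
    """
    if max_rate_per_km <= 0.0:
        raise ValueError("max_rate_per_km must be positive")
    if not 0.0 < confidence < 1.0:
        raise ValueError("confidence must lie strictly between 0 and 1")
    return -math.log(1.0 - confidence) / max_rate_per_km
```

Under these assumptions, demonstrating a rate below one event per million kilometres at 95 % confidence takes roughly three million event-free kilometres, and the required distance grows inversely with the target rate.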

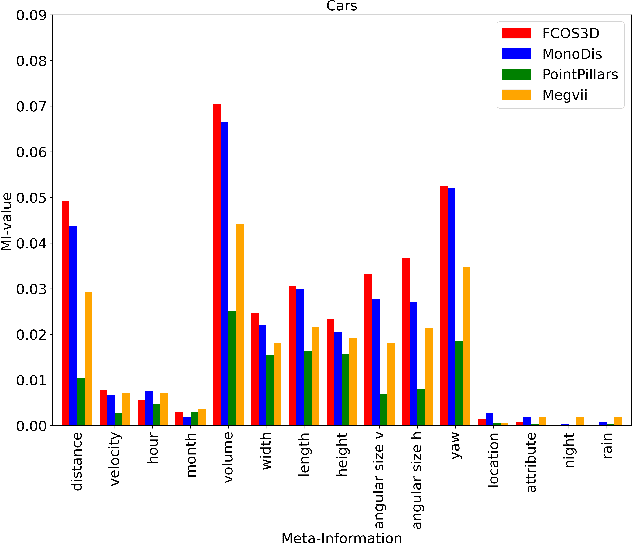

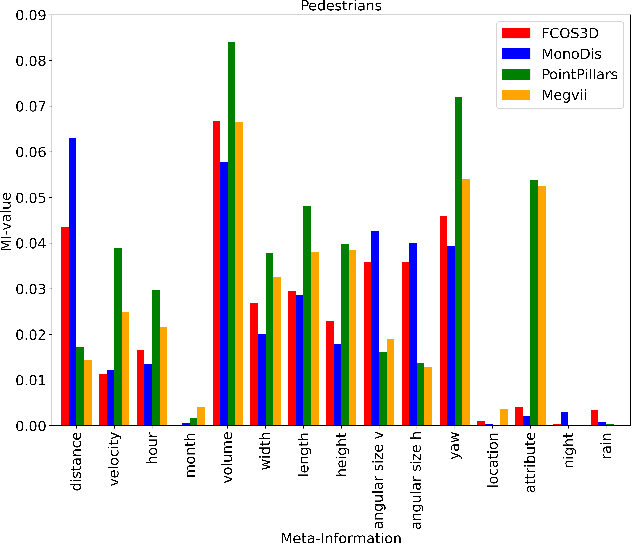

Methodology for a Statistical Analysis of Influencing Factors on 3D Object Detection Performance

Nov 13, 2024

Abstract:In autonomous driving, object detection is an essential task to perceive the environment by localizing and classifying objects. Most object detection algorithms rely on deep learning for their superior performance. However, their black box nature makes it challenging to ensure safety. In this paper, we propose a first-of-its-kind methodology for statistical analysis of the influence of various factors related to the objects to detect or the environment on the detection performance of both LiDAR- and camera-based 3D object detectors. We perform a univariate analysis between each of the factors and the detection error in order to compare the strength of influence. To better identify potential sources of detection errors, we also analyze the performance in dependency of the influencing factors and examine the interdependencies between the different influencing factors. Recognizing the factors that influence detection performance helps identify robustness issues in the trained object detector and supports the safety approval of object detection systems.
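A univariate analysis of this kind can be sketched by computing, for each factor separately, a correlation with the detection error and ranking the factors by its absolute value. The Pearson coefficient is used below purely as an assumption for illustration; the abstract does not state which association measure the paper uses, and the factor names are hypothetical.

```python
import math


def pearson(xs, ys):
    """Pearson correlation coefficient of two equally long sequences."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    spread_x = math.sqrt(sum((x - mean_x) ** 2 for x in xs))
    spread_y = math.sqrt(sum((y - mean_y) ** 2 for y in ys))
    return cov / (spread_x * spread_y)


def rank_factors(factors, errors):
    """Return (factor, correlation) pairs, strongest absolute influence first."""
    scores = {name: pearson(values, errors) for name, values in factors.items()}
    return sorted(scores.items(), key=lambda item: abs(item[1]), reverse=True)


# Hypothetical per-object measurements and detection errors.
errors = [0.1, 0.2, 0.3, 0.4, 0.5]
factors = {
    "occlusion": [0.2, 0.1, 0.4, 0.3, 0.5],
    "distance_m": [10.0, 20.0, 30.0, 40.0, 50.0],
}
ranking = rank_factors(factors, errors)
```

With this toy data, `distance_m` ranks ahead of `occlusion`. A rank-based measure would be a reasonable alternative when the relationship is monotonic but not linear.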

A new Taxonomy for Automated Driving: Structuring Applications based on their Operational Design Domain, Level of Automation and Automation Readiness

Apr 25, 2024

Abstract:The aim of this paper is to investigate the relationship between operational design domains (ODD), automated driving SAE Levels, and Technology Readiness Level (TRL). The first highly automated vehicles, like robotaxis, are in commercial use, and the first vehicles with highway pilot systems have been delivered to private customers. It has emerged as a crucial issue that these automated driving systems differ significantly in their ODD and in their technical maturity. Consequently, any approach to compare these systems is difficult and requires a deep dive into defined ODDs, specifications, and technologies used. Therefore, this paper challenges current state-of-the-art taxonomies and develops a new and integrated taxonomy that can structure automated vehicle systems more efficiently. We use the well-known SAE Levels 0-5 as the "level of responsibility", and link and describe the ODD at an intermediate level of abstraction. Finally, a new maturity model is explicitly proposed to improve the comparability of automated vehicles and driving functions. This method is then used to analyze today's existing automated vehicle applications, which are structured into the new taxonomy and rated by the new maturity levels. Our results indicate that this new taxonomy and maturity level model will help to differentiate automated vehicle systems in discussions more clearly and to discover white fields more systematically and upfront, e.g. for research but also for regulatory purposes.

Explainable AI for Safe and Trustworthy Autonomous Driving: A Systematic Review

Feb 08, 2024

Abstract:Artificial Intelligence (AI) shows promising applications for the perception and planning tasks in autonomous driving (AD) due to its superior performance compared to conventional methods. However, inscrutable AI systems exacerbate the existing challenge of safety assurance of AD. One way to mitigate this challenge is to utilize explainable AI (XAI) techniques. To this end, we present the first comprehensive systematic literature review of explainable methods for safe and trustworthy AD. We begin by analyzing the requirements for AI in the context of AD, focusing on three key aspects: data, model, and agency. We find that XAI is fundamental to meeting these requirements. Based on this, we explain the sources of explanations in AI and describe a taxonomy of XAI. We then identify five key contributions of XAI for safe and trustworthy AI in AD, which are interpretable design, interpretable surrogate models, interpretable monitoring, auxiliary explanations, and interpretable validation. Finally, we propose a modular framework called SafeX to integrate these contributions, enabling explanation delivery to users while simultaneously ensuring the safety of AI models.

Acceptance and Trust: Drivers' First Contact with Released Automated Vehicles in Naturalistic Traffic

Dec 19, 2023

Abstract:This study investigates the impact of initial contact of drivers with an SAE Level 3 Automated Driving System (ADS) under real traffic conditions, focusing on the Mercedes-Benz Drive Pilot in the EQS. It examines Acceptance, Trust, Usability, and User Experience. Although previous studies in simulated environments provided insights into human-automation interaction, real-world experiences can differ significantly. The research was conducted on a segment of German interstate with 30 participants lacking familiarity with Level 3 ADS. Pre- and post-driving questionnaires were used to assess changes in acceptance and confidence. Supplementary metrics included post-driving ratings for usability and user experience. Findings reveal a significant increase in acceptance and trust following the first contact, confirming results from prior simulator studies. Factors such as Performance Expectancy, Effort Expectancy, Facilitating Condition, Self-Efficacy, and Behavioral Intention to use the vehicle were rated higher after initial contact with the ADS. However, inadequate communication from the ADS to the human driver was detected, highlighting the need for improved communication to prevent misuse or confusion about the operating mode. Contrary to prior research, we found no significant impact of general attitudes towards technological innovation on acceptance and trust. However, it's worth noting that most participants already had a high affinity for technology. Although overall reception was positive and showed an upward trend post first contact, the ADS was also perceived to be as demanding as manual driving. Future research should focus on a more diverse participant sample and include longer or multiple real-traffic trips to understand behavioral adaptations over time.

SURE-Val: Safe Urban Relevance Extension and Validation

Aug 04, 2023

Abstract:To evaluate perception components of an automated driving system, it is necessary to define the relevant objects. While the urban domain is popular among perception datasets, relevance is insufficiently specified for this domain. Therefore, this work adopts an existing method to define relevance in the highway domain and expands it to the urban domain. While different conceptualizations and definitions of relevance are present in literature, there is a lack of methods to validate these definitions. Therefore, this work presents a novel relevance validation method leveraging a motion prediction component. The validation leverages the idea that removing irrelevant objects should not influence a prediction component which reflects human driving behavior. The influence on the prediction is quantified by considering the statistical distribution of prediction performance across a large-scale dataset. The validation procedure is verified using criteria specifically designed to exclude relevant objects. The validation method is successfully applied to the relevance criteria from this work, thus supporting their validity.

Towards Establishing Systematic Classification Requirements for Automated Driving

Jul 26, 2023

Abstract:Despite the presence of the classification task in many different benchmark datasets for perception in the automotive domain, few efforts have been undertaken to define consistent classification requirements. This work addresses the topic by proposing a structured method to generate a classification structure. First, legal categories are identified based on behavioral requirements for the vehicle. This structure is further substantiated by considering the two aspects of collision safety for objects as well as perceptual categories. A classification hierarchy is obtained by applying the method to an exemplary legal text. A comparison of the results with benchmark dataset categories shows limited agreement. This indicates the necessity for explicit consideration of legal requirements regarding perception.

Conservative Estimation of Perception Relevance of Dynamic Objects for Safe Trajectories in Automotive Scenarios

Jul 20, 2023

Abstract:Having efficient testing strategies is a core challenge that needs to be overcome for the release of automated driving. This necessitates clear requirements as well as suitable methods for testing. In this work, the requirements for perception modules are considered with respect to relevance. The concept of relevance currently remains insufficiently defined and specified. In this paper, we propose a novel methodology to overcome this challenge by exemplary application to collision safety in the highway domain. Using this general system and use case specification, a corresponding concept for relevance is derived. Irrelevant objects are thus defined as objects which do not limit the set of safe actions available to the ego vehicle under consideration of all uncertainties. As an initial step, the use case is decomposed into functional scenarios with respect to collision relevance. For each functional scenario, possible actions of both the ego vehicle and any other dynamic object are formalized as equations. This set of possible actions is constrained by traffic rules, yielding relevance criteria. As a result, we present a conservative estimation of which dynamic objects are relevant for perception and need to be considered for a complete evaluation. The estimation provides requirements which are applicable for offline testing and validation of perception components. A visualization is presented for examples from the highD dataset, showing the plausibility of the results. Finally, a possibility for a future validation of the presented relevance concept is outlined.
