Anthony Shek

RelCAT: Advancing Extraction of Clinical Inter-Entity Relationships from Unstructured Electronic Health Records

Jan 27, 2025

Abstract:This study introduces RelCAT (Relation Concept Annotation Toolkit), an interactive tool, library, and workflow designed to classify relations between entities extracted from clinical narratives. Building upon the CogStack MedCAT framework, RelCAT addresses the challenge of capturing complete clinical relations dispersed within text. The toolkit implements state-of-the-art machine learning models such as BERT and Llama along with proven evaluation and training methods. We demonstrate a dataset annotation tool (built within MedCATTrainer), model training, and evaluate our methodology on both openly available gold-standard and real-world UK National Health Service (NHS) hospital clinical datasets. We perform extensive experimentation and a comparative analysis of the various publicly available models with varied approaches selected for model fine-tuning. Finally, we achieve macro F1-scores of 0.977 on the gold-standard n2c2, surpassing the previous state-of-the-art performance, and achieve performance of >=0.93 F1 on our NHS gathered datasets.
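For readers unfamiliar with the macro F1-score reported above, the following is a minimal sketch of how the metric is conventionally computed: per-class F1 averaged with equal weight per class. It is not taken from the RelCAT code base; the function name `macro_f1` and the relation labels in the usage example are hypothetical.

```python
from collections import Counter


def macro_f1(y_true, y_pred):
    """Unweighted mean of per-class F1 scores (illustrative helper, not RelCAT's API)."""
    labels = sorted(set(y_true) | set(y_pred))
    tp, fp, fn = Counter(), Counter(), Counter()
    for truth, pred in zip(y_true, y_pred):
        if truth == pred:
            tp[truth] += 1
        else:
            fp[pred] += 1   # predicted class gains a false positive
            fn[truth] += 1  # true class gains a false negative
    scores = []
    for label in labels:
        # F1 = 2TP / (2TP + FP + FN); defined as 0 when the denominator is 0
        denom = 2 * tp[label] + fp[label] + fn[label]
        scores.append(2 * tp[label] / denom if denom else 0.0)
    return sum(scores) / len(scores)


# Hypothetical relation labels, for illustration only
gold = ["drug-dose", "drug-dose", "drug-route", "drug-route"]
pred = ["drug-dose", "drug-route", "drug-route", "drug-route"]
score = macro_f1(gold, pred)
```

Because every class contributes equally, a rare relation type that is classified poorly lowers the macro score as much as a frequent one would.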

Improving Extraction of Clinical Event Contextual Properties from Electronic Health Records: A Comparative Study

Aug 30, 2024

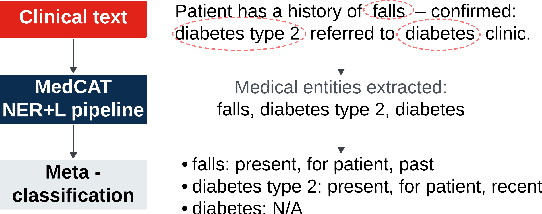

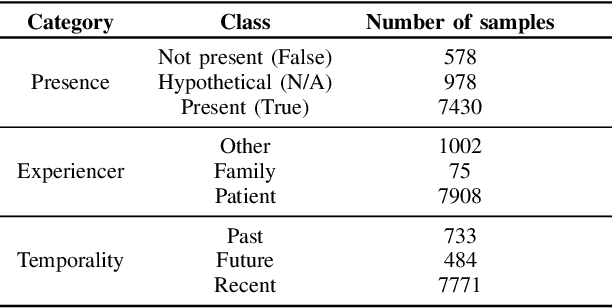

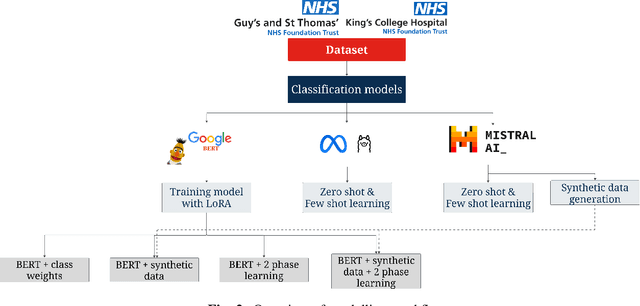

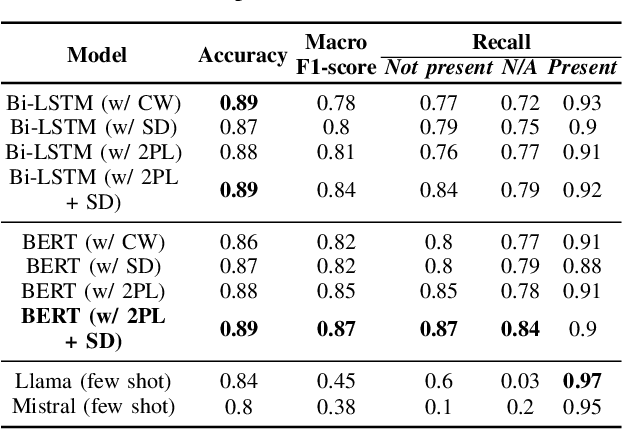

Abstract:Electronic Health Records are large repositories of valuable clinical data, with a significant portion stored in unstructured text format. This textual data includes clinical events (e.g., disorders, symptoms, findings, medications and procedures) in context that if extracted accurately at scale can unlock valuable downstream applications such as disease prediction. Using an existing Named Entity Recognition and Linking methodology, MedCAT, these identified concepts need to be further classified (contextualised) for their relevance to the patient, and their temporal and negated status for example, to be useful downstream. This study performs a comparative analysis of various natural language models for medical text classification. Extensive experimentation reveals the effectiveness of transformer-based language models, particularly BERT. When combined with class imbalance mitigation techniques, BERT outperforms Bi-LSTM models by up to 28% and the baseline BERT model by up to 16% for recall of the minority classes. The method has been implemented as part of CogStack/MedCAT framework and made available to the community for further research.
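The abstract does not say which class imbalance mitigation techniques were used. As an illustration of one common option only, the sketch below computes inverse-frequency class weights, which can be passed to a weighted loss so that minority classes count for more during training. The function name and the example labels are hypothetical, and this is not claimed to be the method used in the study.

```python
from collections import Counter


def inverse_frequency_weights(labels):
    """Weight each class by n_samples / (n_classes * class_count).

    Illustrative helper: rarer classes receive proportionally larger weights.
    """
    counts = Counter(labels)
    n_samples = len(labels)
    n_classes = len(counts)
    return {cls: n_samples / (n_classes * count) for cls, count in counts.items()}


# Hypothetical contextual labels for extracted concepts: 8 majority, 2 minority
example_labels = ["affirmed"] * 8 + ["negated"] * 2
weights = inverse_frequency_weights(example_labels)
```

With these counts the minority class receives four times the weight of the majority class, which is the kind of adjustment intended to improve minority-class recall.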

Validating transformers for redaction of text from electronic health records in real-world healthcare

Oct 05, 2023

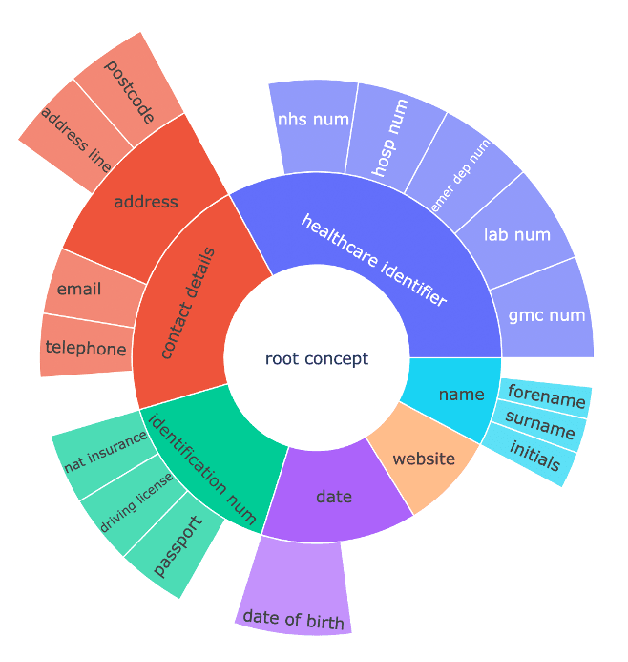

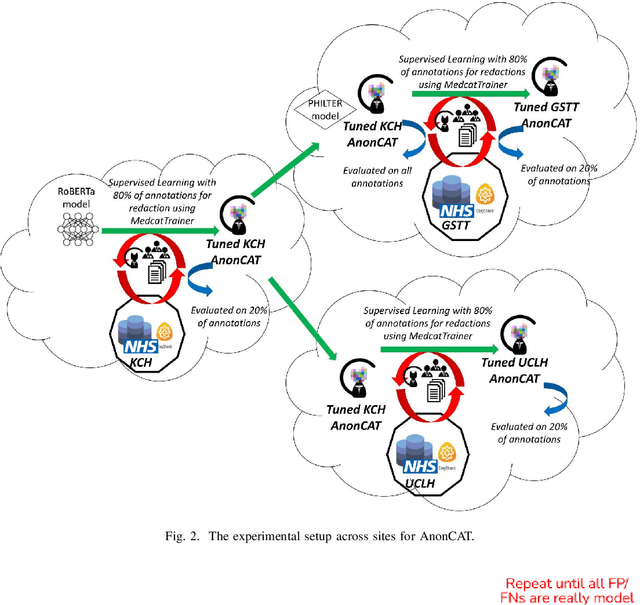

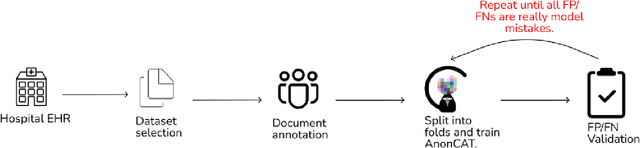

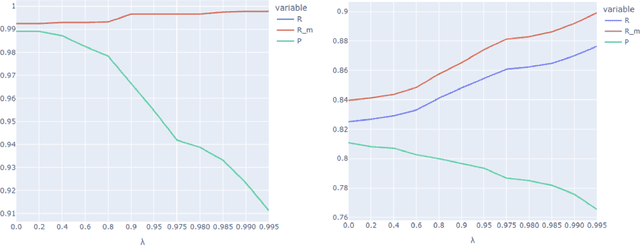

Abstract:Protecting patient privacy in healthcare records is a top priority, and redaction is a commonly used method for obscuring directly identifiable information in text. Rule-based methods have been widely used, but their precision is often low, causing over-redaction of text, and they are frequently not adaptable enough for non-standardised or unconventional structures of personal health information. Deep learning techniques have emerged as a promising solution, but implementing them in real-world environments poses challenges due to the differences in patient record structure and language across different departments, hospitals, and countries. In this study, we present AnonCAT, a transformer-based model and a blueprint on how deidentification models can be deployed in real-world healthcare. AnonCAT was trained through a process involving manually annotated redactions of real-world documents from three UK hospitals with different electronic health record systems and 3116 documents. The model achieved high performance in all three hospitals with a Recall of 0.99, 0.99 and 0.96. Our findings demonstrate the potential of deep learning techniques for improving the efficiency and accuracy of redaction in global healthcare data and highlight the importance of building workflows which not only use these models but are also able to continually fine-tune and audit the performance of these algorithms to ensure continuing effectiveness in real-world settings. This approach provides a blueprint for the real-world use of de-identifying algorithms through fine-tuning and localisation; the code, together with tutorials, is available on GitHub (https://github.com/CogStack/MedCAT).

Foresight -- Deep Generative Modelling of Patient Timelines using Electronic Health Records

Dec 13, 2022

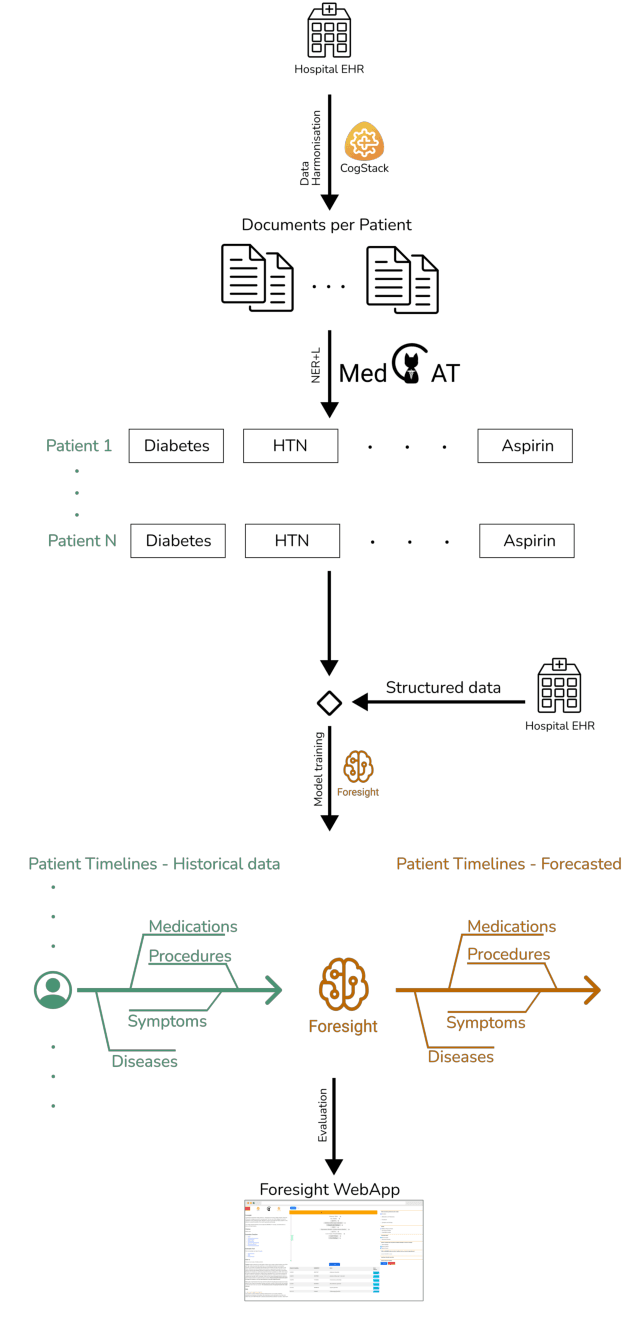

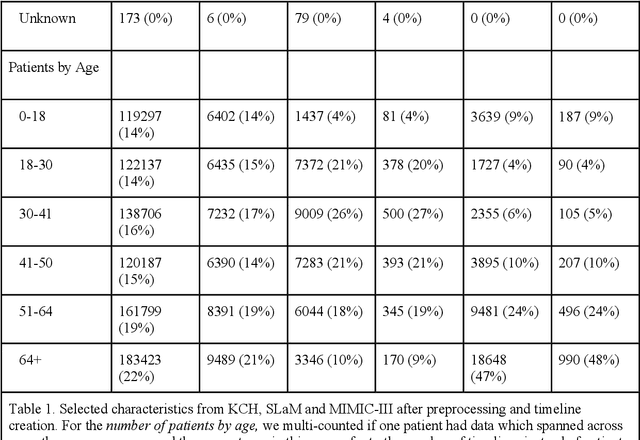

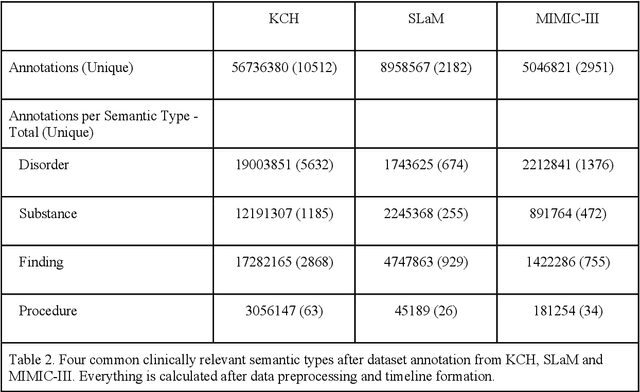

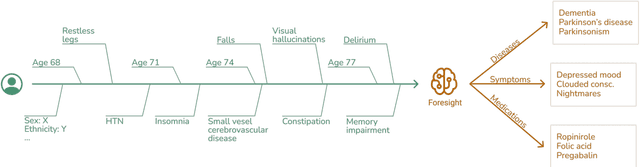

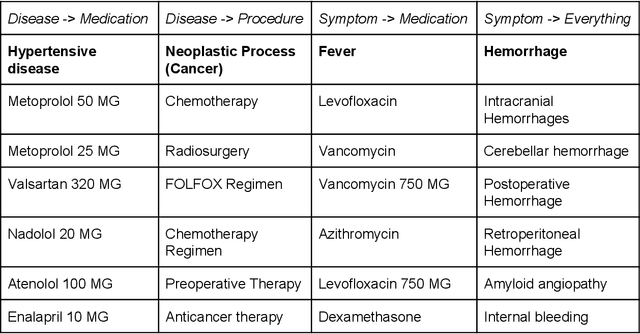

Abstract:Electronic Health Records (EHRs) hold detailed longitudinal information about each patient's health status and general clinical history, a large portion of which is stored within the unstructured text. Temporal modelling of this medical history, which considers the sequence of events, can be used to forecast and simulate future events, estimate risk, suggest alternative diagnoses or forecast complications. While most prediction approaches use mainly structured data or a subset of single-domain forecasts and outcomes, we processed the entire free-text portion of EHRs for longitudinal modelling. We present Foresight, a novel GPT3-based pipeline that uses NER+L tools (i.e. MedCAT) to convert document text into structured, coded concepts, followed by providing probabilistic forecasts for future medical events such as disorders, medications, symptoms and interventions. Since large portions of EHR data are in text form, such an approach benefits from a granular and detailed view of a patient while introducing modest additional noise. On tests in two large UK hospitals (King's College Hospital, South London and Maudsley) and the US MIMIC-III dataset precision@10 of 0.80, 0.81 and 0.91 was achieved for forecasting the next biomedical concept. Foresight was also validated on 34 synthetic patient timelines by 5 clinicians and achieved relevancy of 97% for the top forecasted candidate disorder. Foresight can be easily trained and deployed locally as it only requires free-text data (as a minimum). As a generative model, it can simulate follow-on disorders, medications and interventions for as many steps as required. Foresight is a general-purpose model for biomedical concept modelling that can be used for real-world risk estimation, virtual trials and clinical research to study the progression of diseases, simulate interventions and counterfactuals, and for educational purposes.
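To make the precision@10 figures above concrete, here is a minimal sketch under the assumption that a forecast counts as correct when the true next concept appears anywhere among the model's top-k ranked candidates. The abstract does not spell out this definition, so treat it as an assumption. The function name and the concept strings are hypothetical and are not part of the Foresight code.

```python
def precision_at_k(ranked_candidates, true_next, k):
    """Fraction of forecasts whose true next concept is among the top-k candidates.

    ranked_candidates: list of candidate lists, each ordered best-first.
    true_next: list of the concepts that actually occurred next.
    """
    if not true_next:
        return 0.0
    hits = sum(
        1
        for candidates, truth in zip(ranked_candidates, true_next)
        if truth in candidates[:k]
    )
    return hits / len(true_next)


# Hypothetical forecasts for two patient timelines
forecasts = [
    ["diabetes", "hypertension", "asthma"],
    ["asthma", "copd", "influenza"],
]
observed = ["hypertension", "influenza"]
p_at_1 = precision_at_k(forecasts, observed, k=1)
p_at_2 = precision_at_k(forecasts, observed, k=2)
p_at_3 = precision_at_k(forecasts, observed, k=3)
```

Under this reading the score can only stay the same or rise as k grows, since a larger k admits more candidates per forecast.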

MedGPT: Medical Concept Prediction from Clinical Narratives

Jul 07, 2021

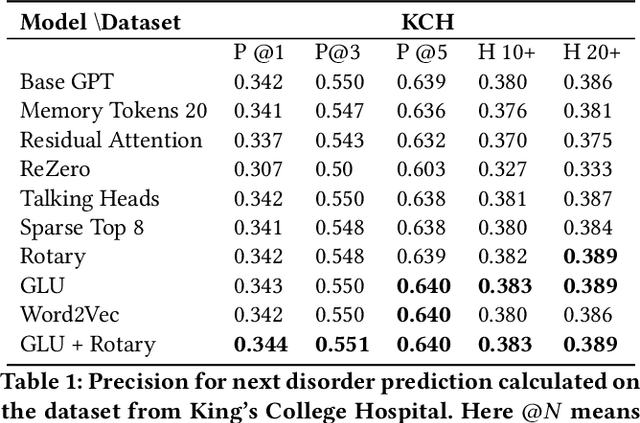

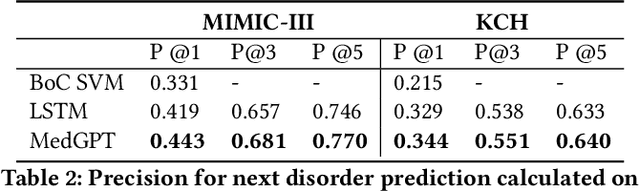

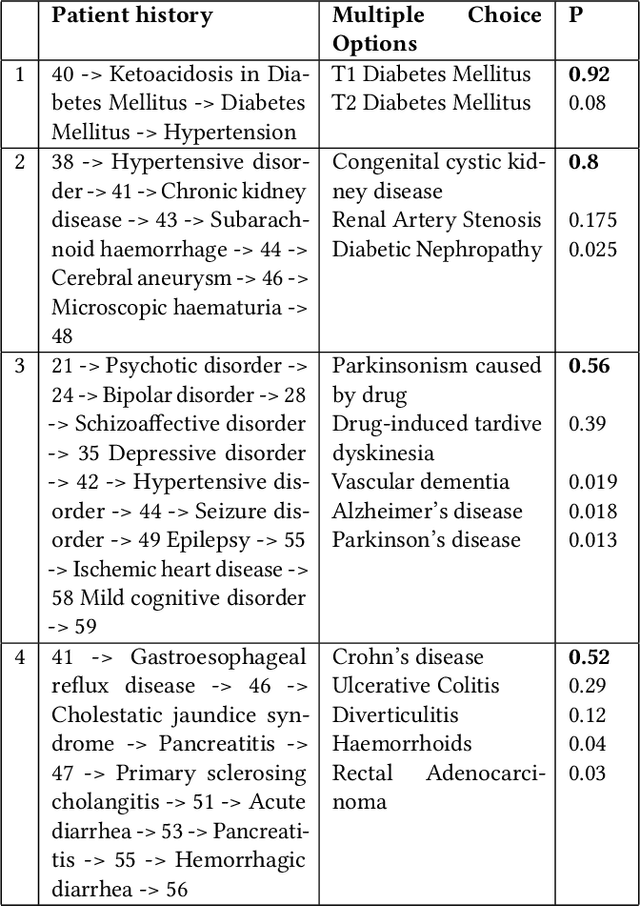

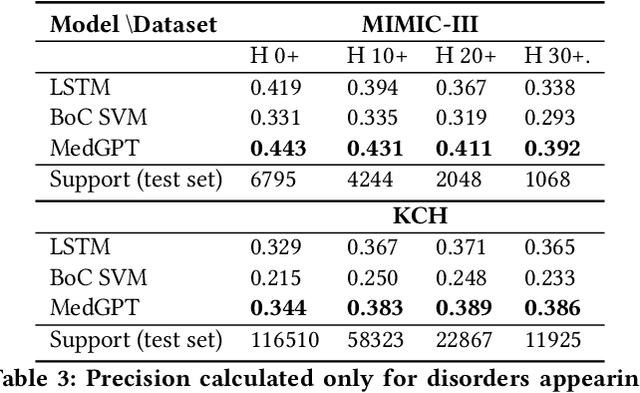

Abstract:The data available in Electronic Health Records (EHRs) provides the opportunity to transform care, and the best way to provide better care for one patient is through learning from the data available on all other patients. Temporal modelling of a patient's medical history, which takes into account the sequence of past events, can be used to predict future events such as a diagnosis of a new disorder or complication of a previous or existing disorder. While most prediction approaches use mostly the structured data in EHRs or a subset of single-domain predictions and outcomes, we present MedGPT, a novel transformer-based pipeline that uses Named Entity Recognition and Linking tools (i.e. MedCAT) to structure and organize the free text portion of EHRs and anticipate a range of future medical events (initially disorders). Since a large portion of EHR data is in text form, such an approach benefits from a granular and detailed view of a patient while introducing modest additional noise. MedGPT effectively deals with the noise and the added granularity, and achieves a precision of 0.344, 0.552 and 0.640 (vs LSTM 0.329, 0.538 and 0.633) when predicting the top 1, 3 and 5 candidate future disorders on real world hospital data from King's College Hospital, London, UK (~600k patients). We also show that our model captures medical knowledge by testing it on an experimental medical multiple choice question answering task, and by examining the attentional focus of the model using gradient-based saliency methods.

A Knowledge Distillation Ensemble Framework for Predicting Short and Long-term Hospitalisation Outcomes from Electronic Health Records Data

Nov 18, 2020

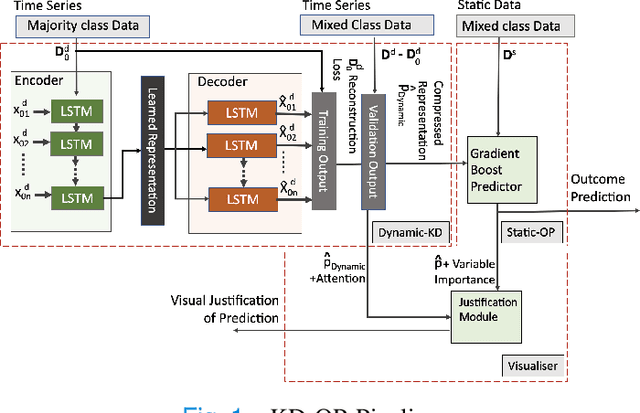

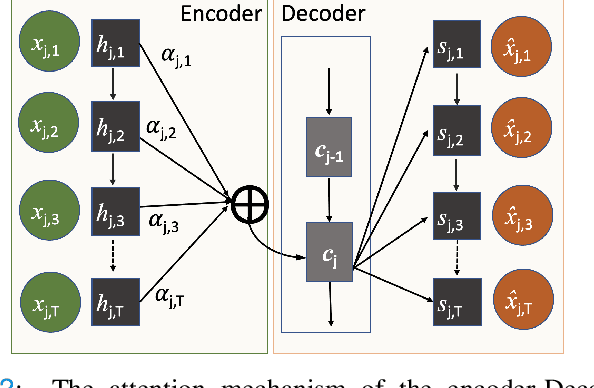

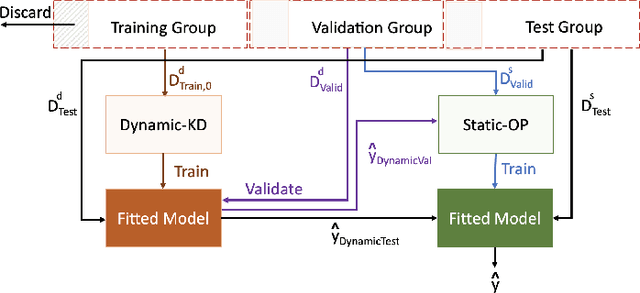

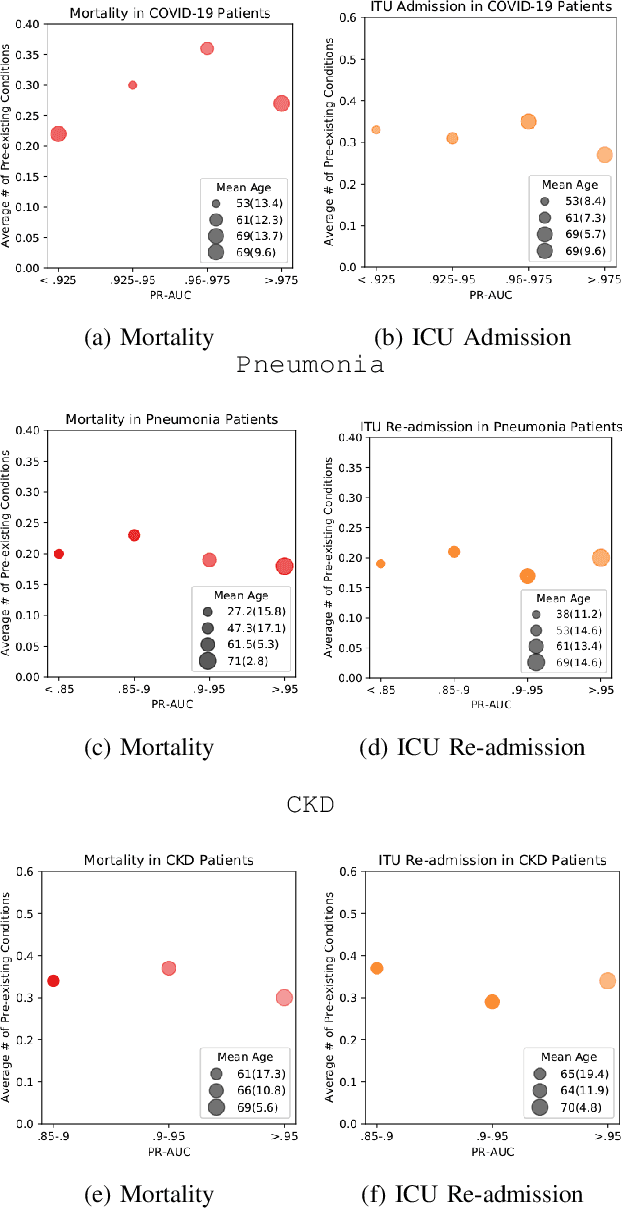

Abstract:The ability to perform accurate prognosis of patients is crucial for proactive clinical decision making, informed resource management and personalised care. Existing outcome prediction models suffer from a low recall of infrequent positive outcomes. We present a highly-scalable and robust machine learning framework to automatically predict adversity represented by mortality and ICU admission from time-series vital signs and laboratory results obtained within the first 24 hours of hospital admission. The stacked platform comprises two components: a) an unsupervised LSTM Autoencoder that learns an optimal representation of the time-series, using it to differentiate the less frequent patterns which conclude with an adverse event from the majority patterns that do not, and b) a gradient boosting model, which relies on the constructed representation to refine prediction, incorporating static features of demographics, admission details and clinical summaries. The model is used to assess a patient's risk of adversity over time and provides visual justifications of its prediction based on the patient's static features and dynamic signals. Results of three case studies for predicting mortality and ICU admission show that the model outperforms all existing outcome prediction models, achieving PR-AUC of 0.93 (95% CI: 0.878-0.969) in predicting mortality in ICU and general ward settings and 0.987 (95% CI: 0.985-0.995) in predicting ICU admission.

Multi-domain Clinical Natural Language Processing with MedCAT: the Medical Concept Annotation Toolkit

Oct 02, 2020

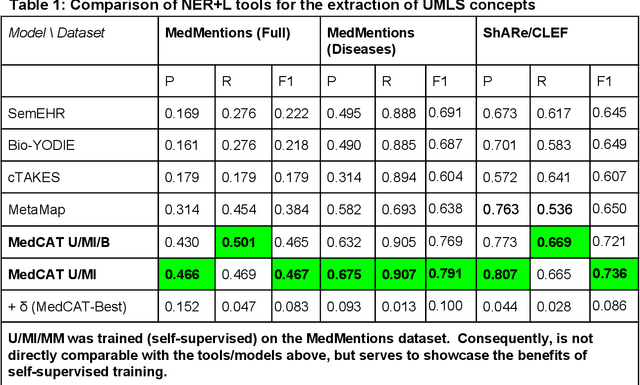

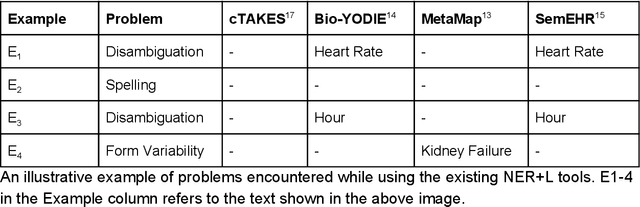

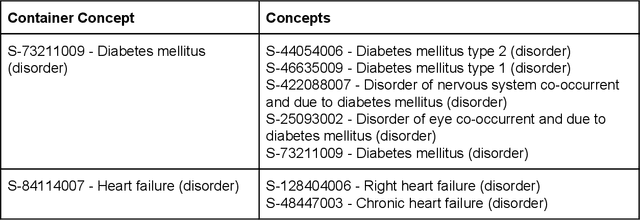

Abstract:Electronic health records (EHR) contain large volumes of unstructured text, requiring the application of Information Extraction (IE) technologies to enable clinical analysis. We present the open source Medical Concept Annotation Toolkit (MedCAT) that provides: a) a novel self-supervised machine learning algorithm for extracting concepts using any concept vocabulary including UMLS/SNOMED-CT; b) a feature-rich annotation interface for customizing and training IE models; and c) integrations to the broader CogStack ecosystem for vendor-agnostic health system deployment. We show improved performance in extracting UMLS concepts from open datasets (F1 0.467-0.791 vs 0.384-0.691). Further real-world validation demonstrates SNOMED-CT extraction at 3 large London hospitals with self-supervised training over ~8.8B words from ~17M clinical records and further fine-tuning with ~6K clinician annotated examples. We show strong transferability (F1 >0.94) between hospitals, datasets and concept types indicating cross-domain EHR-agnostic utility for accelerated clinical and research use cases.
