Aaron Tuor

Homotopy-Guided Self-Supervised Learning of Parametric Solutions for AC Optimal Power Flow

Nov 11, 2025

Abstract:Learning to optimize (L2O) parametric approximations of AC optimal power flow (AC-OPF) solutions offers the potential for fast, reusable decision-making in real-time power system operations. However, the inherent nonconvexity of AC-OPF results in challenging optimization landscapes, and standard learning approaches often fail to converge to feasible, high-quality solutions. This work introduces a homotopy-guided self-supervised L2O method for parametric AC-OPF problems. The key idea is to construct a continuous deformation of the objective and constraints during training, beginning from a relaxed problem with a broad basin of attraction and gradually transforming it toward the original problem. The resulting learning process improves convergence stability and promotes feasibility without requiring labeled optimal solutions or external solvers. We evaluate the proposed method on standard IEEE AC-OPF benchmarks and show that homotopy-guided L2O significantly increases feasibility rates compared to non-homotopy baselines, while achieving objective values comparable to full OPF solvers. These findings demonstrate the promise of homotopy-based heuristics for scalable, constraint-aware L2O in power system optimization.
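The deformation idea in this abstract can be illustrated on a toy scalar problem. The sketch below is not AC-OPF and not the authors' method: the objective `f`, the convex surrogate `g`, the linear blend `(1 - t) * g + t * f`, and all step sizes are assumptions chosen only to show how starting from a relaxed problem can steer gradient descent away from a poor local minimum.

```python
def f(x):
    """Toy nonconvex objective (assumed for illustration)."""
    return x**4 - 3 * x**2 + x

def f_grad(x):
    """Gradient of f."""
    return 4 * x**3 - 6 * x + 1

def g_grad(x):
    """Gradient of the convex surrogate g(x) = x**2 (the relaxed problem)."""
    return 2 * x

def descend(grad, x, steps=200, lr=0.01):
    """Plain gradient descent on whatever gradient function is passed in."""
    for _ in range(steps):
        x -= lr * grad(x)
    return x

def homotopy_descend(x, stages=21, steps=200, lr=0.01):
    """Descend on (1 - t) * g + t * f while t ramps from 0 to 1."""
    for i in range(stages):
        t = i / (stages - 1)
        blended = lambda z, t=t: (1 - t) * g_grad(z) + t * f_grad(z)
        x = descend(blended, x, steps, lr)
    return x

# Same starting point and same total number of gradient steps for both runs.
x_plain = descend(f_grad, 2.0, steps=21 * 200)
x_homotopy = homotopy_descend(2.0)
print("plain descent:   x = %.4f, f(x) = %.4f" % (x_plain, f(x_plain)))
print("homotopy descent: x = %.4f, f(x) = %.4f" % (x_homotopy, f(x_homotopy)))
```

On this toy, plain descent from `x = 2.0` settles in the shallower minimum on the positive side, while the homotopy run follows the surrogate's minimizer into the deeper basin on the negative side. Whether a given surrogate leads to a better basin is problem dependent.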

Domain-aware Control-oriented Neural Models for Autonomous Underwater Vehicles

Aug 15, 2022

Abstract:Conventional physics-based modeling is a time-consuming bottleneck in control design for complex nonlinear systems like autonomous underwater vehicles (AUVs). In contrast, purely data-driven models, though convenient and quick to obtain, require a large number of observations and lack operational guarantees for safety-critical systems. Data-driven models that leverage available, partially characterized dynamics have the potential to provide reliable system models in the data-limited scenarios typical of high-value complex systems, thereby avoiding months of expensive expert modeling time. In this work we explore this middle ground between expert-modeled and purely data-driven modeling. We present control-oriented parametric models with varying levels of domain-awareness that exploit known system structure and prior physics knowledge to create constrained deep neural dynamical system models. We employ universal differential equations to construct data-driven blackbox and graybox representations of the AUV dynamics. In addition, we explore a hybrid formulation that explicitly models the residual error related to imperfect graybox models. We compare the prediction performance of the learned models for different distributions of initial conditions and control inputs to assess their accuracy, generalization, and suitability for control.

Differentiable Predictive Control with Safety Guarantees: A Control Barrier Function Approach

Aug 03, 2022

Abstract:We develop a novel form of differentiable predictive control (DPC) with safety and robustness guarantees based on control barrier functions. DPC is an unsupervised learning-based method for obtaining approximate solutions to explicit model predictive control (MPC) problems. In DPC, the predictive control policy parametrized by a neural network is optimized offline via direct policy gradients obtained by automatic differentiation of the MPC problem. The proposed approach exploits a new form of sampled-data barrier function to enforce offline and online safety requirements in DPC settings while only interrupting the neural network-based controller near the boundary of the safe set. The effectiveness of the proposed approach is demonstrated in simulation.
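The notion of a barrier function that only interrupts the controller near the boundary of the safe set can be sketched with a standard discrete-time control barrier function condition. This is a textbook construction, not the sampled-data barrier function proposed in the paper. The scalar integrator `x_next = x + dt * u`, the safe set `x <= x_max`, the decay rate `gamma`, and the constant nominal input are all assumptions made for illustration.

```python
def safety_filter(u_nom, x, x_max=1.0, dt=0.1, gamma=0.5):
    """Clip the nominal input so that h(x) = x_max - x stays nonnegative.

    The discrete-time condition h(x_next) >= (1 - gamma) * h(x), applied to
    x_next = x + dt * u, reduces to the bound u <= gamma * h(x) / dt.
    """
    h = x_max - x
    u_bound = gamma * h / dt
    return min(u_nom, u_bound)

def simulate(x0=0.0, u_nom=1.0, steps=50, dt=0.1):
    """Roll out the integrator with a constant nominal input (a stand-in
    for a learned policy) passed through the safety filter."""
    xs, us = [x0], []
    x = x0
    for _ in range(steps):
        u = safety_filter(u_nom, x, dt=dt)
        x = x + dt * u
        xs.append(x)
        us.append(u)
    return xs, us

xs, us = simulate()
print("first input: %.3f, max state: %.6f" % (us[0], max(xs)))
```

Far from the boundary the bound is loose and the nominal input passes through unchanged. Close to `x_max` the filter scales the input down so the state approaches the boundary without crossing it.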

Structural Inference of Networked Dynamical Systems with Universal Differential Equations

Jul 11, 2022

Abstract:Networked dynamical systems are common throughout science and engineering; e.g., biological networks, reaction networks, power systems, and the like. For many such systems, nonlinearity drives populations of identical (or near-identical) units to exhibit a wide range of nontrivial behaviors, such as the emergence of coherent structures (e.g., waves and patterns) or otherwise notable dynamics (e.g., synchrony and chaos). In this work, we seek to infer (i) the intrinsic physics of a base unit of a population, (ii) the underlying graphical structure shared between units, and (iii) the coupling physics of a given networked dynamical system given observations of nodal states. These tasks are formulated around the notion of the Universal Differential Equation, whereby unknown dynamical systems can be approximated with neural networks, mathematical terms known a priori (albeit with unknown parameterizations), or combinations of the two. We demonstrate the value of these inference tasks by investigating not only future state predictions but also the inference of system behavior on varied network topologies. The effectiveness and utility of these methods are shown with their application to canonical networked nonlinear coupled oscillators.

Neural Lyapunov Differentiable Predictive Control

May 22, 2022

Abstract:We present a learning-based predictive control methodology using the differentiable programming framework with probabilistic Lyapunov-based stability guarantees. Neural Lyapunov differentiable predictive control (NLDPC) learns the policy by constructing a computational graph encompassing the system dynamics, state and input constraints, and the necessary Lyapunov certification constraints, and thereafter using automatic differentiation to update the neural policy parameters. In conjunction, our approach jointly learns a Lyapunov function that certifies the regions of state-space with stable dynamics. We also provide a sampling-based statistical guarantee for the training of NLDPC from the distribution of initial conditions. Our offline training approach provides a computationally efficient and scalable alternative to classical explicit model predictive control solutions. We substantiate the advantages of the proposed approach with simulations that stabilize a double integrator model and control an aircraft model.

Neuro-physical dynamic load modeling using differentiable parametric optimization

Mar 20, 2022

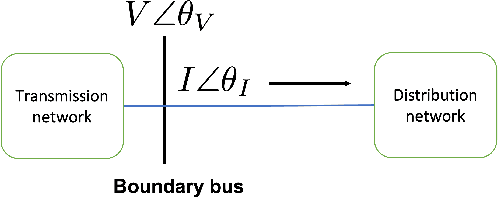

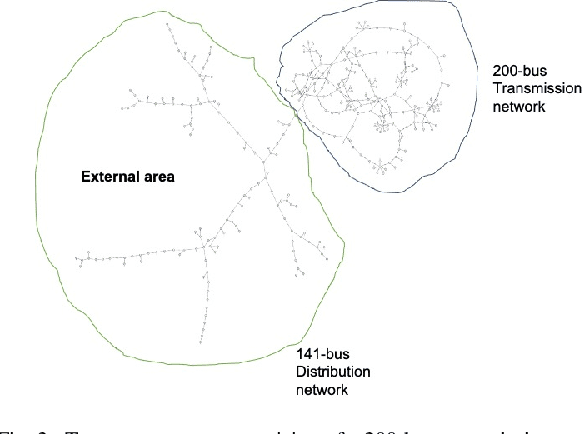

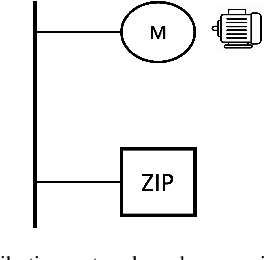

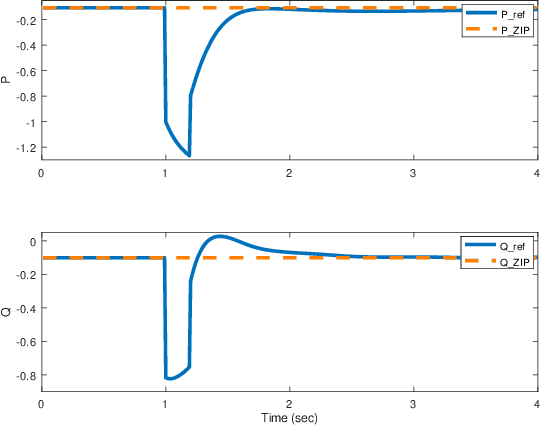

Abstract:In this work, we investigate a data-driven approach for obtaining a reduced equivalent load model of distribution systems for electromechanical transient stability analysis. The proposed reduced equivalent is a neuro-physical model comprising a traditional ZIP load model augmented with a neural network. This neuro-physical model is trained through differentiable programming. We discuss the formulation, modeling details, and training of the proposed model set up as a differentiable parametric program. The performance and accuracy of this neuro-physical ZIP load model are presented on a medium-scale 350-bus transmission-distribution network.
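For readers unfamiliar with the ZIP model, the sketch below evaluates the standard polynomial form, which blends constant-impedance (Z), constant-current (I), and constant-power (P) components of a load as a function of voltage. The coefficient values are arbitrary, and the additive `correction` callable is only a hypothetical stand-in for the neural network term; the abstract does not specify how the two parts are combined.

```python
def zip_power(v, p0, v0=1.0, a_z=0.4, a_i=0.3, a_p=0.3):
    """Active power of a ZIP load at voltage magnitude v.

    p0 is the power drawn at the nominal voltage v0. The coefficients
    a_z, a_i, a_p are the impedance, current, and power shares; they are
    illustrative values chosen to sum to 1.
    """
    ratio = v / v0
    return p0 * (a_z * ratio**2 + a_i * ratio + a_p)

def neuro_physical_power(v, p0, correction, **zip_kwargs):
    """ZIP prediction plus a learned correction (hypothetical additive form).

    `correction` is any callable of the voltage; in a trained model it
    would be a neural network.
    """
    return zip_power(v, p0, **zip_kwargs) + correction(v)

print(zip_power(1.0, 100.0))   # load at nominal voltage
print(zip_power(0.9, 100.0))   # load under a 10% voltage sag
print(neuro_physical_power(0.9, 100.0, correction=lambda v: 0.0))
```

With coefficients that sum to 1, the model returns `p0` at nominal voltage, and a zero correction reduces the combined model to the plain ZIP load.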

Neural Ordinary Differential Equations for Nonlinear System Identification

Mar 15, 2022

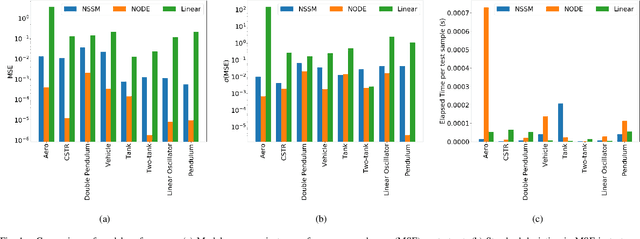

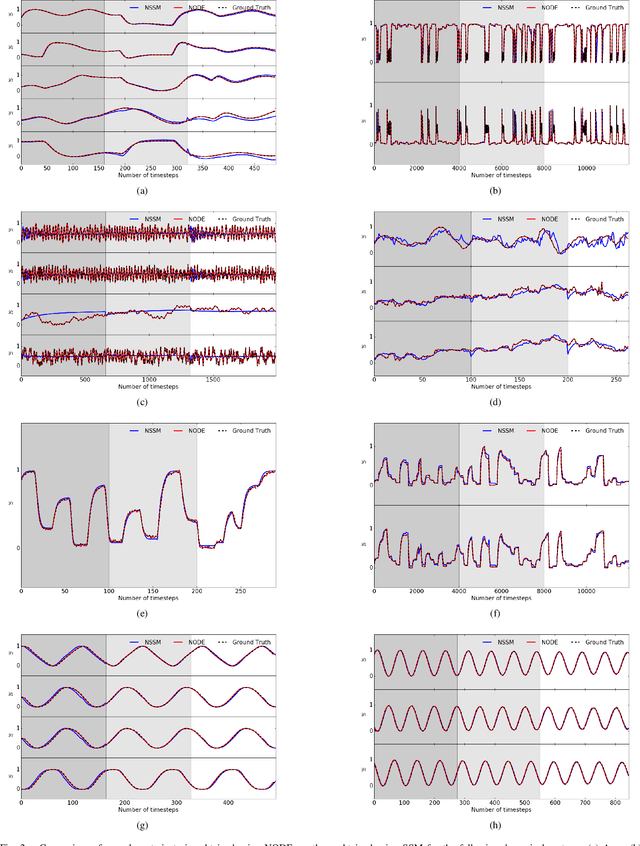

Abstract:Neural ordinary differential equations (NODEs) have recently been proposed as a promising approach for nonlinear system identification tasks. In this work, we systematically compare their predictive performance with current state-of-the-art nonlinear and classical linear methods. In particular, we present a quantitative study comparing the performance of NODEs against neural state-space models and classical linear system identification methods. We evaluate the inference speed and prediction performance of each method on open-loop errors across eight different dynamical systems. The experiments show that NODEs can consistently improve the prediction accuracy by an order of magnitude compared to benchmark methods. Besides improved accuracy, we also observed that NODEs are less sensitive to hyperparameters compared to neural state-space models. On the other hand, these performance gains come with a slight increase in computation at inference time.

Learning Stochastic Parametric Differentiable Predictive Control Policies

Mar 02, 2022

Abstract:The problem of synthesizing stochastic explicit model predictive control policies is known to quickly become intractable even for systems of modest complexity when using classical control-theoretic methods. To address this challenge, we present a scalable alternative called stochastic parametric differentiable predictive control (SP-DPC) for unsupervised learning of neural control policies governing stochastic linear systems subject to nonlinear chance constraints. SP-DPC is formulated as a deterministic approximation to the stochastic parametric constrained optimal control problem. This formulation allows us to directly compute the policy gradients via automatic differentiation of the problem's value function, evaluated over sampled parameters and uncertainties. In particular, the computed expectation of the SP-DPC problem's value function is backpropagated through the closed-loop system rollouts parametrized by a known nominal system dynamics model and neural control policy, which allows for direct model-based policy optimization. We provide theoretical probabilistic guarantees for policies learned via the SP-DPC method on closed-loop stability and chance-constraint satisfaction. Furthermore, we demonstrate the computational efficiency and scalability of the proposed policy optimization algorithm in three numerical examples, including systems with a large number of states or subject to nonlinear constraints.

Deep Learning Explicit Differentiable Predictive Control Laws for Buildings

Jul 25, 2021

Abstract:We present a differentiable predictive control (DPC) methodology for learning constrained control laws for unknown nonlinear systems. DPC poses an approximate solution to multiparametric programming problems emerging from explicit nonlinear model predictive control (MPC). Contrary to approximate MPC, DPC does not require supervision by an expert controller. Instead, a system dynamics model is learned from the observed system's dynamics, and the neural control law is optimized offline by leveraging the differentiable closed-loop system model. The combination of a differentiable closed-loop system and penalty methods for constraint handling of system outputs and inputs allows us to optimize the control law's parameters directly by backpropagating an economic MPC loss through the learned system model. The control performance of the proposed DPC method is demonstrated in simulation using a learned model of multi-zone building thermal dynamics.
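The offline training loop described here, a closed-loop rollout whose loss combines a control objective with constraint penalties and is minimized over the policy parameters, can be sketched on a scalar toy. Everything below is an assumption for illustration: the integrator model `x_next = x + u` stands in for the learned system model, a single gain `k` stands in for the neural control law, and a central finite difference stands in for automatic differentiation so that the example needs no libraries.

```python
def rollout_loss(k, x0_samples=(-1.0, -0.5, 0.5, 1.0), horizon=20,
                 u_max=0.5, r=0.1, penalty=10.0):
    """Average closed-loop cost of the policy u = -k * x over sampled
    initial states, with a quadratic penalty on input-bound violations."""
    total = 0.0
    for x in x0_samples:
        for _ in range(horizon):
            u = -k * x
            violation = max(0.0, abs(u) - u_max)
            total += x * x + r * u * u + penalty * violation**2
            x = x + u  # toy system model (assumed, not a learned model)
    return total / len(x0_samples)

def train(k=0.0, lr=1e-3, steps=500, eps=1e-5):
    """Gradient descent on the rollout loss with respect to the gain k.

    The gradient is a central finite difference, used here in place of
    automatic differentiation.
    """
    for _ in range(steps):
        grad = (rollout_loss(k + eps) - rollout_loss(k - eps)) / (2 * eps)
        k -= lr * grad
    return k

k_trained = train()
print("loss before: %.3f" % rollout_loss(0.0))
print("loss after:  %.3f (k = %.3f)" % (rollout_loss(k_trained), k_trained))
```

No expert controller supplies target inputs at any point: the policy parameter is updated only from the loss evaluated on simulated closed-loop trajectories, with the penalty term discouraging inputs beyond `u_max`.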

Prototypical Region Proposal Networks for Few-Shot Localization and Classification

Apr 08, 2021

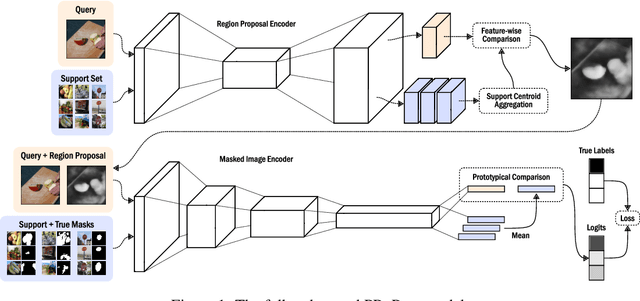

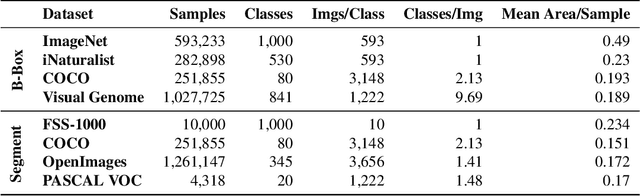

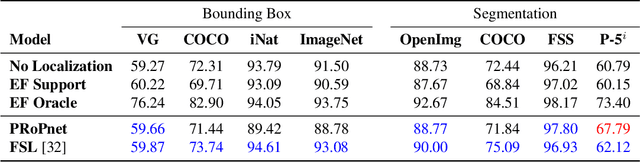

Abstract:Recently proposed few-shot image classification methods have generally focused on use cases where the objects to be classified are the central subject of images. Despite success on benchmark vision datasets aligned with this use case, these methods typically fail on use cases involving densely-annotated, busy images: images common in the wild where objects of relevance are not the central subject, instead appearing potentially occluded, small, or among other incidental objects belonging to other classes of potential interest. To localize relevant objects, we employ a prototype-based few-shot segmentation model which compares the encoded features of unlabeled query images with support class centroids to produce region proposals indicating the presence and location of support set classes in a query image. These region proposals are then used as additional conditioning input to few-shot image classifiers. We develop a framework to unify the two stages (segmentation and classification) into an end-to-end classification model -- PRoPnet -- and empirically demonstrate that our methods improve accuracy on image datasets with natural scenes containing multiple object classes.
