Andrew August

Neuro-physical dynamic load modeling using differentiable parametric optimization

Mar 20, 2022

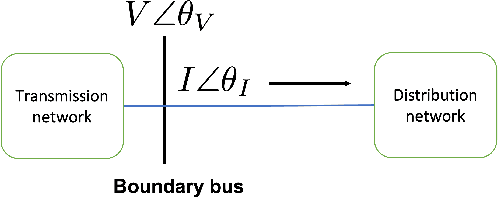

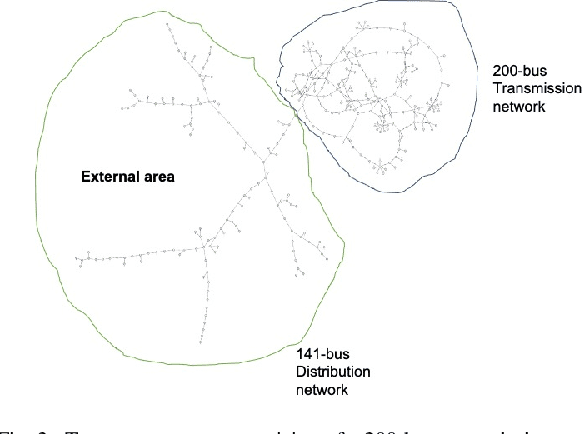

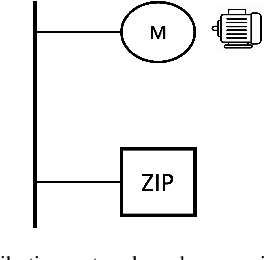

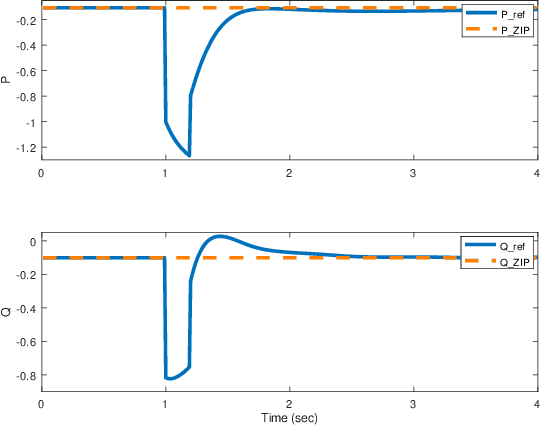

Abstract: In this work, we investigate a data-driven approach for obtaining a reduced equivalent load model of distribution systems for electromechanical transient stability analysis. The proposed reduced equivalent is a neuro-physical model comprising a traditional ZIP load model augmented with a neural network. This neuro-physical model is trained through differentiable programming. We discuss the formulation, modeling details, and training of the proposed model set up as a differential parametric program. The performance and accuracy of this neuro-physical ZIP load model are presented on a medium-scale 350-bus transmission-distribution network.

Analyzing Koopman approaches to physics-informed machine learning for long-term sea-surface temperature forecasting

Sep 15, 2020

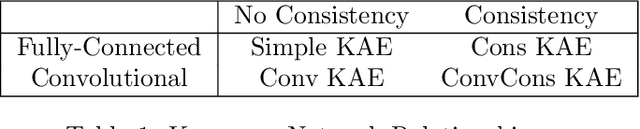

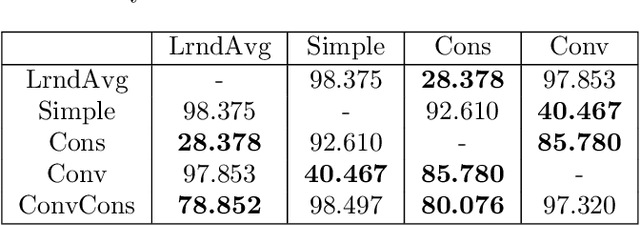

Abstract: Accurately predicting sea-surface temperature weeks to months into the future is an important step toward long-term weather forecasting. Standard atmosphere-ocean coupled numerical models provide accurate sea-surface forecasts on the scale of a few days to a few weeks, but many important weather systems require greater foresight. In this paper, we propose machine-learning approaches to sea-surface temperature forecasting that are accurate on the scale of dozens of weeks. Our approach is based on Koopman operator theory, a useful tool for dynamical systems modelling. With this approach, we predict sea-surface temperature in the Gulf of Mexico up to 180 days into the future based on a present image of thermal conditions and three years of historical training data. We evaluate the combination of a basic Koopman method with a convolutional autoencoder, and a newly proposed "consistent Koopman" method, in various permutations. We show that the Koopman approach consistently outperforms baselines, and we discuss the utility of our additional assumptions and methods in this sea-surface temperature domain.

Systematic Evaluation of Backdoor Data Poisoning Attacks on Image Classifiers

Apr 24, 2020

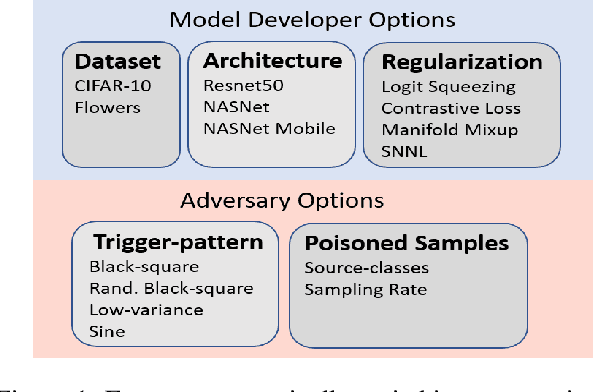

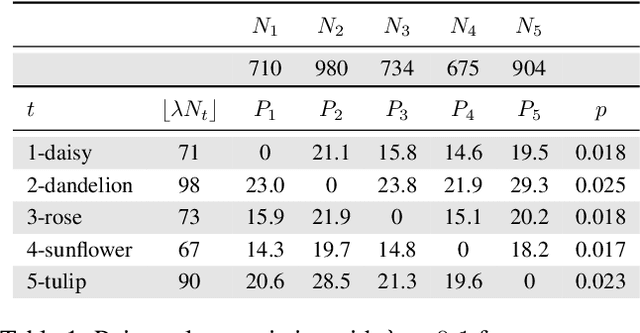

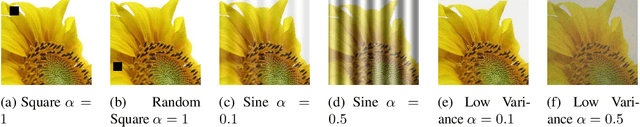

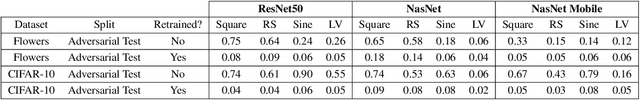

Abstract: Backdoor data poisoning attacks have recently been demonstrated in computer vision research as a potential safety risk for machine learning (ML) systems. Traditional data poisoning attacks manipulate training data to induce unreliability of an ML model, whereas backdoor data poisoning attacks maintain system performance unless the ML model is presented with an input containing an embedded "trigger" that provides a predetermined response advantageous to the adversary. Our work builds upon prior backdoor data poisoning research for ML image classifiers and systematically assesses different experimental conditions, including types of trigger patterns, persistence of trigger patterns during retraining, poisoning strategies, architectures (ResNet-50, NasNet, NasNet-Mobile), datasets (Flowers, CIFAR-10), and potential defensive regularization techniques (Contrastive Loss, Logit Squeezing, Manifold Mixup, Soft-Nearest-Neighbors Loss). Experiments yield four key findings. First, the success rate of backdoor poisoning attacks varies widely, depending on several factors, including model architecture, trigger pattern, and regularization technique. Second, we find that poisoned models are hard to detect through performance inspection alone. Third, regularization typically reduces backdoor success rate, although it can have no effect or even slightly increase it, depending on the form of regularization. Finally, backdoors inserted through data poisoning can be rendered ineffective after just a few epochs of additional training on a small set of clean data without affecting the model's performance.
