CSTR

Papers and Code

ScienceDB AI: An LLM-Driven Agentic Recommender System for Large-Scale Scientific Data Sharing Services

Jan 03, 2026

The rapid growth of AI for Science (AI4S) has underscored the significance of scientific datasets, leading to the establishment of numerous national scientific data centers and sharing platforms. Despite this progress, efficiently promoting dataset sharing and utilization for scientific research remains challenging. Scientific datasets contain intricate domain-specific knowledge and contexts, rendering traditional collaborative filtering-based recommenders inadequate. Recent advances in Large Language Models (LLMs) offer unprecedented opportunities to build conversational agents capable of deep semantic understanding and personalized recommendations. In response, we present ScienceDB AI, a novel LLM-driven agentic recommender system developed on Science Data Bank (ScienceDB), one of the largest global scientific data-sharing platforms. ScienceDB AI leverages natural language conversations and deep reasoning to accurately recommend datasets aligned with researchers' scientific intents and evolving requirements. The system introduces several innovations: a Scientific Intention Perceptor to extract structured experimental elements from complicated queries, a Structured Memory Compressor to manage multi-turn dialogues effectively, and a Trustworthy Retrieval-Augmented Generation (Trustworthy RAG) framework. The Trustworthy RAG employs a two-stage retrieval mechanism and provides citable dataset references via Citable Scientific Task Record (CSTR) identifiers, enhancing recommendation trustworthiness and reproducibility. Through extensive offline and online experiments using over 10 million real-world datasets, ScienceDB AI has demonstrated significant effectiveness. To our knowledge, ScienceDB AI is the first LLM-driven conversational recommender tailored explicitly for large-scale scientific dataset sharing services. The platform is publicly accessible at: https://ai.scidb.cn/en.

Beyond Cropped Regions: New Benchmark and Corresponding Baseline for Chinese Scene Text Retrieval in Diverse Layouts

Jun 05, 2025

Chinese scene text retrieval is a practical task that aims to search for images containing visual instances of a Chinese query text. This task is extremely challenging because Chinese text often features complex and diverse layouts in real-world scenes. Current efforts tend to inherit the solution for English scene text retrieval and fail to achieve satisfactory performance. In this paper, we establish a Diversified Layout benchmark for Chinese Street View Text Retrieval (DL-CSVTR), which is specifically designed to evaluate retrieval performance across various text layouts, including vertical, cross-line, and partial alignments. To address the limitations of existing methods, we propose Chinese Scene Text Retrieval CLIP (CSTR-CLIP), a novel model that integrates global visual information with multi-granularity alignment training. CSTR-CLIP applies a two-stage training process to overcome previous limitations, such as the exclusion of visual features outside the text region and reliance on single-granularity alignment, thereby enabling the model to effectively handle diverse text layouts. Experiments on an existing benchmark show that CSTR-CLIP outperforms the previous state-of-the-art model by 18.82% in accuracy and also provides faster inference speed. Further analysis on DL-CSVTR confirms the superior performance of CSTR-CLIP in handling various text layouts. The dataset and code will be publicly available to facilitate research in Chinese scene text retrieval.

Towards Improving NAM-to-Speech Synthesis Intelligibility using Self-Supervised Speech Models

Jul 26, 2024

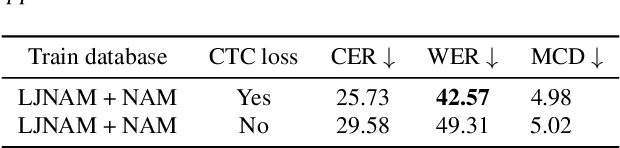

We propose a novel approach to significantly improve intelligibility in the Non-Audible Murmur (NAM)-to-speech conversion task, leveraging self-supervision and sequence-to-sequence (Seq2Seq) learning techniques. Unlike conventional methods that explicitly record ground-truth speech, our methodology relies on self-supervision and speech-to-speech synthesis to simulate ground-truth speech. Despite utilizing simulated speech, our method surpasses the current state-of-the-art (SOTA) with a 29.08% improvement in the Mel-Cepstral Distortion (MCD) metric. Additionally, we present error rates and demonstrate our model's ability to synthesize speech in novel voices of interest. Moreover, we present a methodology for augmenting the existing CSTR NAM TIMIT Plus corpus, setting a benchmark with a Word Error Rate (WER) of 42.57% to gauge the intelligibility of the synthesized speech. Speech samples can be found at https://nam2speech.github.io/NAM2Speech/
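
The abstract reports intelligibility as a Word Error Rate. As a minimal sketch of how WER is conventionally computed (word-level edit distance divided by the number of reference words), the following may help; the function name and the whitespace tokenization are assumptions for illustration, not the authors' evaluation code, which in practice would score the output of a speech recognizer run on the synthesized audio.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """WER = (substitutions + deletions + insertions) / number of reference words.

    Hypothetical helper: tokenizes on whitespace, with no text normalization.
    """
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")

    # prev[j] is the edit distance between the reference words processed
    # so far (before the current one) and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i] + [0] * len(hyp)
        for j, hyp_word in enumerate(hyp, start=1):
            cost = 0 if ref_word == hyp_word else 1
            curr[j] = min(
                prev[j] + 1,         # deletion of a reference word
                curr[j - 1] + 1,     # insertion of a hypothesis word
                prev[j - 1] + cost,  # match or substitution
            )
        prev = curr
    return prev[-1] / len(ref)
```

For example, `word_error_rate("a b c d", "a x c")` counts one substitution and one deletion against four reference words.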

Physics-Informed Neural Networks with Hard Linear Equality Constraints

Feb 11, 2024

Surrogate modeling is used to replace computationally expensive simulations. Neural networks have been widely applied as surrogate models that enable efficient evaluations over complex physical systems. Despite this, neural networks are data-driven models and devoid of any physics. The incorporation of physics into neural networks can improve generalization and data efficiency. The physics-informed neural network (PINN) is an approach to leverage known physical constraints present in the data, but it cannot strictly satisfy them in the predictions. This work proposes a novel physics-informed neural network, KKT-hPINN, which rigorously guarantees hard linear equality constraints through projection layers derived from KKT conditions. Numerical experiments on Aspen models of a continuous stirred-tank reactor (CSTR) unit, an extractive distillation subsystem, and a chemical plant demonstrate that this model can further enhance the prediction accuracy.
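
To illustrate the idea of a projection derived from KKT conditions, here is a minimal sketch under a simplifying assumption: the constraints act only on the network output, A y = b. Minimizing ½‖y − ŷ‖² subject to A y = b gives the stationarity condition y − ŷ + Aᵀλ = 0, and hence the closed form y = ŷ − Aᵀ(AAᵀ)⁻¹(Aŷ − b). The function names below are hypothetical, and the paper's actual layer may differ (for instance, by letting the constraints also depend on the network inputs).

```python
def solve_linear_system(M, rhs):
    """Solve M z = rhs by Gaussian elimination with partial pivoting."""
    n = len(M)
    aug = [list(row) + [r] for row, r in zip(M, rhs)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        if abs(aug[pivot][col]) < 1e-12:
            raise ValueError("A A^T is singular; constraint rows must be independent")
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(col + 1, n):
            factor = aug[r][col] / aug[col][col]
            for c in range(col, n + 1):
                aug[r][c] -= factor * aug[col][c]
    z = [0.0] * n
    for i in reversed(range(n)):
        tail = sum(aug[i][j] * z[j] for j in range(i + 1, n))
        z[i] = (aug[i][n] - tail) / aug[i][i]
    return z


def project_onto_constraints(y_hat, A, b):
    """Return the closest point to y_hat (in Euclidean norm) satisfying A y = b."""
    m, n = len(A), len(y_hat)
    # Constraint violation of the raw prediction: A y_hat - b.
    residual = [sum(A[i][k] * y_hat[k] for k in range(n)) - b[i] for i in range(m)]
    # Gram matrix A A^T (m x m).
    gram = [[sum(A[i][k] * A[j][k] for k in range(n)) for j in range(m)]
            for i in range(m)]
    # Lagrange multipliers from the KKT system: (A A^T) lam = A y_hat - b.
    lam = solve_linear_system(gram, residual)
    # Correct the prediction: y = y_hat - A^T lam.
    return [y_hat[k] - sum(A[i][k] * lam[i] for i in range(m)) for k in range(n)]
```

As a usage example, with a single mass-balance-style constraint `A = [[1.0, 1.0, 1.0]]`, `b = [1.0]`, the projected output sums to 1 up to floating-point error regardless of the raw prediction. Because the projection is linear in ŷ, it can in principle be appended to a network as a fixed, non-trainable final layer.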

Vulnerability Assessment of Industrial Control System with an Improved CVSS

Jun 14, 2023

Cyberattacks on industrial control systems (ICS) have been drawing attention in academia. However, this has not raised adequate concerns among some industrial practitioners. Therefore, it is necessary to identify the vulnerable locations and components in the ICS and investigate the attack scenarios and techniques. This study proposes a method to assess the risk of cyberattacks on ICS with an improved Common Vulnerability Scoring System (CVSS) and applies it to a continuous stirred tank reactor (CSTR) model. The results show that the physical system levels of ICS have the highest severity once cyberattacked, and that controllers, workstations, and human-machine interfaces are the crucial components in cyberattack and defense.

Noisy Speech Based Temporal Decomposition to Improve Fundamental Frequency Estimation

Dec 18, 2021

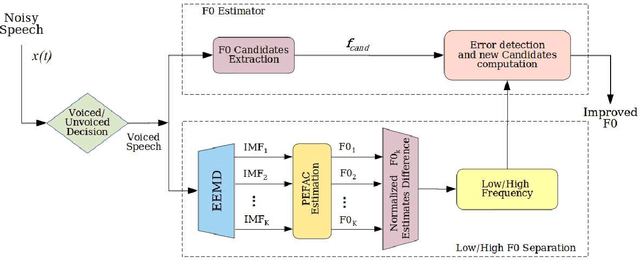

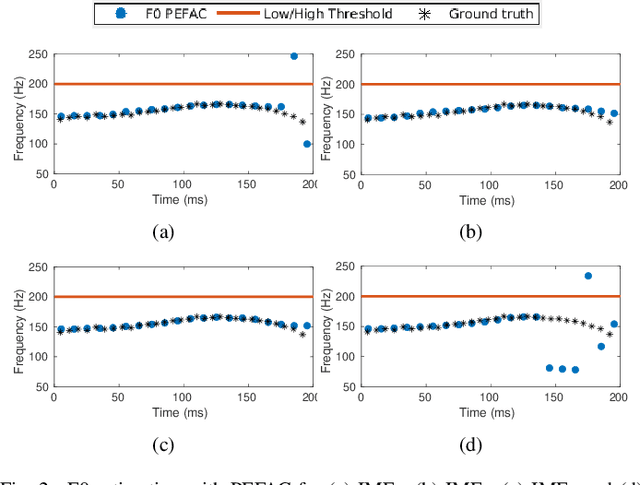

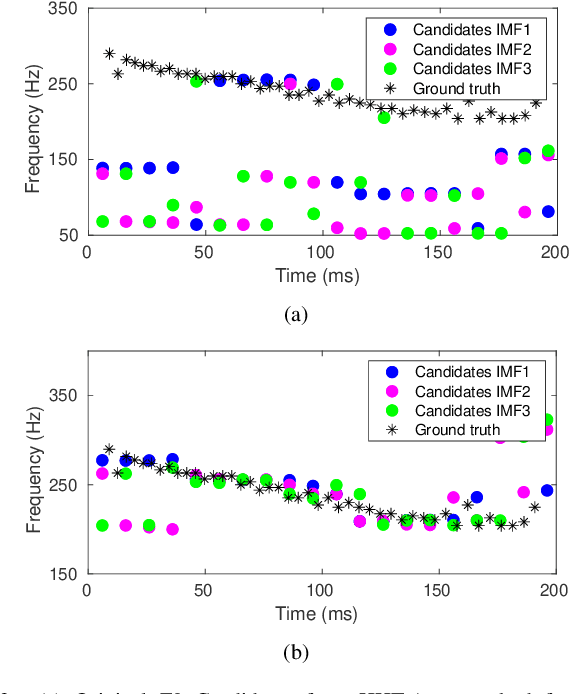

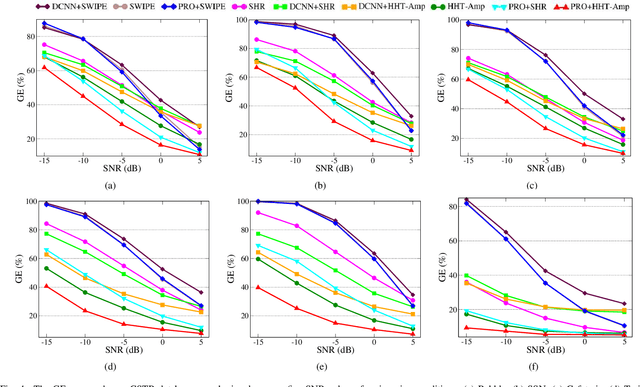

This paper introduces a novel method to separate noisy speech into low- or high-frequency frames in order to improve fundamental frequency (F0) estimation accuracy. In this proposal, the target signal is analyzed by means of the ensemble empirical mode decomposition. Next, the pitch information is extracted from the first decomposition modes. This feature indicates the frequency region where the F0 of speech should be located, thus separating the frames into low-frequency (LF) or high-frequency (HF). The separation is applied to correct candidates extracted from a conventional fundamental frequency detection method, thereby improving the accuracy of the F0 estimate. The proposed method is evaluated in experiments with the CSTR and TIMIT databases, considering six acoustic noises under various signal-to-noise ratios. A pitch enhancement algorithm is adopted as a baseline in the evaluation analysis considering three conventional estimators. Results show that the proposed method outperforms the competing strategies in terms of low/high frequency separation accuracy. Moreover, the performance metrics of the F0 estimation techniques show that the novel solution improves F0 detection accuracy more than competitive approaches under different noisy conditions.

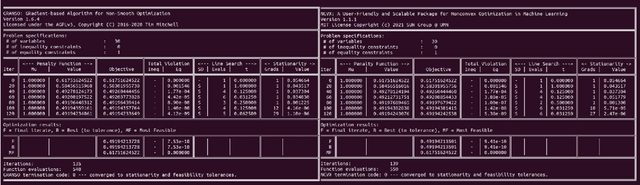

NCVX: A User-Friendly and Scalable Package for Nonconvex Optimization in Machine Learning

Nov 27, 2021

Optimizing nonconvex (NCVX) problems, especially those that are nonsmooth (NSMT) and constrained (CSTR), is an essential part of machine learning and deep learning. But it is hard to reliably solve this type of problem without optimization expertise. Existing general-purpose NCVX optimization packages are powerful, but typically cannot handle nonsmoothness. GRANSO is among the first packages targeting NCVX, NSMT, CSTR problems. However, it has several limitations, such as the lack of auto-differentiation and GPU acceleration, which preclude potential broad deployment by non-experts. To lower the technical barrier for the machine learning community, we revamp GRANSO into a user-friendly and scalable Python package named NCVX, featuring auto-differentiation, GPU acceleration, tensor input, a scalable QP solver, and zero dependency on proprietary packages. As a highlight, NCVX can solve general CSTR deep learning problems, the first of its kind. NCVX is available at https://ncvx.org, with detailed documentation and numerous examples from machine learning and other fields.

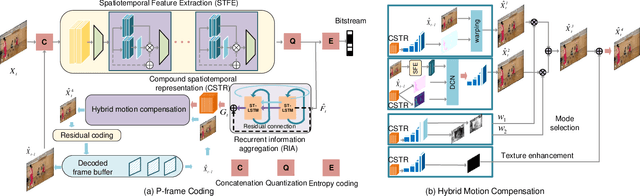

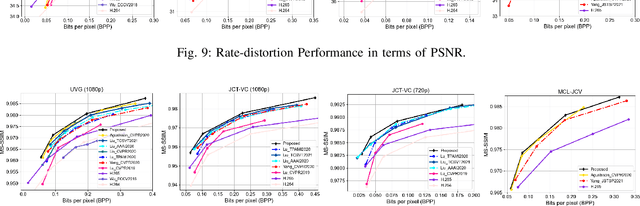

End-to-end Neural Video Coding Using a Compound Spatiotemporal Representation

Aug 05, 2021

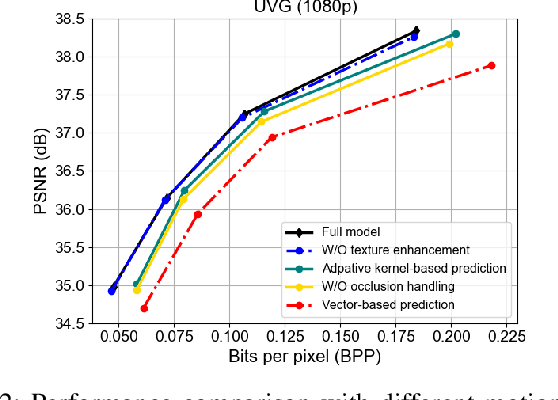

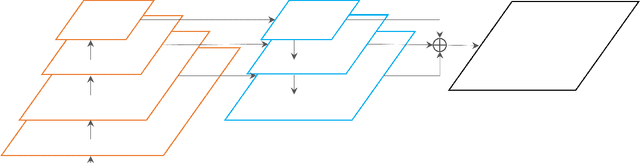

Recent years have witnessed rapid advances in learnt video coding. Most algorithms have relied solely on vector-based motion representation and resampling (e.g., optical flow based bilinear sampling) to exploit inter-frame redundancy. In spite of the great success of adaptive kernel-based resampling (e.g., adaptive convolutions and deformable convolutions) in video prediction for uncompressed videos, integrating such approaches with rate-distortion optimization for inter-frame coding has been less successful. Recognizing that each resampling solution offers unique advantages in regions with different motion and texture characteristics, we propose a hybrid motion compensation (HMC) method that adaptively combines the predictions generated by these two approaches. Specifically, we generate a compound spatiotemporal representation (CSTR) through a recurrent information aggregation (RIA) module using information from the current and multiple past frames. We further design a one-to-many decoder pipeline to generate multiple predictions from the CSTR, including vector-based resampling, adaptive kernel-based resampling, compensation mode selection maps and texture enhancements, and combine them adaptively to achieve more accurate inter prediction. Experiments show that our proposed inter coding system can provide better motion-compensated prediction and is more robust to occlusions and complex motions. Together with a jointly trained intra coder and residual coder, the overall learnt hybrid coder yields state-of-the-art coding efficiency in the low-delay scenario, compared to the traditional H.264/AVC and H.265/HEVC, as well as recently published learning-based methods, in terms of both PSNR and MS-SSIM metrics.

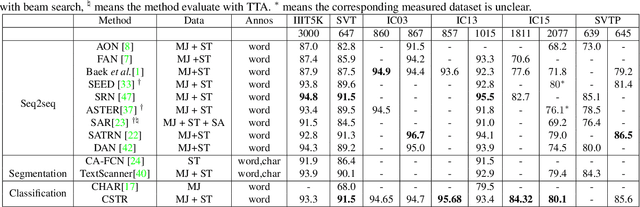

CSTR: A Classification Perspective on Scene Text Recognition

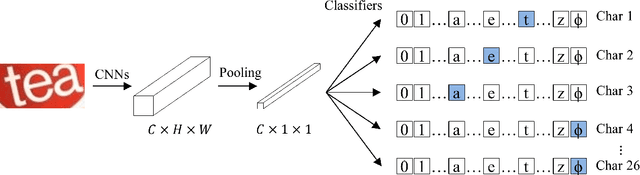

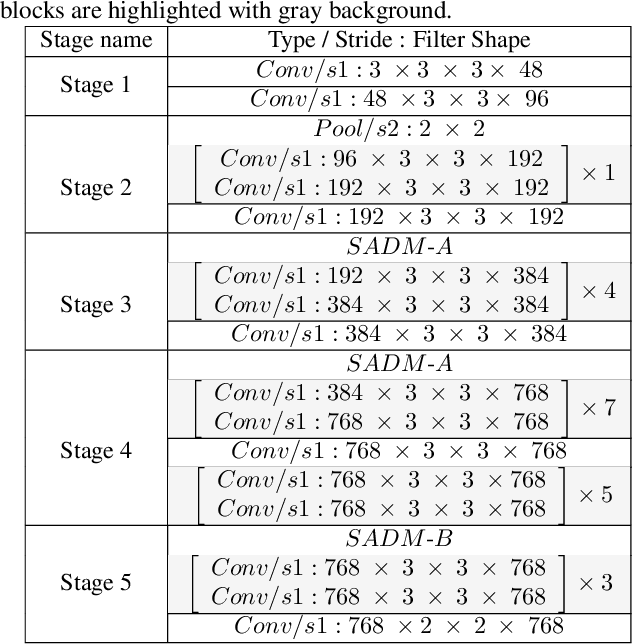

Feb 22, 2021

The prevalent perspectives on scene text recognition are sequence to sequence (seq2seq) and segmentation. In this paper, we propose a new perspective on scene text recognition, in which we model scene text recognition as an image classification problem. Based on the image classification perspective, a scene text recognition model named CSTR is proposed. The CSTR model consists of a series of convolutional layers and a global average pooling layer at the end, followed by independent multi-class classification heads, each of which predicts the corresponding character of the word sequence in the input image. The CSTR model is easy to train using parallel cross entropy losses. CSTR is as simple as image classification models like ResNet \cite{he2016deep}, which makes it easy to implement, and the fully convolutional neural network architecture makes it efficient to train and deploy. We demonstrate the effectiveness of the classification perspective on scene text recognition with thorough experiments. Furthermore, CSTR achieves nearly state-of-the-art performance on six public benchmarks, including regular and irregular text. The code will be available at https://github.com/Media-Smart/vedastr.
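
The head structure described above (global average pooling followed by one independent classifier per character position, trained with parallel cross entropy losses) can be sketched in plain Python as follows. All function names, the nested-list tensor layout, and the choice to sum rather than average the per-position losses are assumptions made for illustration; the actual implementation in the linked repository may differ.

```python
import math


def global_average_pool(feature_map):
    """Average a C x H x W feature map (nested lists) over H and W, giving C values."""
    return [
        sum(sum(row) for row in channel) / (len(channel) * len(channel[0]))
        for channel in feature_map
    ]


def linear_head(features, weights, bias):
    """One classification head: logits[k] = weights[k] . features + bias[k]."""
    return [
        sum(w * f for w, f in zip(row, features)) + b
        for row, b in zip(weights, bias)
    ]


def cross_entropy(logits, target):
    """Softmax cross entropy for one head, using log-sum-exp for stability."""
    peak = max(logits)
    log_norm = peak + math.log(sum(math.exp(v - peak) for v in logits))
    return log_norm - logits[target]


def parallel_cross_entropy(features, heads, targets):
    """Sum the per-position losses; every head sees the same pooled features.

    heads:   list of (weights, bias) pairs, one per character position.
    targets: list of class indices, one per character position.
    """
    return sum(
        cross_entropy(linear_head(features, w, b), t)
        for (w, b), t in zip(heads, targets)
    )
```

Because each head is an ordinary multi-class classifier over the same pooled vector, the per-position losses have no sequential dependence and can be evaluated in parallel, which is the property the abstract highlights.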

Quantum Computing Assisted Deep Learning for Fault Detection and Diagnosis in Industrial Process Systems

Feb 29, 2020

Quantum computing (QC) and deep learning techniques have attracted widespread attention in recent years. This paper proposes QC-based deep learning methods for fault diagnosis that exploit their unique capabilities to overcome the computational challenges faced by conventional data-driven approaches performed on classical computers. Deep belief networks are integrated into the proposed fault diagnosis model and are used to extract features at different levels for normal and faulty process operations. The QC-based fault diagnosis model uses a quantum computing assisted generative training process followed by discriminative training to address the shortcomings of classical algorithms. To demonstrate its applicability and efficiency, the proposed fault diagnosis method is applied to process monitoring of a continuous stirred tank reactor (CSTR) and the Tennessee Eastman (TE) process. The proposed QC-based deep learning approach achieves superior fault detection and diagnosis performance, with average fault detection rates of 79.2% and 99.39% for the CSTR and TE process, respectively.
