Zikang Xiong

A Framework for Learning Scoring Rules in Autonomous Driving Planning Systems

Feb 17, 2025

Abstract: In autonomous driving systems, motion planning is commonly implemented as a two-stage process: first, a trajectory proposer generates multiple candidate trajectories, then a scoring mechanism selects the most suitable trajectory for execution. For this critical selection stage, rule-based scoring mechanisms are particularly appealing as they can explicitly encode driving preferences, safety constraints, and traffic regulations in a formalized, human-understandable format. However, manually crafting these scoring rules presents significant challenges: the rules often contain complex interdependencies, require careful parameter tuning, and may not fully capture the nuances present in real-world driving data. This work introduces FLoRA, a novel framework that bridges this gap by learning interpretable scoring rules represented in temporal logic. Our method features a learnable logic structure that captures nuanced relationships across diverse driving scenarios, optimizing both rules and parameters directly from real-world driving demonstrations collected in NuPlan. Our approach effectively learns to evaluate driving behavior even though the training data only contains positive examples (successful driving demonstrations). Evaluations in closed-loop planning simulations demonstrate that our learned scoring rules outperform existing techniques, including expert-designed rules and neural network scoring models, while maintaining interpretability. This work introduces a data-driven approach to enhance the scoring mechanism in autonomous driving systems, designed as a plug-in module to seamlessly integrate with various trajectory proposers. Our video and code are available at xiong.zikang.me/FLoRA.
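To make the two-stage idea concrete, here is a minimal sketch of a rule-based scorer choosing among candidate trajectories. It is not FLoRA's learned rule set: the two rules, the signal names (`gap`, `progress`), and the thresholds are hypothetical, hand-written stand-ins. It only uses the standard quantitative reading of temporal operators, where "always" takes the minimum margin over time, "eventually" takes the maximum, and a conjunction takes the minimum of its parts.

```python
from typing import Dict, List

# Hypothetical trajectory format: per-timestep signals keyed by name.
Trajectory = Dict[str, List[float]]


def always_greater(signal: List[float], threshold: float) -> float:
    """Margin for 'always (signal > threshold)': the worst margin over time."""
    return min(v - threshold for v in signal)


def eventually_greater(signal: List[float], threshold: float) -> float:
    """Margin for 'eventually (signal > threshold)': the best margin over time."""
    return max(v - threshold for v in signal)


def score(traj: Trajectory, min_gap: float = 2.0, goal_progress: float = 10.0) -> float:
    """Score = margin of (always keep a gap) AND (eventually make progress)."""
    safe = always_greater(traj["gap"], min_gap)
    reach = eventually_greater(traj["progress"], goal_progress)
    return min(safe, reach)  # conjunction: both rules must hold


# Stage 1 (assumed given): candidates from some trajectory proposer.
candidates: List[Trajectory] = [
    {"gap": [5.0, 3.0, 1.0], "progress": [2.0, 8.0, 14.0]},  # reaches goal, gap too small
    {"gap": [5.0, 4.0, 3.5], "progress": [2.0, 7.0, 12.0]},  # satisfies both rules
    {"gap": [6.0, 6.0, 6.0], "progress": [1.0, 3.0, 5.0]},   # safe, but no progress
]

# Stage 2: pick the candidate with the highest score.
best = max(range(len(candidates)), key=lambda i: score(candidates[i]))
print(best, score(candidates[best]))
```

Here the second candidate (index 1) is selected because it is the only one with a positive margin on both rules. In a learned setting, the thresholds and the structure of the rule would be fitted to data instead of fixed by hand.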

Scaling Safe Multi-Agent Control for Signal Temporal Logic Specifications

Jan 10, 2025

Abstract: Existing methods for safe multi-agent control using logic specifications like Signal Temporal Logic (STL) often face scalability issues. This is because they rely either on single-agent perspectives or on Mixed Integer Linear Programming (MILP)-based planners, which are complex to optimize. These methods have proven to be computationally expensive and inefficient when dealing with a large number of agents. To address these limitations, we present a new scalable approach to multi-agent control in this setting. Our method treats the relationships between agents using a graph structure rather than in terms of a single-agent perspective. Moreover, it combines a multi-agent collision avoidance controller with a Graph Neural Network (GNN) based planner, models the system in a decentralized fashion, and trains on STL-based objectives to generate safe and efficient plans for multiple agents, thereby optimizing the satisfaction of complex temporal specifications while also facilitating multi-agent collision avoidance. Our experiments show that our approach significantly outperforms existing methods that use a state-of-the-art MILP-based planner in terms of scalability and performance. The project website is https://jeappen.com/mastl-gcbf-website/ and the code is at https://github.com/jeappen/mastl-gcbf.

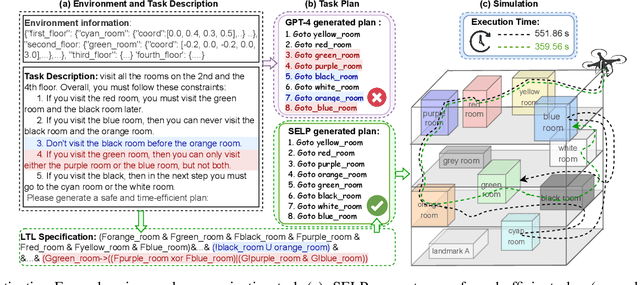

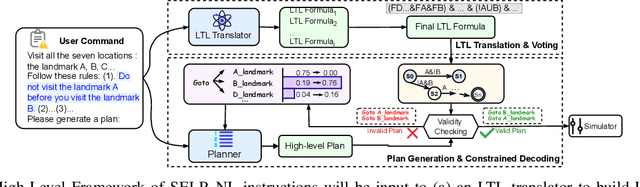

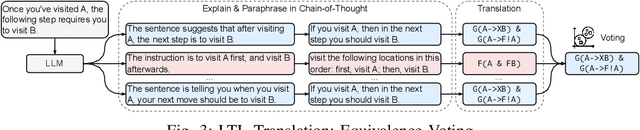

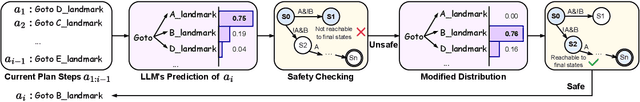

SELP: Generating Safe and Efficient Task Plans for Robot Agents with Large Language Models

Sep 28, 2024

Abstract: Despite significant advancements in large language models (LLMs) that enhance robot agents' understanding and execution of natural language (NL) commands, ensuring the agents adhere to user-specified constraints remains challenging, particularly for complex commands and long-horizon tasks. To address this challenge, we present three key insights (equivalence voting, constrained decoding, and domain-specific fine-tuning) that significantly enhance LLM planners' capability in handling complex tasks. Equivalence voting ensures consistency by generating and sampling multiple Linear Temporal Logic (LTL) formulas from NL commands, grouping equivalent LTL formulas, and selecting the majority group of formulas as the final LTL formula. Constrained decoding then uses the generated LTL formula to constrain the autoregressive inference of plans, ensuring the generated plans conform to the LTL. Domain-specific fine-tuning customizes LLMs to produce safe and efficient plans within specific task domains. Our approach, Safe Efficient LLM Planner (SELP), combines these insights to create LLM planners that generate plans adhering to user commands with high confidence. We demonstrate the effectiveness and generalizability of SELP across different robot agents and tasks, including drone navigation and robot manipulation. For drone navigation tasks, SELP outperforms state-of-the-art planners by 10.8% in safety rate (i.e., finishing tasks conforming to NL commands) and by 19.8% in plan efficiency. For robot manipulation tasks, SELP achieves a 20.4% improvement in safety rate. Our datasets for evaluating NL-to-LTL and robot task planning will be released at github.com/lt-asset/selp.
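The equivalence-voting step described above (sample several formulas, group the equivalent ones, keep the majority group) can be sketched as follows. This is an illustration only, not SELP's implementation: the function names are hypothetical, and `toy_equivalent` is a deliberately naive stand-in that treats two formulas as equivalent when their top-level `&` conjuncts match up to order and whitespace. A real system would need a proper LTL equivalence check in its place.

```python
from typing import Callable, List


def equivalence_vote(formulas: List[str], equivalent: Callable[[str, str], bool]) -> str:
    """Group formulas into equivalence classes; return a member of the largest class."""
    groups: List[List[str]] = []
    for formula in formulas:
        for group in groups:
            # Compare against the group's first member as its representative.
            if equivalent(formula, group[0]):
                group.append(formula)
                break
        else:
            groups.append([formula])
    # max() keeps the first of several equally large groups, so ties go to
    # the group that was seen earliest.
    return max(groups, key=len)[0]


def toy_equivalent(a: str, b: str) -> bool:
    """Naive stand-in: same top-level '&' conjuncts, ignoring order and spaces.

    Assumption for illustration only; it does not handle parentheses or any
    other LTL identities.
    """
    def canon(s: str) -> tuple:
        return tuple(sorted(s.replace(" ", "").split("&")))
    return canon(a) == canon(b)


# Hypothetical samples, as if produced by several LLM calls for one command.
samples = ["F a & G !b", "G !b & F a", "F a", "F a&G !b"]
winner = equivalence_vote(samples, toy_equivalent)
print(winner)
```

Three of the four samples fall into the same group, so a formula from that group is returned and the outlier `F a` is discarded.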

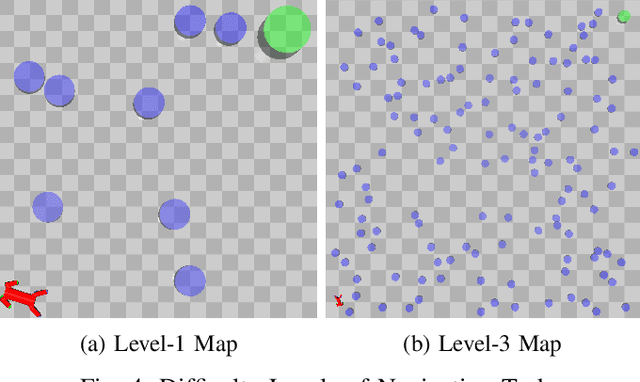

DIGIMON: Diagnosis and Mitigation of Sampling Skew for Reinforcement Learning based Meta-Planner in Robot Navigation

Sep 17, 2024

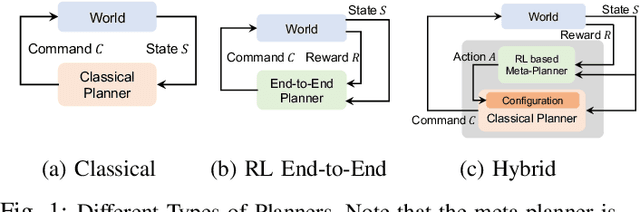

Abstract: Robot navigation is increasingly crucial across applications like delivery services and warehouse management. The integration of Reinforcement Learning (RL) with classical planning has given rise to meta-planners that combine the adaptability of RL with the explainable decision-making of classical planners. However, the exploration capabilities of RL-based meta-planners during training are often constrained by the capabilities of the underlying classical planners. This constraint can result in limited exploration, thereby leading to sampling skew issues. To address these issues, our paper introduces a novel framework, DIGIMON, which begins with behavior-guided diagnosis of exploration bottlenecks within the meta-planner and follows up with a mitigation strategy that conducts up-sampling from diagnosed bottleneck data. Our evaluation shows a 13.5%+ improvement in navigation performance, greater robustness in out-of-distribution environments, and a 4x boost in training efficiency. DIGIMON is designed as a versatile, plug-and-play solution, allowing seamless integration into various RL-based meta-planners.

Manipulating Neural Path Planners via Slight Perturbations

Mar 27, 2024

Abstract: Data-driven neural path planners are attracting increasing interest in the robotics community. However, their neural network components typically come as black boxes, obscuring their underlying decision-making processes. Their black-box nature exposes them to the risk of being compromised via the insertion of hidden malicious behaviors. For example, an attacker may hide behaviors that, when triggered, hijack a delivery robot by guiding it to a specific (albeit wrong) destination, trapping it in a predefined region, or inducing unnecessary energy expenditure by causing the robot to repeatedly circle a region. In this paper, we propose a novel approach to specify and inject a range of hidden malicious behaviors, known as backdoors, into neural path planners. Our approach provides a concise but flexible way to define these behaviors, and we show that hidden behaviors can be triggered by slight perturbations (e.g., inserting a tiny unnoticeable object), which can nonetheless significantly compromise the planners' integrity. We also discuss potential techniques to identify these backdoors aimed at alleviating such risks. We demonstrate our approach on both sampling-based and search-based neural path planners.

Co-learning Planning and Control Policies Using Differentiable Formal Task Constraints

Mar 02, 2023

Abstract: This paper presents a hierarchical reinforcement learning algorithm constrained by differentiable signal temporal logic. Previous work on logic-constrained reinforcement learning considers encoding these constraints with a reward function, constraining policy updates with a sample-based policy gradient. However, such techniques tend to be inefficient because of the significant number of samples required to obtain accurate policy gradients. In this paper, instead of implicitly constraining policy search with sample-based policy gradients, we directly constrain policy search by backpropagating through formal constraints, which enables training hierarchical policies with substantially fewer training samples. The use of hierarchical policies is recognized as a crucial component of reinforcement learning with task constraints. We show that we can stably constrain policy updates, thus enabling different levels of the policy to be learned simultaneously, yielding superior performance compared with training them separately. Experimental results on several simulated high-dimensional robot dynamics and a real-world differential drive robot (TurtleBot3) demonstrate the effectiveness of our approach on five different types of task constraints. Demo videos, code, and models can be found at our project website: https://sites.google.com/view/dscrl

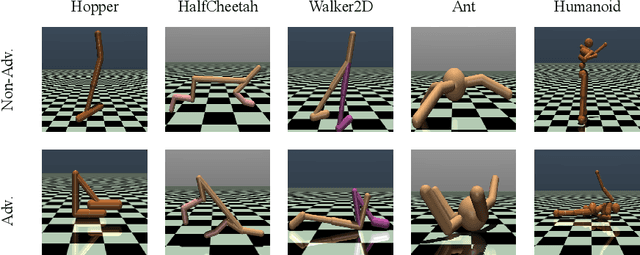

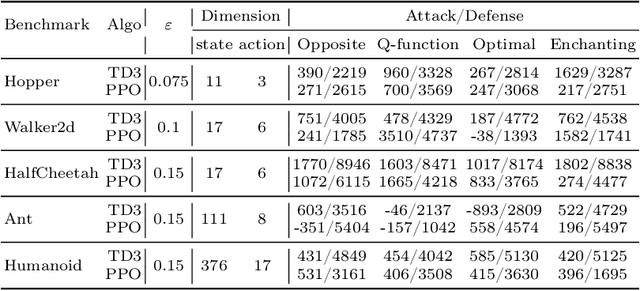

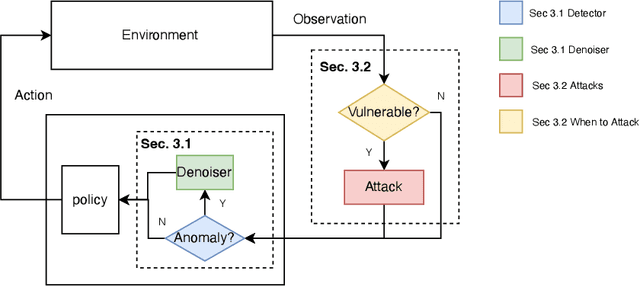

Defending Observation Attacks in Deep Reinforcement Learning via Detection and Denoising

Jun 14, 2022

Abstract: Neural network policies trained using Deep Reinforcement Learning (DRL) are well known to be susceptible to adversarial attacks. In this paper, we consider attacks manifesting as perturbations in the observation space managed by the external environment. These attacks have been shown to downgrade policy performance significantly. We focus our attention on well-trained deterministic and stochastic neural network policies in the context of continuous control benchmarks subject to four well-studied observation space adversarial attacks. To defend against these attacks, we propose a novel defense strategy using a detect-and-denoise schema. Unlike previous adversarial training approaches that sample data in adversarial scenarios, our solution does not require sampling data in an environment under attack, thereby greatly reducing risk during training. Detailed experimental results show that our technique is comparable with state-of-the-art adversarial training approaches.

Model-free Neural Lyapunov Control for Safe Robot Navigation

Mar 02, 2022

Abstract: Model-free Deep Reinforcement Learning (DRL) controllers have demonstrated promising results on various challenging non-linear control tasks. While a model-free DRL algorithm can solve unknown dynamics and high-dimensional problems, it lacks safety assurance. Although safety constraints can be encoded as part of a reward function, there still exists a large gap between an RL controller trained with this modified reward and a safe controller. Instead of implicitly encoding safety constraints with rewards, we explicitly co-learn a Twin Neural Lyapunov Function (TNLF) with the control policy in the DRL training loop and use the learned TNLF to build a runtime monitor. Combined with the path generated from a planner, the monitor chooses appropriate waypoints that guide the learned controller to provide collision-free control trajectories. Our approach inherits the scalability advantages from DRL while enhancing safety guarantees. Our experimental evaluation demonstrates the effectiveness of our approach compared to DRL with augmented rewards and constrained DRL methods over a range of high-dimensional safety-sensitive navigation tasks.

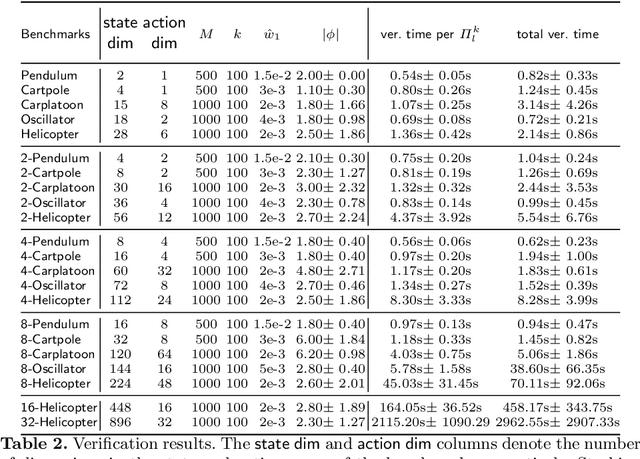

Scalable Synthesis of Verified Controllers in Deep Reinforcement Learning

Apr 20, 2021

Abstract: There has been significant recent interest in devising verification techniques for learning-enabled controllers (LECs) that manage safety-critical systems. Given the opacity and lack of interpretability of the neural policies that govern the behavior of such controllers, many existing approaches enforce safety properties through the use of shields, a dynamic monitoring and repair mechanism that ensures an LEC does not emit actions that would violate desired safety conditions. These methods, however, have been shown to have significant scalability limitations because verification costs grow as problem dimensionality and objective complexity increase. In this paper, we propose a new automated verification pipeline capable of synthesizing high-quality safety shields even when the problem domain involves hundreds of dimensions, or when the desired objective involves stochastic perturbations, liveness considerations, and other complex non-functional properties. Our key insight involves separating safety verification from the neural controller, using pre-computed verified safety shields to constrain the training of a neural controller whose objective is not limited to safety. Experimental results over a range of realistic high-dimensional deep RL benchmarks demonstrate the effectiveness of our approach.

Batch Sequential Adaptive Designs for Global Optimization

Oct 21, 2020

Abstract: Compared with fixed-run designs, sequential adaptive designs (SAD) are thought to be more efficient and effective. Efficient global optimization (EGO) is one of the most popular SAD methods for expensive black-box optimization problems. A well-recognized weakness of the original EGO in complex computer experiments is that it is serial, so modern parallel computing techniques cannot be used to speed up simulator experiments. For existing multi-point EGO methods, heavy computation and point clustering are the main obstacles. In this work, a novel batch SAD method, named "accelerated EGO", is put forward, which uses a refined sampling/importance resampling (SIR) method to search for points with large expected improvement (EI) values. The computational burden of the new method is much lighter, and point clustering is also avoided. The efficiency of the proposed SAD is validated on nine classic test functions with dimensions from 2 to 12. The empirical results show that the proposed algorithm can indeed parallelize the original EGO and gains a large improvement compared with the other parallel EGO algorithm, especially in high-dimensional cases. Additionally, we apply the new method to the hyper-parameter tuning of Support Vector Machine (SVM). Accelerated EGO obtains cross-validation accuracy comparable to that of other methods, and CPU time is reduced substantially thanks to parallel computation and the sampling method.
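As a rough illustration of searching for points with large EI by resampling, the sketch below computes the standard closed-form expected improvement for minimization under a Gaussian prediction and then draws a batch of candidates with probability proportional to EI. It is a plain importance-resampling sketch, not the paper's refined SIR procedure: the surrogate predictions are hard-coded, the function names are hypothetical, and sampling is done with replacement.

```python
import math
import random
from typing import List, Sequence, Tuple


def expected_improvement(mu: float, sigma: float, f_best: float) -> float:
    """EI for minimization when the surrogate predicts N(mu, sigma^2)."""
    if sigma <= 0.0:
        # No predictive uncertainty: improvement is deterministic.
        return max(f_best - mu, 0.0)
    z = (f_best - mu) / sigma
    pdf = math.exp(-0.5 * z * z) / math.sqrt(2.0 * math.pi)
    cdf = 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))
    return (f_best - mu) * cdf + sigma * pdf


def resample_batch(
    candidates: Sequence[float],
    predictions: Sequence[Tuple[float, float]],
    f_best: float,
    batch_size: int,
    rng: random.Random,
) -> List[float]:
    """Draw a batch of candidates with probability proportional to their EI."""
    weights = [expected_improvement(mu, sigma, f_best) for mu, sigma in predictions]
    # random.choices samples with replacement; duplicates are possible.
    return rng.choices(list(candidates), weights=weights, k=batch_size)


# Hypothetical candidate inputs and surrogate predictions (mean, std) for each.
candidates = [0.1, 0.4, 0.7, 0.9]
predictions = [(1.2, 0.1), (0.8, 0.3), (0.5, 0.2), (1.0, 0.5)]
f_best = 0.6  # best objective value observed so far

batch = resample_batch(candidates, predictions, f_best, batch_size=3, rng=random.Random(0))
print(batch)
```

Because every point in the batch is drawn in one pass from the same weights, the corresponding expensive evaluations could then be run in parallel, which is the motivation the abstract gives for a batch method.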
