Ahmed H. Qureshi

Multi-Agent Monte Carlo Tree Search for Makespan-Efficient Object Rearrangement in Cluttered Spaces

Feb 02, 2026

Abstract: Object rearrangement planning in complex, cluttered environments is a common challenge in warehouses, households, and rescue sites. Prior studies largely address monotone instances, whereas real-world tasks are often non-monotone: objects block one another and must be temporarily relocated to intermediate positions before reaching their final goals. In such settings, effective multi-agent collaboration can substantially reduce the time required to complete tasks. This paper introduces Centralized, Asynchronous, Multi-agent Monte Carlo Tree Search (CAM-MCTS), a novel framework for general-purpose makespan-efficient object rearrangement planning in challenging environments. CAM-MCTS combines centralized task assignment, where agents remain aware of each other's intended actions to facilitate globally optimized planning, with an asynchronous task execution strategy that enables agents to take on new tasks at appropriate time steps, rather than waiting for others, guided by a one-step look-ahead cost estimate. This design minimizes idle time, prevents unnecessary synchronization delays, and enhances overall system efficiency. We evaluate CAM-MCTS across a diverse set of monotone and non-monotone tasks in cluttered environments, demonstrating consistent reductions in makespan compared to strong baselines. Finally, we validate our approach on a real-world multi-agent system under different configurations, further confirming its effectiveness and robustness.

Goal Reaching with Eikonal-Constrained Hierarchical Quasimetric Reinforcement Learning

Dec 12, 2025

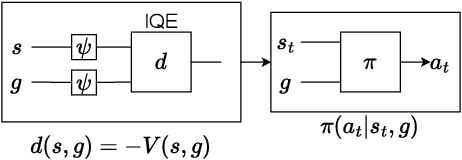

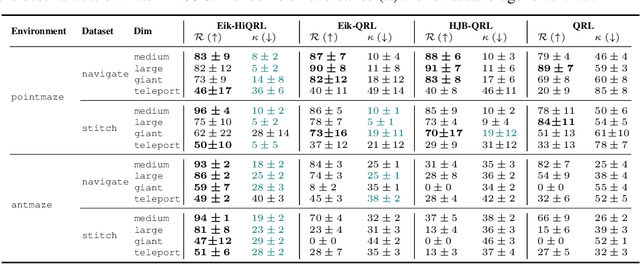

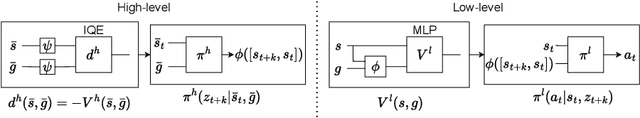

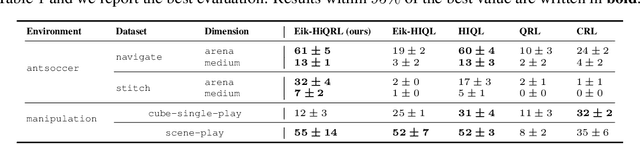

Abstract: Goal-Conditioned Reinforcement Learning (GCRL) mitigates the difficulty of reward design by framing tasks as goal reaching rather than maximizing hand-crafted reward signals. In this setting, the optimal goal-conditioned value function naturally forms a quasimetric, motivating Quasimetric RL (QRL), which constrains value learning to quasimetric mappings and enforces local consistency through discrete, trajectory-based constraints. We propose Eikonal-Constrained Quasimetric RL (Eik-QRL), a continuous-time reformulation of QRL based on the Eikonal Partial Differential Equation (PDE). This PDE-based structure makes Eik-QRL trajectory-free, requiring only sampled states and goals, while improving out-of-distribution generalization. We provide theoretical guarantees for Eik-QRL and identify limitations that arise under complex dynamics. To address these challenges, we introduce Eik-Hierarchical QRL (Eik-HiQRL), which integrates Eik-QRL into a hierarchical decomposition. Empirically, Eik-HiQRL achieves state-of-the-art performance in offline goal-conditioned navigation and yields consistent gains over QRL in manipulation tasks, matching temporal-difference methods.

Manifold-constrained Hamilton-Jacobi Reachability Learning for Decentralized Multi-Agent Motion Planning

Nov 05, 2025

Abstract: Safe multi-agent motion planning (MAMP) under task-induced constraints is a critical challenge in robotics. Many real-world scenarios require robots to navigate dynamic environments while adhering to manifold constraints imposed by tasks. For example, service robots must carry cups upright while avoiding collisions with humans or other robots. Despite recent advances in decentralized MAMP for high-dimensional systems, incorporating manifold constraints remains difficult. To address this, we propose a manifold-constrained Hamilton-Jacobi reachability (HJR) learning framework for decentralized MAMP. Our method solves HJR problems under manifold constraints to capture task-aware safety conditions, which are then integrated into a decentralized trajectory optimization planner. This enables robots to generate motion plans that are both safe and task-feasible without requiring assumptions about other agents' policies. Our approach generalizes across diverse manifold-constrained tasks and scales effectively to high-dimensional multi-agent manipulation problems. Experiments show that our method outperforms existing constrained motion planners and operates at speeds suitable for real-world applications. Video demonstrations are available at https://youtu.be/RYcEHMnPTH8.

Automaton Constrained Q-Learning

Oct 06, 2025

Abstract: Real-world robotic tasks often require agents to achieve sequences of goals while respecting time-varying safety constraints. However, standard Reinforcement Learning (RL) paradigms are fundamentally limited in these settings. A natural approach to these problems is to combine RL with Linear-time Temporal Logic (LTL), a formal language for specifying complex, temporally extended tasks and safety constraints. Yet, existing RL methods for LTL objectives exhibit poor empirical performance in complex and continuous environments. As a result, no scalable methods support both temporally ordered goals and safety simultaneously, making them ill-suited for realistic robotics scenarios. We propose Automaton Constrained Q-Learning (ACQL), an algorithm that addresses this gap by combining goal-conditioned value learning with automaton-guided reinforcement. ACQL supports most LTL task specifications and leverages their automaton representation to explicitly encode stage-wise goal progression and both stationary and non-stationary safety constraints. We show that ACQL outperforms existing methods across a range of continuous control tasks, including cases where prior methods fail to satisfy either goal-reaching or safety constraints. We further validate its real-world applicability by deploying ACQL on a 6-DOF robotic arm performing a goal-reaching task in a cluttered, cabinet-like space with safety constraints. Our results demonstrate that ACQL is a robust and scalable solution for learning robotic behaviors according to rich temporal specifications.

Online Hierarchical Policy Learning using Physics Priors for Robot Navigation in Unknown Environments

Oct 01, 2025

Abstract: Robot navigation in large, complex, and unknown indoor environments is a challenging problem. The existing approaches, such as traditional sampling-based methods, struggle with resolution control and scalability, while imitation learning-based methods require a large amount of demonstration data. Active Neural Time Fields (ANTFields) have recently emerged as a promising solution by using local observations to learn cost-to-go functions without relying on demonstrations. Despite their potential, these methods are hampered by challenges such as spectral bias and catastrophic forgetting, which diminish their effectiveness in complex scenarios. To address these issues, our approach decomposes the planning problem into a hierarchical structure. At the high level, a sparse graph captures the environment's global connectivity, while at the low level, a planner based on neural fields navigates local obstacles by solving the Eikonal PDE. This physics-informed strategy overcomes common pitfalls like spectral bias and neural field fitting difficulties, resulting in a smooth and precise representation of the cost landscape. We validate our framework in large-scale environments, demonstrating its enhanced adaptability and precision compared to previous methods, and highlighting its potential for online exploration, mapping, and real-world navigation.

Continuous-Time Value Iteration for Multi-Agent Reinforcement Learning

Sep 11, 2025

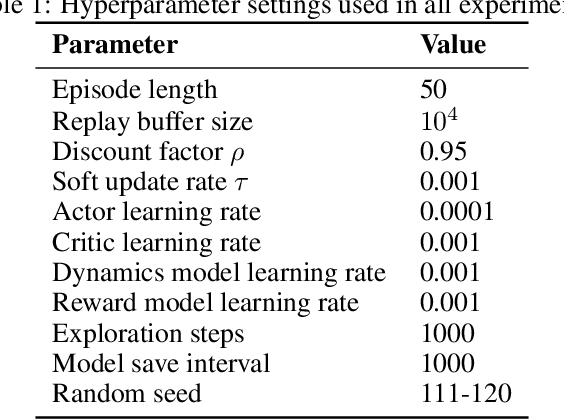

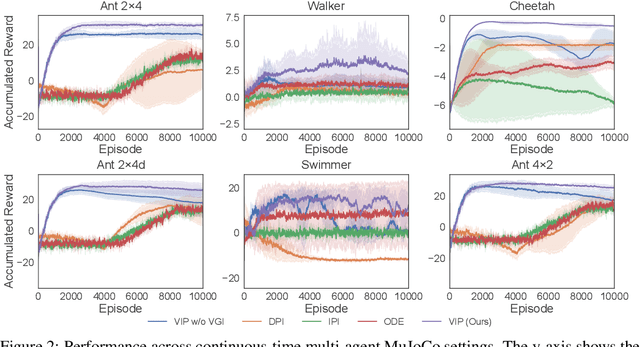

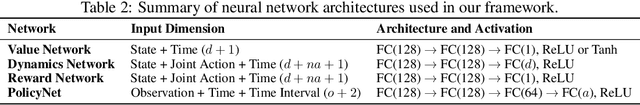

Abstract: Existing reinforcement learning (RL) methods struggle with complex dynamical systems that demand interactions at high frequencies or irregular time intervals. Continuous-time RL (CTRL) has emerged as a promising alternative by replacing discrete-time Bellman recursion with differential value functions defined as viscosity solutions of the Hamilton-Jacobi-Bellman (HJB) equation. While CTRL has shown promise, its applications have been largely limited to the single-agent domain. This limitation stems from two key challenges: (i) conventional solution methods for HJB equations suffer from the curse of dimensionality (CoD), making them intractable in high-dimensional systems; and (ii) even with HJB-based learning approaches, accurately approximating centralized value functions in multi-agent settings remains difficult, which in turn destabilizes policy training. In this paper, we propose a CT-MARL framework that uses physics-informed neural networks (PINNs) to approximate HJB-based value functions at scale. To ensure the value is consistent with its differential structure, we align value learning with value-gradient learning by introducing a Value Gradient Iteration (VGI) module that iteratively refines value gradients along trajectories. This improves gradient fidelity, in turn yielding more accurate values and stronger policy learning. We evaluate our method using continuous-time variants of standard benchmarks, including multi-agent particle environment (MPE) and multi-agent MuJoCo. Our results demonstrate that our approach consistently outperforms existing continuous-time RL baselines and scales to complex multi-agent dynamics.

Physics-informed Neural Motion Planning via Domain Decomposition in Large Environments

Jun 15, 2025

Abstract: Physics-informed Neural Motion Planners (PiNMPs) provide a data-efficient framework for solving the Eikonal Partial Differential Equation (PDE) and representing the cost-to-go function for motion planning. However, their scalability remains limited by spectral bias and the complex loss landscape of PDE-driven training. Domain decomposition mitigates these issues by dividing the environment into smaller subdomains, but existing methods enforce continuity only at individual spatial points. While effective for function approximation, these methods fail to capture the spatial connectivity required for motion planning, where the cost-to-go function depends on both the start and goal coordinates rather than a single query point. We propose Finite Basis Neural Time Fields (FB-NTFields), a novel neural field representation for scalable cost-to-go estimation. Instead of enforcing continuity in output space, FB-NTFields construct a latent space representation, computing the cost-to-go as a distance between the latent embeddings of start and goal coordinates. This enables global spatial coherence while integrating domain decomposition, ensuring efficient large-scale motion planning. We validate FB-NTFields in complex synthetic and real-world scenarios, demonstrating substantial improvements over existing PiNMPs. Finally, we deploy our method on a Unitree B1 quadruped robot, successfully navigating indoor environments. The supplementary videos can be found at https://youtu.be/OpRuCbLNOwM.

Dynamic Robot Tool Use with Vision Language Models

May 02, 2025

Abstract: Tool use enhances a robot's task capabilities. Recent advances in vision-language models (VLMs) have equipped robots with sophisticated cognitive capabilities for tool-use applications. However, existing methodologies focus on elementary quasi-static tool manipulations or high-level tool selection while neglecting the critical aspect of task-appropriate tool grasping. To address this limitation, we introduce inverse Tool-Use Planning (iTUP), a novel VLM-driven framework that enables grounded fine-grained planning for versatile robotic tool use. Through an integrated pipeline of VLM-based tool and contact point grounding, position-velocity trajectory planning, and physics-informed grasp generation and selection, iTUP demonstrates versatility across (1) quasi-static and more challenging (2) dynamic and (3) cluster tool-use tasks. To ensure robust planning, our framework integrates stable and safe task-aware grasping by reasoning over semantic affordances and physical constraints. We evaluate iTUP and baselines on a comprehensive range of realistic tool use tasks including precision hammering, object scooping, and cluster sweeping. Experimental results demonstrate that iTUP ensures a thorough grounding of cognition and planning for challenging robot tool use across diverse environments.

Implicit Physics-aware Policy for Dynamic Manipulation of Rigid Objects via Soft Body Tools

Feb 08, 2025

Abstract: Recent advancements in robot tool use have unlocked their usage for novel tasks, yet the predominant focus is on rigid-body tools, while the investigation of soft-body tools and their dynamic interaction with rigid bodies remains unexplored. This paper takes a pioneering step towards dynamic one-shot soft tool use for manipulating rigid objects, a challenging problem posed by complex interactions and unobservable physical properties. To address these problems, we propose the Implicit Physics-aware (IPA) policy, designed to facilitate effective soft tool use across various environmental configurations. The IPA policy conducts system identification to implicitly identify physics information and predict goal-conditioned, one-shot actions accordingly. We validate our approach through a challenging task, i.e., transporting rigid objects using soft tools such as ropes to distant target positions in a single attempt under unknown environment physics parameters. Our experimental results indicate the effectiveness of our method in efficiently identifying physical properties, accurately predicting actions, and smoothly generalizing to real-world environments. The related video is available at: https://youtu.be/4hPrUDTc4Rg?si=WUZrT2vjLMt8qRWA

Differentiable Composite Neural Signed Distance Fields for Robot Navigation in Dynamic Indoor Environments

Feb 04, 2025

Abstract: Neural Signed Distance Fields (SDFs) provide a differentiable environment representation to readily obtain collision checks and well-defined gradients for robot navigation tasks. However, updating neural SDFs as the scene evolves entails re-training, which is tedious, time-consuming, and inefficient, making it unsuitable for robot navigation with limited field-of-view in dynamic environments. To address this, we propose a compositional framework of neural SDFs to solve robot navigation in indoor environments using only an onboard RGB-D sensor. Our framework embodies a dual-mode procedure for trajectory optimization, with different modes using complementary methods of modeling collision costs and collision avoidance gradients. The primary stage queries the robot body's SDF, swept along the route to goal, at the obstacle point cloud, enabling swift local optimization of trajectories. The secondary stage infers the visible scene's SDF by aligning and composing the SDF representations of its constituents, providing better informed costs and gradients for trajectory optimization. The dual-mode procedure combines the best of both stages, achieving a success rate of 98%, 14.4% higher than the baseline, with comparable amortized plan time on iGibson 2.0. We also demonstrate its effectiveness in adapting to real-world indoor scenarios.
