Hanwen Ren

Multi-Agent Monte Carlo Tree Search for Makespan-Efficient Object Rearrangement in Cluttered Spaces

Feb 02, 2026

Abstract:Object rearrangement planning in complex, cluttered environments is a common challenge in warehouses, households, and rescue sites. Prior studies largely address monotone instances, whereas real-world tasks are often non-monotone: objects block one another and must be temporarily relocated to intermediate positions before reaching their final goals. In such settings, effective multi-agent collaboration can substantially reduce the time required to complete tasks. This paper introduces Centralized, Asynchronous, Multi-agent Monte Carlo Tree Search (CAM-MCTS), a novel framework for general-purpose makespan-efficient object rearrangement planning in challenging environments. CAM-MCTS combines centralized task assignment, in which agents remain aware of each other's intended actions to facilitate globally optimized planning, with an asynchronous task execution strategy that enables agents to take on new tasks at appropriate time steps, rather than waiting for others, guided by a one-step look-ahead cost estimate. This design minimizes idle time, prevents unnecessary synchronization delays, and enhances overall system efficiency. We evaluate CAM-MCTS across a diverse set of monotone and non-monotone tasks in cluttered environments, demonstrating consistent reductions in makespan compared to strong baselines. Finally, we validate our approach on a real-world multi-agent system under different configurations, further confirming its effectiveness and robustness.

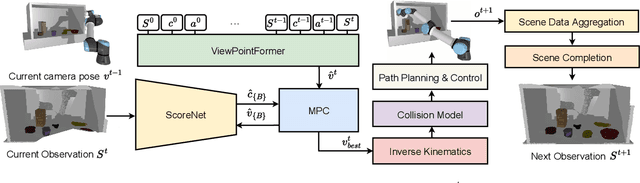

Integrating Active Sensing and Rearrangement Planning for Efficient Object Retrieval from Unknown, Confined, Cluttered Environments

Nov 18, 2024

Abstract:Retrieving target objects from unknown, confined spaces remains a challenging task that requires integrated, task-driven active sensing and rearrangement planning. Previous approaches have independently addressed active sensing and rearrangement planning, limiting their practicality in real-world scenarios. This paper presents a new, integrated heuristic-based active sensing and Monte-Carlo Tree Search (MCTS)-based retrieval planning approach. These components provide feedback to one another to actively sense critical, unobserved areas suitable for the retrieval planner to plan a sequence for relocating path-blocking obstacles and a collision-free trajectory for retrieving the target object. We demonstrate the effectiveness of our approach using a robot arm equipped with an in-hand camera in both simulated and real-world confined, cluttered scenarios. Our framework is compared against various state-of-the-art methods. The results indicate that our proposed approach outperforms baseline methods by a significant margin in terms of the success rate, the object rearrangement planning time consumption and the number of planning trials before successfully retrieving the target. Videos can be found at https://youtu.be/tea7I-3RtV0.

Neural Rearrangement Planning for Object Retrieval from Confined Spaces Perceivable by Robot's In-hand RGB-D Sensor

Feb 10, 2024

Abstract:Rearrangement planning for object retrieval tasks from confined spaces is a challenging problem, primarily due to the lack of open space for robot motion and limited perception. Several traditional methods exist to solve object retrieval tasks, but they require overhead cameras for perception and a time-consuming exhaustive search to find a solution, and they often make unrealistic assumptions, such as having identical, simple geometry objects in the environment. This paper presents a neural object retrieval framework that efficiently performs rearrangement planning of unknown, arbitrary objects in confined spaces to retrieve the desired object using a given robot grasp. Our method actively senses the environment with the robot's in-hand camera. It then selects and relocates the non-target objects such that they do not block the robot path homotopy to the target object, thus also aiding an underlying path planner in quickly finding robot motion sequences. Furthermore, we demonstrate our framework in challenging scenarios, including real-world cabinet-like environments with arbitrary household objects. The results show that our framework achieves the best performance among all presented methods and is, on average, two orders of magnitude computationally faster than the best-performing baselines.

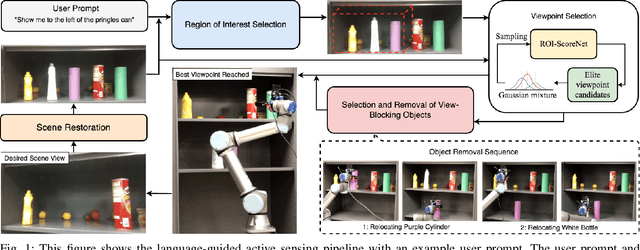

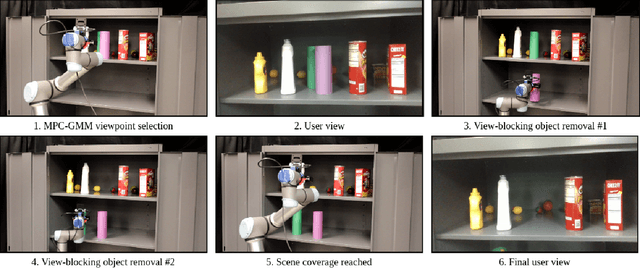

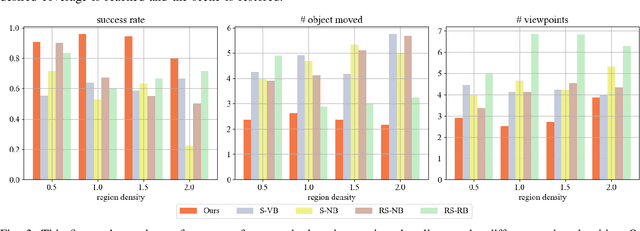

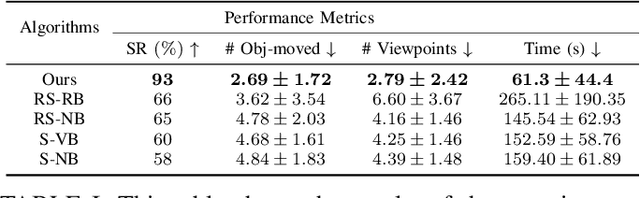

Language-guided Active Sensing of Confined, Cluttered Environments via Object Rearrangement Planning

Feb 04, 2024

Abstract:Language-guided active sensing is a robotics subtask where a robot with an onboard sensor interacts efficiently with the environment via object manipulation to maximize perceptual information, following given language instructions. These tasks appear in various practical robotics applications, such as household service, search and rescue, and environment monitoring. Despite many applications, the existing works do not account for language instructions and have mainly focused on surface sensing, i.e., perceiving the environment from the outside without rearranging it for dense sensing. Therefore, in this paper, we introduce the first language-guided active sensing approach that allows users to observe specific parts of the environment via object manipulation. Our method spatially associates the environment with language instructions, determines the best camera viewpoints for perception, and then iteratively selects and relocates the best view-blocking objects to provide the dense perception of the region of interest. We evaluate our method against different baseline algorithms in simulation and also demonstrate it in real-world confined cabinet-like settings with multiple unknown objects. Our results show that the proposed method exhibits better performance across different metrics and successfully generalizes to real-world complex scenarios.

Multi-Stage Monte Carlo Tree Search for Non-Monotone Object Rearrangement Planning in Narrow Confined Environments

May 26, 2023

Abstract:Non-monotone object rearrangement planning in confined spaces such as cabinets and shelves is a widely occurring but challenging problem in robotics. Both the robot motion and the available regions for object relocation are highly constrained because of the limited space. This work proposes a Multi-Stage Monte Carlo Tree Search (MS-MCTS) method to solve non-monotone object rearrangement planning problems in confined spaces. Our approach decouples the complex problem into simpler subproblems using an object stage topology. A subgoal-focused tree expansion algorithm that jointly considers the high-level planning and the low-level robot motion is designed to reduce the search space and better guide the search process. By fitting the task into the MCTS paradigm, our method produces optimistic solutions by balancing exploration and exploitation. The experiments demonstrate that our method outperforms the existing methods regarding the planning time, the number of steps, and the total move distance. Moreover, we deploy our MS-MCTS to a real-world robot system and verify its performance in different confined environments.

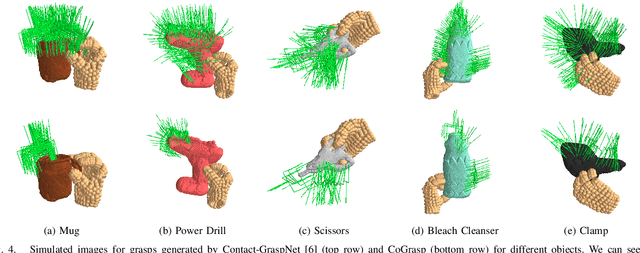

CoGrasp: 6-DoF Grasp Generation for Human-Robot Collaboration

Oct 06, 2022

Abstract:Robot grasping is an actively studied area in robotics, mainly focusing on the quality of generated grasps for object manipulation. However, despite advancements, these methods do not consider the human-robot collaboration settings where robots and humans will have to grasp the same objects concurrently. Therefore, generating robot grasps compatible with human preferences of simultaneously holding an object becomes necessary to ensure a safe and natural collaboration experience. In this paper, we propose a novel, deep neural network-based method called CoGrasp that generates human-aware robot grasps by contextualizing human preference models of object grasping into the robot grasp selection process. We validate our approach against existing state-of-the-art robot grasping methods through simulated and real-robot experiments and user studies. In real robot experiments, our method achieves about 88% success rate in producing stable grasps that also allow humans to interact and grasp objects simultaneously in a socially compliant manner. Furthermore, our user study with 10 independent participants indicated our approach enables a safe, natural, and socially-aware human-robot objects' co-grasping experience compared to a standard robot grasping technique.

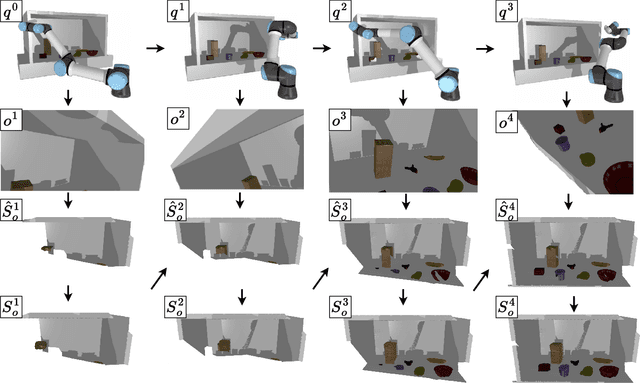

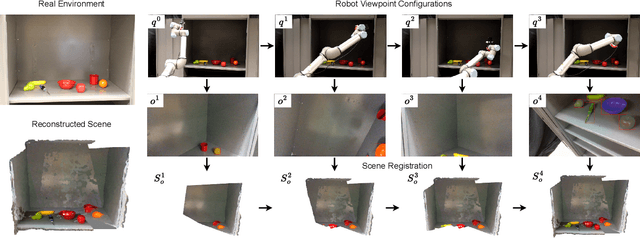

Robot Active Neural Sensing and Planning in Unknown Cluttered Environments

Aug 24, 2022

Abstract:Active sensing and planning in unknown, cluttered environments is an open challenge for robots intending to provide home service, search and rescue, narrow-passage inspection, and medical assistance. Although many active sensing methods exist, they often consider open spaces, assume known settings, or mostly do not generalize to real-world scenarios. We present the active neural sensing approach that generates the kinematically feasible viewpoint sequences for the robot manipulator with an in-hand camera to gather the minimum number of observations needed to reconstruct the underlying environment. Our framework actively collects the visual RGBD observations, aggregates them into scene representation, and performs object shape inference to avoid unnecessary robot interactions with the environment. We train our approach on synthetic data with domain randomization and demonstrate its successful execution via sim-to-real transfer in reconstructing narrow, covered, real-world cabinet environments cluttered with unknown objects. The natural cabinet scenarios impose significant challenges for robot motion and scene reconstruction due to surrounding obstacles and low ambient lighting conditions. However, despite unfavorable settings, our method exhibits high performance compared to its baselines in terms of various environment reconstruction metrics, including planning speed, the number of viewpoints, and overall scene coverage.
