Zijing Zhang

RLVMR: Reinforcement Learning with Verifiable Meta-Reasoning Rewards for Robust Long-Horizon Agents

Jul 30, 2025

Abstract:The development of autonomous agents for complex, long-horizon tasks is a central goal in AI. However, dominant training paradigms face a critical limitation: reinforcement learning (RL) methods that optimize solely for final task success often reinforce flawed or inefficient reasoning paths, a problem we term inefficient exploration. This leads to agents that are brittle and fail to generalize, as they learn to find solutions without learning how to reason coherently. To address this, we introduce RLVMR, a novel framework that integrates dense, process-level supervision into end-to-end RL by rewarding verifiable, meta-reasoning behaviors. RLVMR equips an agent to explicitly tag its cognitive steps, such as planning, exploration, and reflection, and provides programmatic, rule-based rewards for actions that contribute to effective problem-solving. These process-centric rewards are combined with the final outcome signal and optimized using a critic-free policy gradient method. On the challenging ALFWorld and ScienceWorld benchmarks, RLVMR achieves new state-of-the-art results, with our 7B model reaching an 83.6% success rate on the most difficult unseen task split. Our analysis confirms these gains stem from improved reasoning quality, including significant reductions in redundant actions and enhanced error recovery, leading to more robust, efficient, and interpretable agents.
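As a rough illustration of how process-level rewards might be combined with the outcome signal and optimized without a critic, the sketch below sums rule-based bonuses over tagged steps, adds a terminal success reward, and normalizes returns within a group of rollouts. The bonus values, the `useful` flag, and the group-normalized baseline are assumptions made for illustration; the abstract names the tags and the critic-free setup but does not specify these details.

```python
import statistics

# Hypothetical per-tag bonuses; the abstract names the tags but not the values.
TAG_BONUS = {"planning": 0.1, "exploration": 0.05, "reflection": 0.1}


def trajectory_return(steps, success, outcome_reward=1.0):
    """Sum rule-based process rewards and add the final outcome signal.

    steps: list of (tag, useful) pairs, where `useful` is a bool produced by
    some programmatic check (assumed here, not specified in the abstract).
    """
    process = sum(TAG_BONUS.get(tag, 0.0) for tag, useful in steps if useful)
    return process + (outcome_reward if success else 0.0)


def group_relative_advantages(returns):
    """Critic-free baseline (assumed): normalize returns within a rollout group."""
    mean = statistics.fmean(returns)
    std = statistics.pstdev(returns)
    if std == 0.0:
        # All rollouts scored the same, so there is no learning signal.
        return [0.0 for _ in returns]
    return [(r - mean) / std for r in returns]


# Two rollouts of the same task: one succeeds with a useful plan, one fails.
good = trajectory_return([("planning", True), ("exploration", False)], success=True)
bad = trajectory_return([("exploration", False)], success=False)
advantages = group_relative_advantages([good, bad])
```

Each advantage would then weight the log-probabilities of the corresponding rollout's tokens in a policy-gradient update, so no learned value function is needed.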

GRAIN: Multi-Granular and Implicit Information Aggregation Graph Neural Network for Heterophilous Graphs

Apr 09, 2025

Abstract:Graph neural networks (GNNs) have shown significant success in learning graph representations. However, recent studies reveal that GNNs often fail to outperform simple MLPs on heterophilous graph tasks, where connected nodes may differ in features or labels, challenging the homophily assumption. Existing methods addressing this issue often overlook the importance of information granularity and rarely consider implicit relationships between distant nodes. To overcome these limitations, we propose the Granular and Implicit Graph Network (GRAIN), a novel GNN model specifically designed for heterophilous graphs. GRAIN enhances node embeddings by aggregating multi-view information at various granularity levels and incorporating implicit data from distant, non-neighboring nodes. This approach effectively integrates local and global information, resulting in smoother, more accurate node representations. We also introduce an adaptive graph information aggregator that efficiently combines multi-granularity and implicit data, significantly improving node representation quality, as shown by experiments on 13 datasets covering varying homophily and heterophily. GRAIN consistently outperforms 12 state-of-the-art models, excelling on both homophilous and heterophilous graphs.

Skeleton-Parted Graph Scattering Networks for 3D Human Motion Prediction

Jul 31, 2022

Abstract:Graph convolutional network-based methods that model the relations among body joints have recently shown great promise in 3D skeleton-based human motion prediction. However, these methods have two critical issues: first, deep graph convolutions filter features within only limited graph spectrums, losing sufficient information in the full band; second, using a single graph to model the whole body underestimates the diverse patterns on various body-parts. To address the first issue, we propose adaptive graph scattering, which leverages multiple trainable band-pass graph filters to decompose pose features into richer graph spectrum bands. To address the second issue, body-parts are modeled separately to learn diverse dynamics, which enables finer feature extraction along the spatial dimensions. Integrating the above two designs, we propose a novel skeleton-parted graph scattering network (SPGSN). The cores of the model are cascaded multi-part graph scattering blocks (MPGSBs), building adaptive graph scattering on diverse body-parts, as well as fusing the decomposed features based on the inferred spectrum importance and body-part interactions. Extensive experiments have shown that SPGSN outperforms state-of-the-art methods by remarkable margins of 13.8%, 9.3% and 2.7% in terms of 3D mean per joint position error (MPJPE) on Human3.6M, CMU Mocap and 3DPW datasets, respectively.

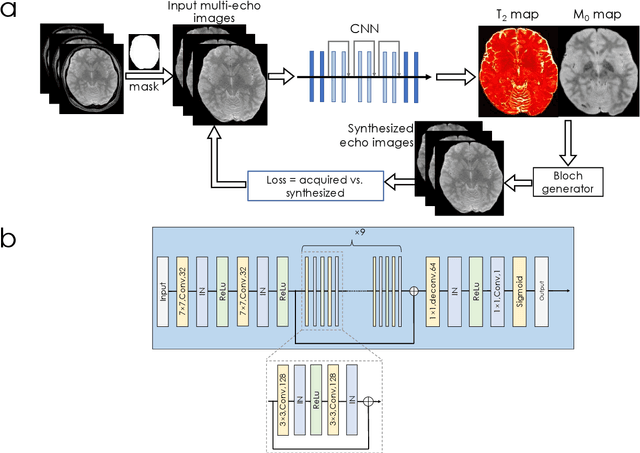

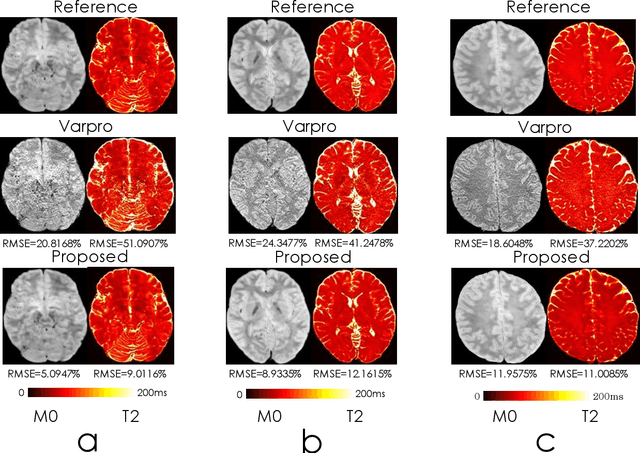

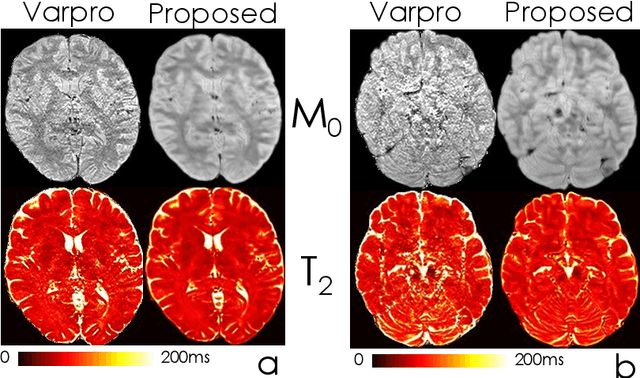

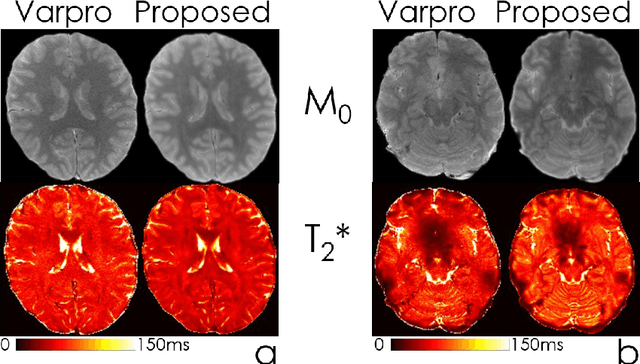

Accurate parameter estimation using scan-specific unsupervised deep learning for relaxometry and MR fingerprinting

Dec 13, 2021

Abstract:We propose an unsupervised convolutional neural network (CNN) for relaxation parameter estimation. This network incorporates signal relaxation and Bloch simulations while taking advantage of residual learning and spatial relations across neighboring voxels. Quantification accuracy and robustness to noise is shown to be significantly improved compared to standard parameter estimation methods in numerical simulations and in vivo data for multi-echo T2 and T2* mapping. The combination of the proposed network with subspace modeling and MR fingerprinting (MRF) from highly undersampled data permits high quality T1 and T2 mapping.
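For context, one common conventional approach to multi-echo T2 mapping is a voxel-wise mono-exponential fit of S(TE) = S0 · exp(-TE / T2), linearized by taking the logarithm of the signal. The sketch below shows that baseline only; it is not the proposed network, and the abstract does not state which standard methods were used for comparison. The function name and the synthetic echo times are illustrative assumptions.

```python
import math


def fit_t2_loglinear(echo_times_ms, signals):
    """Least-squares line through (TE, ln S): slope = -1/T2, intercept = ln S0."""
    n = len(echo_times_ms)
    logs = [math.log(s) for s in signals]
    mean_te = sum(echo_times_ms) / n
    mean_log = sum(logs) / n
    sxx = sum((te - mean_te) ** 2 for te in echo_times_ms)
    sxy = sum((te - mean_te) * (y - mean_log)
              for te, y in zip(echo_times_ms, logs))
    slope = sxy / sxx
    s0 = math.exp(mean_log - slope * mean_te)
    return s0, -1.0 / slope


# Noise-free synthetic voxel with S0 = 100 and T2 = 80 ms (made-up values).
tes = [10.0, 30.0, 50.0, 70.0, 90.0]
sig = [100.0 * math.exp(-te / 80.0) for te in tes]
s0_hat, t2_hat = fit_t2_loglinear(tes, sig)
```

On noisy data the log transform amplifies noise in the late, low-signal echoes, which is one reason such voxel-wise fits can be less robust than methods that exploit neighboring voxels.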

BUDA-SAGE with self-supervised denoising enables fast, distortion-free, high-resolution T2, T2*, para- and dia-magnetic susceptibility mapping

Sep 09, 2021

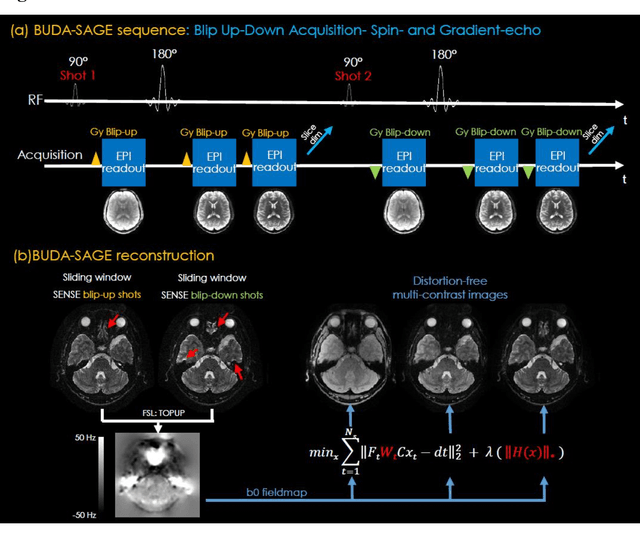

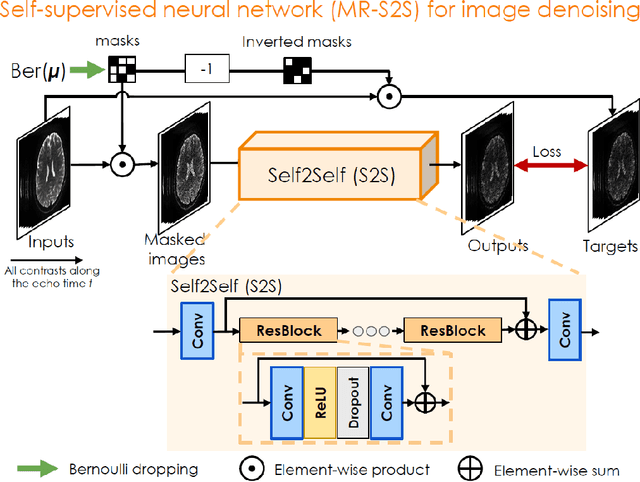

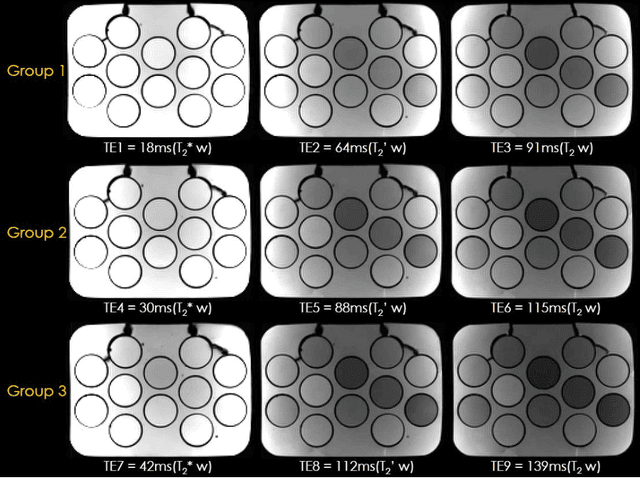

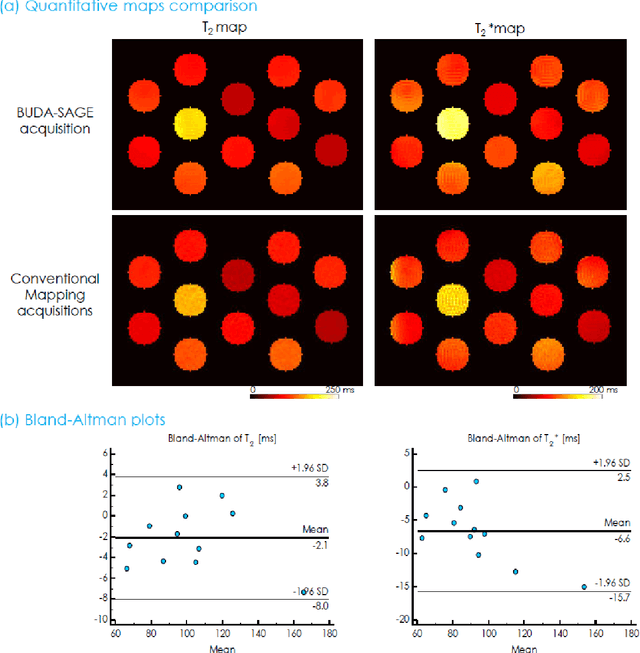

Abstract:To rapidly obtain high resolution T2, T2* and quantitative susceptibility mapping (QSM) source separation maps with whole-brain coverage and high geometric fidelity. We propose Blip Up-Down Acquisition for Spin And Gradient Echo imaging (BUDA-SAGE), an efficient echo-planar imaging (EPI) sequence for quantitative mapping. The acquisition includes multiple T2*-, T2'- and T2-weighted contrasts. We alternate the phase-encoding polarities across the interleaved shots in this multi-shot navigator-free acquisition. A field map estimated from interim reconstructions was incorporated into the joint multi-shot EPI reconstruction with a structured low rank constraint to eliminate geometric distortion. A self-supervised MR-Self2Self (MR-S2S) neural network (NN) was utilized to perform denoising after BUDA reconstruction to boost SNR. Employing Slider encoding allowed us to reach 1 mm isotropic resolution by performing super-resolution reconstruction on BUDA-SAGE volumes acquired with 2 mm slice thickness. Quantitative T2 and T2* maps were obtained using Bloch dictionary matching on the reconstructed echoes. QSM was estimated using nonlinear dipole inversion (NDI) on the gradient echoes. Starting from the estimated R2 and R2* maps, R2' information was derived and used in source separation QSM reconstruction, which provided additional para- and dia-magnetic susceptibility maps. In vivo results demonstrate the ability of BUDA-SAGE to provide whole-brain, distortion-free, high-resolution multi-contrast images and quantitative T2 and T2* maps, as well as yielding para- and dia-magnetic susceptibility maps. Derived quantitative maps showed comparable values to conventional mapping methods in phantom and in vivo measurements. BUDA-SAGE acquisition with self-supervised denoising and Slider encoding enabled rapid, distortion-free, whole-brain T2, T2* mapping at 1 mm³ isotropic resolution in 90 seconds.
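The step of deriving R2' from the R2 and R2* maps rests on the standard relation R2* = R2 + R2', with R2 = 1/T2 and R2* = 1/T2*. A minimal per-voxel sketch follows; the function name and the clamping of negative values are assumptions for illustration, not details given in the abstract.

```python
def r2_prime_per_s(t2_ms, t2_star_ms):
    """Return R2' = R2* - R2 in 1/s, given T2 and T2* in milliseconds."""
    r2 = 1000.0 / t2_ms            # 1/s
    r2_star = 1000.0 / t2_star_ms  # 1/s
    # Assumed handling: clamp at zero, since noise can make R2* < R2.
    return max(r2_star - r2, 0.0)


# Made-up voxel values: T2 = 80 ms, T2* = 40 ms.
example = r2_prime_per_s(80.0, 40.0)
```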

Highly Accelerated EPI with Wave Encoding and Multi-shot Simultaneous Multi-Slice Imaging

Jun 03, 2021

Abstract:We introduce wave encoded acquisition and reconstruction techniques for highly accelerated echo planar imaging (EPI) with reduced g-factor penalty and image artifacts. Wave-EPI involves playing sinusoidal gradients during the EPI readout while employing interslice shifts as in blipped-CAIPI acquisitions. This spreads the aliasing in all spatial directions, thereby taking better advantage of 3D coil sensitivity profiles. The amount of voxel spreading that can be achieved by the wave gradients during the short EPI readout period is constrained by the slew rate of the gradient coils and peripheral nerve stimulation (PNS) monitor. We propose to use a half-cycle sinusoidal gradient to increase the amount of voxel spreading that can be achieved while respecting the slew and stimulation constraints. Extending wave-EPI to multi-shot acquisition minimizes geometric distortion and voxel blurring at high in-plane resolution, while structured low-rank regularization mitigates shot-to-shot phase variations without additional navigators. We propose to use different point spread functions (PSFs) for the k-space lines with positive and negative polarities, which are calibrated with a FLEET-based reference scan and allow for addressing gradient imperfections. Wave-EPI provided whole-brain single-shot gradient echo (GE) and multi-shot spin echo (SE) EPI acquisitions at high acceleration factors and was combined with g-Slider slab encoding to boost the SNR level in 1mm isotropic diffusion imaging. Relative to blipped-CAIPI, wave-EPI reduced average and maximum g-factors by up to 1.21- and 1.37-fold, respectively. In conclusion, wave-EPI allows highly accelerated single- and multi-shot EPI with reduced g-factor and artifacts and may facilitate clinical and neuroscientific applications of EPI by improving the spatial and temporal resolution in functional and diffusion imaging.
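To see why a lower g-factor matters, recall the standard parallel-imaging relation SNR_accel = SNR_full / (g · sqrt(R)), where R is the acceleration factor. The sketch below applies it with made-up numbers; only the 1.37-fold reduction is taken from the abstract, and the baseline g-factor, acceleration factor, and SNR are hypothetical.

```python
import math


def accelerated_snr(snr_full, acceleration, g_factor):
    """Standard parallel-imaging SNR relation: SNR_full / (g * sqrt(R))."""
    return snr_full / (g_factor * math.sqrt(acceleration))


# Hypothetical voxel: fully sampled SNR of 100, R = 4, baseline g = 2.0.
baseline = accelerated_snr(100.0, 4.0, 2.0)
# Same voxel if the g-factor were reduced 1.37-fold.
improved = accelerated_snr(100.0, 4.0, 2.0 / 1.37)
```

At a fixed acceleration factor, the SNR gain equals the fold reduction in g-factor, so `improved / baseline` is 1.37 in this example.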

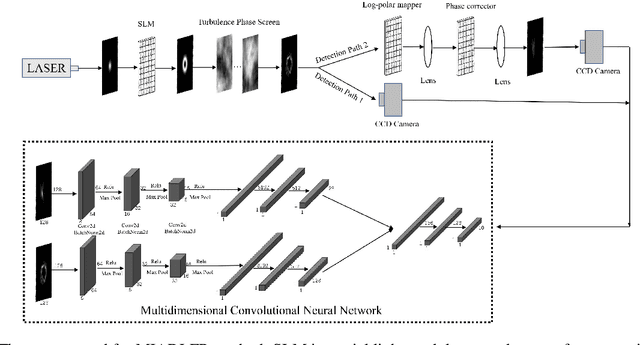

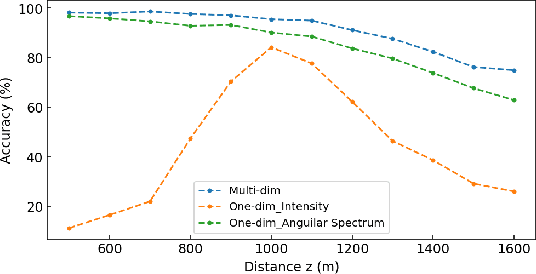

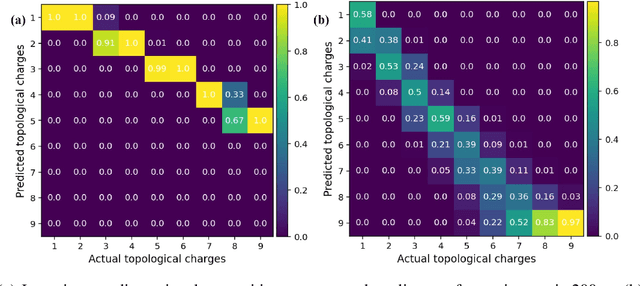

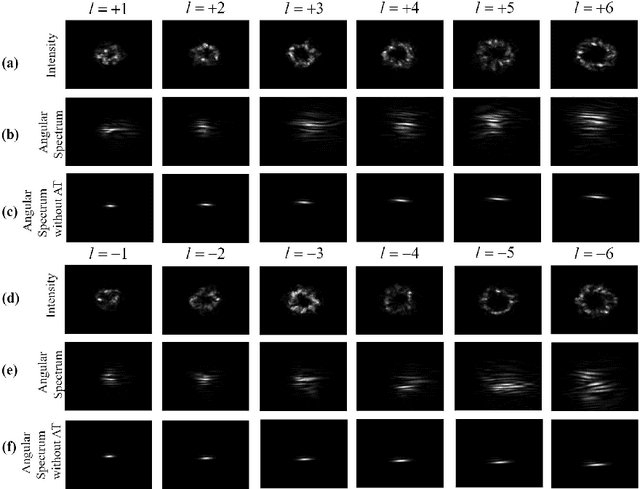

Multidimensional Information Assisted Deep Learning Realizing Flexible Recognition of Vortex Beam Modes

Jan 18, 2021

Abstract:Because of its unbounded state space, orbital angular momentum (OAM), as a new degree of freedom of light, has attracted great attention in the optical communication field. Recently, a number of studies have applied deep learning to the recognition of OAM modes through atmospheric turbulence. However, previous deep learning recognition methods have several limitations. They all require a constant distance between the laser and receiver, which makes them inflexible and impractical. As far as we know, previous deep learning methods cannot distinguish vortex beams with positive and negative topological charges, which reduces information capacity. A Multidimensional Information Assisted Deep Learning Flexible Recognition (MIADLFR) method is proposed in this letter. In MIADLFR, we utilize not only the intensity profile but also spectrum information to recognize OAM modes regardless of distance and sign of topological charge (TC). As far as we know, we are the first to use multidimensional information, and the first to use spectrum information, to recognize OAM modes. Recognition of OAM modes unlimited by distance and sign of TC, as achieved by the MIADLFR method, can make optical communication and detection by OAM light much more attractive.
