Zhiguang Qin

Multiple Instance Segmentation in Brachial Plexus Ultrasound Image Using BPMSegNet

Dec 22, 2020

Abstract: Identifying nerves is difficult because nerve structures are challenging to image and to detect in ultrasound images. Nevertheless, nerve identification in ultrasound images is a crucial step in improving the performance of regional anesthesia. In this paper, a network called Brachial Plexus Multi-instance Segmentation Network (BPMSegNet) is proposed to identify different tissues (nerves, arteries, veins, muscles) in ultrasound images. BPMSegNet has three novel modules. The first is the spatial local contrast feature, which computes contrast features at different scales. The second is the self-attention gate, which reweights the channels in feature maps by their importance. The third is a skip concatenation with transposed convolution added within a feature pyramid network. The proposed BPMSegNet is evaluated through experiments on our constructed Ultrasound Brachial Plexus Dataset (UBPD). Quantitative experimental results show that the proposed network can segment multiple tissues from ultrasound images with good performance.

A Multi-View Dynamic Fusion Framework: How to Improve the Multimodal Brain Tumor Segmentation from Multi-Views?

Dec 21, 2020

Abstract: When diagnosing a brain tumor, doctors usually observe multimodal brain images from the axial, coronal and sagittal views in turn, and then make a comprehensive decision based on the information obtained from all of the views. Inspired by this diagnostic process, and in order to further exploit the 3D information hidden in the dataset, this paper proposes a multi-view dynamic fusion framework to improve the performance of brain tumor segmentation. The proposed framework consists of 1) a multi-view deep neural network architecture, comprising multiple learning networks that segment the brain tumor from different views, with each network operating on the multimodal brain images of a single view, and 2) a dynamic decision fusion method, which fuses the segmentation results from the multiple views into an integrated one; two fusion methods, voting and weighted averaging, are adopted to evaluate the fusion process. Moreover, a multi-view fusion loss, which consists of the segmentation loss, the transition loss and the decision loss, is proposed to facilitate the training of the multi-view learning networks, so as to keep the consistency of appearance and space both when fusing the segmentation results and when training the networks. Evaluation on BRATS 2015 and BRATS 2018 shows that the fusion results from multiple views outperform the segmentation result from a single view, and demonstrates the effectiveness of the proposed multi-view fusion loss. Moreover, the proposed framework achieves better segmentation performance and higher efficiency than counterpart methods.
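
The two fusion methods named in the abstract, voting and weighted averaging, can be illustrated with a minimal NumPy sketch. This is not the paper's implementation: the function names, the array layouts, the fixed weights and the tie-breaking rule (lowest class index wins) are all assumptions made for illustration, and the "dynamic" part of the fusion is not modeled here.

```python
import numpy as np

def fuse_by_voting(label_maps):
    """Majority vote over per-view label maps of identical shape.

    Hypothetical helper: ties are resolved in favor of the lowest class index.
    """
    stacked = np.stack(label_maps, axis=0)            # (views, ...)
    n_classes = int(stacked.max()) + 1
    # Count the votes each class receives at every position.
    votes = np.stack(
        [(stacked == c).sum(axis=0) for c in range(n_classes)], axis=0
    )                                                 # (classes, ...)
    return votes.argmax(axis=0)

def fuse_by_weighted_average(prob_maps, weights):
    """Weighted average of per-view class probabilities, followed by argmax.

    Each entry of prob_maps has shape (classes, ...); weights has one value
    per view and is normalized to sum to 1 (an assumption of this sketch).
    """
    stacked = np.stack(prob_maps, axis=0)             # (views, classes, ...)
    w = np.asarray(weights, dtype=float)
    w = w / w.sum()
    fused = np.tensordot(w, stacked, axes=(0, 0))     # (classes, ...)
    return fused.argmax(axis=0)

# Toy label maps standing in for axial, coronal and sagittal predictions.
axial = np.array([[0, 1], [2, 2]])
coronal = np.array([[0, 1], [1, 2]])
sagittal = np.array([[1, 1], [1, 0]])
voted = fuse_by_voting([axial, coronal, sagittal])
```

In this toy case each position takes the label that at least two of the three views agree on. The weighted variant works on class probabilities instead of hard labels, so a view that is trusted more can outweigh the other two.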

DeepKeyGen: A Deep Learning-based Stream Cipher Generator for Medical Image Encryption and Decryption

Dec 21, 2020

Abstract: The need for medical image encryption is increasingly pronounced, for example to safeguard the privacy of patients' medical imaging data. In this paper, a novel deep learning-based key generation network (DeepKeyGen) is proposed as a stream cipher generator to generate the private key, which can then be used for encrypting and decrypting medical images. In DeepKeyGen, the generative adversarial network (GAN) is adopted as the learning network to generate the private key. Furthermore, the transformation domain (which represents the "style" of the private key to be generated) is designed to guide the learning network through the private key generation process. The goal of DeepKeyGen is to learn the mapping that transfers the initial image to the private key. We evaluate DeepKeyGen using three datasets: the Montgomery County chest X-ray dataset, the Ultrasonic Brachial Plexus dataset, and the BraTS18 dataset. The evaluation findings and security analysis show that the proposed key generation network can achieve a high level of security in generating the private key.
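
To show how a generated key could be applied as a stream cipher, the sketch below XORs an image with a key of the same shape. The XOR step is an assumption (it is the usual stream-cipher operation; the abstract does not state how the key is applied), `xor_cipher` is a hypothetical helper, and the random key is only a stand-in for a DeepKeyGen output and is not secure.

```python
import numpy as np

def xor_cipher(image, key):
    """Encrypt or decrypt a uint8 image by XOR-ing it with a same-shape key.

    XOR is its own inverse, so the same call performs both directions.
    """
    if image.shape != key.shape:
        raise ValueError("image and key must have the same shape")
    if image.dtype != np.uint8 or key.dtype != np.uint8:
        raise TypeError("image and key must both be uint8 arrays")
    return np.bitwise_xor(image, key)

rng = np.random.default_rng(0)
# Toy 8-bit "medical image"; real inputs would be full-size scans.
image = rng.integers(0, 256, size=(4, 4), dtype=np.uint8)
# Stand-in for the generated private key (assumption: same size as the image).
key = rng.integers(0, 256, size=(4, 4), dtype=np.uint8)

ciphertext = xor_cipher(image, key)
recovered = xor_cipher(ciphertext, key)
```

Applying the same key a second time restores the original image, which is why one private key serves for both encryption and decryption in this scheme.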

DeepEDN: A Deep Learning-based Image Encryption and Decryption Network for Internet of Medical Things

May 05, 2020

Abstract: The Internet of Medical Things (IoMT) can connect many medical imaging devices to the medical information network to facilitate diagnosis and treatment by doctors. As medical images contain sensitive information, it is important yet very challenging to safeguard the privacy and security of the patient. In this work, a deep learning-based encryption and decryption network (DeepEDN) is proposed to encrypt and decrypt medical images. Specifically, in DeepEDN, the Cycle-Generative Adversarial Network (Cycle-GAN) is employed as the main learning network to transfer the medical image from its original domain into the target domain. The target domain is regarded as the "hidden factors" that guide the learning model in realizing the encryption. The encrypted image is restored to the original (plaintext) image through a reconstruction network to achieve image decryption. To facilitate data mining directly in the privacy-protected environment, a region of interest (ROI) mining network is proposed to extract the object of interest from the encrypted image. The proposed DeepEDN is evaluated on the chest X-ray dataset. Extensive experimental results and security analysis show that the proposed method can achieve a high level of security with good efficiency.

Modeling Embedding Dimension Correlations via Convolutional Neural Collaborative Filtering

Jun 26, 2019

Abstract: As the core of recommender systems, collaborative filtering (CF) models the affinity between a user and an item from historical user-item interactions, such as clicks and purchases. Benefiting from their strong representation power, neural networks have recently revolutionized recommendation research, setting a new standard for CF. However, existing neural recommender models do not explicitly consider the correlations among embedding dimensions, making them less effective in modeling the interaction function between users and items. In this work, we emphasize modeling the correlations among embedding dimensions in neural networks to pursue higher effectiveness for CF. We propose a novel and general neural collaborative filtering framework, namely ConvNCF, which features two designs: 1) applying the outer product to the user embedding and item embedding to explicitly model the pairwise correlations between embedding dimensions, and 2) employing a convolutional neural network above the outer product to learn the high-order correlations among embedding dimensions. To justify our proposal, we present three instantiations of ConvNCF that use different inputs to represent a user, and conduct experiments on two real-world datasets. Extensive results verify the utility of modeling embedding dimension correlations with ConvNCF, which outperforms several competitive CF methods.
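
The two designs described in the abstract can be sketched in NumPy: an outer product that turns a user embedding and an item embedding into a 2D interaction map, and a convolution applied on top of that map. The embedding size, the 2x2 kernel with stride 2, and the helper names are assumptions chosen for illustration; the abstract does not specify them.

```python
import numpy as np

def interaction_map(user_emb, item_emb):
    """Outer product: entry (i, j) pairs user dimension i with item dimension j."""
    return np.outer(user_emb, item_emb)

def conv2x2_stride2(feature_map, kernel):
    """Single-channel 2x2 convolution with stride 2 (assumed configuration).

    Each output entry summarizes one non-overlapping 2x2 patch, so the map
    halves in each dimension. Requires even height and width.
    """
    h, w = feature_map.shape
    # (h, w) -> (h/2, 2, w/2, 2) -> (h/2, w/2, 2, 2): one 2x2 patch per output cell.
    patches = feature_map.reshape(h // 2, 2, w // 2, 2).transpose(0, 2, 1, 3)
    return np.einsum("ijkl,kl->ij", patches, kernel)

# Toy 4-dimensional embeddings (real embeddings would be learned).
user = np.array([1.0, 2.0, 3.0, 4.0])
item = np.array([1.0, 0.0, -1.0, 2.0])

E = interaction_map(user, item)            # shape (4, 4)
pooled = conv2x2_stride2(E, np.ones((2, 2)))  # shape (2, 2)
```

Stacking such layers lets later layers combine information from progressively larger blocks of the interaction map, which is one way to read the "high-order correlations" the abstract refers to.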

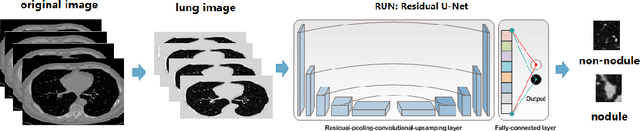

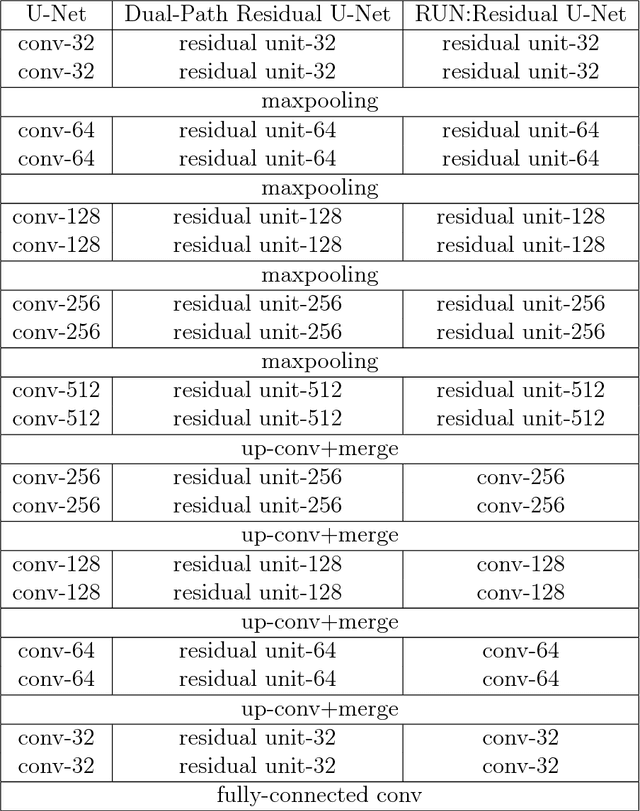

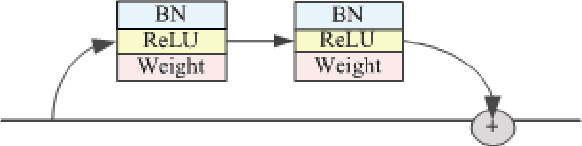

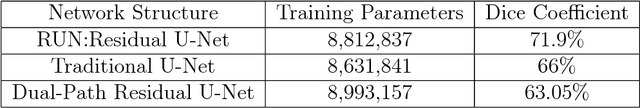

RUN: Residual U-Net for Computer-Aided Detection of Pulmonary Nodules without Candidate Selection

May 30, 2018

Abstract: Early detection and early diagnosis of lung cancer are crucial to improving the survival rate of lung cancer patients. Pulmonary nodule detection results have a significant impact on the later diagnosis. In this work, we propose a new network named RUN that completes nodule detection in a single step by bypassing candidate selection. The system introduces the shortcut connections of the residual network into the traditional U-Net, thereby addressing the poor results caused by its lack of depth. Furthermore, we compare the experimental results with those of the traditional U-Net. We validate our method on the LUng Nodule Analysis 2016 (LUNA16) Nodule Detection Challenge. We achieve a sensitivity of 90.90% at 2 false positives per scan, and therefore better performance than the current state-of-the-art approaches.
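
The reported metric, sensitivity at a given number of false positives per scan, can be made concrete with a small sketch. The data layout (each detection is a `(score, nodule_id)` pair, with `None` marking a false positive) and the function name are hypothetical; this is not the LUNA16 evaluation script, and the toy numbers are not results from the paper.

```python
def operating_point(scans, threshold):
    """Return (sensitivity, false positives per scan) at a score threshold.

    scans: list of dicts with
      'n_nodules'  - number of annotated nodules in the scan
      'detections' - list of (score, nodule_id or None); None = false positive
    """
    total_nodules = sum(scan["n_nodules"] for scan in scans)
    found = 0
    false_positives = 0
    for scan in scans:
        kept = [d for d in scan["detections"] if d[0] >= threshold]
        # A nodule counts once, however many detections hit it.
        found += len({nid for _, nid in kept if nid is not None})
        false_positives += sum(1 for _, nid in kept if nid is None)
    return found / total_nodules, false_positives / len(scans)

# Toy data for two scans.
scans = [
    {"n_nodules": 2, "detections": [(0.9, 0), (0.8, None), (0.4, 1)]},
    {"n_nodules": 1, "detections": [(0.7, 0), (0.6, None), (0.3, None)]},
]
sensitivity, fps_per_scan = operating_point(scans, threshold=0.5)
```

Sweeping the threshold traces out a curve of sensitivity against false positives per scan; a figure such as "sensitivity at 2 false positives per scan" is one point read off that curve.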
