Zhenyang Ni

Self-Localized Collaborative Perception

Jun 18, 2024

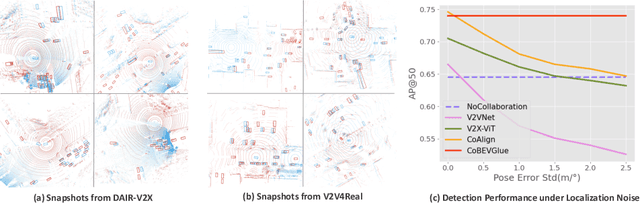

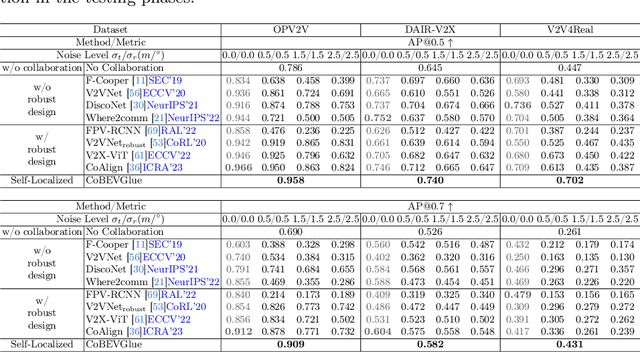

Abstract: Collaborative perception has garnered considerable attention due to its capacity to address several inherent challenges in single-agent perception, including occlusion and out-of-range issues. However, existing collaborative perception systems rely heavily on precise localization systems to establish a consistent spatial coordinate system between agents. This reliance makes them susceptible to large pose errors or malicious attacks, resulting in substantial reductions in perception performance. To address this, we propose $\mathtt{CoBEVGlue}$, a novel self-localized collaborative perception system, which achieves more holistic and robust collaboration without using an external localization system. The core of $\mathtt{CoBEVGlue}$ is a novel spatial alignment module, which provides the relative poses between agents by effectively matching co-visible objects across agents. We validate our method on both real-world and simulated datasets. The results show that i) $\mathtt{CoBEVGlue}$ achieves state-of-the-art detection performance under arbitrary localization noise and attacks; and ii) the spatial alignment module can seamlessly integrate with a majority of previous methods, enhancing their performance by an average of $57.7\%$. Code is available at https://github.com/VincentNi0107/CoBEVGlue
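To illustrate how matched co-visible objects can yield a relative pose, here is a minimal sketch, not the CoBEVGlue alignment module itself. It assumes the object matching has already been done and that each object is reduced to a 2D center in its agent's own frame. The function name `estimate_relative_pose_2d` and the closed-form least-squares fit are illustrative assumptions, not taken from the paper.

```python
import math


def estimate_relative_pose_2d(src, dst):
    """Least-squares 2D rigid transform that maps src points onto dst points.

    src, dst: equal-length lists of (x, y) centers of already-matched objects,
    expressed in the frames of two different agents (hypothetical input format).
    Returns (theta, tx, ty) such that dst ~= R(theta) @ src + (tx, ty).
    """
    n = len(src)
    if n < 2 or n != len(dst):
        raise ValueError("need at least two matched pairs of equal length")

    # Centroids of both point sets.
    sx = sum(p[0] for p in src) / n
    sy = sum(p[1] for p in src) / n
    dx = sum(p[0] for p in dst) / n
    dy = sum(p[1] for p in dst) / n

    # Accumulate dot and cross products of the centered pairs.
    dot = 0.0
    cross = 0.0
    for (ax, ay), (bx, by) in zip(src, dst):
        ax, ay = ax - sx, ay - sy
        bx, by = bx - dx, by - dy
        dot += ax * bx + ay * by
        cross += ax * by - ay * bx

    # The optimal rotation maximizes cos(theta) * dot + sin(theta) * cross.
    theta = math.atan2(cross, dot)
    c, s = math.cos(theta), math.sin(theta)

    # Translation aligns the rotated source centroid with the target centroid.
    tx = dx - (c * sx - s * sy)
    ty = dy - (s * sx + c * sy)
    return theta, tx, ty
```

With noise-free matches the fit recovers the true rotation and translation exactly. With noisy or partially wrong matches, a robust wrapper such as RANSAC would normally be added around it.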

Robust Collaborative Perception without External Localization and Clock Devices

May 05, 2024

Abstract: A consistent spatial-temporal coordination across multiple agents is fundamental for collaborative perception, which seeks to improve perception abilities through information exchange among agents. To achieve this spatial-temporal alignment, traditional methods depend on external devices to provide localization and clock signals. However, hardware-generated signals can be vulnerable to noise and potentially malicious attacks, jeopardizing the precision of spatial-temporal alignment. Rather than relying on external hardware, this work proposes a novel approach: aligning by recognizing the inherent geometric patterns within the perceptual data of various agents. Following this spirit, we propose a robust collaborative perception system that operates independently of external localization and clock devices. The key module of our system, *FreeAlign*, constructs a salient object graph for each agent based on its detected boxes and uses a graph neural network to identify common subgraphs between agents, leading to accurate relative pose and time. We validate *FreeAlign* on both real-world and simulated datasets. The results show that the *FreeAlign*-empowered robust collaborative perception system performs comparably to systems relying on precise localization and clock devices.

Fake It Till Make It: Federated Learning with Consensus-Oriented Generation

Dec 10, 2023

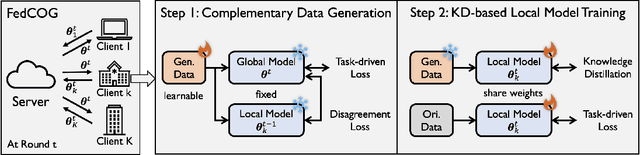

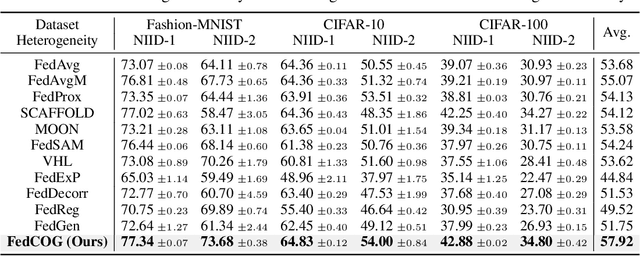

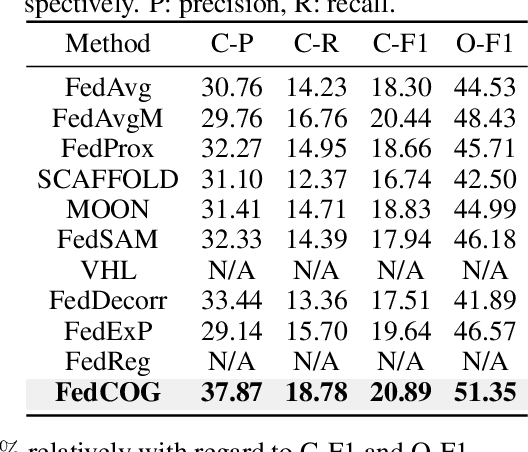

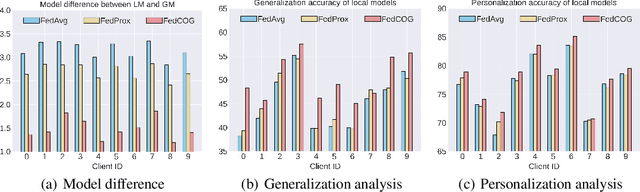

Abstract: In federated learning (FL), data heterogeneity is a key bottleneck that causes model divergence and limits performance. To address this, existing methods often regard data heterogeneity as an inherent property and propose to mitigate its adverse effects by correcting models. In this paper, we seek to break this inherent property by generating data to complement the original dataset, fundamentally reducing the level of heterogeneity. As a novel attempt from the perspective of data, we propose federated learning with consensus-oriented generation (FedCOG). FedCOG consists of two key components on the client side: complementary data generation, which generates data extracted from the shared global model to complement the original dataset, and knowledge-distillation-based model training, which distills knowledge from the global model to the local model based on the generated data to mitigate over-fitting to the original heterogeneous dataset. FedCOG has two critical advantages: 1) it can be a plug-and-play module to further improve the performance of most existing FL methods, and 2) it is naturally compatible with standard FL protocols such as Secure Aggregation since it makes no modification to the communication process. Extensive experiments on classical and real-world FL datasets show that FedCOG consistently outperforms state-of-the-art methods.
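The knowledge-distillation step can be pictured with a generic distillation loss. The sketch below is the standard temperature-softened KL divergence between a teacher (here, the global model) and a student (the local model), evaluated on one sample. The abstract does not specify FedCOG's exact loss, so the function names, the temperature value, and the use of KL divergence are all assumptions made for illustration.

```python
import math


def softmax(logits, temperature=1.0):
    """Numerically stable softmax over a list of logits."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits, teacher_logits, temperature=2.0):
    """KL(teacher || student) on temperature-softened class probabilities.

    Hypothetical helper: teacher_logits would come from the global model and
    student_logits from the local model, both on the same generated sample.
    """
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    eps = 1e-12  # guards against log(0) when a probability underflows
    return sum(
        pt * (math.log(max(pt, eps)) - math.log(max(ps, eps)))
        for pt, ps in zip(p_teacher, p_student)
    )
```

The loss is zero when the local model reproduces the global model's predictions on a generated sample and grows as the two diverge.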

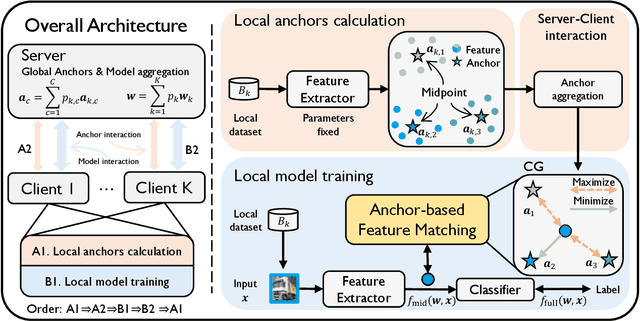

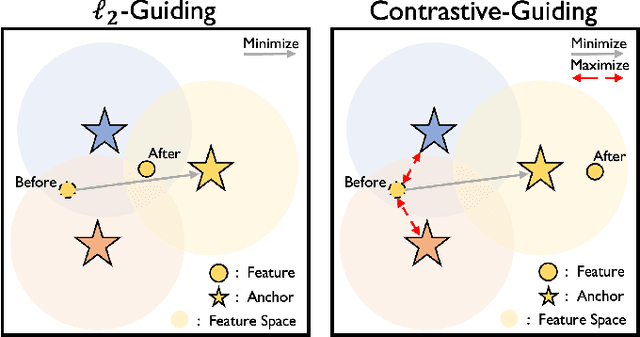

FedFM: Anchor-based Feature Matching for Data Heterogeneity in Federated Learning

Oct 14, 2022

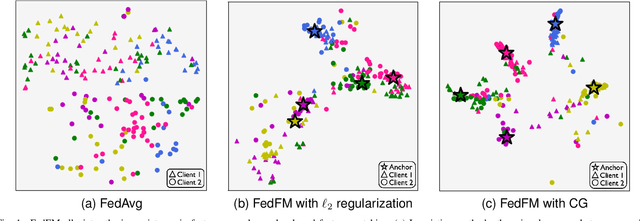

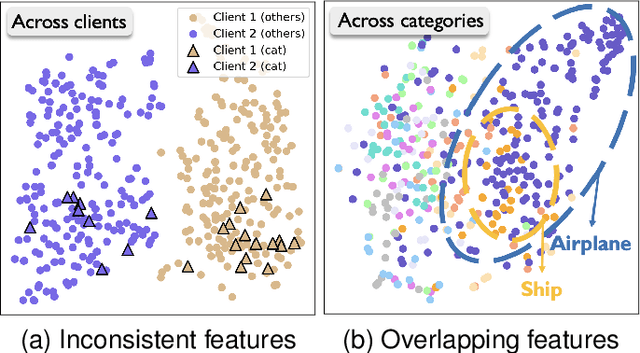

Abstract: One of the key challenges in federated learning (FL) is local data distribution heterogeneity across clients, which may cause inconsistent feature spaces across clients. To address this issue, we propose a novel method, FedFM, which guides each client's features to match shared category-wise anchors (landmarks in feature space). This method attempts to mitigate the negative effects of data heterogeneity in FL by aligning each client's feature space. In addition, we tackle the challenge of a varying objective function and provide a convergence guarantee for FedFM. To mitigate the phenomenon of overlapping feature spaces across categories and enhance the effectiveness of feature matching in FedFM, we further propose a more precise and effective feature matching loss called contrastive-guiding (CG), which guides each local feature to match the corresponding anchor while keeping away from non-corresponding anchors. Additionally, to achieve higher efficiency and flexibility, we propose a FedFM variant, called FedFM-Lite, where clients communicate with the server with less frequent synchronization and lower communication bandwidth costs. Through extensive experiments, we demonstrate that FedFM with CG outperforms several existing works in both quantitative and qualitative comparisons. FedFM-Lite can achieve better performance than state-of-the-art methods with five to ten times lower communication costs.
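The idea of pulling a feature toward its own anchor while pushing it away from the others can be sketched as a softmax cross-entropy over feature-to-anchor similarities. This is one plausible reading of the contrastive-guiding description, not the paper's stated formula: the dot-product similarity, the temperature, and the function name are assumptions.

```python
import math


def contrastive_guiding_loss(feature, label, anchors, temperature=0.5):
    """Cross-entropy over feature-to-anchor similarities for one sample.

    feature: list of floats, one local feature vector (hypothetical format).
    label:   index of the category the sample belongs to.
    anchors: list of anchor vectors, one per category, shared across clients.
    """
    # Scaled dot-product similarity between the feature and every anchor.
    sims = [
        sum(f * a for f, a in zip(feature, anchor)) / temperature
        for anchor in anchors
    ]
    # Stable log-sum-exp for the softmax normalizer.
    m = max(sims)
    log_norm = m + math.log(sum(math.exp(s - m) for s in sims))
    # Low when the matching anchor is the most similar one, high otherwise.
    return log_norm - sims[label]
```

A feature aligned with its own anchor gets a small loss, and the same feature scored against the wrong category gets a large one, which is the matching-plus-repulsion behavior the abstract describes.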

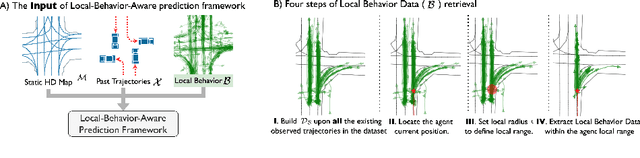

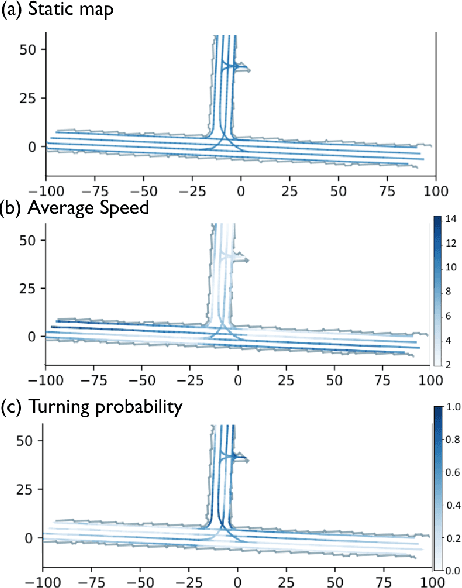

Aware of the History: Trajectory Forecasting with the Local Behavior Data

Jul 20, 2022

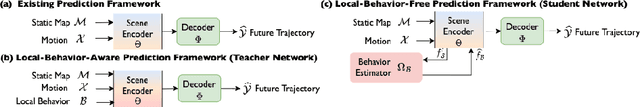

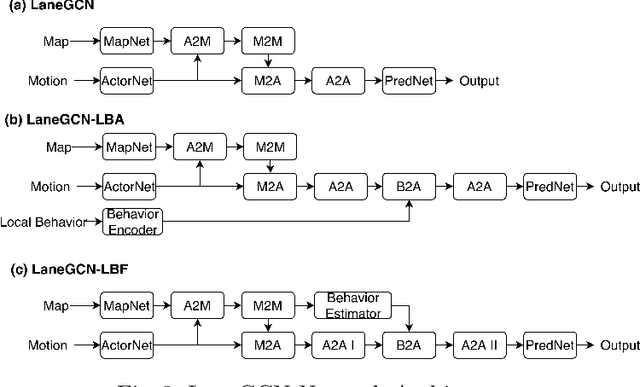

Abstract: The historical trajectories previously passing through a location may help infer the future trajectory of an agent currently at this location. Despite great improvements in trajectory forecasting with the guidance of high-definition maps, only a few works have explored such local historical information. In this work, we re-introduce this information as a new type of input data for trajectory forecasting systems: the local behavior data, which we conceptualize as a collection of location-specific historical trajectories. Local behavior data helps the systems emphasize the prediction locality and better understand the impact of static map objects on moving agents. We propose a novel local-behavior-aware (LBA) prediction framework that improves forecasting accuracy by fusing information from observed trajectories, HD maps, and local behavior data. For cases where such historical data is insufficient or unavailable, we employ a local-behavior-free (LBF) prediction framework, which adopts a knowledge-distillation-based architecture to infer the impact of missing data. Extensive experiments demonstrate that upgrading existing methods with these two frameworks significantly improves their performance. In particular, the LBA framework boosts the SOTA methods' performance on the nuScenes dataset by at least 14% for the K=1 metrics.

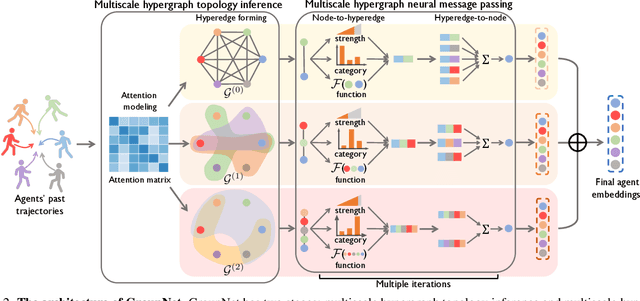

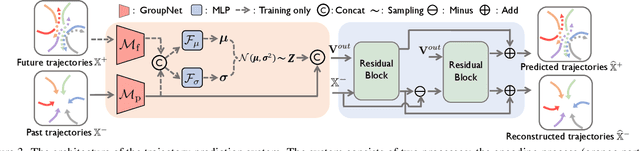

GroupNet: Multiscale Hypergraph Neural Networks for Trajectory Prediction with Relational Reasoning

Apr 20, 2022

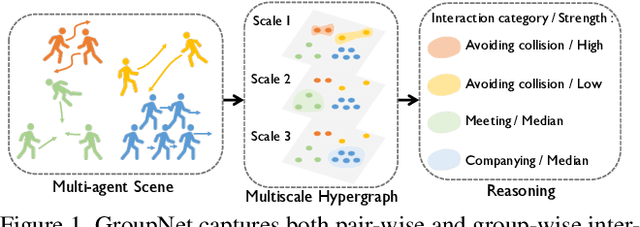

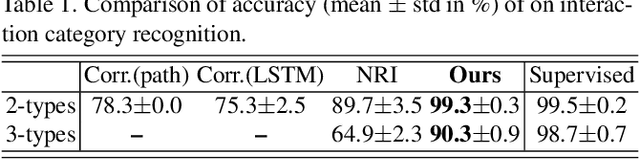

Abstract: Demystifying the interactions among multiple agents from their past trajectories is fundamental to precise and interpretable trajectory prediction. However, previous works only consider pair-wise interactions with limited relational reasoning. To promote more comprehensive interaction modeling for relational reasoning, we propose GroupNet, a multiscale hypergraph neural network, which is novel in terms of both interaction capturing and representation learning. From the aspect of interaction capturing, we propose a trainable multiscale hypergraph to capture both pair-wise and group-wise interactions at multiple group sizes. From the aspect of interaction representation learning, we propose a three-element format that can be learnt end-to-end and explicitly reasons about relational factors, including interaction strength and category. We apply GroupNet to both a CVAE-based prediction system and previous state-of-the-art prediction systems for predicting socially plausible trajectories with relational reasoning. To validate the ability of relational reasoning, we experiment with synthetic physics simulations to reflect the ability to capture group behaviors and to reason about interaction strength and interaction category. To validate the effectiveness of prediction, we conduct extensive experiments on three real-world trajectory prediction datasets, including NBA, SDD and ETH-UCY, and we show that with GroupNet, the CVAE-based prediction system outperforms state-of-the-art methods. We also show that adding GroupNet further improves the performance of previous state-of-the-art prediction systems.
