FedFM: Anchor-based Feature Matching for Data Heterogeneity in Federated Learning


Oct 14, 2022

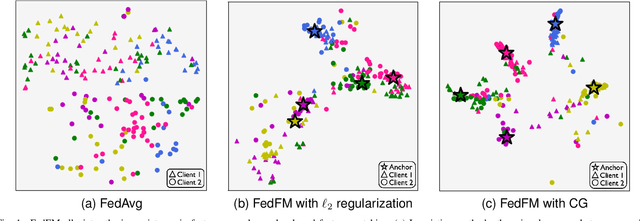

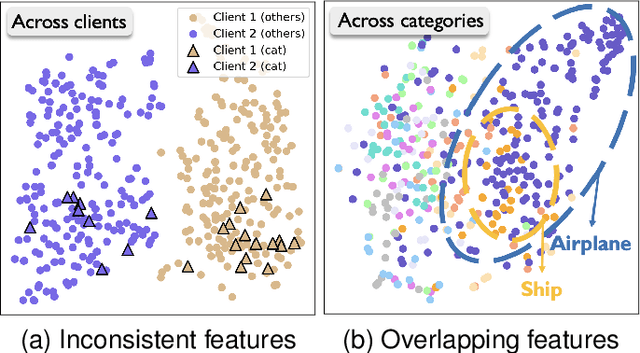

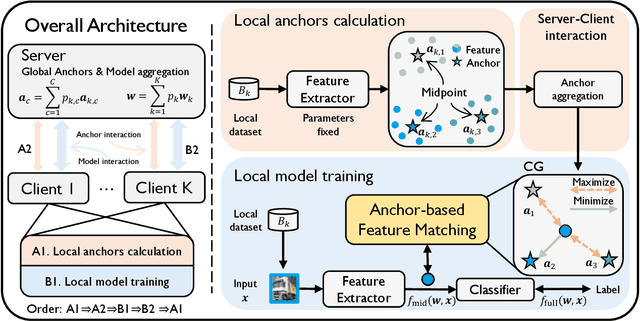

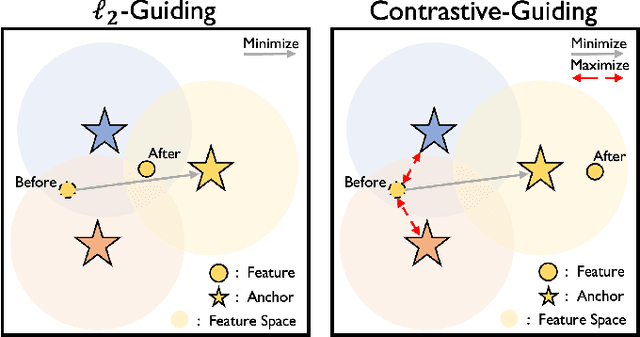

One of the key challenges in federated learning (FL) is the heterogeneity of local data distributions across clients, which can lead to inconsistent feature spaces across clients. To address this issue, we propose a novel method, FedFM, which guides each client's features to match shared category-wise anchors (landmarks in feature space). By aligning each client's feature space in this way, the method aims to mitigate the negative effects of data heterogeneity in FL. We also tackle the challenge of a varying objective function and provide a convergence guarantee for FedFM. To reduce the overlap between the feature spaces of different categories and make feature matching more effective, we further propose a more precise feature matching loss called contrastive-guiding (CG), which guides each local feature to match its corresponding anchor while keeping it away from non-corresponding anchors. Additionally, to achieve higher efficiency and flexibility, we propose a variant called FedFM-Lite, in which clients communicate with the server using fewer synchronization rounds and less communication bandwidth. Through extensive experiments, we demonstrate that FedFM with CG outperforms several existing methods in both quantitative and qualitative comparisons. FedFM-Lite achieves better performance than state-of-the-art methods at five to ten times lower communication cost.
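The abstract describes anchors and the contrastive-guiding loss only in words, so the following is a minimal NumPy sketch of one way the idea could look, not the authors' implementation. It assumes that an anchor is the per-category mean feature, that the server combines client anchors with a sample-count-weighted average, and that the CG loss is a softmax cross-entropy over cosine similarities between a feature and all anchors. The function names, the cosine similarity, and the `temperature` parameter are all hypothetical choices made for illustration.

```python
import numpy as np


def class_anchors(features, labels, num_classes):
    """Per-category mean feature on one client (assumed anchor definition)."""
    anchors = np.zeros((num_classes, features.shape[1]))
    counts = np.zeros(num_classes)
    for c in range(num_classes):
        mask = labels == c
        counts[c] = mask.sum()
        if counts[c] > 0:
            anchors[c] = features[mask].mean(axis=0)
    return anchors, counts


def aggregate_anchors(client_anchors, client_counts):
    """Sample-count-weighted average of client anchors (assumed server rule)."""
    a = np.stack(client_anchors)           # shape (clients, classes, dim)
    n = np.stack(client_counts)            # shape (clients, classes)
    weighted = (a * n[:, :, None]).sum(axis=0)
    total = np.maximum(n.sum(axis=0), 1)   # avoid division by zero
    return weighted / total[:, None]


def contrastive_guiding_loss(features, labels, anchors, temperature=0.5):
    """Pull each feature toward its own anchor and away from the others.

    Sketch only: cross-entropy over temperature-scaled cosine similarities.
    """
    eps = 1e-12
    f = features / (np.linalg.norm(features, axis=1, keepdims=True) + eps)
    a = anchors / (np.linalg.norm(anchors, axis=1, keepdims=True) + eps)
    logits = f @ a.T / temperature
    logits = logits - logits.max(axis=1, keepdims=True)  # numerical stability
    log_prob = logits - np.log(np.exp(logits).sum(axis=1, keepdims=True))
    return -log_prob[np.arange(len(labels)), labels].mean()
```

In this sketch a client would add `contrastive_guiding_loss` to its usual classification loss during local training, then send its anchors and per-category counts to the server for `aggregate_anchors`. Features that already sit on their own anchor give a lower loss than features that sit on another category's anchor, which is the behaviour the abstract attributes to CG.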
