Zhenpeng He

Hierarchical Topometric Representation of 3D Robotic Maps

Nov 24, 2021

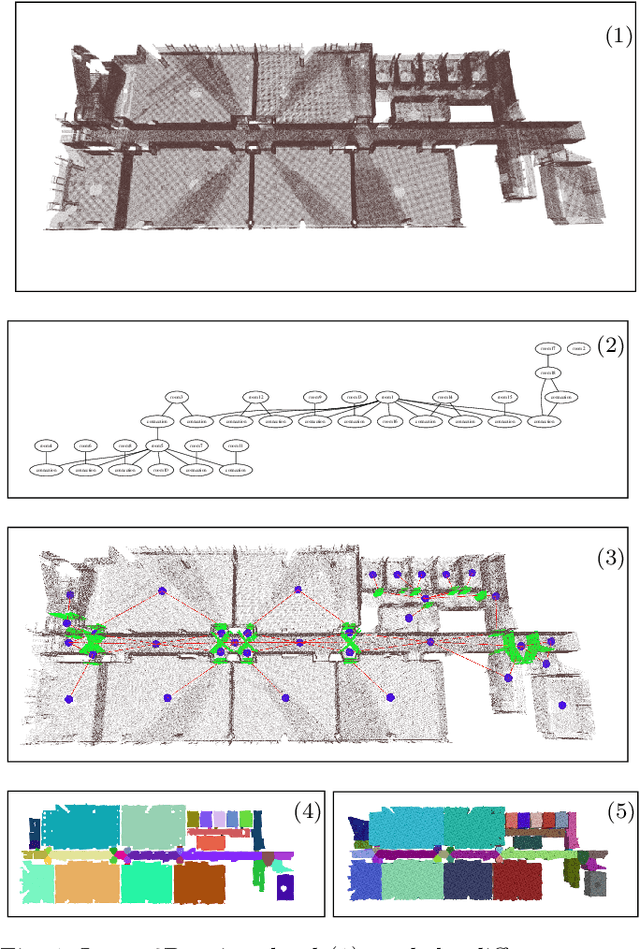

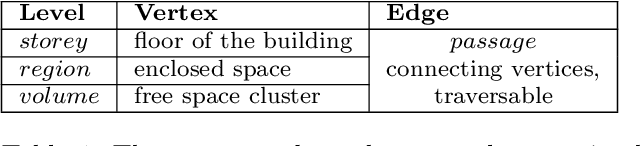

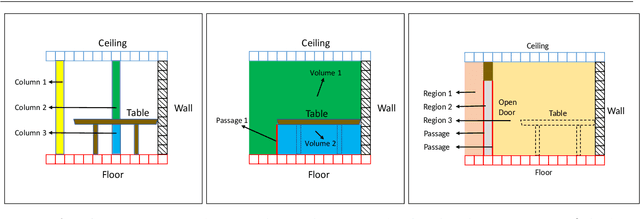

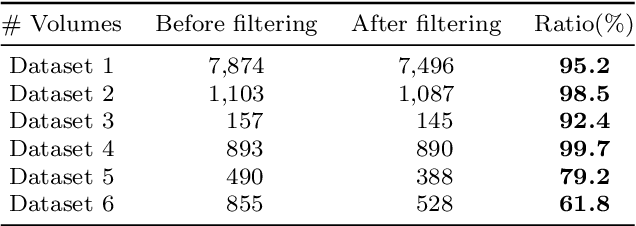

Abstract: In this paper, we propose a method for generating a hierarchical, volumetric topological map from 3D point clouds. Our map has three basic hierarchical levels: *storey*, *region*, and *volume*. The advantages of our method are reflected in both input and output. In terms of input, we accept multi-storey point clouds and building structures with sloping roofs or ceilings. In terms of output, we can generate results with metric information of different dimensionality, suitable for different robotics applications. The algorithm builds the volumetric representation by generating *volumes* from a 3D voxel occupancy map. We then add *passages* (connections between *volumes*), combine small *volumes* into a larger *region*, and use a 2D segmentation method for better topological representation. We evaluate our method on several freely available datasets. The experiments highlight the advantages of our approach.
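As a rough illustration of the storey, region, volume hierarchy with passages between volumes, the following Python sketch models the map as nested containers. All class, field, and method names here are hypothetical and are not taken from the paper; the abstract does not specify the actual data structures.

```python
from dataclasses import dataclass, field
from typing import List, Set, Tuple


@dataclass
class Volume:
    volume_id: int      # hypothetical identifier
    voxel_count: int    # number of voxels assigned to this volume


@dataclass
class Region:
    region_id: int
    volumes: List[Volume] = field(default_factory=list)


@dataclass
class Storey:
    level: int
    regions: List[Region] = field(default_factory=list)


@dataclass
class TopometricMap:
    storeys: List[Storey] = field(default_factory=list)
    # Each passage connects two volumes, stored as an ordered id pair.
    passages: Set[Tuple[int, int]] = field(default_factory=set)

    def add_passage(self, a: int, b: int) -> None:
        self.passages.add((min(a, b), max(a, b)))

    def neighbours(self, volume_id: int) -> List[int]:
        """Return the ids of volumes connected to volume_id by a passage."""
        result = []
        for a, b in sorted(self.passages):
            if a == volume_id:
                result.append(b)
            elif b == volume_id:
                result.append(a)
        return result


# Toy example: one storey, one region made of two small volumes,
# plus a third volume in a second region.
room = Region(region_id=0, volumes=[Volume(0, 120), Volume(1, 80)])
corridor = Region(region_id=1, volumes=[Volume(2, 300)])
toy_map = TopometricMap(storeys=[Storey(level=0, regions=[room, corridor])])
toy_map.add_passage(0, 1)
toy_map.add_passage(2, 1)
```

Storing passages separately from the containment hierarchy keeps the topological graph independent of how volumes are later merged into regions.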


TTM: Terrain Traversability Mapping for Autonomous Excavator Navigation in Unstructured Environments

Sep 13, 2021

Abstract: We present Terrain Traversability Mapping (TTM), a real-time mapping approach for terrain traversability estimation and path planning for autonomous excavators in unstructured environments. We propose an efficient learning-based geometric method to extract terrain features from RGB images and 3D point clouds and incorporate them into a global map for planning and navigation for autonomous excavation. Our method uses the physical characteristics of the excavator, including the maximum climbing degree and other machine specifications, to determine the traversable area. Our method can adapt to changing environments and update the terrain information in real time. Moreover, we prepare a novel dataset, the Autonomous Excavator Terrain (AET) dataset, consisting of RGB images from construction sites with seven categories according to navigability. We integrate our mapping approach with planning and control modules in an autonomous excavator navigation system, which outperforms the previous method by 49.3% in terms of success rate based on existing planning schemes. With our mapping, the excavator can navigate through unstructured environments consisting of deep pits, steep hills, rock piles, and other complex terrain features.
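To make the role of the maximum climbing degree concrete, here is a minimal, purely geometric sketch in Python that marks grid cells as non-traversable when the slope to a neighbouring cell exceeds a climbing limit. It is an assumption-laden illustration only: the function name, the height-grid input, and the 4-neighbour slope test are hypothetical, and it does not reproduce the learning-based components of TTM.

```python
import math


def traversable_mask(heights, cell_size_m, max_climb_deg):
    """Return a boolean grid; False where a neighbouring height step is too steep.

    heights: 2D list of terrain heights in metres (hypothetical input format).
    cell_size_m: horizontal distance between adjacent cells in metres.
    max_climb_deg: assumed maximum climbing angle of the machine in degrees.
    """
    rows, cols = len(heights), len(heights[0])
    limit = math.tan(math.radians(max_climb_deg))  # max rise over run
    mask = [[True] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            # Compare with the right and lower neighbour so each edge is checked once.
            for dr, dc in ((0, 1), (1, 0)):
                nr, nc = r + dr, c + dc
                if nr < rows and nc < cols:
                    slope = abs(heights[nr][nc] - heights[r][c]) / cell_size_m
                    if slope > limit:
                        mask[r][c] = False
                        mask[nr][nc] = False
    return mask


# Toy height grid with a steep mound in the lower-right corner.
grid = [
    [0.0, 0.1, 0.2],
    [0.0, 0.1, 2.0],
]
mask = traversable_mask(grid, cell_size_m=1.0, max_climb_deg=30.0)
```

In this toy grid, the cells adjacent to the 2.0 m step are flagged, while the gently sloping cells on the left remain traversable.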

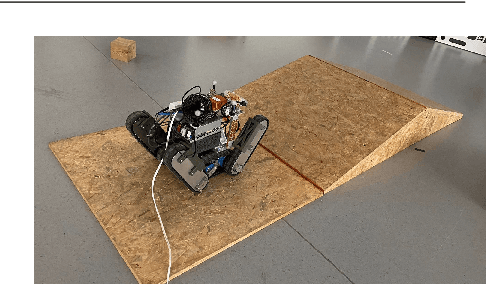

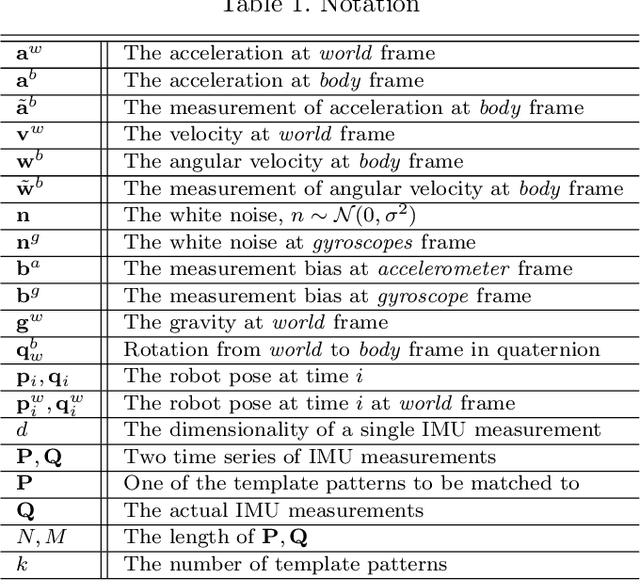

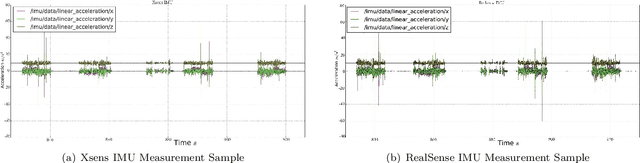

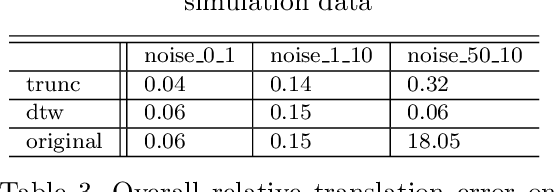

Improved Visual-Inertial Localization for Low-cost Rescue Robots

Nov 17, 2020

Abstract: This paper improves visual-inertial systems to boost the localization accuracy of low-cost rescue robots. When robots traverse rugged terrain, pose estimation suffers from large noise in the inertial sensor measurements caused by ground contact forces, especially for low-cost sensors. Therefore, we propose *Threshold*-based and *Dynamic Time Warping*-based methods to detect abnormal measurements and mitigate such faults. The two methods are embedded into the popular VINS-Mono system to evaluate their performance. Experiments on simulated and real robot data show that both methods increase the pose estimation accuracy. Moreover, the *Threshold*-based method performs better when the noise is small, while the *Dynamic Time Warping*-based one shows greater potential under large noise.
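The two building blocks named in the abstract can be sketched generically in Python: a magnitude threshold that flags suspicious samples, and the classic dynamic time warping (DTW) distance between two 1D sequences. How the paper applies them inside VINS-Mono is not described in the abstract, so the function names, the scalar input format, and the threshold value below are assumptions for illustration.

```python
def flag_abnormal(samples, threshold):
    """Flag samples whose absolute value exceeds a fixed threshold."""
    return [abs(s) > threshold for s in samples]


def dtw_distance(a, b):
    """Classic dynamic time warping distance between two 1D sequences.

    Uses absolute difference as the local cost and the standard
    three-way recurrence (insertion, deletion, match).
    """
    n, m = len(a), len(b)
    inf = float("inf")
    cost = [[inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            d = abs(a[i - 1] - b[j - 1])
            cost[i][j] = d + min(cost[i - 1][j],      # a[i-1] repeats a match with b[j-1]
                                 cost[i][j - 1],      # b[j-1] repeats a match with a[i-1]
                                 cost[i - 1][j - 1])  # both sequences advance
    return cost[n][m]


# Toy accelerometer-like readings with one spike (values are made up).
readings = [0.1, -9.0, 0.2]
flags = flag_abnormal(readings, threshold=5.0)

# A time-stretched copy of a signal has zero DTW distance to the original.
stretched = dtw_distance([1.0, 2.0, 3.0], [1.0, 2.0, 2.0, 3.0])
```

A fixed threshold is cheap but sensitive to the chosen value, whereas DTW compares the shape of a measurement window against a reference and tolerates timing differences.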

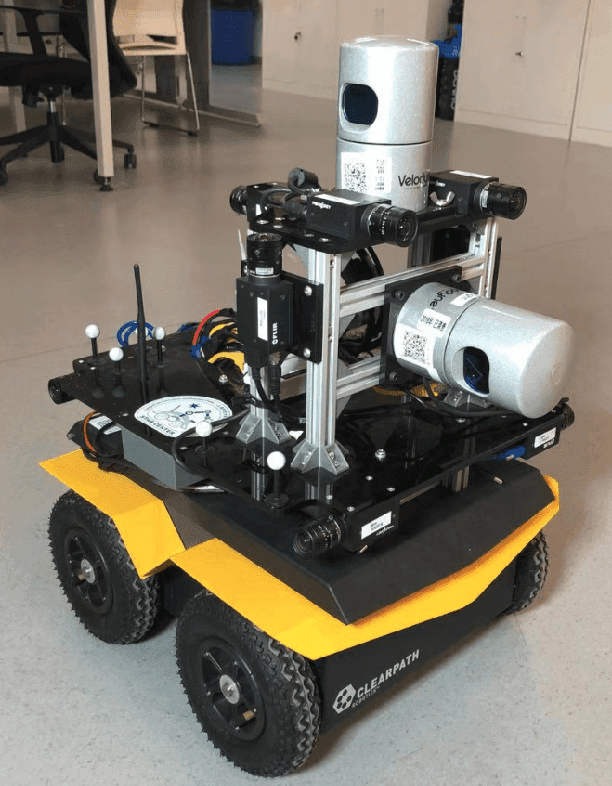

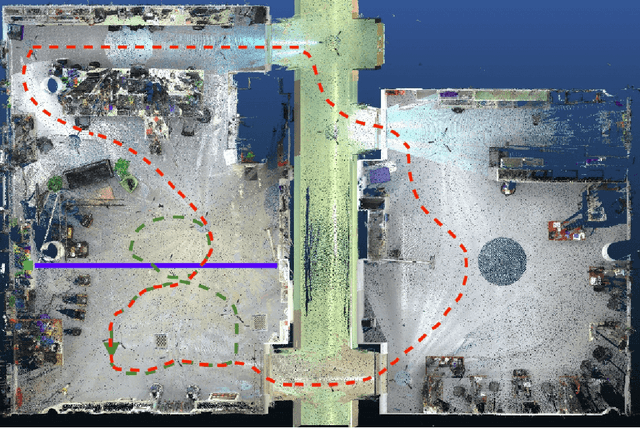

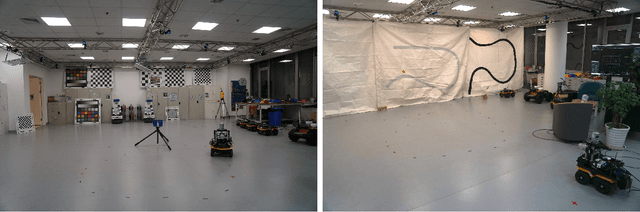

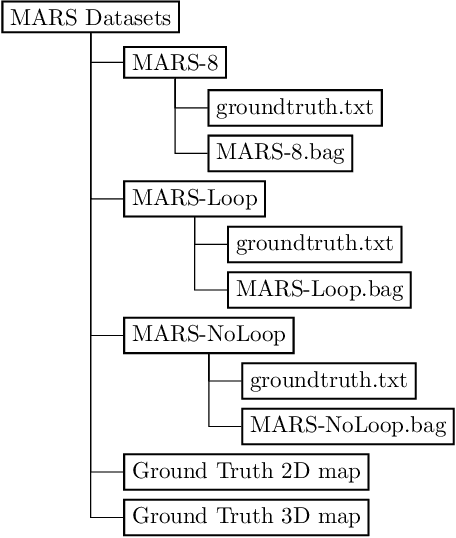

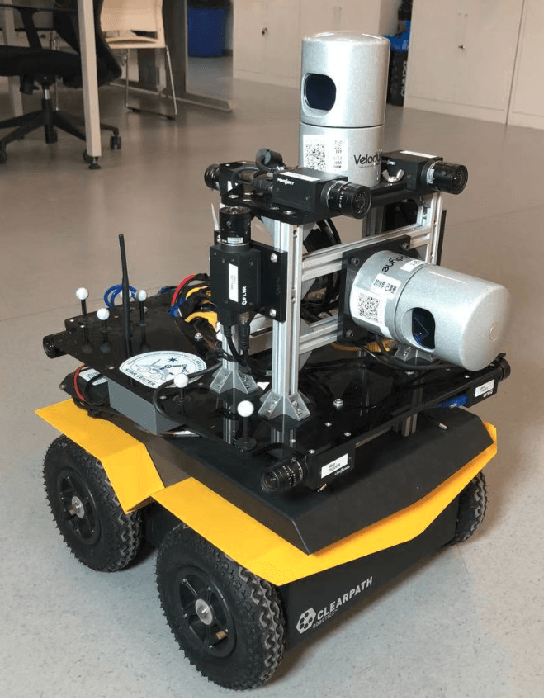

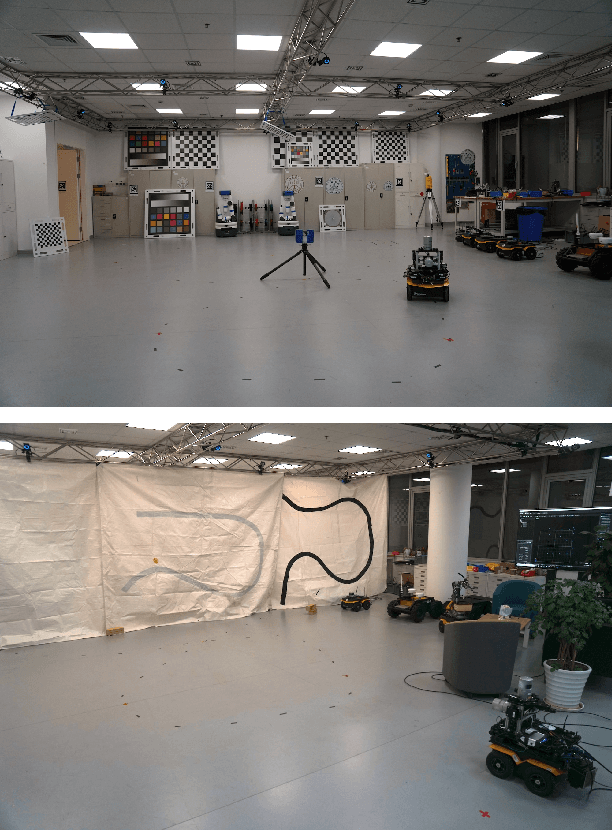

Advanced Mapping Robot and High-Resolution Dataset

Jul 23, 2020

Abstract: This paper presents a fully hardware-synchronized mapping robot with support for a hardware-synchronized external tracking system, for highly precise timing and localization. Nine high-resolution cameras and two 32-beam 3D Lidars are used along with a professional, static 3D scanner for ground truth map collection. With all the sensors calibrated on the mapping robot, three datasets are collected to evaluate the performance of mapping algorithms within a room and between rooms. Based on these datasets we generate maps and trajectory data, which are then fed into evaluation algorithms. We provide the datasets for download, and the mapping and evaluation procedures are designed to be easily reproducible for maximum comparability. We have also conducted a survey of available robotics-related datasets and compiled a large table of those datasets and a number of their properties.

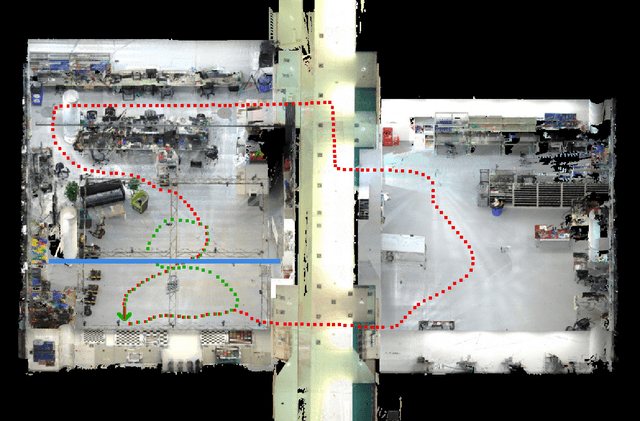

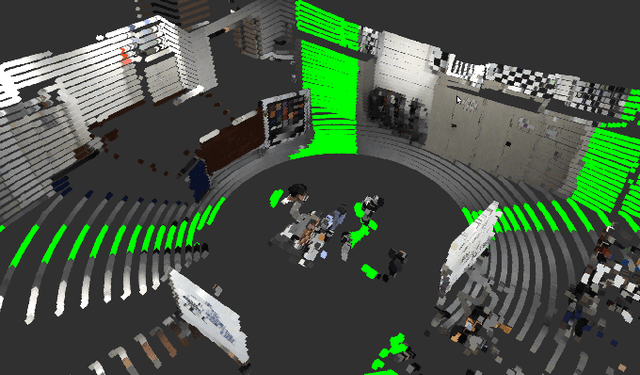

Furniture Free Mapping using 3D Lidars

Nov 15, 2019

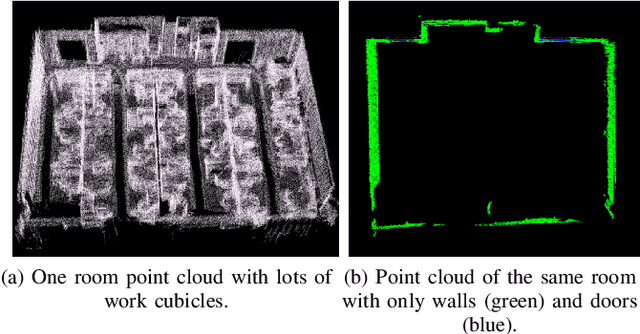

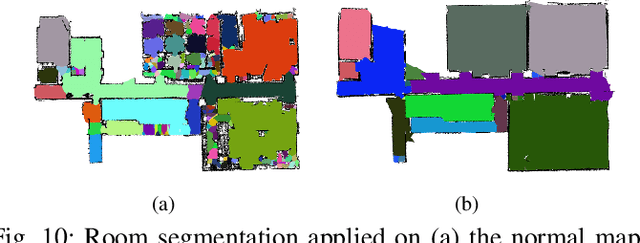

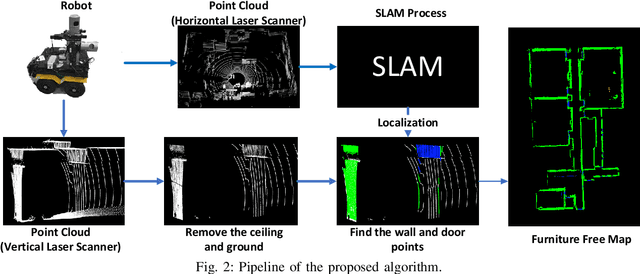

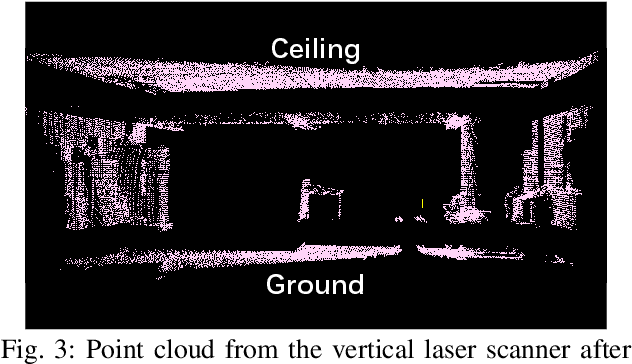

Abstract: Mobile robots depend on maps for localization, planning, and other applications. In indoor scenarios, there is often a lot of clutter present, such as chairs, tables, other furniture, or plants. While mapping this clutter is important for certain applications, for example navigation, maps that represent just the immobile parts of the environment, i.e. walls, are needed for other applications, like room segmentation or long-term localization. In the literature, approaches can be found that use a complete point cloud to remove the furniture in the room and generate a furniture-free map. In contrast, we propose a Simultaneous Localization And Mapping (SLAM)-based mobile laser scanning solution. The robot uses an orthogonal pair of Lidars. The horizontal scanner is used to estimate the robot position, whereas the vertical scanner generates the furniture-free map. There are three steps in our method: point cloud rearrangement, wall plane detection, and semantic labeling. In the experiment, we evaluate the efficiency of removing furniture in a typical indoor environment. We achieve 99.60% precision in keeping the wall in the 3D result, which shows that our algorithm can remove most of the furniture in the environment. Furthermore, we introduce the application of 2D furniture-free mapping for room segmentation.

Towards Generation and Evaluation of Comprehensive Mapping Robot Datasets

May 23, 2019

Abstract: This paper presents a fully hardware-synchronized mapping robot with support for a hardware-synchronized external tracking system, for highly precise timing and localization. We also employ a professional, static 3D scanner for ground truth map collection. Three datasets are generated to evaluate the performance of mapping algorithms within a room and between rooms. Based on these datasets we generate maps and trajectory data, which are then fed into evaluation algorithms. The mapping and evaluation procedures are designed to be easily reproducible for maximum comparability. Finally, we draw several conclusions about the tested SLAM algorithms.
