Qingwen Xu

Improved Visual-Inertial Localization for Low-cost Rescue Robots

Nov 17, 2020

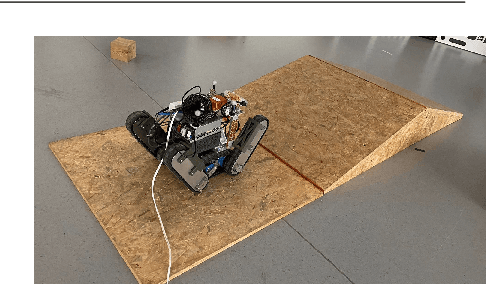

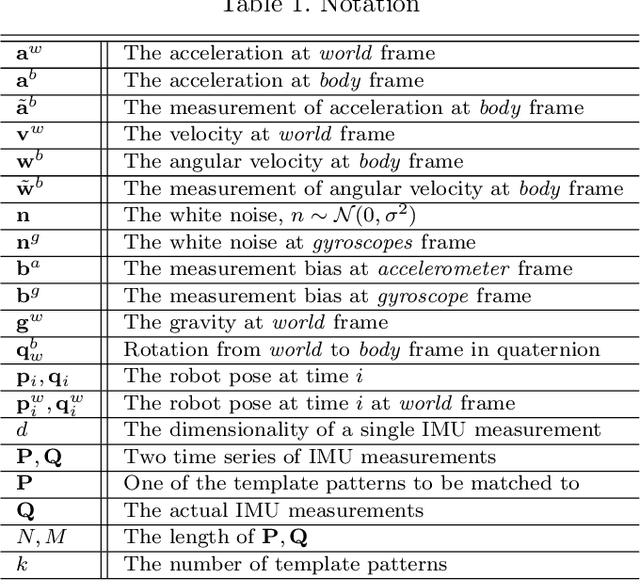

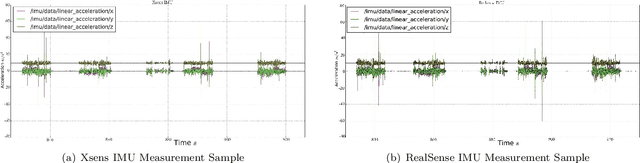

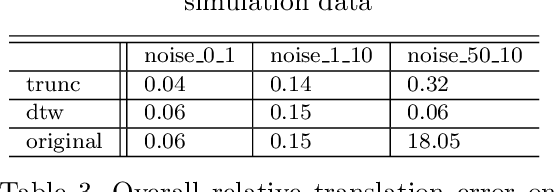

Abstract: This paper improves visual-inertial systems to boost the localization accuracy of low-cost rescue robots. When robots traverse rugged terrain, ground contact forces introduce large noise into the inertial sensor measurements, which degrades pose estimation, especially with low-cost sensors. We therefore propose Threshold-based and Dynamic Time Warping (DTW)-based methods to detect abnormal measurements and mitigate such faults. Both methods are embedded into the popular VINS-Mono system to evaluate their performance. Experiments on simulated and real robot data show that both methods increase pose estimation accuracy. Moreover, the Threshold-based method performs better when the noise is small, while the DTW-based method shows greater potential under large noise.
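
The abstract does not spell out the detection rules. As a rough illustration only, the two ideas can be sketched in Python: a threshold test that flags accelerometer samples whose magnitude deviates too far from gravity, and a classic dynamic time warping (DTW) distance that compares a window of measurements with a reference window. The function names, the 9.81 m/s² reference and the thresholds are hypothetical assumptions rather than values from the paper, and the integration into VINS-Mono is not shown.

```python
import math
from typing import List, Sequence

GRAVITY = 9.81  # assumed reference accelerometer norm in m/s^2 (hypothetical choice)


def threshold_flags(acc_norms: Sequence[float], max_dev: float) -> List[bool]:
    """Flag samples whose accelerometer norm deviates from GRAVITY by more than max_dev."""
    return [abs(a - GRAVITY) > max_dev for a in acc_norms]


def dtw_distance(x: Sequence[float], y: Sequence[float]) -> float:
    """Classic O(len(x) * len(y)) DTW distance with absolute-difference cost."""
    n, m = len(x), len(y)
    # cost[i][j] = minimal accumulated cost of aligning x[:i] with y[:j]
    cost = [[math.inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            d = abs(x[i - 1] - y[j - 1])
            cost[i][j] = d + min(cost[i - 1][j],      # advance in x only
                                 cost[i][j - 1],      # advance in y only
                                 cost[i - 1][j - 1])  # advance in both
    return cost[n][m]


def dtw_is_abnormal(window: Sequence[float], reference: Sequence[float],
                    max_dist: float) -> bool:
    """Hypothetical rule: a window is abnormal if its DTW distance to the reference exceeds max_dist."""
    return dtw_distance(window, reference) > max_dist


if __name__ == "__main__":
    smooth = [9.8, 9.9, 9.8, 9.7, 9.8]
    bumpy = [9.8, 14.5, 5.2, 9.7, 9.8]  # simulated ground-contact spike
    print(threshold_flags(bumpy, max_dev=2.0))          # [False, True, True, False, False]
    print(dtw_distance(smooth, smooth))                  # 0.0
    print(dtw_is_abnormal(bumpy, smooth, max_dist=3.0))  # True
```

DTW tolerates small timing differences between the window and the reference (a repeated sample costs nothing), whereas the per-sample threshold reacts only to amplitude, which is one plausible reason the two behave differently at different noise levels.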

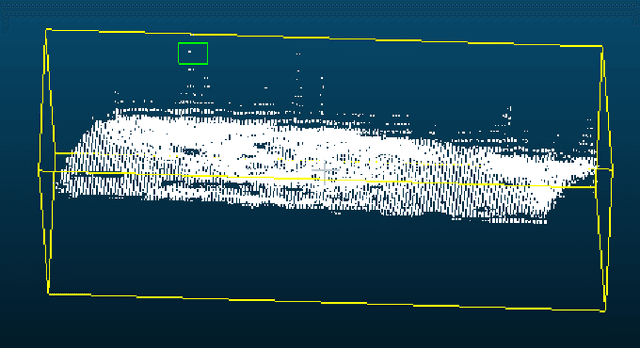

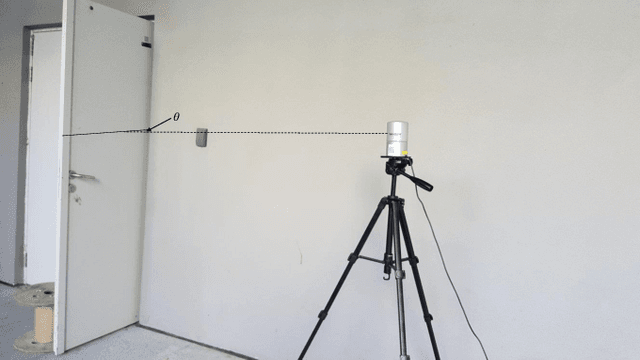

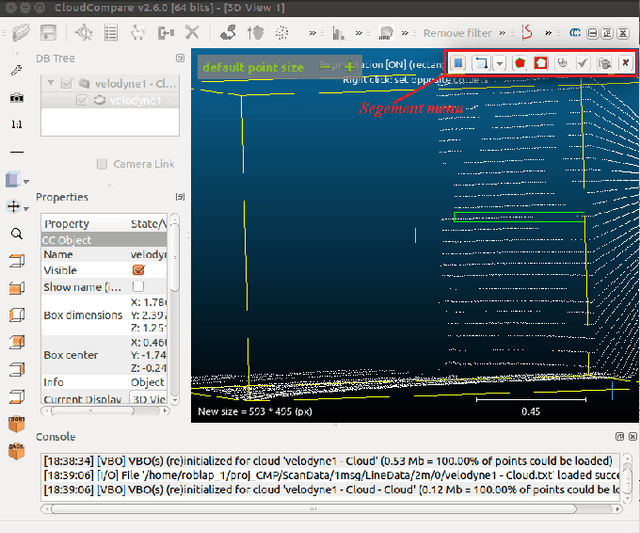

Comparison and Evaluation of 2D and 3D Range Sensors

Dec 03, 2019

Abstract: For mobile robots, range sensors are important for perceiving the environment. Sensors that can measure in a 3D volume are especially significant for outdoor robotics, because outdoor environments are often highly unstructured. The quality of the data gathered by these sensors influences every algorithm that relies on it. This paper therefore measures the precision of several 2D and 2.5D sensors at different ranges and incidence angles. The results of all tests are presented and analyzed.
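
The abstract does not define precision numerically. One common choice, assumed here, is the sample standard deviation of repeated range readings at a fixed nominal range and incidence angle, reported next to the mean error. A minimal Python sketch that groups readings by test condition (the tuple layout and function name are hypothetical):

```python
import statistics
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple

# One reading: (nominal range in m, incidence angle in degrees, measured range in m)
Reading = Tuple[float, float, float]


def precision_table(readings: Iterable[Reading]) -> Dict[Tuple[float, float], Tuple[float, float]]:
    """Return {(nominal range, angle): (mean error, sample std of error)} per test condition."""
    groups: Dict[Tuple[float, float], List[float]] = defaultdict(list)
    for nominal, angle, measured in readings:
        groups[(nominal, angle)].append(measured - nominal)
    table = {}
    for key, errors in groups.items():
        # statistics.stdev needs at least two readings per condition
        table[key] = (statistics.mean(errors), statistics.stdev(errors))
    return table


if __name__ == "__main__":
    data = [(1.0, 0.0, 1.01), (1.0, 0.0, 0.99), (1.0, 0.0, 1.00),
            (4.0, 45.0, 4.05), (4.0, 45.0, 3.95)]
    for condition, (bias, spread) in sorted(precision_table(data).items()):
        print(condition, round(bias, 4), round(spread, 4))
```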

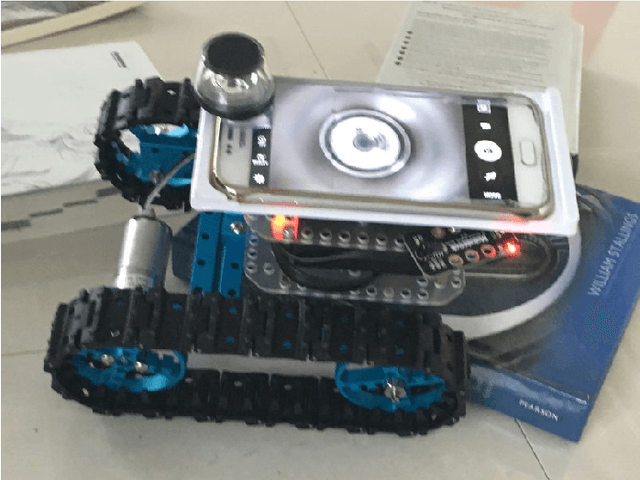

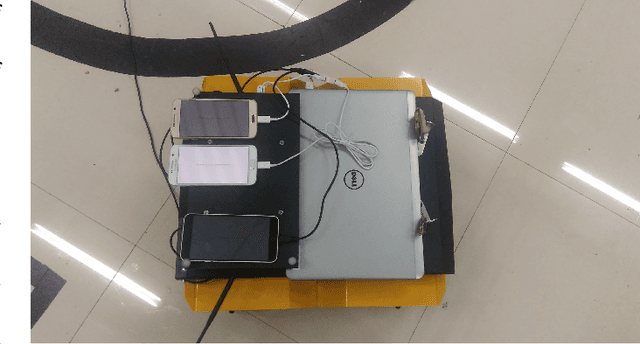

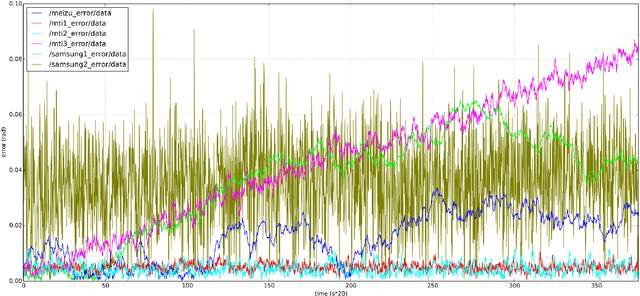

Evaluation of Smartphone IMUs for Small Mobile Search and Rescue Robots

Dec 03, 2019

Abstract: Small mobile robots are an important class of search and rescue robots. Integrating all required components into such small robots is a difficult engineering task. Industry has already made smartphones small, lightweight and cheap, so they are excellent candidates for the main controller of such robots. In this paper we outline how ROS can be used on Android devices and then evaluate one sensor that is very important for mobile robots: the Inertial Measurement Unit (IMU). Experiments are performed under static and dynamic conditions to measure the error of the IMUs of three smartphones and three professional IMUs. The experiments use a tracking system and an autonomous mobile robot.
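
Under static conditions the true angular rate is zero, so one simple way to summarise gyroscope error, assumed here rather than taken from the paper, is the mean reading (bias), the standard deviation around it (noise), and the heading drift obtained by integrating the readings. A minimal sketch for one axis; the function name and the rectangular integration are illustrative choices:

```python
import statistics
from typing import Sequence, Tuple


def static_gyro_stats(rates: Sequence[float], dt: float) -> Tuple[float, float, float]:
    """For one gyro axis recorded at rest (true rate 0 rad/s), sampled every dt seconds,
    return (bias in rad/s, noise std in rad/s, integrated heading drift in rad)."""
    bias = statistics.mean(rates)        # any nonzero mean at rest is bias
    noise = statistics.stdev(rates)      # spread of readings around the bias
    drift = sum(r * dt for r in rates)   # rectangular integration of the rate
    return bias, noise, drift


if __name__ == "__main__":
    b, n, d = static_gyro_stats([0.01, 0.03, 0.02, 0.02], dt=0.5)
    print(f"bias={b:.4f} rad/s  noise={n:.4f} rad/s  drift={d:.4f} rad")
```

Under dynamic conditions the same error terms would instead be computed against reference data such as the tracking system mentioned in the abstract.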

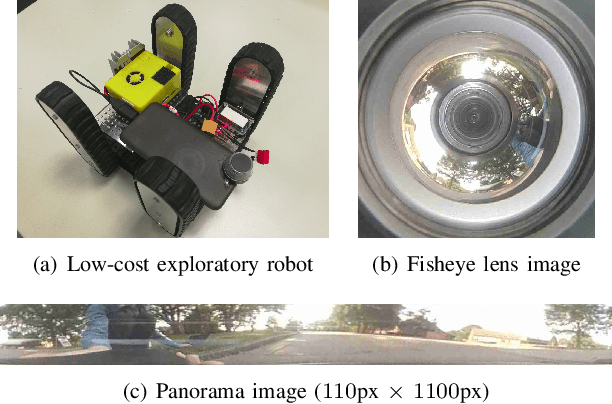

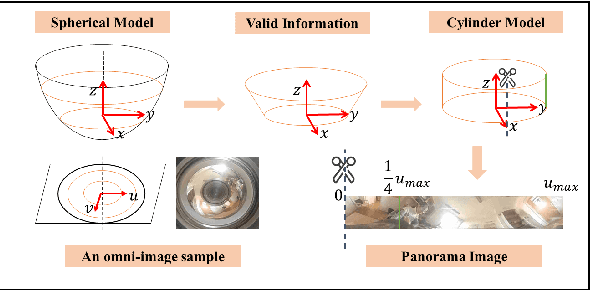

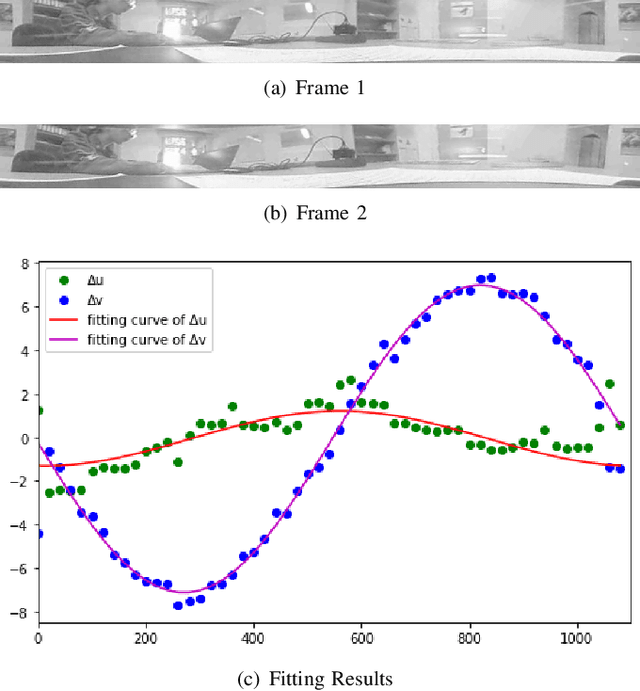

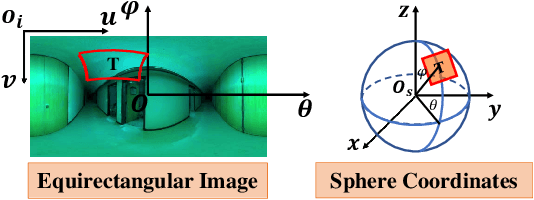

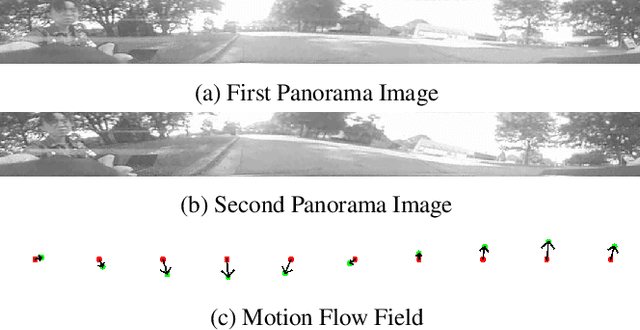

Pose Estimation for Omni-directional Cameras using Sinusoid Fitting

Oct 03, 2019

Abstract: We propose a novel pose estimation method for omni-directional cameras in geometric vision. Based on the regularity of pixel movement after a camera pose change, we formulate and prove a sinusoidal relationship between pixel movement and camera motion. To find the motion of pixels, we use the improved Fourier-Mellin invariant (iFMI) algorithm, which has been shown to be more accurate and robust than feature-based methods. While iFMI works only on pinhole-model images and estimates 4 parameters (x, y, yaw, scaling), our method works on panoramic images and estimates the full 6-DoF 3D transform, up to an unknown scale factor. To do so, we fit the pixel motion in the panoramic images, as determined by iFMI, to two sinusoidal functions. The offsets, amplitudes and phase shifts of the two functions then represent the 3D rotation and translation of the camera between the two images. Our experiments on 3D rotation show that our algorithm outperforms feature-based methods in accuracy and robustness. We leave the more complex 3D translation experiments for future work.
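
The abstract does not give the mapping from offsets, amplitudes and phase shifts to the 3D transform, so only the fitting step is sketched here. Assuming one pixel-displacement value per panorama column, with the N columns evenly spaced over 360° of azimuth, the least-squares fit of an offset plus one sinusoid reduces to the first Fourier coefficients of the samples. The function name and the sin(φ + phase) parameterisation are assumptions; fitting the two functions mentioned in the abstract would mean calling it once per displacement component.

```python
import math
from typing import Sequence, Tuple


def fit_sinusoid(values: Sequence[float]) -> Tuple[float, float, float]:
    """Least-squares fit of values[k] ~ offset + amplitude * sin(phi_k + phase),
    where phi_k = 2*pi*k/N are N >= 3 azimuths evenly spaced over a full turn.
    For such samples, least squares reduces to the first Fourier coefficients."""
    n = len(values)
    phis = [2 * math.pi * k / n for k in range(n)]
    offset = sum(values) / n
    a = 2 / n * sum(v * math.cos(p) for v, p in zip(values, phis))  # cos(phi) coefficient
    b = 2 / n * sum(v * math.sin(p) for v, p in zip(values, phis))  # sin(phi) coefficient
    # a*cos(phi) + b*sin(phi) == amplitude * sin(phi + phase)
    amplitude = math.hypot(a, b)
    phase = math.atan2(a, b)
    return offset, amplitude, phase


if __name__ == "__main__":
    # Synthetic column displacements: offset 1.5 px, amplitude 0.8 px, phase 0.3 rad
    cols = 36
    samples = [1.5 + 0.8 * math.sin(2 * math.pi * k / cols + 0.3) for k in range(cols)]
    print(fit_sinusoid(samples))
```

The closed form relies on the columns covering the full circle evenly; with partial coverage or missing columns, a general linear least-squares solve over the same three unknowns would be needed instead.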

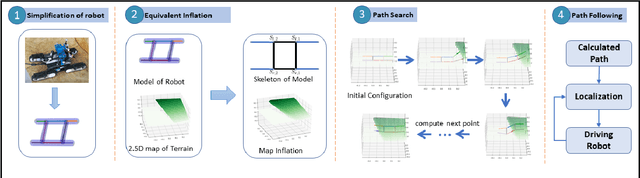

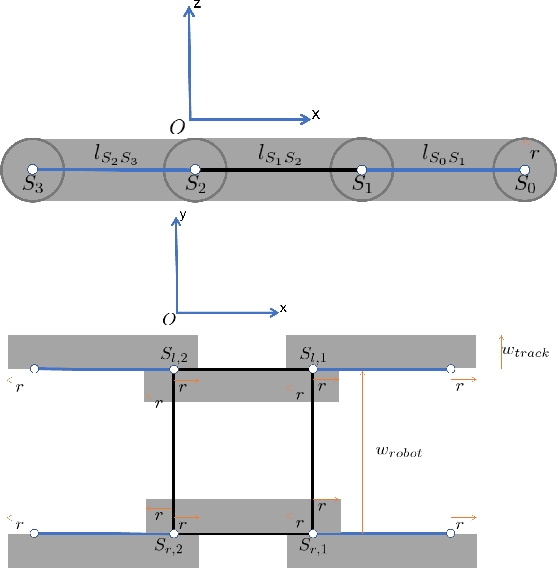

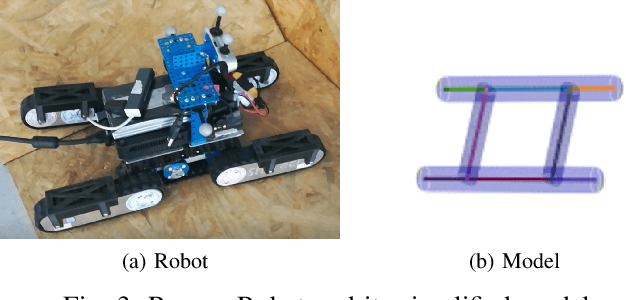

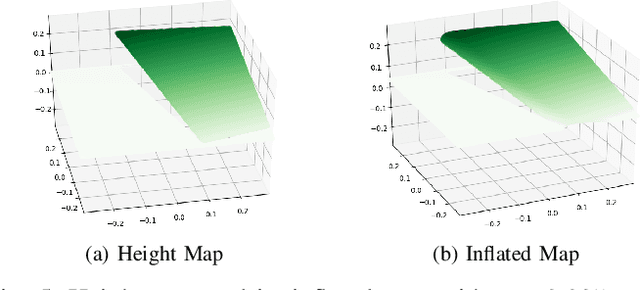

Configuration-Space Flipper Planning on 3D Terrain

Sep 17, 2019

Abstract: Autonomous operation has long been a goal for rescue robots, and the use of flippers greatly improves a robot's mobility and safety. In this work, we simplify the rescue robot as a skeleton on inflated terrain, so that its morphology can be represented by a configuration of several parameters. Building on our previous paper, we further configure the four flippers individually. The proposed flipper planning supports mobile motion on 3D terrain represented by 2.5D maps. Experiments show that our method handles various types of terrain well and manipulates the flippers efficiently.

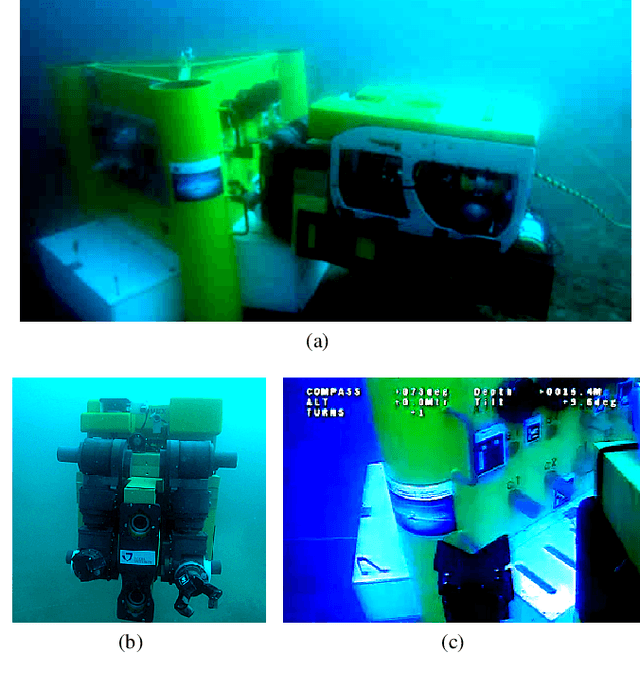

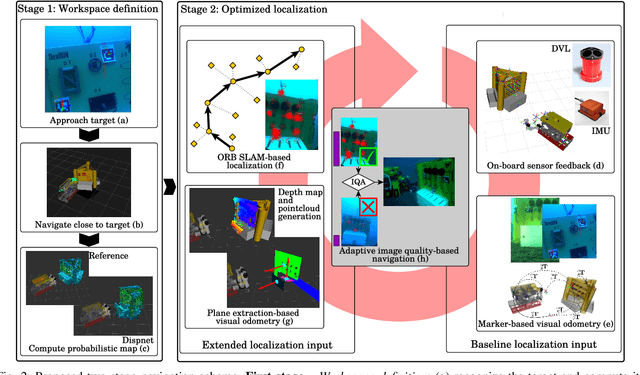

Adaptive Navigation Scheme for Optimal Deep-Sea Localization Using Multimodal Perception Cues

Jun 12, 2019

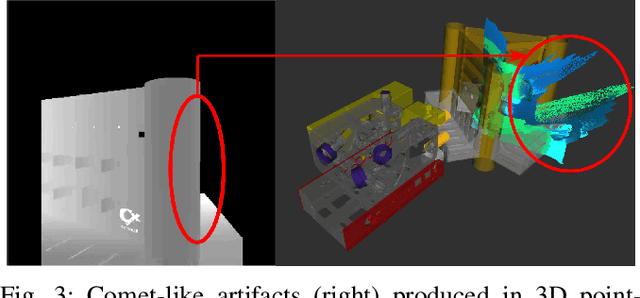

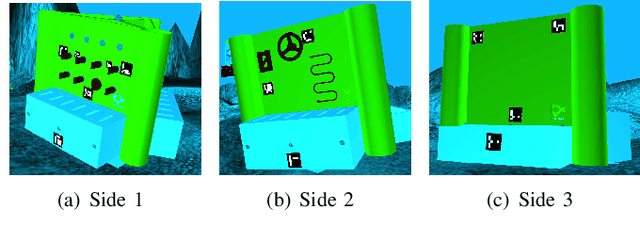

Abstract: Underwater robot interventions require a high level of safety and reliability. A major challenge is the robust and accurate acquisition of localization estimates, which is a prerequisite for more complex tasks such as floating manipulation and mapping. State-of-the-art navigation in commercial operations, such as oil and gas production (OGP), relies on costly instrumentation. This instrumentation can be partially replaced or assisted by visual navigation methods, especially in deep-sea scenarios where equipment deployment carries high costs and risks. Our work presents a multimodal approach that adapts state-of-the-art methods from on-land robotics, namely dense point cloud generation combined with plane representation and registration, to boost underwater localization performance. A two-stage navigation scheme is proposed: the first stage generates a coarse probabilistic map of the workspace, which the second stage uses to filter noise from computed point clouds and planes. Furthermore, an adaptive decision-making approach is introduced that determines which perception cues to incorporate into the localization filter to optimize accuracy and computational performance. Our approach is first investigated in simulation and then validated with data from field trials in OGP monitoring and maintenance scenarios.

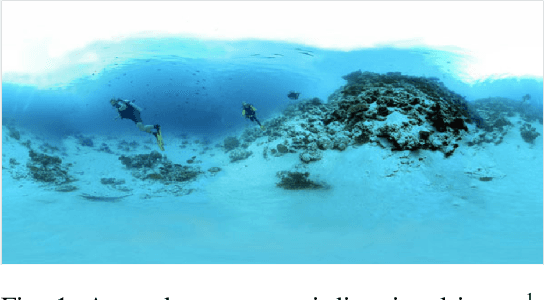

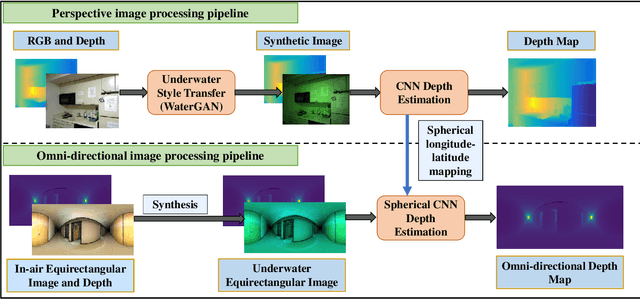

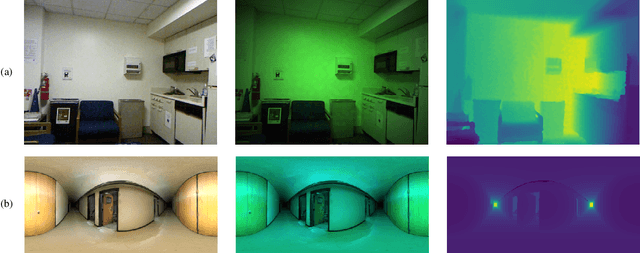

Depth Estimation on Underwater Omni-directional Images Using a Deep Neural Network

May 23, 2019

Abstract: In this work, we use a depth estimation Fully Convolutional Residual Neural Network (FCRN), originally designed for in-air perspective images, to estimate the depth of underwater perspective and omni-directional images. We train one conventional and one spherical FCRN for underwater perspective and omni-directional images, respectively. The spherical FCRN is derived from the perspective FCRN via a spherical longitude-latitude mapping. For this, the omni-directional camera is modeled as a sphere, and the images it captures are represented in longitude-latitude form. Due to the lack of underwater datasets, we synthesize images in both data-driven and theoretical ways and use them for training and testing. Finally, experiments are conducted on these synthetic images, and the results are presented both qualitatively and quantitatively. The comparison between the ground truth and the estimated depth maps indicates the effectiveness of our method.
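
The abstract states that the camera is modeled as a sphere and that images are represented in longitude-latitude form. The sketch below shows one common convention for that mapping on an equirectangular image, plus back-projection of an estimated depth to a 3D point. The pixel-centre offset, the axis orientation, and the treatment of depth as distance from the sphere centre are all assumptions; this is not the spherical FCRN itself.

```python
import math
from typing import Tuple


def pixel_to_lonlat(u: float, v: float, width: int, height: int) -> Tuple[float, float]:
    """Map pixel (u, v) of an equirectangular image to (longitude, latitude) in radians.
    Assumed convention: left edge at longitude -pi, top edge at latitude +pi/2,
    pixel centres at half-integer offsets."""
    lon = (u + 0.5) / width * 2.0 * math.pi - math.pi
    lat = math.pi / 2.0 - (v + 0.5) / height * math.pi
    return lon, lat


def lonlat_to_ray(lon: float, lat: float) -> Tuple[float, float, float]:
    """Unit ray on the sphere; x points to longitude 0 on the equator, z to the north pole."""
    return (math.cos(lat) * math.cos(lon),
            math.cos(lat) * math.sin(lon),
            math.sin(lat))


def backproject(u: float, v: float, depth: float,
                width: int, height: int) -> Tuple[float, float, float]:
    """3D point for a pixel whose depth is taken as distance from the sphere centre (assumption)."""
    x, y, z = lonlat_to_ray(*pixel_to_lonlat(u, v, width, height))
    return depth * x, depth * y, depth * z


if __name__ == "__main__":
    # Centre pixel of a 360 x 180 panorama with an estimated depth of 2 m
    print(backproject(179.5, 89.5, 2.0, 360, 180))
```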

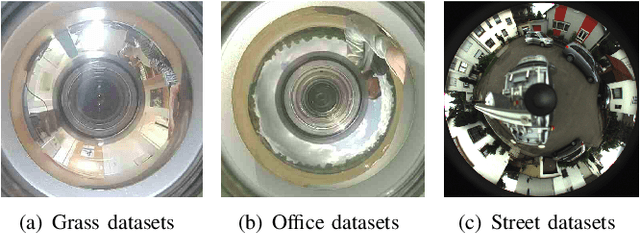

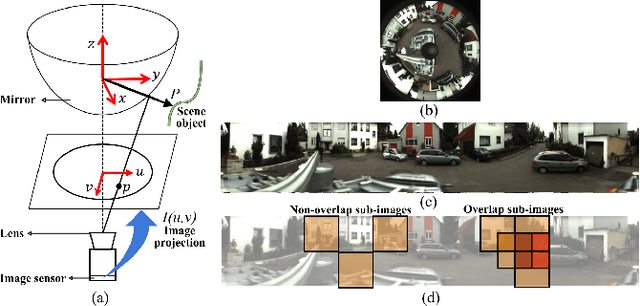

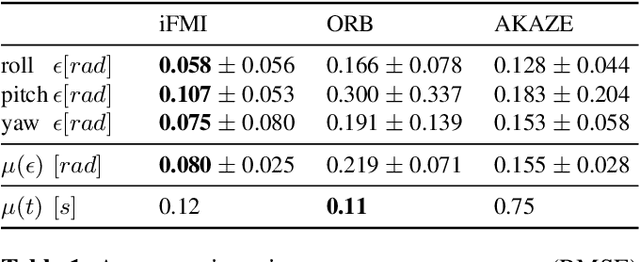

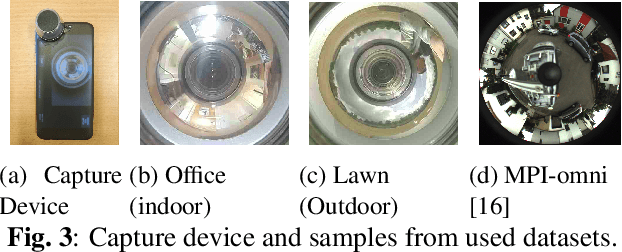

Improved Fourier Mellin Invariant for Robust Rotation Estimation with Omni-cameras

Feb 12, 2019

Abstract: Spectral methods such as the improved Fourier-Mellin Invariant (iFMI) transform have proved faster, more robust and more accurate than feature-based methods for image registration. However, iFMI is restricted to camera motion in 2D space and has not so far been applied to omni-camera images. In this work, we extend the iFMI method and apply a motion model to estimate an omni-camera's pose when it moves in 3D space. This is particularly useful in field robotics applications for obtaining a rapid and comprehensive view of unstructured environments and for robustly estimating the robot pose. In the experiments, we compare the extended iFMI method against ORB- and AKAZE-feature-based approaches on three datasets showing different types of environments: office, lawn and urban scenery (MPI-omni dataset). The results show that our method improves the accuracy of robot pose estimation by a factor of two to four relative to the feature registration techniques, while offering lower processing times. Furthermore, the iFMI approach performs best under the motion blur typically present in mobile robotics.
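
iFMI builds on Fourier-domain phase correlation. The full pipeline (log-polar resampling for rotation and scale, sub-pixel peak refinement, and the 3D motion model for omni-cameras) is not reproduced here. As a minimal illustration, assuming an ideal 360° panorama and pure yaw about the vertical axis, a yaw rotation becomes a circular horizontal shift, which 1D phase correlation can recover from, for example, the column-mean intensity profiles of two panoramas. The naive DFT keeps the sketch dependency-free (an FFT library would be used in practice), and all names are hypothetical.

```python
import cmath
import math
from typing import List, Sequence


def dft(x: Sequence[complex], inverse: bool = False) -> List[complex]:
    """Naive O(N^2) discrete Fourier transform; the inverse includes the 1/N factor."""
    n = len(x)
    sign = 1 if inverse else -1
    out = []
    for k in range(n):
        s = sum(x[t] * cmath.exp(sign * 2j * math.pi * k * t / n) for t in range(n))
        out.append(s / n if inverse else s)
    return out


def phase_correlation_shift(f: Sequence[float], g: Sequence[float]) -> int:
    """Return the integer circular shift s such that g[t] ~ f[(t - s) % N]."""
    F, G = dft(f), dft(g)
    cross = []
    for gk, fk in zip(G, F):
        c = gk * fk.conjugate()
        mag = abs(c)
        # keep only the phase; bins with no energy contribute nothing
        cross.append(c / mag if mag > 1e-12 else 0j)
    corr = dft(cross, inverse=True)
    # the correlation surface peaks at the shift
    return max(range(len(corr)), key=lambda t: corr[t].real)


def yaw_degrees(shift: int, width: int) -> float:
    """Convert a horizontal shift in a 360-degree panorama to a yaw angle in degrees."""
    return 360.0 * shift / width


if __name__ == "__main__":
    profile = [1.0, 4.0, 2.0, 0.5, 3.0, 0.0, 2.5, 1.5]
    rotated = [profile[(t - 3) % 8] for t in range(8)]
    s = phase_correlation_shift(profile, rotated)
    print(s, yaw_degrees(s, len(profile)))
```

For a 640-pixel-wide panorama, for instance, a recovered shift of 80 columns would correspond to 45° of yaw.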
