Christian A. Mueller

From Multi-modal Property Dataset to Robot-centric Conceptual Knowledge About Household Objects

Jun 26, 2019

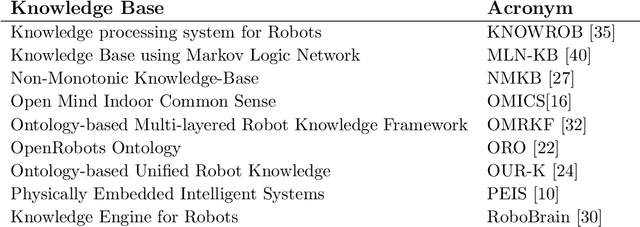

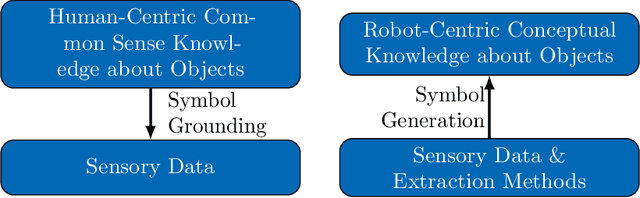

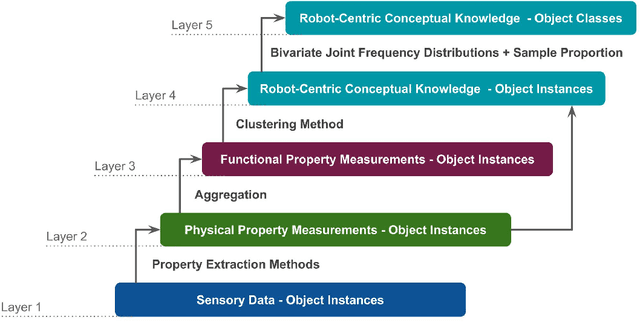

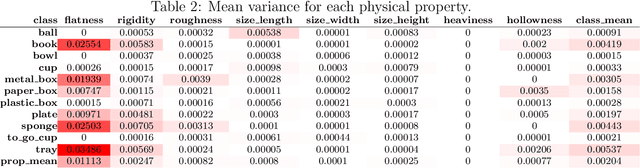

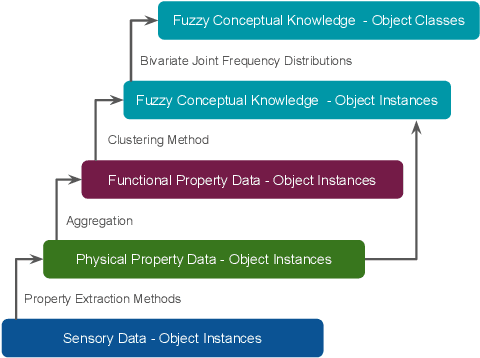

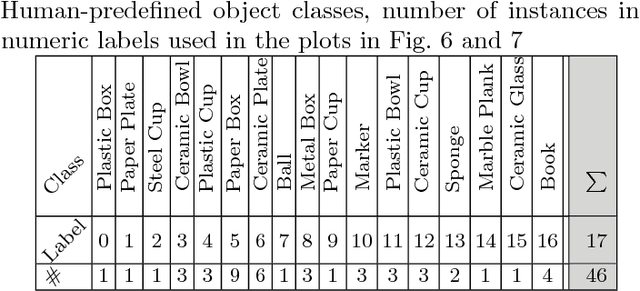

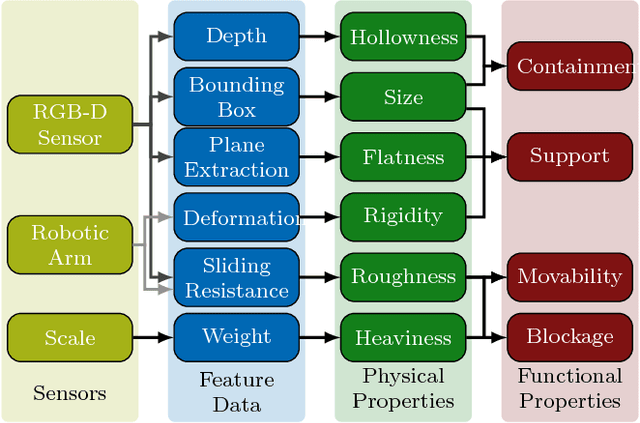

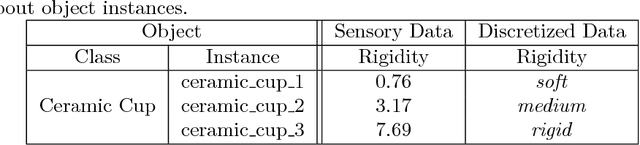

Abstract: Tool-use applications in robotics require conceptual knowledge about objects for informed decision making and object interactions. State-of-the-art methods employ hand-crafted symbolic knowledge which is defined from a human perspective and grounded into sensory data afterwards. However, due to different sensing and acting capabilities of robots, their conceptual understanding of objects must be generated from a robot's perspective entirely, which asks for robot-centric conceptual knowledge about objects. With this goal in mind, this article motivates that such knowledge should be based on physical and functional properties of objects. Consequently, a selection of ten properties is defined and corresponding extraction methods are proposed. This multi-modal property extraction forms the basis on which our second contribution, a robot-centric knowledge generation, is built. It employs unsupervised clustering methods to transform numerical property data into symbols, and Bivariate Joint Frequency Distributions and Sample Proportion to generate conceptual knowledge about objects using the robot-centric symbols. A preliminary implementation of the proposed framework is employed to acquire a dataset comprising physical and functional property data of 110 household objects. This Robot-Centric dataSet (RoCS) is used to evaluate the framework regarding the property extraction methods, the semantics of the considered properties within the dataset and its usefulness in real-world applications such as tool substitution.
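The symbol-generation idea in this abstract can be illustrated with a minimal sketch. It is not the authors' implementation: the property names (`rigidity`, `support`), the measurements, the number of clusters and the simple 1-D k-means are all assumptions made for illustration. It clusters numeric property values into symbols, counts how often symbol pairs co-occur (a bivariate joint frequency table) and derives a sample proportion from those counts.

```python
from collections import Counter


def kmeans_1d(values, k, iters=20):
    """Cluster scalar values into k groups; returns one cluster index per value."""
    lo, hi = min(values), max(values)
    # Spread the initial centers evenly over the value range (arbitrary choice).
    centers = [lo + (hi - lo) * (i + 0.5) / k for i in range(k)]
    labels = [0] * len(values)
    for _ in range(iters):
        # Assign each value to its nearest center.
        labels = [min(range(k), key=lambda c: abs(v - centers[c])) for v in values]
        # Move each center to the mean of its members.
        for c in range(k):
            members = [v for v, lab in zip(values, labels) if lab == c]
            if members:
                centers[c] = sum(members) / len(members)
    return labels


def symbolize(name, values, k):
    """Turn numeric measurements into symbols such as 'rigidity_0'."""
    return [f"{name}_{lab}" for lab in kmeans_1d(values, k)]


def joint_frequency(symbols_a, symbols_b):
    """Count co-occurrences of symbol pairs across objects."""
    return Counter(zip(symbols_a, symbols_b))


def sample_proportion(joint, a_sym, b_sym):
    """Fraction of objects carrying a_sym that also carry b_sym."""
    total = sum(n for (a, _), n in joint.items() if a == a_sym)
    return joint[(a_sym, b_sym)] / total if total else 0.0


# Made-up measurements for six objects (hypothetical, not taken from RoCS).
rigidity = [0.1, 0.15, 0.2, 0.9, 0.95, 0.85]
support = [0.2, 0.1, 0.3, 0.8, 0.9, 0.2]

rigidity_symbols = symbolize("rigidity", rigidity, k=2)
support_symbols = symbolize("support", support, k=2)
joint = joint_frequency(rigidity_symbols, support_symbols)
proportion = sample_proportion(joint, "rigidity_1", "support_1")
```

In this toy data, two of the three objects in the high-rigidity cluster also fall into the high-support cluster, so `proportion` is 2/3.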

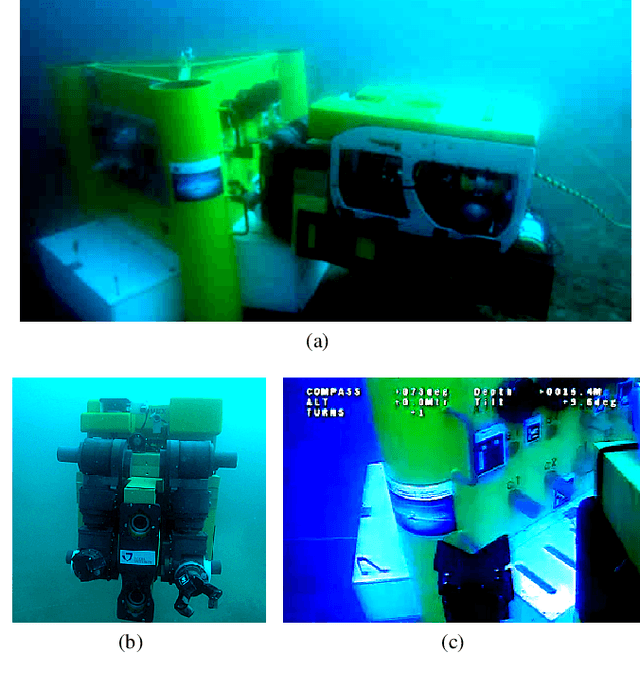

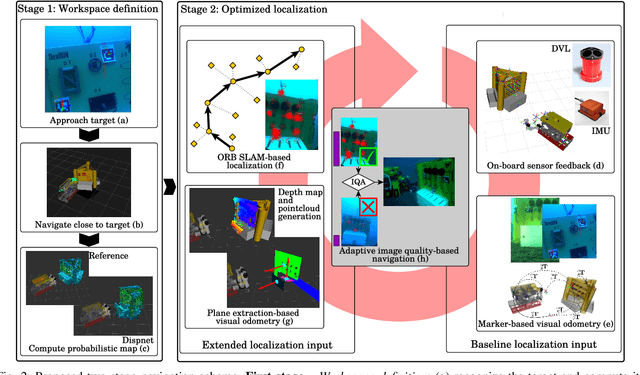

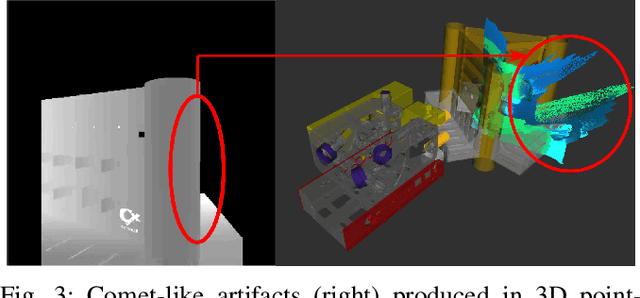

Adaptive Navigation Scheme for Optimal Deep-Sea Localization Using Multimodal Perception Cues

Jun 12, 2019

Abstract: Underwater robot interventions require a high level of safety and reliability. A major challenge to address is a robust and accurate acquisition of localization estimates, as it is a prerequisite to enable more complex tasks, e.g. floating manipulation and mapping. State-of-the-art navigation in commercial operations, such as oil & gas production (OGP), relies on costly instrumentation. This instrumentation can be partially replaced or assisted by visual navigation methods, especially in deep-sea scenarios where equipment deployment has high costs and risks. Our work presents a multimodal approach that adapts state-of-the-art methods from on-land robotics, i.e., dense point cloud generation in combination with plane representation and registration, to boost underwater localization performance. A two-stage navigation scheme is proposed that initially generates a coarse probabilistic map of the workspace, which is used to filter noise from computed point clouds and planes in the second stage. Furthermore, an adaptive decision-making approach is introduced that determines which perception cues to incorporate into the localization filter to optimize accuracy and computation performance. Our approach is investigated first in simulation and then validated with data from field trials in OGP monitoring and maintenance scenarios.

Conceptualization of Object Compositions Using Persistent Homology

Nov 20, 2018

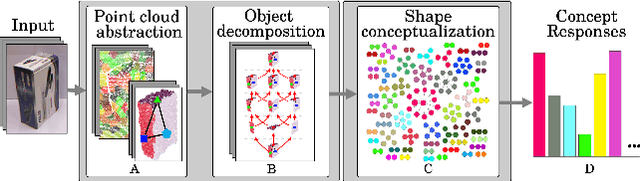

Abstract: A topological shape analysis is proposed and utilized to learn concepts that reflect shape commonalities. Our approach is two-fold: i) a spatial topology analysis of point cloud segment constellations within objects. Therein constellations are decomposed and described in a hierarchical manner - from single segments to segment groups until a single group reflects an entire object. ii) a topology analysis of the description space in which segment decompositions are exposed. Inspired by Persistent Homology, hidden groups of shape commonalities are revealed from object segment decompositions. Experiments show that extracted persistent groups of commonalities can represent semantically meaningful shape concepts. We also show the generalization capability of the proposed approach considering samples of external datasets.
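The abstract says the method is inspired by Persistent Homology but gives no algorithmic detail, so the following is only a generic sketch of the underlying idea, not the paper's procedure. It computes 0-dimensional persistence: every point starts as its own component at scale 0, and components merge as a distance threshold grows. The descriptor vectors and the function name `zero_dim_persistence` are hypothetical.

```python
import math
from itertools import combinations


def zero_dim_persistence(points):
    """Return the scales at which connected components merge (their 'deaths').

    All components are born at scale 0; the last surviving component
    never dies and is therefore not listed.
    """
    parent = list(range(len(points)))

    def find(i):
        # Union-find root lookup with path halving.
        while parent[i] != i:
            parent[i] = parent[parent[i]]
            i = parent[i]
        return i

    # Process all pairwise distances from smallest to largest.
    edges = sorted(
        (math.dist(points[i], points[j]), i, j)
        for i, j in combinations(range(len(points)), 2)
    )
    deaths = []
    for dist, i, j in edges:
        root_i, root_j = find(i), find(j)
        if root_i != root_j:
            parent[root_i] = root_j  # two components merge at this scale
            deaths.append(dist)
    return deaths


# Made-up 2-D descriptors forming two well-separated groups.
descriptors = [(0.0, 0.0), (0.1, 0.0), (0.0, 0.1), (5.0, 5.0), (5.1, 5.0)]
deaths = zero_dim_persistence(descriptors)
```

Here three merges happen at a scale of about 0.1 and the final merge only at about 7.0. The large gap between these scales marks two groups that persist over a wide range of thresholds, which is the kind of stable grouping the persistence idea is meant to expose.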

Unsupervised Learning of Shape Concepts - From Real-World Objects to Mental Simulation

Nov 20, 2018

Abstract: An unsupervised shape analysis is proposed to learn concepts reflecting shape commonalities. Our approach is two-fold: i) a spatial topology analysis of point cloud segment constellations within objects is used in which constellations are decomposed and described in a hierarchical and symbolic manner. ii) A topology analysis of the description space is used in which segment decompositions are exposed. Inspired by Persistent Homology, groups of shape commonality are revealed. Experiments show that extracted persistent commonality groups can feature semantically meaningful shape concepts; the generalization of the proposed approach is evaluated on different real-world datasets. We extend this by not only learning shape concepts using real-world data, but by also using mental simulation of artificial abstract objects for training purposes. This extended approach is unsupervised in two respects: label-agnostic (no label information is used) and instance-agnostic (no instances preselected by human supervision are used for training). Experiments show that concepts generated with mental simulation generalize to and discriminate real object observations. Consequently, a robot may train and learn its own internal representation of concepts regarding shape appearance in a self-driven and machine-centric manner while omitting the tedious process of supervised dataset generation including the ambiguity in instance labeling and selection.

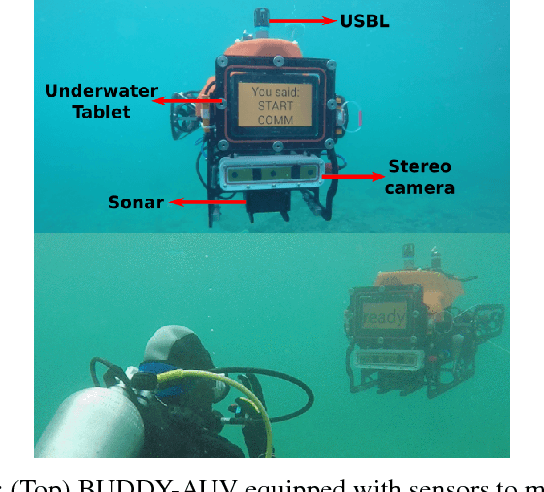

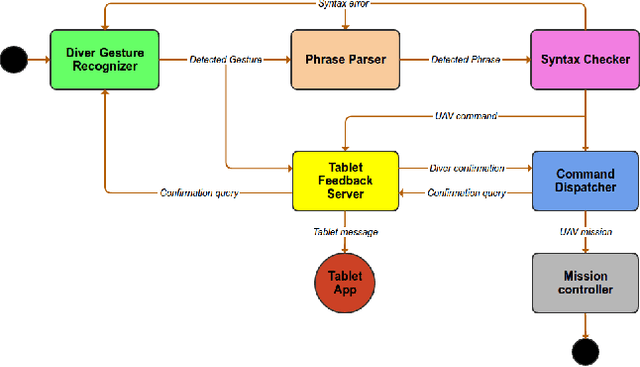

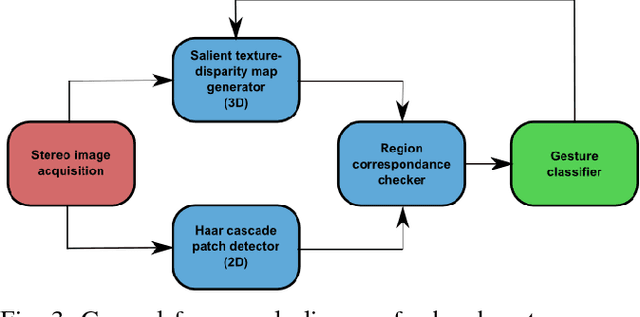

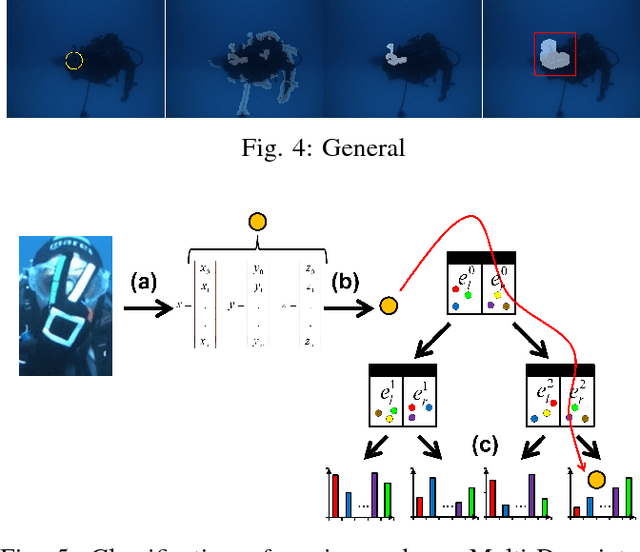

Robust Gesture-Based Communication for Underwater Human-Robot Interaction in the context of Search and Rescue Diver Missions

Oct 16, 2018

Abstract: We propose a robust gesture-based communication pipeline for divers to instruct an Autonomous Underwater Vehicle (AUV) to assist them in performing high-risk tasks and to help in case of emergency. A gesture communication language (CADDIAN) is developed, based on consolidated and standardized diver gestures, including an alphabet, syntax and semantics, ensuring logical consistency. A hierarchical classification approach is introduced for hand gesture recognition based on stereo imagery and multi-descriptor aggregation to specifically cope with underwater image artifacts, e.g. light backscatter or color attenuation. Once the classification task is finished, a syntax check is performed to filter out invalid command sequences sent by the diver or generated by errors in the classifier. Throughout this process, the diver receives constant feedback from an underwater tablet to acknowledge or abort the mission at any time. The objective is to prevent the AUV from executing unnecessary, infeasible or potentially harmful motions. Experimental results under different environmental conditions in archaeological exploration and bridge inspection applications show that the system performs well in the field.
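The syntax-check step described in this abstract can be sketched as a small validator that rejects malformed gesture sequences before they reach the vehicle. The actual CADDIAN alphabet and grammar are not given here, so every token name and the toy rule `START (COMMAND [NUMBER])+ END` below are invented purely for illustration.

```python
# Hypothetical token sets; the real CADDIAN alphabet and syntax differ.
START, END = "START", "END"
COMMANDS = {"UP", "DOWN", "TAKE_PHOTO"}
NUMBERS = {"1", "2", "3"}


def is_valid(sequence):
    """Accept sequences of the toy form: START (COMMAND [NUMBER])+ END."""
    if len(sequence) < 3 or sequence[0] != START or sequence[-1] != END:
        return False
    prev_was_command = False
    for token in sequence[1:-1]:
        if token in COMMANDS:
            prev_was_command = True
        elif token in NUMBERS and prev_was_command:
            # A number may only follow a command, and only once.
            prev_was_command = False
        else:
            # Unknown token, or a number without a preceding command.
            return False
    return True


accepted = is_valid(["START", "UP", "2", "END"])
rejected = is_valid(["START", "2", "END"])
```

A sequence that starts with a number, lacks the delimiters, or contains an unrecognized gesture label (for example, one produced by a classifier error) is rejected rather than executed.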

What Stands-in for a Missing Tool? A Prototypical Grounded Knowledge-based Approach to Tool Substitution

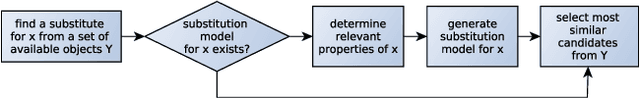

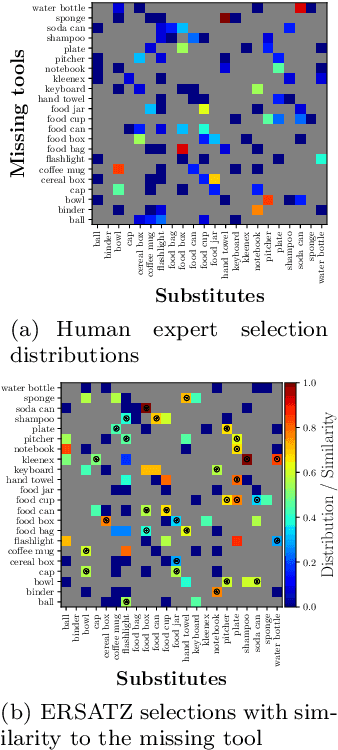

Oct 15, 2018

Abstract: When a robot is operating in a dynamic environment, it cannot be assumed that a tool required to solve a given task will always be available. In case of a missing tool, an ideal response would be to find a substitute to complete the task. In this paper, we present a proof of concept of a grounded knowledge-based approach to tool substitution. In order to validate the suitability of a substitute, we conducted experiments involving 22 substitution scenarios. The substitutes computed by the proposed approach were validated on the basis of the experts' choices for each scenario. Our evaluation showed that in 20 out of 22 scenarios (91%), the approach identified the same substitutes as the experts.

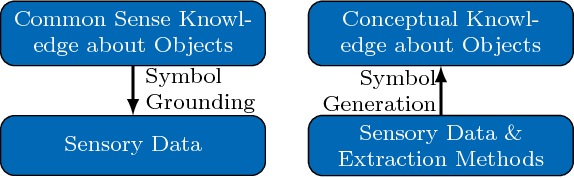

Towards Robot-Centric Conceptual Knowledge Acquisition

Oct 08, 2018

Abstract: Robots require knowledge about objects in order to efficiently perform various household tasks involving objects. The existing knowledge bases for robots acquire symbolic knowledge about objects from manually-coded external common sense knowledge bases such as ConceptNet, WordNet, etc. The problem with such approaches is the discrepancy between human-centric symbolic knowledge and robot-centric object perception, which stems from the robot's limited perception capabilities. Ultimately, a significant portion of the knowledge in the knowledge base remains ungrounded in the robot's perception. To overcome this discrepancy, we propose an approach to enable robots to generate robot-centric symbolic knowledge about objects from their own sensory data, thus allowing them to assemble their own conceptual understanding of objects. With this goal in mind, the presented paper elaborates on the work-in-progress of the proposed approach followed by the preliminary results.

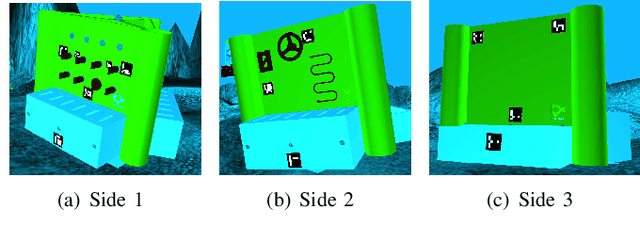

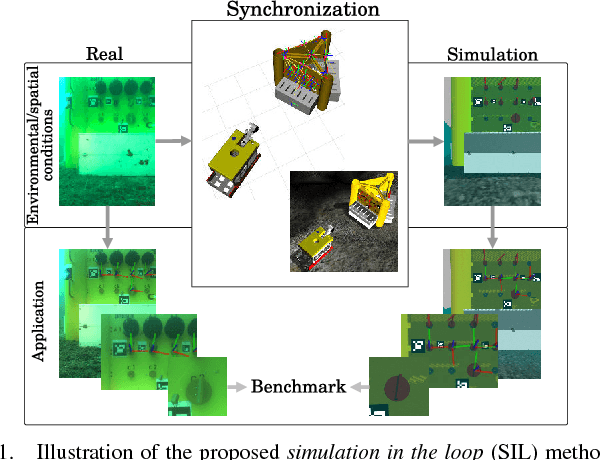

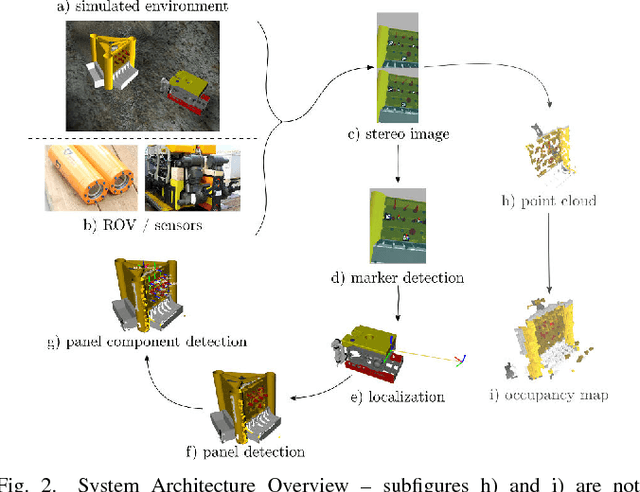

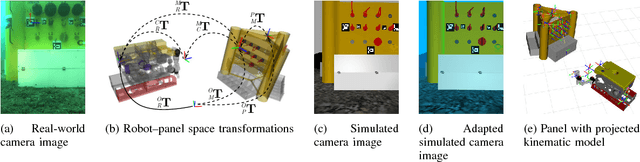

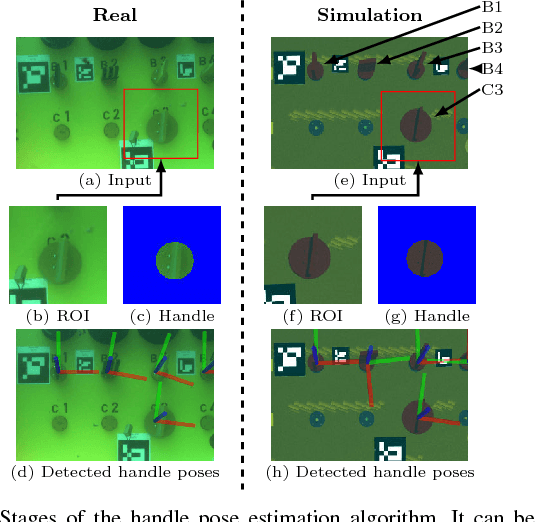

Robust Continuous System Integration for Critical Deep-Sea Robot Operations Using Knowledge-Enabled Simulation in the Loop

Jul 18, 2018

Abstract: Deep-sea robot operations demand a high level of safety, efficiency and reliability. As a consequence, measures within the development stage have to be implemented to extensively evaluate and benchmark system components ranging from data acquisition, perception and localization to control. We present an approach based on high-fidelity simulation that embeds spatial and environmental conditions from recorded real-world data. This simulation in the loop (SIL) methodology allows for mitigating the discrepancy between simulation and real-world conditions, e.g. regarding sensor noise. As a result, this work provides a platform to thoroughly investigate and benchmark behaviors of system components concurrently under real and simulated conditions. The conducted evaluation shows the benefit of the proposed work in tasks related to perception and self-localization under changing spatial and environmental conditions.

* published at IROS 2018

Visual Object Categorization Based on Hierarchical Shape Motifs Learned From Noisy Point Cloud Decompositions

Apr 03, 2018

Abstract: Object shape is a key cue that contributes to the semantic understanding of objects. In this work we focus on the categorization of real-world object point clouds into particular shape types. Therein, surface description and representation of object shape structure have significant influence on shape categorization accuracy when dealing with real-world scenes featuring noisy, partial and occluded object observations. An unsupervised hierarchical learning procedure is utilized here to symbolically describe surface characteristics on multiple semantic levels. Furthermore, a constellation model is proposed that hierarchically decomposes objects. The decompositions are described as constellations of symbols (shape motifs) in a gradual order, hence reflecting shape structure from local to global, i.e., from parts over groups of parts to entire objects. The combination of this multi-level description of surfaces and the hierarchical decomposition of shapes leads to a representation which allows shapes to be conceptualized. Object discrimination has been observed in experiments with seven categories featuring instances with sensor noise, occlusions as well as inter-category and intra-category similarities. Experiments include the evaluation of the proposed description and shape decomposition approach, and comparisons to Fast Point Feature Histograms, a Vocabulary Tree and a neural network-based Deep Learning method. Furthermore, experiments are conducted with alternative datasets which analyze the generalization capability of the proposed approach.

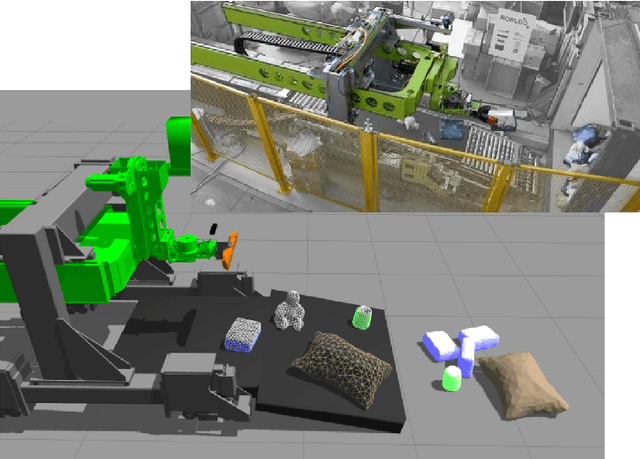

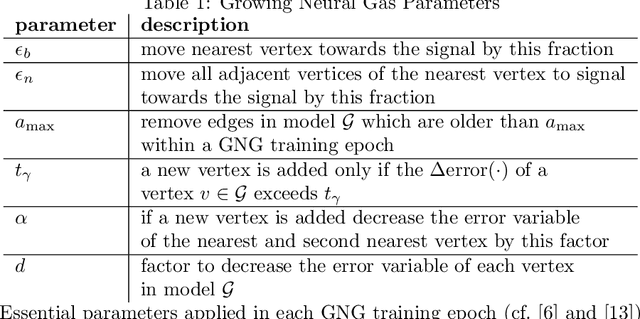

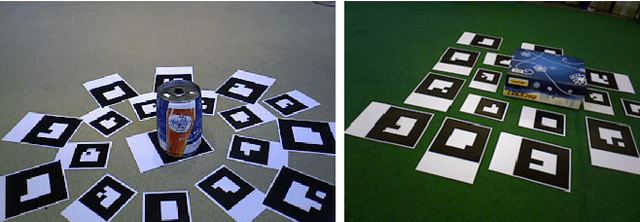

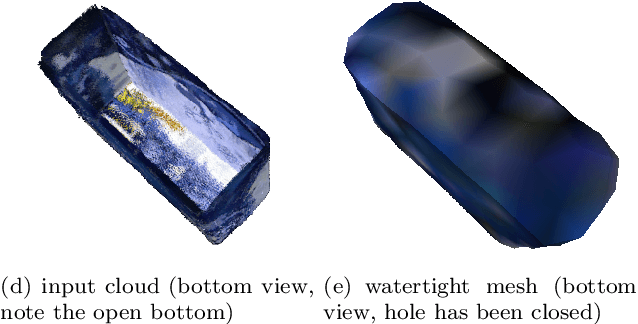

Unsupervised Watertight Mesh Generation for Physics Simulation Applications Using Growing Neural Gas on Noisy Free-Form Object Models

Sep 03, 2016

Abstract: We present a framework to generate watertight mesh representations in an unsupervised manner from noisy point clouds of complex, heterogeneous objects with free-form surfaces. The resulting meshes are ready to use in applications like kinematics and dynamics simulation where watertightness and fast processing are the main quality criteria. The framework requires no user interaction, mainly because it utilizes a modified Growing Neural Gas technique for surface reconstruction combined with several post-processing steps. In contrast to existing methods, the proposed framework is able to cope with input point clouds generated by consumer-grade RGBD sensors and works even if the input data features large holes, e.g. a missing bottom which was not covered by the sensor. Additionally, we explain a method to optimize the parameters of our framework in an unsupervised manner in order to improve generalization quality and, at the same time, keep the resulting meshes as coherent as possible with the original object regarding visual and geometric properties.
