Tobias Doernbach

Fast-Revisit Coverage Path Planning for Autonomous Mobile Patrol Robots Using Long-Range Sensor Information

Jan 13, 2025

Abstract: The use of Unmanned Ground Vehicles (UGVs) for patrolling industrial sites has expanded significantly. These UGVs are typically equipped with perception systems, e.g., computer vision, whose range is limited by the sensors themselves or by site topology. High-level control of the UGVs requires Coverage Path Planning (CPP) algorithms that navigate all relevant waypoints and promptly start the next cycle. In this paper, we propose the novel Fast-Revisit Coverage Path Planning (FaRe-CPP) algorithm, which uses a greedy heuristic to select waypoints that maximize coverage area and a random search-based path optimization technique to obtain a path along those waypoints with minimum revisit time. We evaluated the algorithm against a number of existing algorithms in a simulated environment using Gazebo and a camera-equipped TurtleBot3. Compared to the existing algorithms, FaRe-CPP reduced average revisit time and path length by approximately 45% and 40%, respectively, two highly relevant performance indicators.
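The two stages named in the abstract can be illustrated with a minimal sketch. This is not the FaRe-CPP implementation: the function names, the disc-shaped sensor model, the segment-reversal move, and the use of closed-loop length as a stand-in for revisit time (assuming constant speed) are all assumptions made for illustration.

```python
import math
import random


def greedy_waypoints(candidates, targets, sensor_range):
    """Greedily pick candidate positions until every reachable target is covered.

    Assumption: a target counts as covered when it lies within sensor_range
    of a chosen waypoint (a simple disc model, not the paper's sensor model).
    """
    uncovered = set(targets)
    chosen = []
    while uncovered:
        # Pick the candidate that sees the most still-uncovered targets.
        best = max(
            candidates,
            key=lambda c: sum(math.dist(c, t) <= sensor_range for t in uncovered),
        )
        covered = {t for t in uncovered if math.dist(best, t) <= sensor_range}
        if not covered:
            break  # remaining targets are not visible from any candidate
        chosen.append(best)
        uncovered -= covered
    return chosen


def tour_length(order):
    """Length of the closed patrol loop through the waypoints in this order."""
    n = len(order)
    return sum(math.dist(order[i], order[(i + 1) % n]) for i in range(n))


def random_search_tour(waypoints, iterations=2000, seed=0):
    """Random search: reverse a random segment, keep the change if the loop gets shorter."""
    rng = random.Random(seed)
    best = list(waypoints)
    best_len = tour_length(best)
    if len(best) < 3:
        return best, best_len
    for _ in range(iterations):
        i, j = sorted(rng.sample(range(len(best)), 2))
        trial = best[:i] + best[i:j + 1][::-1] + best[j + 1:]
        trial_len = tour_length(trial)
        if trial_len < best_len:
            best, best_len = trial, trial_len
    return best, best_len
```

Calling `greedy_waypoints` on a grid of target cells and passing the result to `random_search_tour` yields a closed loop whose length never exceeds that of the initial ordering, since only improving moves are accepted.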

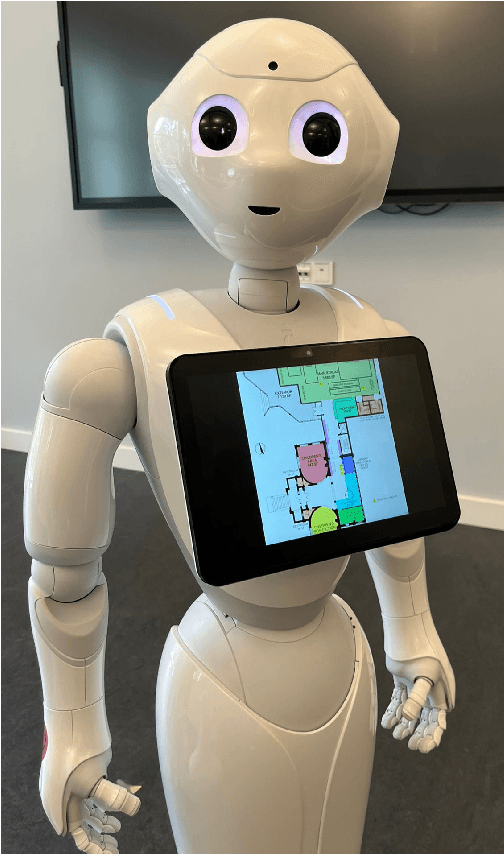

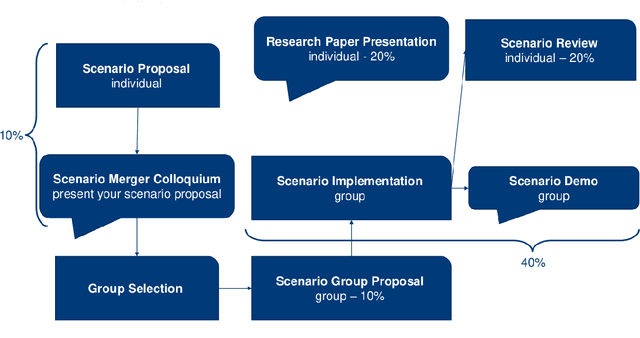

Looking for the Human in HRI Teaching: User-Centered Course Design for Tech-Savvy Students

Mar 19, 2024

Abstract: Top-down, user-centered thinking is not typically a strength of students, especially tech-savvy ones in computer science-related fields. We propose Human-Robot Interaction (HRI) introductory courses as a highly suitable opportunity to foster these important skills, since the HRI discipline includes a focus on humans as users. Our HRI course therefore contains elements such as scenario-based design of laboratory projects, discussing and merging ideas, and other self-empowerment techniques. Participants describe, implement and present everyday scenarios using Pepper robots and our customized open-source visual programming tool. We observe that students obtain a good grasp of the taught topics and improve their user-centered thinking skills.

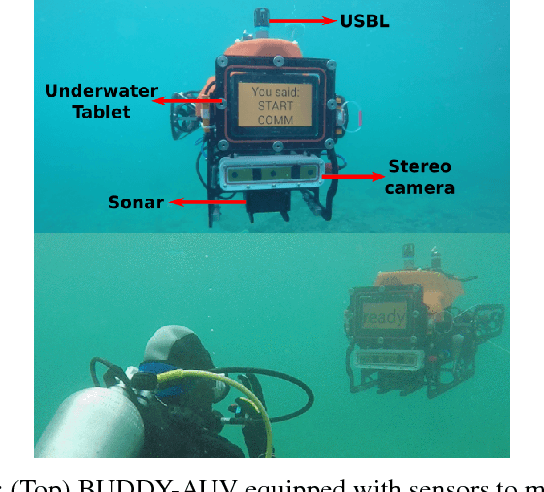

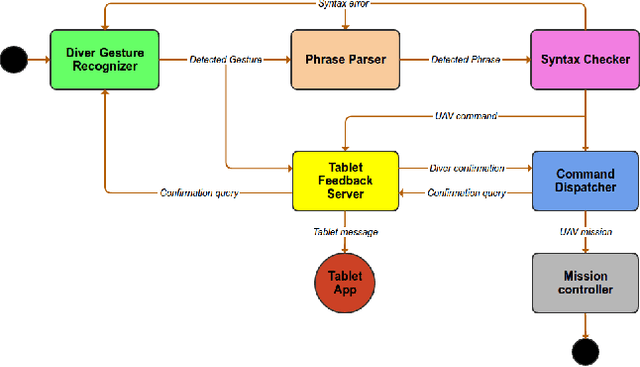

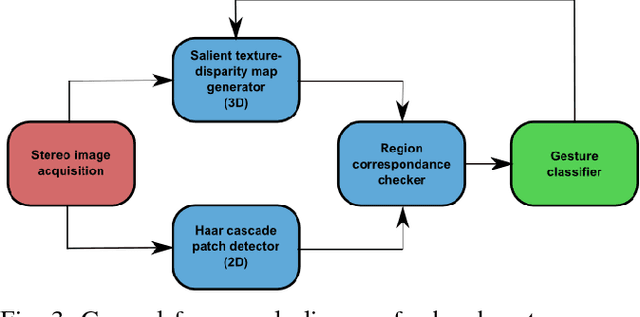

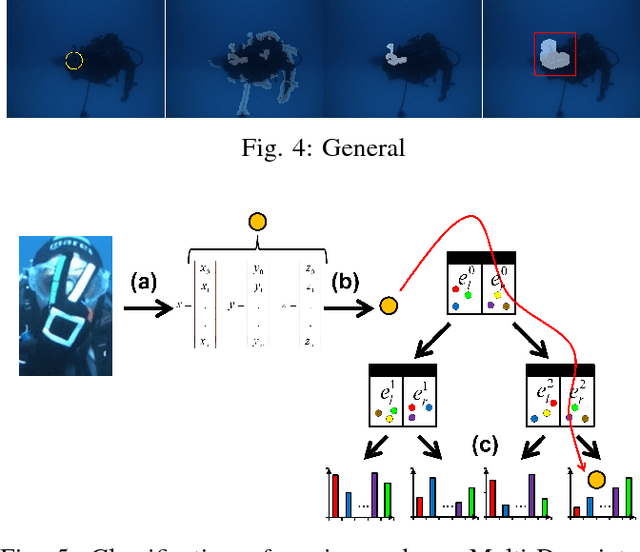

Robust Gesture-Based Communication for Underwater Human-Robot Interaction in the context of Search and Rescue Diver Missions

Oct 16, 2018

Abstract: We propose a robust gesture-based communication pipeline for divers to instruct an Autonomous Underwater Vehicle (AUV) to assist them in performing high-risk tasks and to help in case of emergency. A gesture communication language (CADDIAN) is developed, based on consolidated and standardized diver gestures, including an alphabet, syntax and semantics, ensuring logical consistency. A hierarchical classification approach is introduced for hand gesture recognition based on stereo imagery and multi-descriptor aggregation to specifically cope with underwater image artifacts, e.g. light backscatter or color attenuation. Once the classification task is finished, a syntax check is performed to filter out invalid command sequences sent by the diver or generated by errors in the classifier. Throughout this process, the diver receives constant feedback from an underwater tablet and can acknowledge or abort the mission at any time. The objective is to prevent the AUV from executing unnecessary, infeasible or potentially harmful motions. Experimental results under different environmental conditions in archaeological exploration and bridge inspection applications show that the system performs well in the field.
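The syntax-check step can be pictured with a small sketch. The token names, the `START`/`END` delimiters and the per-command argument counts below are a hypothetical toy grammar chosen for illustration; they are not the CADDIAN alphabet or syntax, which the abstract does not spell out.

```python
# Hypothetical toy grammar, NOT the real CADDIAN language:
# each command name maps to the number of numeric arguments it expects.
ARITY = {"GO_UP": 1, "GO_DOWN": 1, "TAKE_PHOTO": 0, "ABORT": 0}


def is_valid_sequence(tokens):
    """Return True if a classified gesture sequence is a well-formed message.

    A message is assumed to be START, one or more commands with the right
    number of numeric arguments, then END. Anything else is rejected, whether
    it came from a diver mistake or from a classifier error.
    """
    if len(tokens) < 2 or tokens[0] != "START" or tokens[-1] != "END":
        return False
    body = tokens[1:-1]
    i = 0
    while i < len(body):
        command = body[i]
        if command not in ARITY:
            return False  # unknown or misclassified gesture
        args = body[i + 1:i + 1 + ARITY[command]]
        if len(args) != ARITY[command] or not all(a.isdigit() for a in args):
            return False  # missing or non-numeric argument
        i += 1 + ARITY[command]
    return i > 0  # an empty message carries no command
```

Under this toy grammar, `["START", "GO_UP", "2", "END"]` is accepted, while `["START", "GO_UP", "END"]` is rejected because the argument is missing, so a sequence like the latter would never reach the vehicle.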

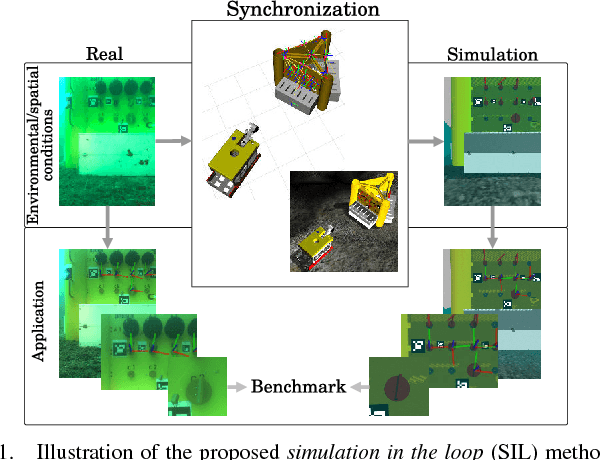

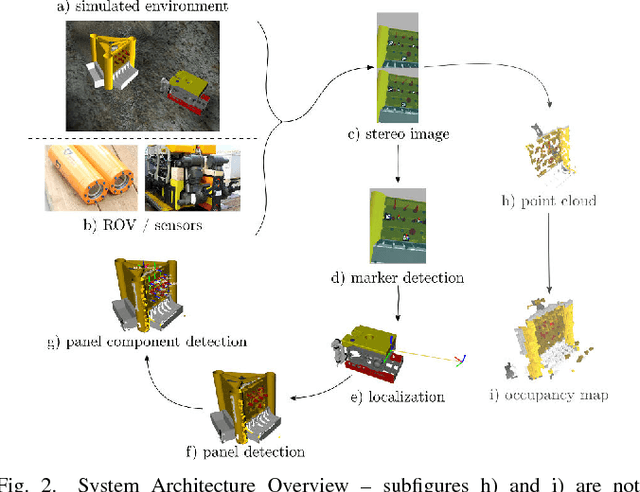

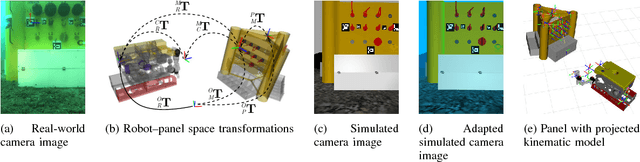

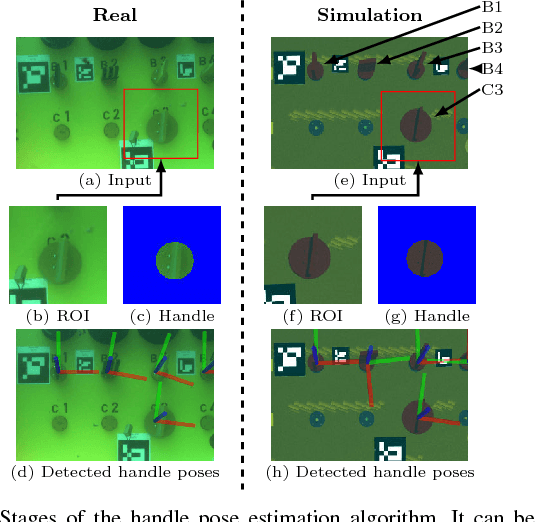

Robust Continuous System Integration for Critical Deep-Sea Robot Operations Using Knowledge-Enabled Simulation in the Loop

Jul 18, 2018

Abstract: Deep-sea robot operations demand a high level of safety, efficiency and reliability. As a consequence, measures have to be implemented during the development stage to extensively evaluate and benchmark system components ranging from data acquisition, perception and localization to control. We present an approach based on high-fidelity simulation that embeds spatial and environmental conditions from recorded real-world data. This simulation in the loop (SIL) methodology mitigates the discrepancy between simulation and real-world conditions, e.g. regarding sensor noise. As a result, this work provides a platform to thoroughly investigate and benchmark the behavior of system components concurrently under real and simulated conditions. The conducted evaluation shows the benefit of the proposed work in tasks related to perception and self-localization under changing spatial and environmental conditions.

* Published at IROS 2018
