Robust Gesture-Based Communication for Underwater Human-Robot Interaction in the context of Search and Rescue Diver Missions


Oct 16, 2018

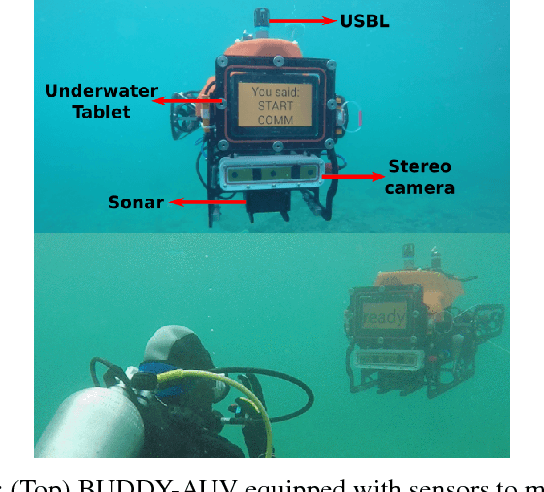

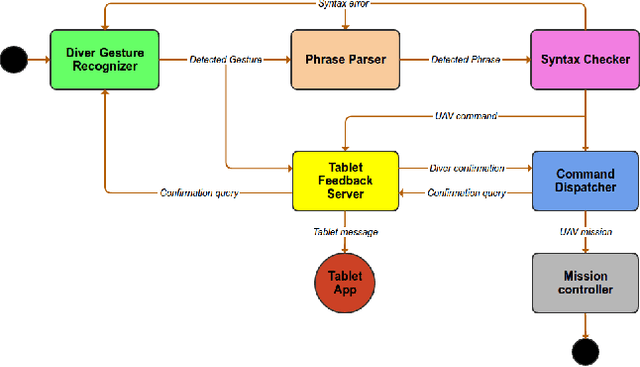

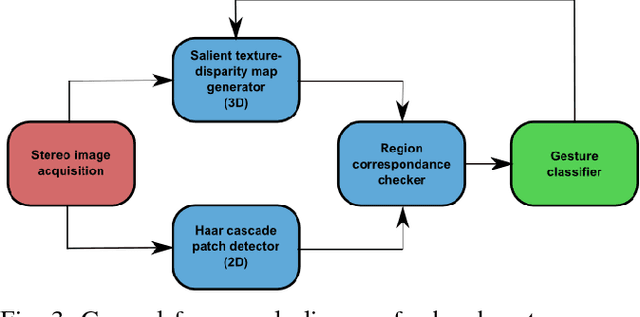

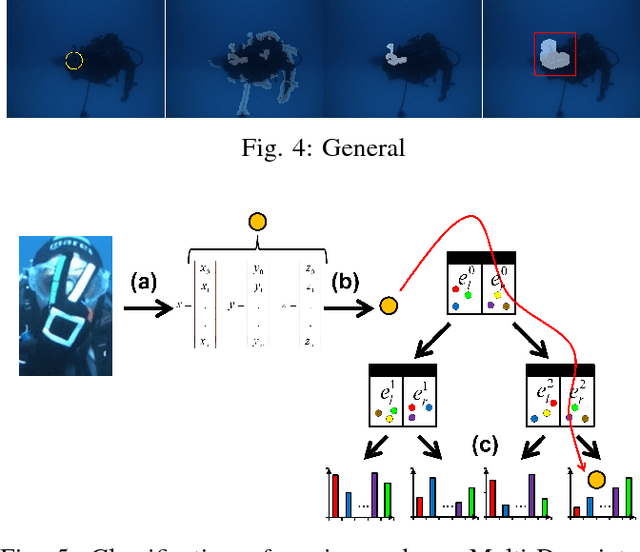

We propose a robust gesture-based communication pipeline that lets divers instruct an Autonomous Underwater Vehicle (AUV) to assist them in high-risk tasks and to help in an emergency. A gesture communication language (CADDIAN) is developed from consolidated and standardized diver gestures; it includes an alphabet, syntax and semantics, which ensures logical consistency. A hierarchical classification approach is introduced for hand gesture recognition, based on stereo imagery and multi-descriptor aggregation, specifically to cope with underwater image artifacts such as light backscatter and color attenuation. Once classification is finished, a syntax check filters out invalid command sequences, whether sent by the diver or generated by classifier errors. Throughout this process, the diver receives constant feedback from an underwater tablet and can acknowledge or abort the mission at any time. The objective is to prevent the AUV from executing unnecessary, infeasible or potentially harmful motions. Experimental results under different environmental conditions, in archaeological exploration and bridge inspection applications, show that the system performs well in the field.
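To illustrate the syntax-check step, the following is a minimal sketch of how a sequence of recognized gestures might be validated before anything is passed to the vehicle. The token names and the toy grammar are assumptions invented for illustration; they are not the actual CADDIAN alphabet or syntax, which the abstract does not specify.

```python
# Hypothetical token sets for illustration only; NOT the real CADDIAN alphabet.
START, END = "START", "END"
ACTIONS = {"UP", "DOWN", "TAKE_PHOTO", "FOLLOW_ME"}
ACTIONS_WITH_ARG = {"UP", "DOWN"}  # assumed to require a numeric argument


def is_valid_sequence(tokens):
    """Check a token list against the toy grammar: START command+ END.

    A command is an ACTION, followed by a NUMBER when the action is in
    ACTIONS_WITH_ARG. Any other sequence is rejected.
    """
    if len(tokens) < 3 or tokens[0] != START or tokens[-1] != END:
        return False
    body = tokens[1:-1]
    i = 0
    while i < len(body):
        action = body[i]
        if action not in ACTIONS:
            return False
        i += 1
        if action in ACTIONS_WITH_ARG:
            # The argument must be present and must be a digit string.
            if i >= len(body) or not body[i].isdigit():
                return False
            i += 1
    return True


if __name__ == "__main__":
    print(is_valid_sequence(["START", "UP", "2", "TAKE_PHOTO", "END"]))
    print(is_valid_sequence(["START", "UP", "END"]))
```

In this sketch a sequence is rejected in the same way whether the diver signed it incorrectly or the classifier mislabeled a gesture, which mirrors the filtering role the abstract describes.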
