Sebastian Zug

Dynamic System Model Generation for Online Fault Detection and Diagnosis of Robotic Systems

Jul 02, 2025

Abstract: With the rapid development of more complex robots, Fault Detection and Diagnosis (FDD) becomes increasingly hard. The need for predetermined models and historical data is especially problematic because these do not capture the dynamic and fast-changing nature of such systems. To this end, we propose a concept that actively generates a dynamic system model at runtime and utilizes it to locate root causes. The goal is to be applicable to all kinds of robotic systems that share a similar software design. Additionally, it should exhibit minimal overhead and reduce dependence on expert attention.

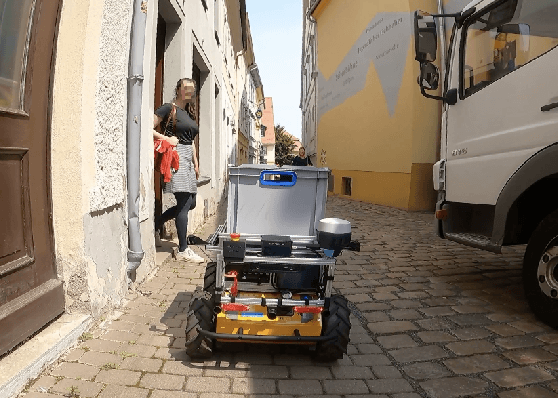

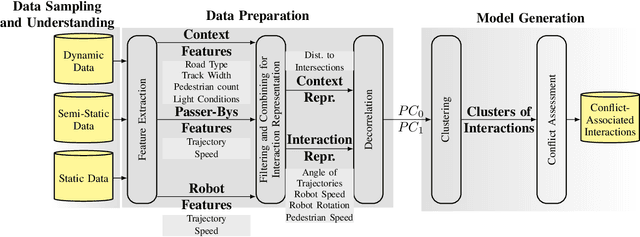

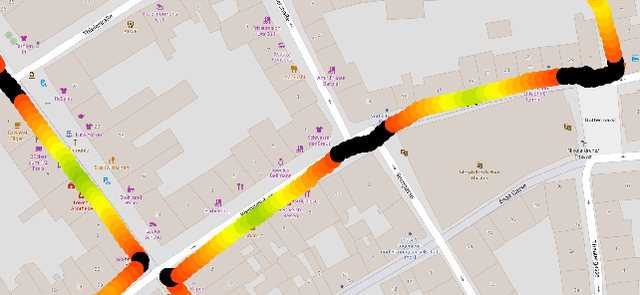

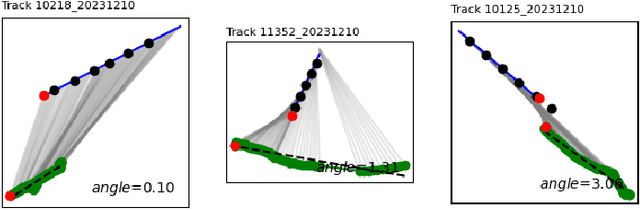

Using Unsupervised Learning to Explore Robot-Pedestrian Interactions in Urban Environments

May 20, 2024

Abstract: This study identifies a gap in data-driven approaches to robot-centric pedestrian interactions and proposes a corresponding pipeline. The pipeline utilizes unsupervised learning techniques to identify patterns in interaction data from urban environments, specifically focusing on conflict scenarios. Analyzed features include the robot's and pedestrian's speeds and contextual parameters such as proximity to intersections. They are extracted and reduced in dimensionality using Principal Component Analysis (PCA). Finally, K-means clustering is employed to uncover underlying patterns in the interaction data. A use case application of the pipeline is presented, utilizing real-world robot mission data from a mid-sized German city. The results indicate the need for enriching interaction representations with contextual information to enable fine-grained analysis and reasoning. Nevertheless, they also highlight the need for expanding the data set and incorporating additional contextual factors to enhance the robot's situational awareness and interaction quality.
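The feature reduction and clustering steps named in this abstract can be illustrated with a small sketch. It is not the authors' pipeline: the feature rows are invented, the function names are hypothetical, PCA is approximated by power iteration for the first component only, and K-means runs on that single projected dimension, all using only the Python standard library.

```python
import math

def standardize(rows):
    """Scale each feature column to zero mean and unit variance."""
    cols = list(zip(*rows))
    means = [sum(c) / len(c) for c in cols]
    stds = [math.sqrt(sum((x - m) ** 2 for x in c) / len(c)) or 1.0
            for c, m in zip(cols, means)]
    return [[(x - m) / s for x, m, s in zip(r, means, stds)] for r in rows]

def first_principal_component(rows, iterations=200):
    """Approximate the leading PCA direction of centered data by power iteration."""
    n, d = len(rows), len(rows[0])
    cov = [[sum(r[i] * r[j] for r in rows) / n for j in range(d)] for i in range(d)]
    v = [1.0] * d
    for _ in range(iterations):
        w = [sum(cov[i][j] * v[j] for j in range(d)) for i in range(d)]
        norm = math.sqrt(sum(x * x for x in w))
        v = [x / norm for x in w]
    return v

def kmeans_1d(values, k=2, iterations=20):
    """Plain K-means on scalar values (k >= 2), initialized at evenly spaced order statistics."""
    ordered = sorted(values)
    centers = [ordered[i * (len(ordered) - 1) // (k - 1)] for i in range(k)]
    labels = [0] * len(values)
    for _ in range(iterations):
        labels = [min(range(k), key=lambda c: abs(v - centers[c])) for v in values]
        for c in range(k):
            members = [v for v, lab in zip(values, labels) if lab == c]
            if members:
                centers[c] = sum(members) / len(members)
    return labels

# Hypothetical interaction records (not from the paper):
# (robot speed in m/s, pedestrian speed in m/s, distance to intersection in m)
interactions = [
    (0.4, 0.5, 2.0), (0.5, 0.4, 3.0), (0.3, 0.6, 2.5),
    (1.4, 1.5, 20.0), (1.5, 1.3, 22.0), (1.3, 1.6, 18.0),
]

scaled = standardize(interactions)
direction = first_principal_component(scaled)
projected = [sum(x * w for x, w in zip(row, direction)) for row in scaled]
labels = kmeans_1d(projected, k=2)
print(labels)
```

In this toy data the slow encounters near an intersection and the fast encounters far from one end up in different clusters. A real analysis would likely keep several principal components and use an established library implementation.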

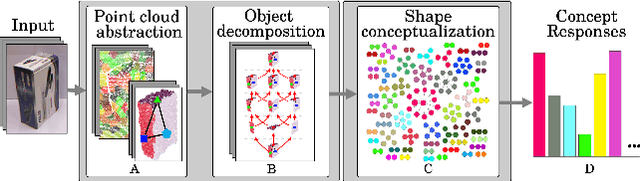

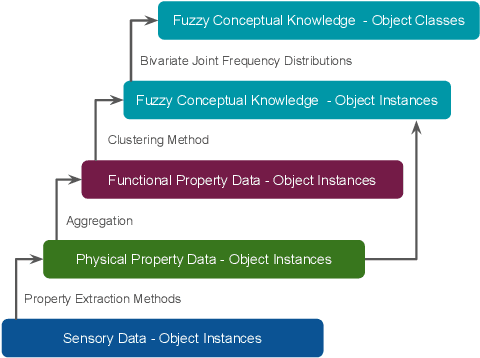

From Multi-modal Property Dataset to Robot-centric Conceptual Knowledge About Household Objects

Jun 26, 2019

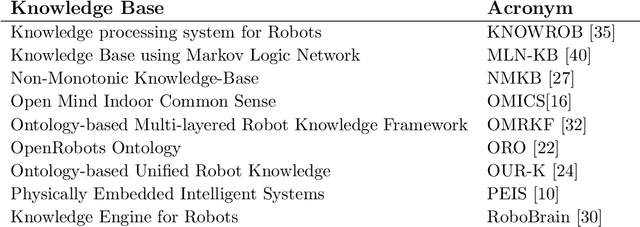

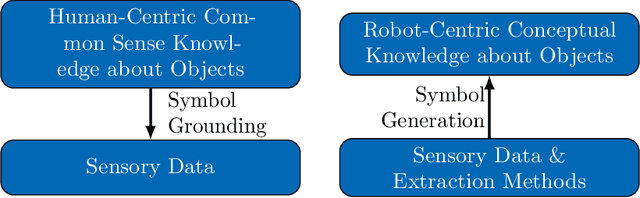

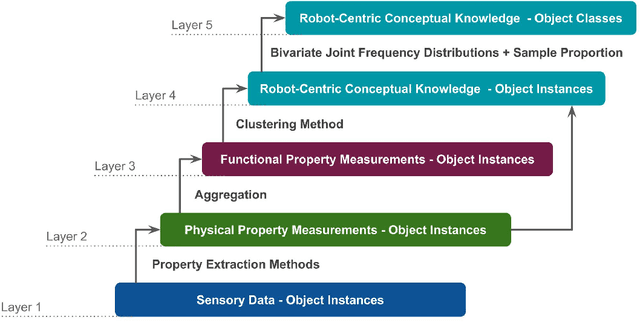

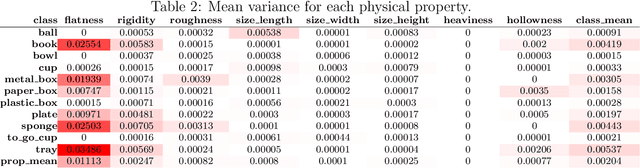

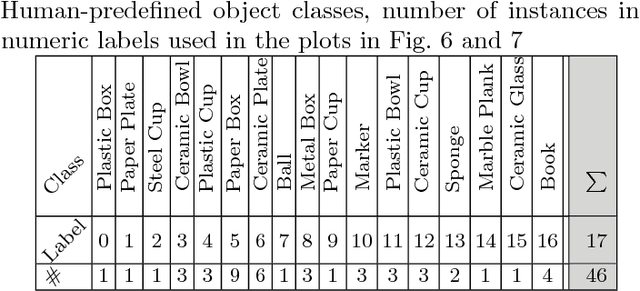

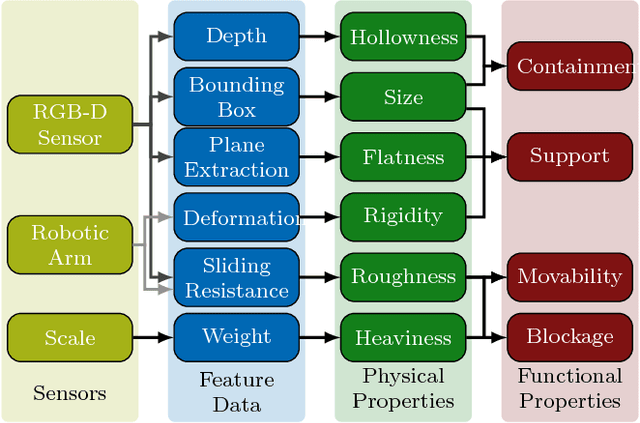

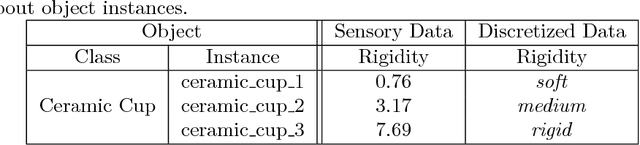

Abstract: Tool-use applications in robotics require conceptual knowledge about objects for informed decision making and object interactions. State-of-the-art methods employ hand-crafted symbolic knowledge which is defined from a human perspective and grounded into sensory data afterwards. However, due to the different sensing and acting capabilities of robots, their conceptual understanding of objects must be generated entirely from a robot's perspective, which calls for robot-centric conceptual knowledge about objects. With this goal in mind, this article argues that such knowledge should be based on physical and functional properties of objects. Consequently, a selection of ten properties is defined and corresponding extraction methods are proposed. This multi-modal property extraction forms the basis on which our second contribution, robot-centric knowledge generation, is built. It employs unsupervised clustering methods to transform numerical property data into symbols, and Bivariate Joint Frequency Distributions and Sample Proportion to generate conceptual knowledge about objects using the robot-centric symbols. A preliminary implementation of the proposed framework is employed to acquire a dataset comprising physical and functional property data of 110 household objects. This Robot-Centric dataSet (RoCS) is used to evaluate the framework regarding the property extraction methods, the semantics of the considered properties within the dataset and its usefulness in real-world applications such as tool substitution.
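As a rough illustration of the symbol generation and frequency steps described in this abstract, the sketch below turns numeric property values into symbols and computes the proportion of objects for each symbol pair. Everything in it is an assumption made for illustration: the property names, the measurements, and the fixed thresholds, which stand in for the unsupervised clustering the abstract mentions.

```python
from collections import Counter

def to_symbol(value, thresholds, prefix):
    """Map a numeric value to a symbol such as 'rigid_1'.

    Fixed thresholds are a simple stand-in for a clustering step.
    """
    level = sum(value > t for t in thresholds)
    return f"{prefix}_{level}"

def joint_proportions(pairs):
    """Bivariate joint frequency of symbol pairs, expressed as sample proportions."""
    counts = Counter(pairs)
    total = len(pairs)
    return {pair: n / total for pair, n in counts.items()}

# Hypothetical (rigidity, hollowness) measurements in [0, 1], one tuple per object.
objects = [(0.9, 0.1), (0.8, 0.2), (0.2, 0.9), (0.85, 0.15)]

pairs = [
    (to_symbol(r, [0.5], "rigid"), to_symbol(h, [0.5], "hollow"))
    for r, h in objects
]
props = joint_proportions(pairs)
print(props)
```

Here three of the four toy objects are both rigid and not hollow, so that symbol pair has a sample proportion of 0.75.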

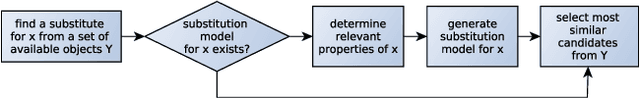

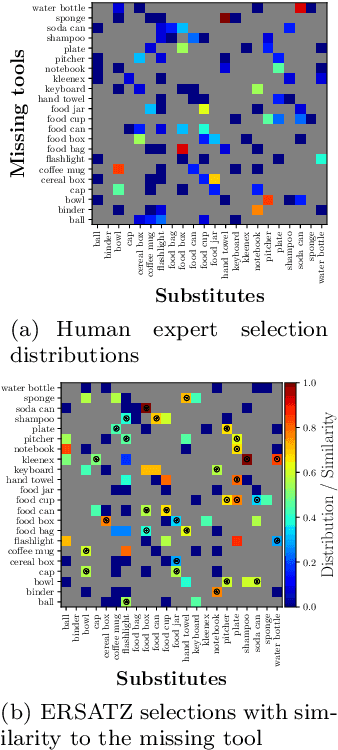

What Stands-in for a Missing Tool? A Prototypical Grounded Knowledge-based Approach to Tool Substitution

Oct 15, 2018

Abstract: When a robot is operating in a dynamic environment, it cannot be assumed that a tool required to solve a given task will always be available. In case of a missing tool, an ideal response would be to find a substitute to complete the task. In this paper, we present a proof of concept of a grounded knowledge-based approach to tool substitution. In order to validate the suitability of a substitute, we conducted experiments involving 22 substitution scenarios. The substitutes computed by the proposed approach were validated on the basis of the experts' choices for each scenario. Our evaluation showed that, in 20 out of 22 scenarios (91%), the approach identified the same substitutes as the experts.

Towards Robot-Centric Conceptual Knowledge Acquisition

Oct 08, 2018

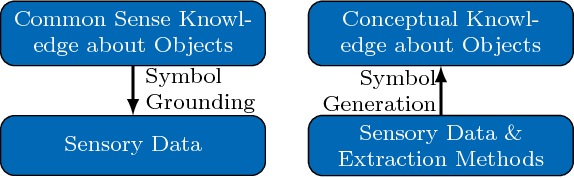

Abstract: Robots require knowledge about objects in order to efficiently perform various household tasks involving objects. The existing knowledge bases for robots acquire symbolic knowledge about objects from manually-coded external common sense knowledge bases such as ConceptNet, WordNet, etc. The problem with such approaches is the discrepancy between human-centric symbolic knowledge and robot-centric object perception, which stems from the robot's limited perception capabilities. Ultimately, a significant portion of the knowledge in the knowledge base remains ungrounded in the robot's perception. To overcome this discrepancy, we propose an approach to enable robots to generate robot-centric symbolic knowledge about objects from their own sensory data, thus allowing them to assemble their own conceptual understanding of objects. With this goal in mind, the presented paper elaborates on the work-in-progress of the proposed approach followed by the preliminary results.

Reasoning in complex environments with the SelectScript declarative language

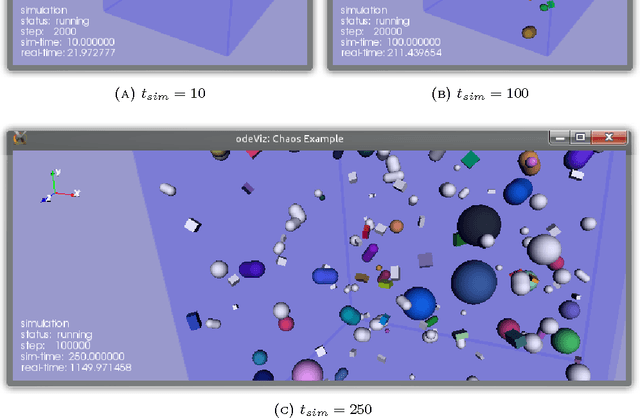

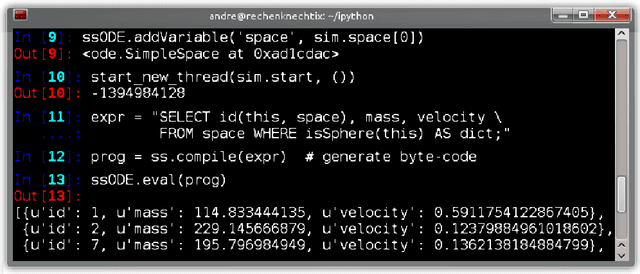

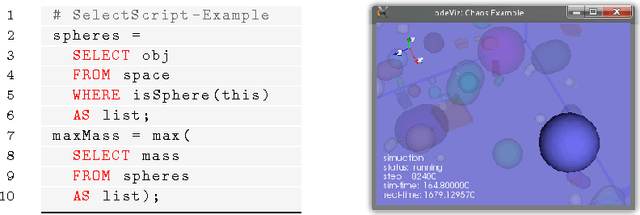

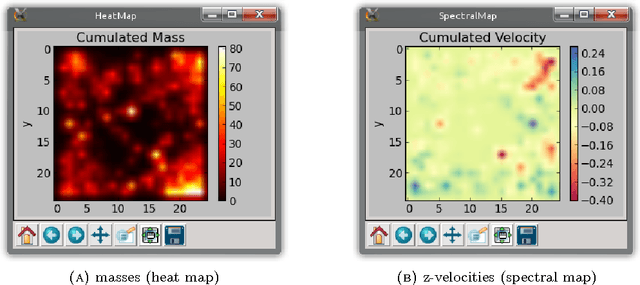

Oct 04, 2015

Abstract: SelectScript is an extendable, adaptable, and declarative domain-specific language aimed at information retrieval from simulation environments and robotic world models in an SQL-like manner. In this work we have extended the language in two directions. First, we have implemented hierarchical queries; second, we have improved efficiency by enabling manual design space exploration of different "search" strategies. We demonstrate the applicability of these extensions on two application problems: the basic language concepts are explained by solving the classical Towers of Hanoi problem, and then a common path planning problem in a complex 3D environment is implemented.
