Unsupervised Watertight Mesh Generation for Physics Simulation Applications Using Growing Neural Gas on Noisy Free-Form Object Models

Paper and Code

Sep 03, 2016

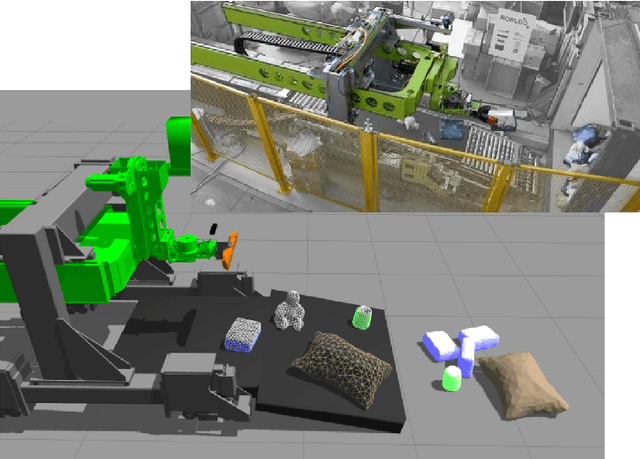

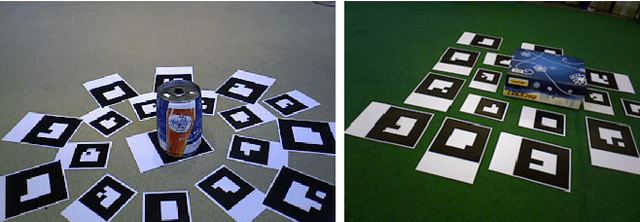

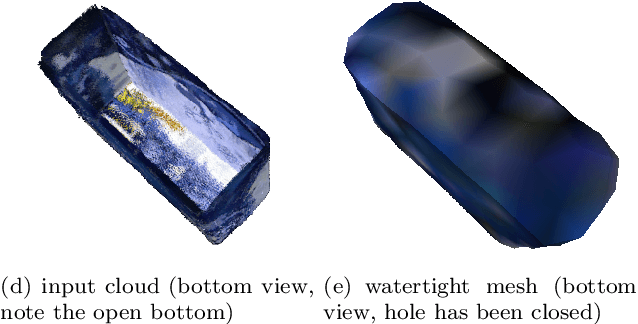

We present a framework that generates watertight mesh representations, without supervision, from noisy point clouds of complex, heterogeneous objects with free-form surfaces. The resulting meshes are ready for use in applications such as kinematics and dynamics simulation, where watertightness and fast processing are the main quality criteria. The framework requires no user interaction; it relies mainly on a modified Growing Neural Gas technique for surface reconstruction, combined with several post-processing steps. In contrast to existing methods, the proposed framework copes with input point clouds generated by consumer-grade RGBD sensors and works even if the input data contains large holes, e.g. a missing bottom that was not covered by the sensor. Additionally, we describe a method to optimize the parameters of our framework without supervision in order to improve generalization quality while keeping the resulting meshes as consistent as possible with the original object in terms of visual and geometric properties.
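To make the watertightness criterion concrete, the following is a minimal sketch of a common edge-based test for triangle meshes: a closed manifold mesh has every undirected edge shared by exactly two triangles. This is an illustration, not the framework's own check; the function names `problem_edges` and `is_watertight` and the tetrahedron data are hypothetical, and the test does not cover face orientation or self-intersections.

```python
from collections import Counter


def problem_edges(triangles):
    """Return undirected edges that are NOT shared by exactly two triangles.

    An edge used once lies on the border of a hole; an edge used three or
    more times is non-manifold. `triangles` is a list of vertex-index triples.
    """
    counts = Counter()
    for a, b, c in triangles:
        for u, v in ((a, b), (b, c), (c, a)):
            # Store each edge with sorted endpoints so (u, v) == (v, u).
            counts[(min(u, v), max(u, v))] += 1
    return sorted(edge for edge, n in counts.items() if n != 2)


def is_watertight(triangles):
    """Edge-based watertightness test (assumption: a necessary condition only)."""
    return len(triangles) > 0 and not problem_edges(triangles)


# Hypothetical example data: a closed tetrahedron on vertices 0..3.
tetrahedron = [(0, 1, 2), (0, 3, 1), (1, 3, 2), (2, 3, 0)]

# Dropping the face (0, 1, 2) mimics a missing bottom not seen by the sensor.
open_tetrahedron = tetrahedron[1:]

print(is_watertight(tetrahedron))       # closed surface
print(is_watertight(open_tetrahedron))  # surface with a hole
print(problem_edges(open_tetrahedron))  # edges bordering the hole
```

In this sketch the open tetrahedron fails the test because the three edges of the removed face are each used by only one remaining triangle, which is the situation a hole-closing post-processing step would have to resolve.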
