Tobias Fromm

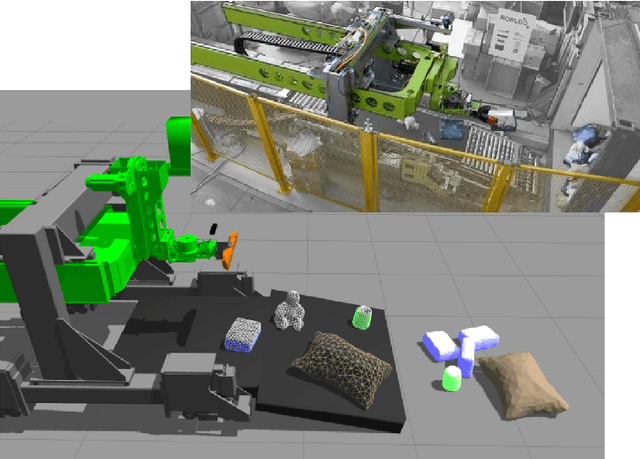

Self-Supervised Damage-Avoiding Manipulation Strategy Optimization via Mental Simulation

Dec 20, 2017

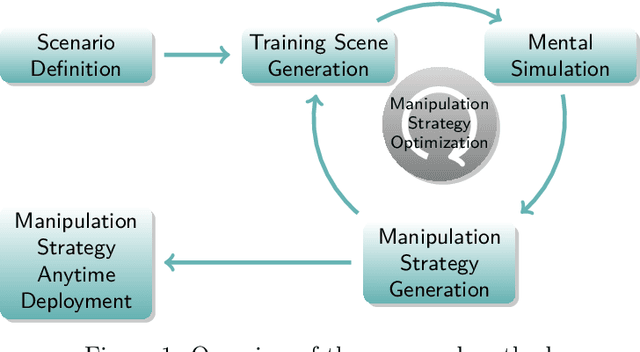

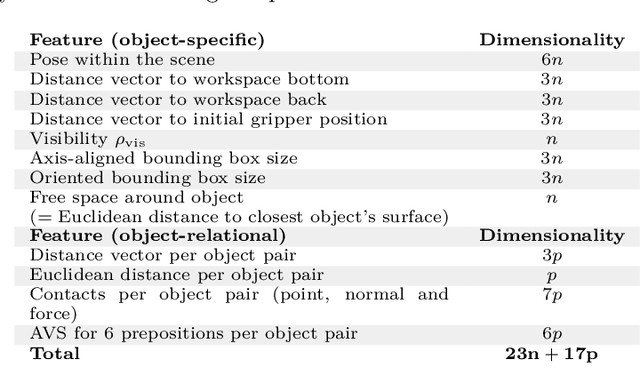

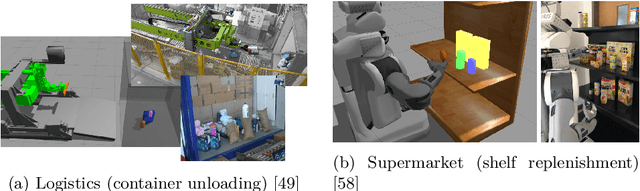

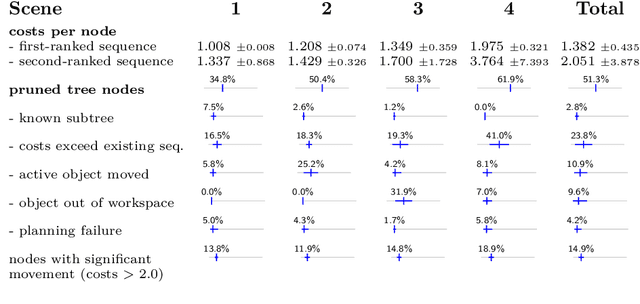

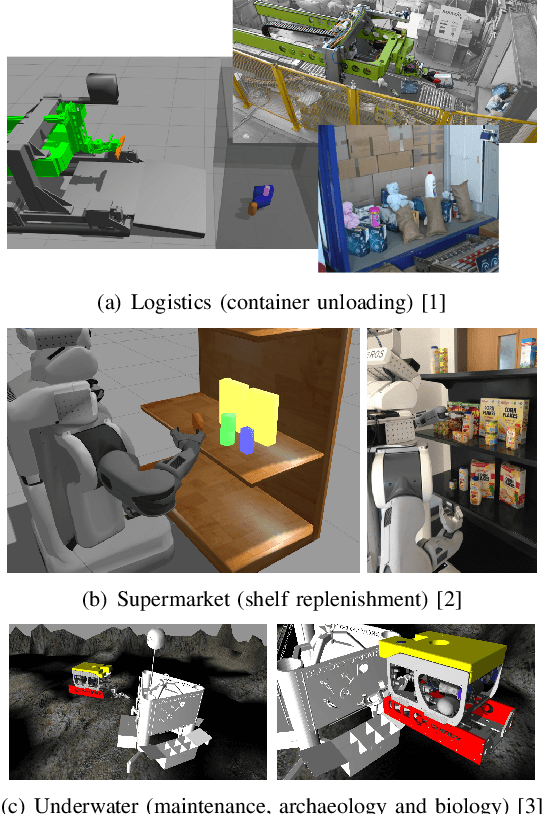

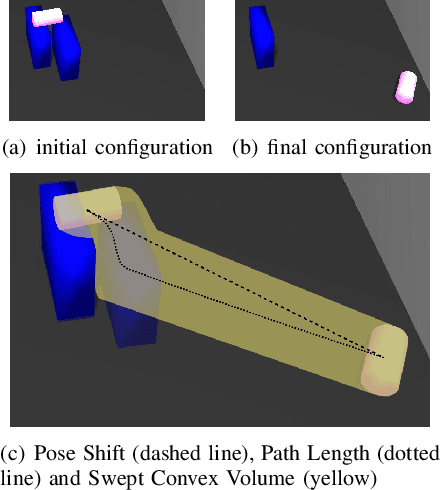

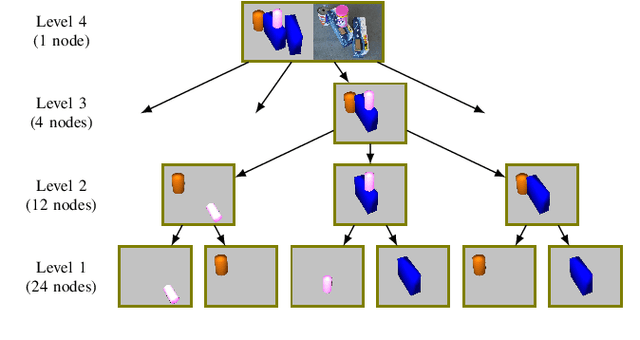

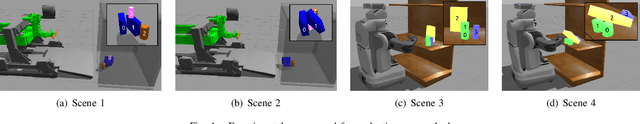

Abstract: Everyday robots face the challenge of handling products autonomously in applications like logistics or retail, possibly causing damage to the items during manipulation. Traditionally, most approaches try to minimize physical interaction with goods. Instead, we propose to take into account any unintended motion of objects in the scene and to learn, in a self-supervised way, manipulation strategies that minimize the potential damage. The presented approach consists of a planning method that determines the optimal sequence in which to manipulate a number of objects in a scene with respect to possible damage, by simulating the interaction and thereby anticipating scene dynamics. The planned manipulation sequences are used as input to a machine learning process that generalizes to new, unseen scenes in the same application scenario. This learned manipulation strategy is continuously refined in a self-supervised optimization cycle during load-free times of the system. Such a simulation-in-the-loop setup is commonly known as mental simulation and, as opposed to classical supervised learning approaches, allows for efficient, fully automatic generation of training data. In parallel, the generated manipulation strategies can be deployed in near-real time in an anytime fashion. We evaluate our approach on one industrial scenario (autonomous container unloading) and one retail scenario (autonomous shelf replenishment).

Physics-Based Damage-Aware Manipulation Strategy Planning Using Scene Dynamics Anticipation

Dec 02, 2016

Abstract: We present a damage-aware planning approach that determines the best sequence in which to manipulate a number of objects in a scene. It operates at the task-planning level, abstracts from motion planning, and anticipates the dynamics of the scene using a physics simulation. Instead of avoiding interaction with the environment, we take unintended motion of other objects into account and plan manipulation sequences that minimize the potential damage. Our method can also serve as a validation measure to judge planned motions for their feasibility in terms of damage avoidance. We evaluate our approach on one industrial scenario (autonomous container unloading) and one retail scenario (shelf replenishment).

* published at IROS 2016
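
The core idea of the planning approach above, choosing the object order that minimizes anticipated damage, can be illustrated with a small search sketch. This is not the authors' implementation: it assumes a hypothetical damage oracle `damage(removed, obj)` standing in for the physics simulation, plus a toy stacking model (`RESTS_ON`, `FRAGILITY`) invented purely for illustration. Uniform-cost search over the set of already-removed objects returns an order with minimal total damage, provided all damage values are non-negative.

```python
import heapq
import itertools
from typing import Callable, Dict, FrozenSet, List, Tuple

# Hypothetical damage oracle: (objects already removed, object to remove next)
# -> non-negative damage. In the paper a physics simulation fills this role.
DamageFn = Callable[[FrozenSet[str], str], float]


def plan_sequence(objects: List[str], damage: DamageFn) -> Tuple[float, List[str]]:
    """Uniform-cost search over removal orders minimizing total simulated damage."""
    goal = frozenset(objects)
    tie = itertools.count()  # tie-breaker so heapq never compares sets or lists
    frontier = [(0.0, next(tie), frozenset(), [])]
    best: Dict[FrozenSet[str], float] = {frozenset(): 0.0}
    while frontier:
        cost, _, removed, order = heapq.heappop(frontier)
        if removed == goal:
            return cost, order
        if cost > best[removed]:
            continue  # stale entry: a cheaper path to this state was found
        for obj in objects:
            if obj in removed:
                continue
            nxt = removed | {obj}
            new_cost = cost + damage(removed, obj)
            if new_cost < best.get(nxt, float("inf")):
                best[nxt] = new_cost
                heapq.heappush(frontier, (new_cost, next(tie), nxt, order + [obj]))
    raise ValueError("no complete sequence found")


# Toy stand-in for the physics simulation (hypothetical): removing an object
# makes every object still resting on it fall, costing that object's fragility.
RESTS_ON = {"box_top": "box_bottom", "bottle": "box_bottom"}
FRAGILITY = {"box_top": 1.0, "bottle": 5.0, "box_bottom": 1.0}


def toy_damage(removed: FrozenSet[str], obj: str) -> float:
    return sum(FRAGILITY[o] for o, base in RESTS_ON.items()
               if base == obj and o not in removed)


cost, order = plan_sequence(list(FRAGILITY), toy_damage)
print(cost, order)  # prints: 0.0 ['box_top', 'bottle', 'box_bottom']
```

In this toy scene the search removes `box_bottom` last, since pulling it earlier would drop the objects resting on it. The number of search states grows as 2^n in the number of objects, so this exhaustive variant only suits small scenes; the search strategy used in the paper may differ.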

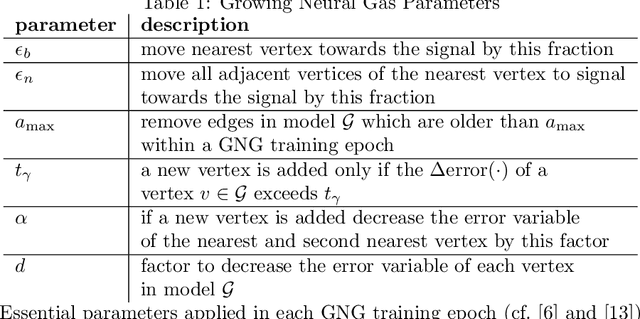

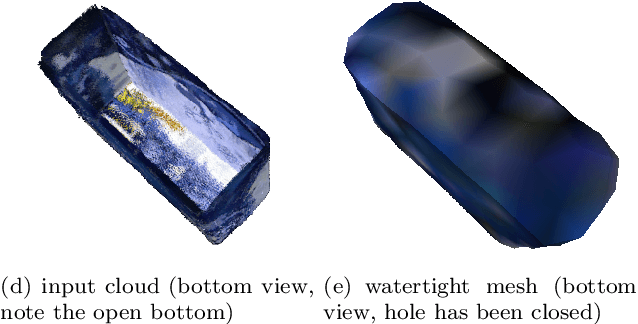

Unsupervised Watertight Mesh Generation for Physics Simulation Applications Using Growing Neural Gas on Noisy Free-Form Object Models

Sep 03, 2016

Abstract: We present a framework to generate watertight mesh representations in an unsupervised manner from noisy point clouds of complex, heterogeneous objects with free-form surfaces. The resulting meshes are ready to use in applications like kinematics and dynamics simulation, where watertightness and fast processing are the main quality criteria. The framework requires no user interaction; it mainly relies on a modified Growing Neural Gas technique for surface reconstruction combined with several post-processing steps. In contrast to existing methods, the proposed framework can cope with input point clouds generated by consumer-grade RGBD sensors and works even if the input data contains large holes, e.g. a missing bottom that was not covered by the sensor. Additionally, we explain a method to optimize the parameters of our framework in an unsupervised manner in order to improve generalization quality while keeping the resulting meshes as consistent as possible with the original object regarding visual and geometric properties.
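
For readers unfamiliar with Growing Neural Gas, the following sketches the standard algorithm (Fritzke, 1995) on which the framework above builds. The paper uses a modified variant, and the post-processing steps that turn the resulting graph into a watertight mesh are omitted here. The function name `growing_neural_gas`, its parameter names, and the default values are illustrative assumptions, not those of the paper.

```python
import math
import random
from typing import Dict, List, Tuple

Point = Tuple[float, float, float]


def growing_neural_gas(points: List[Point], max_nodes: int = 50, steps: int = 5000,
                       eps_b: float = 0.05, eps_n: float = 0.006, max_age: int = 50,
                       lam: int = 100, alpha: float = 0.5, decay: float = 0.995,
                       seed: int = 0):
    """Standard GNG (Fritzke, 1995). Returns node positions and a list of edges."""
    rng = random.Random(seed)
    nodes: Dict[int, List[float]] = {0: list(rng.choice(points)), 1: list(rng.choice(points))}
    error: Dict[int, float] = {0: 0.0, 1: 0.0}
    edges: Dict[Tuple[int, int], int] = {}  # (i, j) with i < j -> edge age
    next_id = 2

    def key(a: int, b: int) -> Tuple[int, int]:
        return (a, b) if a < b else (b, a)

    def neighbors(n: int) -> List[int]:
        return [j if i == n else i for (i, j) in edges if n in (i, j)]

    for step in range(1, steps + 1):
        x = rng.choice(points)
        # Find the nearest (s1) and second-nearest (s2) node to the sample.
        s1, s2 = sorted(nodes, key=lambda n: math.dist(nodes[n], x))[:2]
        # Age all edges at s1 and accumulate its squared distance as error.
        for e in edges:
            if s1 in e:
                edges[e] += 1
        error[s1] += math.dist(nodes[s1], x) ** 2
        # Move s1 (rate eps_b) and its direct neighbours (rate eps_n) towards x.
        for n, eps in [(s1, eps_b)] + [(m, eps_n) for m in neighbors(s1)]:
            nodes[n] = [p + eps * (q - p) for p, q in zip(nodes[n], x)]
        # Create or refresh the s1-s2 edge, drop old edges, then isolated nodes.
        edges[key(s1, s2)] = 0
        edges = {e: age for e, age in edges.items() if age <= max_age}
        connected = {i for e in edges for i in e}
        for n in [n for n in nodes if n not in connected]:
            del nodes[n], error[n]
        # Every lam steps, insert a node halfway between the highest-error node
        # q and its highest-error neighbour f.
        if step % lam == 0 and len(nodes) < max_nodes:
            q = max(nodes, key=error.get)
            f = max(neighbors(q), key=error.get)
            r, next_id = next_id, next_id + 1
            nodes[r] = [(a + b) / 2 for a, b in zip(nodes[q], nodes[f])]
            del edges[key(q, f)]
            edges[key(q, r)] = 0
            edges[key(f, r)] = 0
            error[q] *= alpha
            error[f] *= alpha
            error[r] = error[q]
        # Decay all accumulated errors.
        for n in error:
            error[n] *= decay
    return nodes, list(edges)


# Usage: sample points on the unit sphere and fit a GNG graph to them.
rng = random.Random(1)
sphere: List[Point] = []
for _ in range(2000):
    v = [rng.gauss(0.0, 1.0) for _ in range(3)]
    norm = math.sqrt(sum(c * c for c in v))
    sphere.append((v[0] / norm, v[1] / norm, v[2] / norm))
nodes, edges = growing_neural_gas(sphere)
print(len(nodes), "nodes,", len(edges), "edges")
```

The output is a graph of nodes and edges approximating the sampled surface, not yet a mesh; obtaining a watertight triangle mesh requires the additional post-processing that the abstract mentions.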

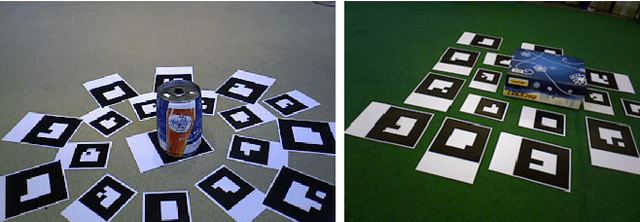

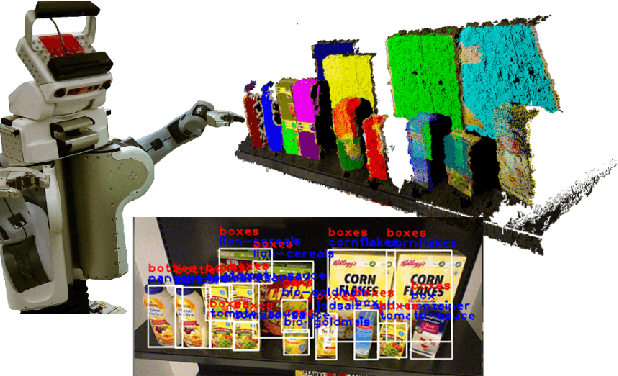

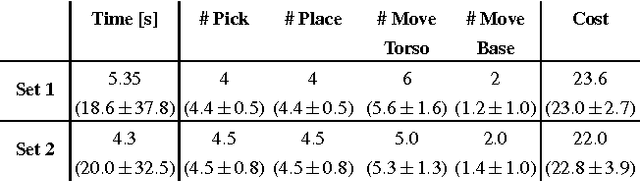

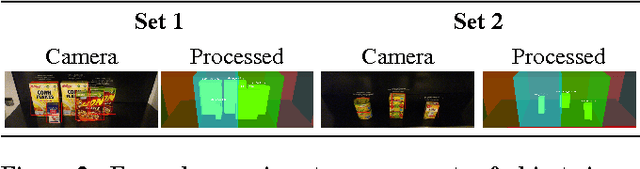

Knowledge-Enabled Robotic Agents for Shelf Replenishment in Cluttered Retail Environments

May 13, 2016

Abstract: Autonomous robots in unstructured and dynamically changing retail environments have to master complex perception, knowledge-processing, and manipulation tasks. To enable them to act competently, we propose a framework based on three core components: (i) a knowledge-enabled perception system, capable of combining diverse information sources to cope with occlusions and stacked objects with a variety of textures and shapes; (ii) knowledge-processing methods that produce strategies for tidying up supermarket racks; and (iii) the manipulation skills needed in confined spaces to arrange objects in semi-accessible rack shelves. We demonstrate our framework in a simulated environment as well as on a real shopping rack using a PR2 robot. Typical supermarket products are detected and rearranged in the retail rack, tidying up items that were found to be misplaced.

* published in the proceedings of AAMAS 2016 as an extended abstract