Xiaoling Long

Social Recommendation with Self-Supervised Metagraph Informax Network

Oct 08, 2021

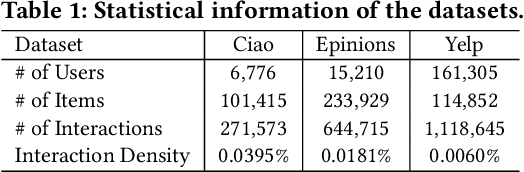

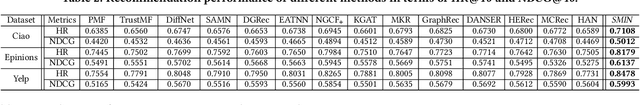

Abstract: In recent years, researchers have attempted to use online social information to alleviate data sparsity in collaborative filtering, on the rationale that social networks offer insights into users' behavioral patterns. However, because they overlook inter-dependent knowledge across items (e.g., product categories), existing social recommender systems cannot adequately distill the heterogeneous collaborative signals from both the user and item sides. In this work, we propose a Self-Supervised Metagraph Informax Network (SMIN), which investigates the potential of jointly incorporating social- and knowledge-aware relational structures into the user preference representation for recommendation. To model relation heterogeneity, we design a metapath-guided heterogeneous graph neural network that aggregates feature embeddings from different types of meta-relations across users and items, empowering SMIN to maintain dedicated representations for multi-faceted user- and item-wise dependencies. Additionally, to inject high-order collaborative signals, we generalize the mutual information learning paradigm under self-supervised graph-based collaborative filtering. This enables expressive modeling of user-item interaction patterns by exploring global-level collaborative relations and the underlying isomorphic transformation property of graph topology. Experimental results on several real-world datasets demonstrate the effectiveness of our SMIN model over various state-of-the-art recommendation methods. We release our source code at https://github.com/SocialRecsys/SMIN.

Improved Visual-Inertial Localization for Low-cost Rescue Robots

Nov 17, 2020

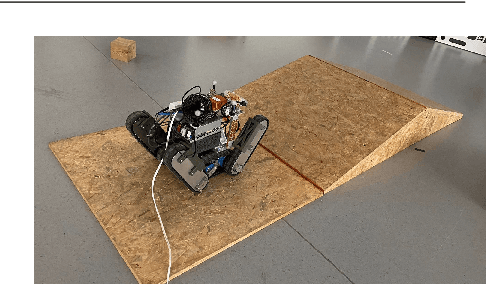

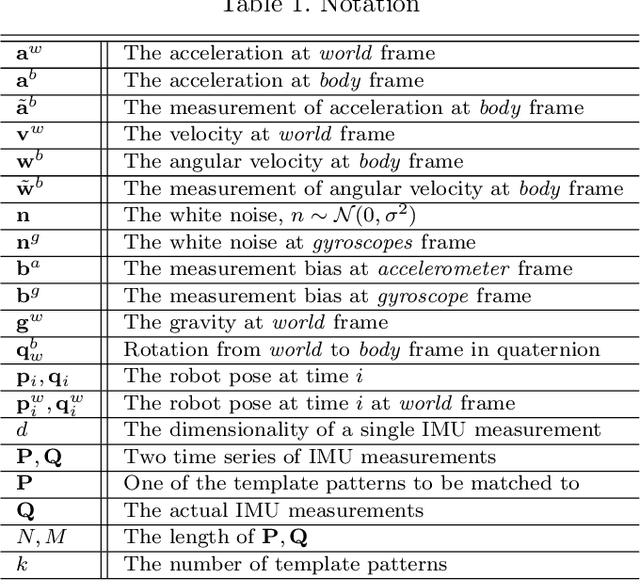

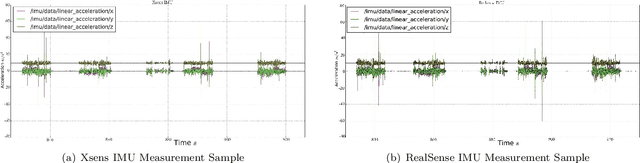

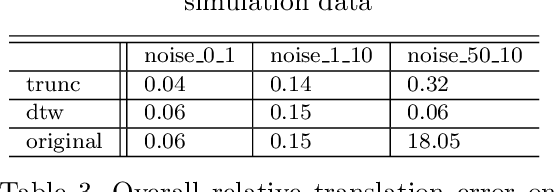

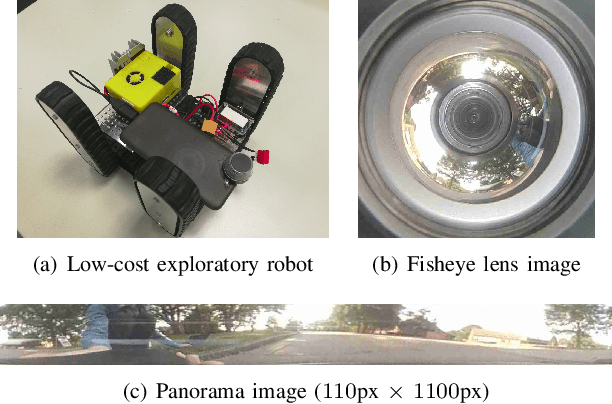

Abstract: This paper improves visual-inertial systems to boost the localization accuracy of low-cost rescue robots. When robots traverse rugged terrain, pose estimation suffers from large noise in the inertial sensor measurements caused by ground contact forces, especially with low-cost sensors. We therefore propose Threshold-based and Dynamic Time Warping-based methods to detect abnormal measurements and mitigate such faults. The two methods are embedded into the popular VINS-Mono system to evaluate their performance. Experiments on simulated and real robot data show that both methods increase pose estimation accuracy. Moreover, the Threshold-based method performs better when the noise is small, while the Dynamic Time Warping-based method shows greater potential under large noise.
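The two detection ideas named in the abstract can be illustrated with a minimal sketch. The abstract gives no implementation details, so everything below is an assumption for illustration: the function names `flag_by_threshold` and `dtw_distance`, the sliding-median reference, the window size, and the absolute-difference cost are hypothetical choices, not the paper's method or part of VINS-Mono.

```python
from statistics import median


def flag_by_threshold(samples, threshold, window=5):
    """Flag samples that deviate from a local median by more than `threshold`.

    Assumption: a sliding median serves as the reference signal; the paper
    may use a different reference.
    """
    flags = []
    for i, value in enumerate(samples):
        lo = max(0, i - window)
        hi = min(len(samples), i + window + 1)
        reference = median(samples[lo:hi])
        flags.append(abs(value - reference) > threshold)
    return flags


def dtw_distance(a, b):
    """Classic dynamic time warping distance between two 1-D sequences.

    Uses the absolute difference as local cost and allows the usual three
    moves (match, insertion, deletion).
    """
    inf = float("inf")
    n, m = len(a), len(b)
    cost = [[inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            local = abs(a[i - 1] - b[j - 1])
            cost[i][j] = local + min(
                cost[i - 1][j],      # a advances, b repeats
                cost[i][j - 1],      # b advances, a repeats
                cost[i - 1][j - 1],  # both advance
            )
    return cost[n][m]


# Hypothetical accelerometer readings with one spike at index 3.
readings = [0.0, 0.1, 0.0, 5.0, 0.1, 0.0, 0.1]
spike_flags = flag_by_threshold(readings, threshold=1.0, window=2)
```

A large DTW distance between a measured window and an expected signal could be treated as a sign of abnormal measurements; how the paper chooses the expected signal and the decision rule is not stated in the abstract.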

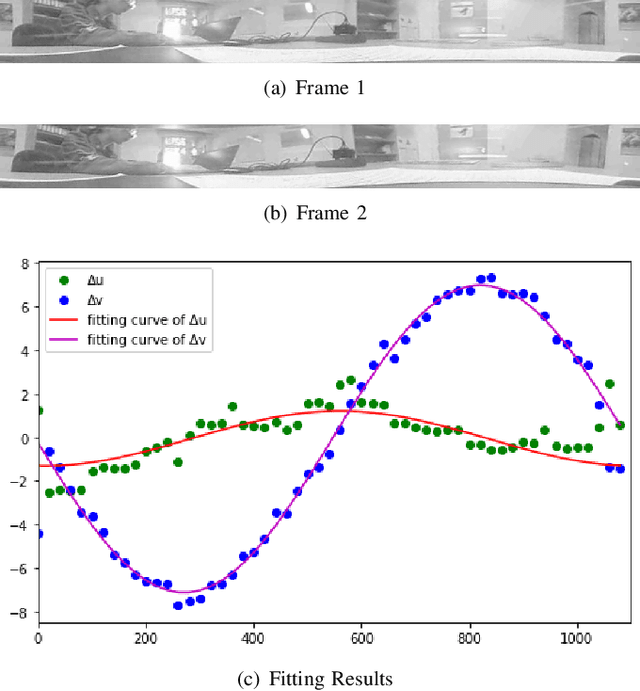

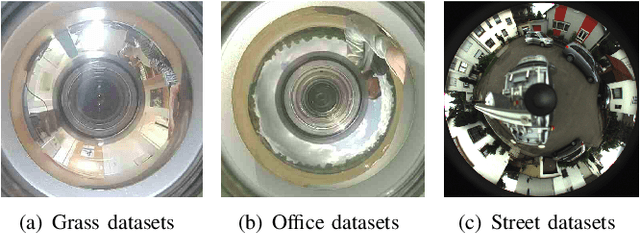

Pose Estimation for Omni-directional Cameras using Sinusoid Fitting

Oct 03, 2019

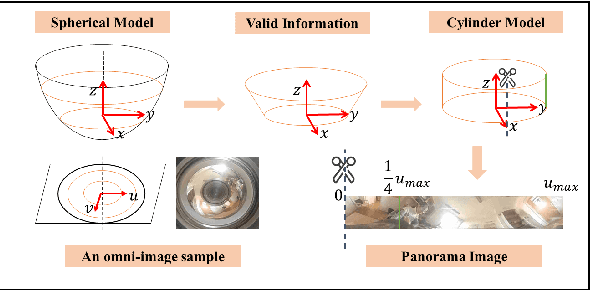

Abstract: We propose a novel pose estimation method for geometric vision with omni-directional cameras. Based on the regularity of pixel movement after a change in camera pose, we formulate and prove the sinusoidal relationship between pixel movement and camera motion. We use the improved Fourier-Mellin invariant (iFMI) algorithm to find the motion of pixels, which has been shown to be more accurate and robust than feature-based methods. While iFMI works only on pin-hole model images and estimates 4 parameters (x, y, yaw, scaling), our method works on panoramic images and estimates the full 6 DoF 3D transform, up to an unknown scale factor. To do so, we fit the motion of the pixels in the panoramic images, as determined by iFMI, to two sinusoidal functions. The offsets, amplitudes and phase shifts of the two functions then represent the 3D rotation and translation of the camera between the two images. We perform experiments for 3D rotation, which show that our algorithm outperforms feature-based methods in accuracy and robustness. We leave the more complex 3D translation experiments for future work.
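The fitting step can be sketched as a generic least-squares sinusoid fit. The abstract does not give the exact parametrization, so the model `shift = offset + amplitude * sin(angle + phase)`, the choice of the panorama column angle as the independent variable, and the names `fit_sinusoid` and `solve_linear` are assumptions made for illustration only.

```python
import math


def solve_linear(a, b):
    """Solve a small dense system a x = b (Gaussian elimination, partial pivoting)."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        tail = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - tail) / m[r][r]
    return x


def fit_sinusoid(angles, shifts):
    """Fit shifts ~ offset + amplitude * sin(angle + phase).

    The model is linear in (offset, a, b) after rewriting it as
    offset + a * sin(angle) + b * cos(angle), so the normal equations suffice.
    """
    rows = [[1.0, math.sin(t), math.cos(t)] for t in angles]
    ata = [[sum(r[i] * r[j] for r in rows) for j in range(3)] for i in range(3)]
    atb = [sum(r[i] * y for r, y in zip(rows, shifts)) for i in range(3)]
    offset, a, b = solve_linear(ata, atb)
    return offset, math.hypot(a, b), math.atan2(b, a)


# Synthetic, noise-free pixel shifts sampled at 36 hypothetical column angles.
angles = [2 * math.pi * k / 36 for k in range(36)]
shifts = [0.5 + 2.0 * math.sin(t + 0.3) for t in angles]
offset, amplitude, phase = fit_sinusoid(angles, shifts)
```

On this synthetic input the fit recovers the offset, amplitude and phase used to generate the data. Mapping those three values per sinusoid to rotation and translation follows the paper's derivation, which the abstract does not reproduce.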

Path Planning Tolerant to Degraded Locomotion Conditions

Sep 23, 2019

Abstract: Mobile robots, especially those driving outdoors and in unstructured terrain, sometimes suffer from failures and errors in locomotion, such as unevenly pressurized or flat tires, loose axles or de-tracked tracks. These errors go unnoticed by the odometry of the robot. Other factors that influence the locomotion performance of the robot, such as the weight and distribution of the payload, the terrain over which the robot is driving or the battery charge, cannot be compensated for by the PID speed or position controller of the robot because of the physical limits of the system. Traditional planning systems are oblivious to those problems and may thus plan infeasible trajectories. Likewise, path-following modules that are oblivious to those problems will generate sub-optimal motion patterns, if they reach the goal at all. In this paper, we present an adaptive path planning algorithm that is tolerant to such degraded locomotion conditions. We do this by constantly observing the executed motions of the robot via simultaneous localization and mapping (SLAM). From the executed path and the given motion commands, we continuously collect and cluster motion primitives (MP) on the fly, which are in turn used for planning. The robot can therefore automatically detect and adapt to different locomotion conditions and reflect them in the planned paths.
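The idea of learning motion primitives from executed motions can be sketched as follows. This is a simplified illustration under stated assumptions: grouping observations by the issued command and averaging them stands in for the clustering step, and the function name `collect_primitives`, the command labels, and the `(dx, dy, dtheta)` displacement format are all hypothetical, not taken from the paper.

```python
from collections import defaultdict


def collect_primitives(observations):
    """Average the executed displacement observed for each motion command.

    `observations` is an iterable of (command, (dx, dy, dtheta)) pairs, where
    the displacement is the motion actually executed (e.g. as estimated by
    SLAM) in the robot frame. Averaging dtheta directly assumes small angles.
    """
    sums = defaultdict(lambda: [0.0, 0.0, 0.0, 0])
    for command, (dx, dy, dtheta) in observations:
        acc = sums[command]
        acc[0] += dx
        acc[1] += dy
        acc[2] += dtheta
        acc[3] += 1
    return {
        command: (sx / n, sy / n, st / n)
        for command, (sx, sy, st, n) in sums.items()
    }


# Hypothetical log: the "forward" command drifts sideways on a flat tire.
log = [
    ("forward", (1.0, 0.0, 0.0)),
    ("forward", (0.8, 0.1, 0.0)),
    ("left", (0.5, 0.2, 0.3)),
]
primitives = collect_primitives(log)
```

A planner could then expand search nodes with the learned displacements instead of the nominal ones, so that planned paths reflect how the robot currently moves.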
