Depth Estimation on Underwater Omni-directional Images Using a Deep Neural Network


May 23, 2019

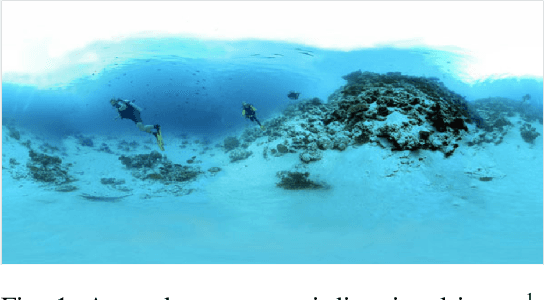

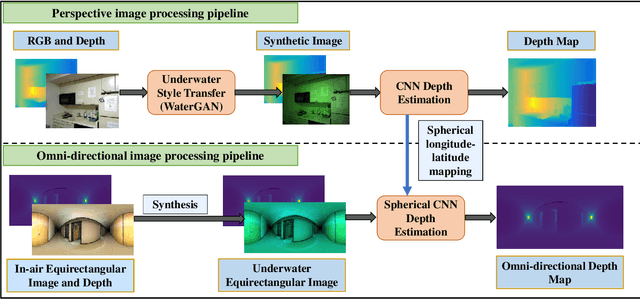

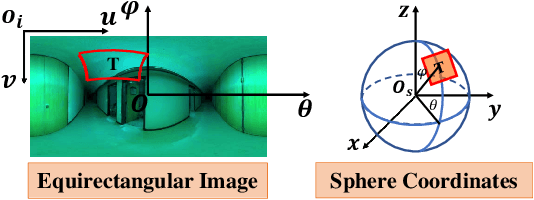

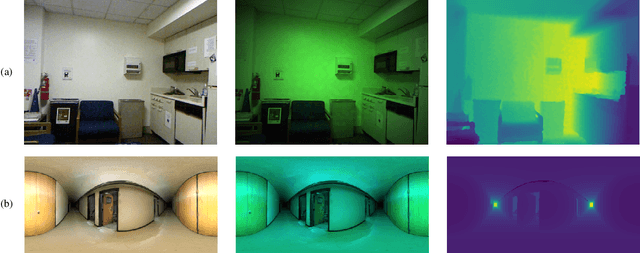

In this work, we adapt a Fully Convolutional Residual Neural Network (FCRN), originally designed for depth estimation on in-air perspective images, to estimate depth in underwater perspective and omni-directional images. We train one conventional FCRN for underwater perspective images and one spherical FCRN for underwater omni-directional images. The spherical FCRN is derived from the perspective FCRN via a spherical longitude-latitude mapping: the omni-directional camera is modeled as a sphere, and the images it captures are represented in longitude-latitude form. Because underwater datasets are lacking, we synthesize images in both a data-driven and a theoretical way and use them for training and testing. Finally, experiments are conducted on these synthetic images, and the results are presented both qualitatively and quantitatively. The comparison between the ground truth and the estimated depth maps indicates the effectiveness of our method.
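The longitude-latitude representation mentioned in the abstract can be illustrated with a minimal sketch. It assumes a standard equirectangular layout (longitude across the image width, latitude down the height) and a particular axis convention (y up, z forward); neither is stated in the abstract. The function names `pixel_to_sphere` and `sphere_to_pixel` are hypothetical and are not taken from the authors' code.

```python
import math


def pixel_to_sphere(u, v, width, height):
    """Map a longitude-latitude image pixel (u, v) to a unit direction vector.

    Assumed convention (not from the paper): pixel centers sit at +0.5,
    longitude spans [-pi, pi) left to right, latitude spans +pi/2 (top)
    to -pi/2 (bottom), y points up and z points forward.
    """
    lon = (u + 0.5) / width * 2.0 * math.pi - math.pi
    lat = math.pi / 2.0 - (v + 0.5) / height * math.pi
    x = math.cos(lat) * math.sin(lon)
    y = math.sin(lat)
    z = math.cos(lat) * math.cos(lon)
    return x, y, z


def sphere_to_pixel(x, y, z, width, height):
    """Inverse mapping: project a 3D direction back to pixel coordinates."""
    norm = math.sqrt(x * x + y * y + z * z)
    lon = math.atan2(x, z)
    # Clamp to guard against rounding slightly outside [-1, 1].
    lat = math.asin(max(-1.0, min(1.0, y / norm)))
    u = (lon + math.pi) / (2.0 * math.pi) * width - 0.5
    v = (math.pi / 2.0 - lat) / math.pi * height - 0.5
    return u, v


if __name__ == "__main__":
    w, h = 512, 256
    direction = pixel_to_sphere(10, 20, w, h)
    print(direction, sphere_to_pixel(*direction, w, h))
```

Under this convention, every pixel of the omni-directional image corresponds to one viewing direction on the unit sphere, which is the kind of correspondence a spherical network relies on when sampling neighbors for its convolutions.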
