Zhenhui Peng

LitLinker: Supporting the Ideation of Interdisciplinary Contexts with Large Language Models for Teaching Literature in Elementary Schools

Feb 22, 2025

Abstract: Teaching literature under interdisciplinary contexts (e.g., science, art) that connect reading materials has become popular in elementary schools. However, constructing such contexts is challenging as it requires teachers to explore substantial amounts of interdisciplinary content and link it to the reading materials. In this paper, we develop LitLinker via an iterative design process involving 13 teachers to facilitate the ideation of interdisciplinary contexts for teaching literature. Powered by a large language model (LLM), LitLinker can recommend interdisciplinary topics and contextualize them with the literary elements (e.g., paragraphs, viewpoints) in the reading materials. A within-subjects study (N=16) shows that compared to an LLM chatbot, LitLinker can improve the integration depth of different subjects and reduce workload in this ideation task. Expert interviews (N=9) also demonstrate LitLinker's usefulness for supporting the ideation of interdisciplinary contexts for teaching literature. We conclude with concerns and design considerations for supporting interdisciplinary teaching with LLMs.

Towards Human-AI Deliberation: Design and Evaluation of LLM-Empowered Deliberative AI for AI-Assisted Decision-Making

Mar 25, 2024

Abstract: In AI-assisted decision-making, humans often passively review AI's suggestion and decide whether to accept or reject it as a whole. In such a paradigm, humans are found to rarely trigger analytical thinking and face difficulties in communicating the nuances of conflicting opinions to the AI when disagreements occur. To tackle this challenge, we propose Human-AI Deliberation, a novel framework to promote human reflection and discussion on conflicting human-AI opinions in decision-making. Based on theories in human deliberation, this framework engages humans and AI in dimension-level opinion elicitation, deliberative discussion, and decision updates. To empower AI with deliberative capabilities, we designed Deliberative AI, which leverages large language models (LLMs) as a bridge between humans and domain-specific models to enable flexible conversational interactions and faithful information provision. An exploratory evaluation on a graduate admissions task shows that Deliberative AI outperforms conventional explainable AI (XAI) assistants in improving humans' appropriate reliance and task performance. Based on a mixed-methods analysis of participant behavior, perception, user experience, and open-ended feedback, we draw implications for future AI-assisted decision tool design.

Charting the Future of AI in Project-Based Learning: A Co-Design Exploration with Students

Jan 29, 2024

Abstract: The increasing use of Artificial Intelligence (AI) by students in learning presents new challenges for assessing their learning outcomes in project-based learning (PBL). This paper introduces a co-design study to explore the potential of students' AI usage data as a novel material for PBL assessment. We conducted workshops with 18 college students, encouraging them to speculate about an alternative world where they could freely employ AI in PBL while needing to report this process to assess their skills and contributions. Our workshops yielded various scenarios of students' use of AI in PBL and ways of analyzing these uses grounded in students' vision of education goal transformation. We also found that students with different attitudes toward AI exhibited distinct preferences in how to analyze and understand the use of AI. Based on these findings, we discuss future research opportunities on student-AI interactions and understanding AI-enhanced learning.

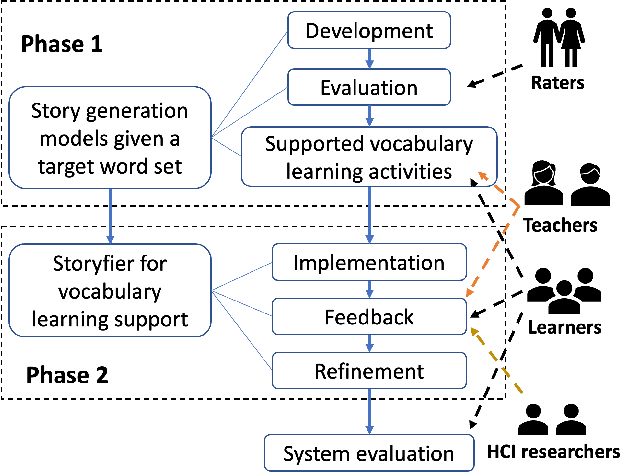

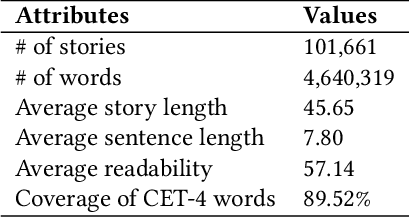

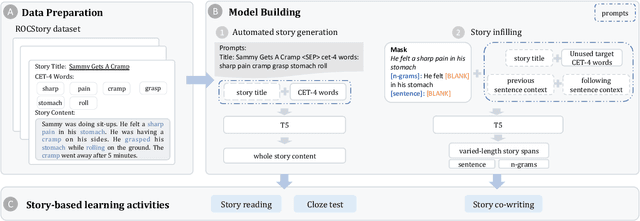

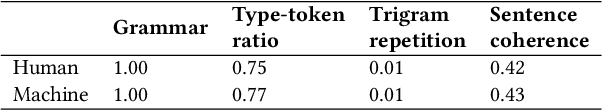

Storyfier: Exploring Vocabulary Learning Support with Text Generation Models

Aug 07, 2023

Abstract: Vocabulary learning support tools have widely exploited existing materials, e.g., stories or video clips, as contexts to help users memorize each target word. However, these tools cannot provide a coherent context for arbitrary target words of interest to learners, and they seldom help practice word usage. In this paper, we work with teachers and students to iteratively develop Storyfier, which leverages text generation models to enable learners to read a generated story that covers any target words, conduct a story cloze test, and use these words to write a new story with adaptive AI assistance. Our within-subjects study (N=28) shows that learners generally favor the generated stories for connecting target words and the writing assistance for easing their learning workload. However, in the read-cloze-write learning sessions, participants using Storyfier perform worse in recalling and using target words than when learning with a baseline tool without our AI features. We discuss insights into supporting learning tasks with generative models.

CASS: Towards Building a Social-Support Chatbot for Online Health Community

Feb 04, 2021

Abstract: Chatbot systems, despite their popularity in today's HCI and CSCW research, fall short for one of two reasons: 1) many of the systems use a rule-based dialog flow, so they can only respond to a limited number of pre-defined inputs with pre-scripted responses; or 2) they are designed with a focus on single-user scenarios, so it is unclear how these systems may affect other users or the community. In this paper, we develop a generalizable chatbot architecture (CASS) to provide social support for community members in an online health community. The CASS architecture is based on advanced neural network algorithms, so it can handle new inputs from users and generate a variety of responses to them. CASS is also generalizable, as it can be easily migrated to other online communities. With a follow-up field experiment, CASS is proven useful in supporting individual members who seek emotional support. Our work also contributes to filling the research gap on how a chatbot may influence the whole community's engagement.

Investigating the Effects of Robot Engagement Communication on Learning from Demonstration

May 03, 2020

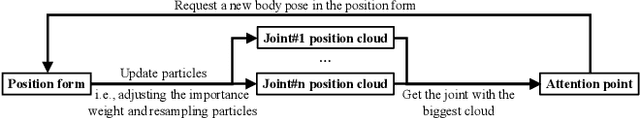

Abstract: Robot Learning from Demonstration (RLfD) is a technique for robots to derive policies from instructors' examples. Although the reciprocal effects of student engagement on teacher behavior are widely recognized in the educational community, it is unclear whether the same phenomenon holds true for RLfD. To fill this gap, we first design three types of robot engagement behavior (attention, imitation, and a hybrid of the two) based on the learning literature. We then conduct, in a simulation environment, a within-subject user study to investigate the impact of different robot engagement cues on humans compared to a "without-engagement" condition. Results suggest that engagement communication significantly changes the human's estimation of the robots' capability and significantly raises their expectation towards the learning outcomes, even though we do not run actual learning algorithms in the experiments. Moreover, imitation behavior affects humans more than attention does in all metrics, while their combination has the most profound influence on humans. We also find that communicating engagement via imitation or the combined behavior significantly improves humans' perception of the quality of demonstrations, even if all demonstrations are of the same quality.
