Meng Xia

4D-CAAL: 4D Radar-Camera Calibration and Auto-Labeling for Autonomous Driving

Jan 29, 2026

Abstract:4D radar has emerged as a critical sensor for autonomous driving, primarily due to its enhanced capabilities in elevation measurement and higher resolution compared to traditional 3D radar. Effective integration of 4D radar with cameras requires accurate extrinsic calibration, and the development of radar-based perception algorithms demands large-scale annotated datasets. However, existing calibration methods often employ separate targets optimized for either visual or radar modalities, complicating correspondence establishment. Furthermore, manually labeling sparse radar data is labor-intensive and unreliable. To address these challenges, we propose 4D-CAAL, a unified framework for 4D radar-camera calibration and auto-labeling. Our approach introduces a novel dual-purpose calibration target design, integrating a checkerboard pattern on the front surface for camera detection and a corner reflector at the center of the back surface for radar detection. We develop a robust correspondence matching algorithm that aligns the checkerboard center with the strongest radar reflection point, enabling accurate extrinsic calibration. Subsequently, we present an auto-labeling pipeline that leverages the calibrated sensor relationship to transfer annotations from camera-based segmentations to radar point clouds through geometric projection and multi-feature optimization. Extensive experiments demonstrate that our method achieves high calibration accuracy while significantly reducing manual annotation effort, thereby accelerating the development of robust multi-modal perception systems for autonomous driving.
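
The geometric-projection step of the label transfer described in this abstract can be illustrated with a minimal sketch. It is not the authors' implementation: it assumes a pinhole camera with intrinsics `K`, a radar-to-camera extrinsic given as rotation `R` and translation `t`, and a per-pixel class mask. The function names `project_point` and `transfer_labels` are hypothetical, and the multi-feature optimization mentioned in the abstract is not covered.

```python
from typing import List, Optional, Sequence, Tuple

Matrix3 = Sequence[Sequence[float]]


def project_point(p_radar: Sequence[float], R: Matrix3, t: Sequence[float],
                  K: Matrix3) -> Optional[Tuple[float, float]]:
    """Project one radar point into pixel coordinates (assumed pinhole model)."""
    # Radar frame -> camera frame: p_cam = R * p_radar + t
    x, y, z = [sum(R[i][j] * p_radar[j] for j in range(3)) + t[i]
               for i in range(3)]
    if z <= 0:
        return None  # point lies behind the camera plane
    u = K[0][0] * x / z + K[0][2]
    v = K[1][1] * y / z + K[1][2]
    return u, v


def transfer_labels(points: Sequence[Sequence[float]],
                    mask: Sequence[Sequence[int]],
                    R: Matrix3, t: Sequence[float], K: Matrix3,
                    unlabeled: int = -1) -> List[int]:
    """Give each radar point the class of the mask pixel it projects onto."""
    height = len(mask)
    width = len(mask[0]) if height else 0
    labels = []
    for p in points:
        uv = project_point(p, R, t, K)
        if uv is None:
            labels.append(unlabeled)
            continue
        col, row = int(round(uv[0])), int(round(uv[1]))
        inside = 0 <= row < height and 0 <= col < width
        labels.append(mask[row][col] if inside else unlabeled)
    return labels
```

Points that fall behind the camera or outside the image keep the `unlabeled` value in this sketch, so they can be handled by a later refinement stage.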

StuGPTViz: A Visual Analytics Approach to Understand Student-ChatGPT Interactions

Jul 17, 2024

Abstract:The integration of Large Language Models (LLMs), especially ChatGPT, into education is poised to revolutionize students' learning experiences by introducing innovative conversational learning methodologies. To empower students to fully leverage the capabilities of ChatGPT in educational scenarios, understanding students' interaction patterns with ChatGPT is crucial for instructors. However, this endeavor is challenging due to the absence of datasets focused on student-ChatGPT conversations and the complexities in identifying and analyzing the evolutional interaction patterns within conversations. To address these challenges, we collected conversational data from 48 students interacting with ChatGPT in a master's level data visualization course over one semester. We then developed a coding scheme, grounded in the literature on cognitive levels and thematic analysis, to categorize students' interaction patterns with ChatGPT. Furthermore, we present a visual analytics system, StuGPTViz, that tracks and compares temporal patterns in student prompts and the quality of ChatGPT's responses at multiple scales, revealing significant pedagogical insights for instructors. We validated the system's effectiveness through expert interviews with six data visualization instructors and three case studies. The results confirmed StuGPTViz's capacity to enhance educators' insights into the pedagogical value of ChatGPT. We also discussed the potential research opportunities of applying visual analytics in education and developing AI-driven personalized learning solutions.

Ruffle&Riley: Insights from Designing and Evaluating a Large Language Model-Based Conversational Tutoring System

Apr 26, 2024

Abstract:Conversational tutoring systems (CTSs) offer learning experiences through interactions based on natural language. They are recognized for promoting cognitive engagement and improving learning outcomes, especially in reasoning tasks. Nonetheless, the cost associated with authoring CTS content is a major obstacle to widespread adoption and to research on effective instructional design. In this paper, we discuss and evaluate a novel type of CTS that leverages recent advances in large language models (LLMs) in two ways: First, the system enables AI-assisted content authoring by inducing an easily editable tutoring script automatically from a lesson text. Second, the system automates the script orchestration in a learning-by-teaching format via two LLM-based agents (Ruffle&Riley) acting as a student and a professor. The system allows for free-form conversations that follow the ITS-typical inner and outer loop structure. We evaluate Ruffle&Riley's ability to support biology lessons in two between-subject online user studies (N = 200) comparing the system to simpler QA chatbots and reading activity. Analyzing system usage patterns, pre/post-test scores and user experience surveys, we find that Ruffle&Riley users report high levels of engagement and understanding and perceive the offered support as helpful. Even though Ruffle&Riley users require more time to complete the activity, we did not find significant differences in short-term learning gains over the reading activity. Our system architecture and user study provide various insights for designers of future CTSs. We further open-source our system to support ongoing research on effective instructional design of LLM-based learning technologies.

Persua: A Visual Interactive System to Enhance the Persuasiveness of Arguments in Online Discussion

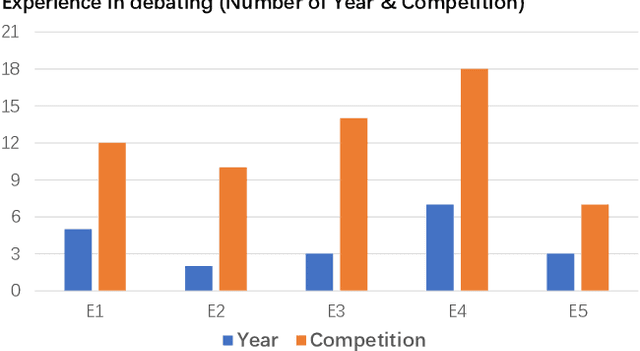

Apr 21, 2022

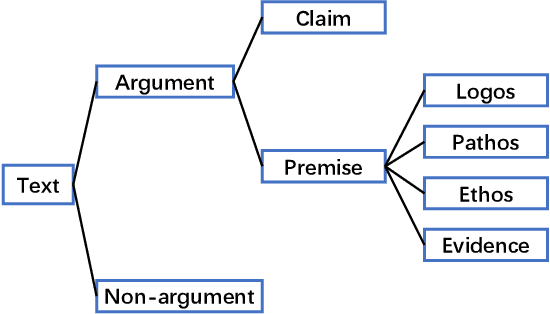

Abstract:Persuading people to change their opinions is a common practice in online discussion forums on topics ranging from political campaigns to relationship consultation. Enhancing people's ability to write persuasive arguments could not only exercise their critical thinking and reasoning but also contribute to the effectiveness and civility of online communication. It is, however, not an easy task in online discussion settings where written words are the primary communication channel. In this paper, we derived four design goals for a tool that helps users improve the persuasiveness of arguments in online discussions through a survey with 123 online forum users and interviews with five debating experts. To satisfy these design goals, we analyzed and built a labeled dataset of fine-grained persuasive strategies (i.e., logos, pathos, ethos, and evidence) in 164 arguments with high ratings on persuasiveness from ChangeMyView, a popular online discussion forum. We then designed an interactive visual system, Persua, which provides example-based guidance on persuasive strategies to enhance the persuasiveness of arguments. In particular, the system constructs portfolios of arguments based on different persuasive strategies applied to a given discussion topic. It then presents concrete examples based on the difference between the portfolios of user input and high-quality arguments in the dataset. A between-subjects study shows suggestive evidence that Persua encourages users to submit their arguments for feedback more often and helps users improve the persuasiveness of their arguments more than a baseline system does. Finally, a set of design considerations was summarized to guide future intelligent systems that improve the persuasiveness of text.

Malignancy Prediction and Lesion Identification from Clinical Dermatological Images

Apr 02, 2021

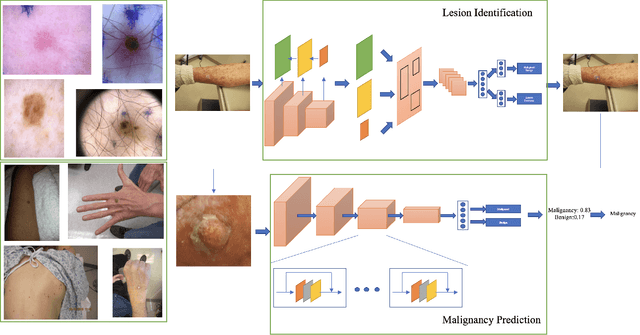

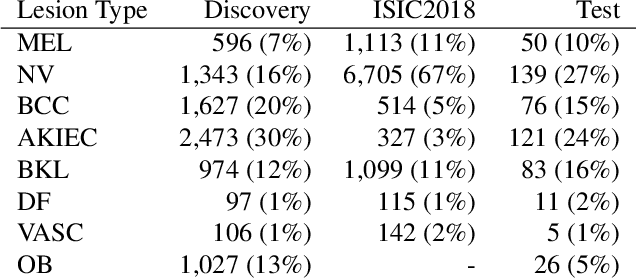

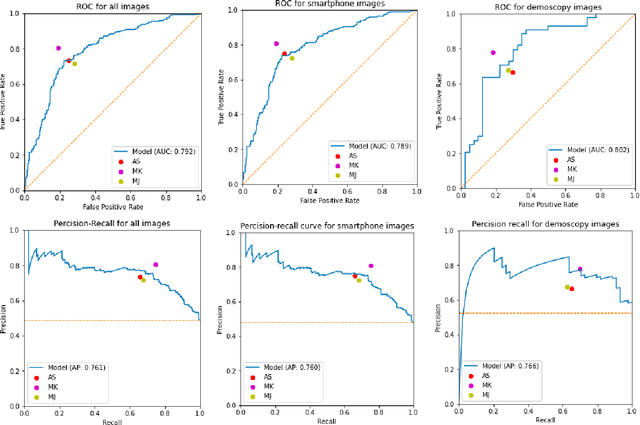

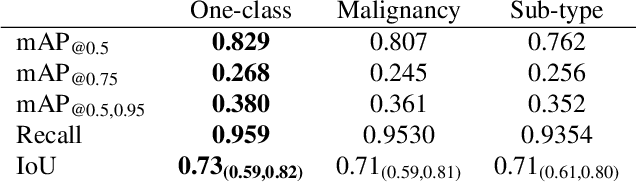

Abstract:We consider machine-learning-based malignancy prediction and lesion identification from clinical dermatological images, which can be acquired via either smartphone or dermoscopy capture. Additionally, we do not assume that images contain single lesions, so the framework supports both focal and wide-field images. Specifically, we propose a two-stage approach in which we first identify all lesions present in the image regardless of sub-type or likelihood of malignancy, then estimate their likelihood of malignancy, and, through aggregation, generate an image-level likelihood of malignancy that can be used for high-level screening processes. Further, we consider augmenting the proposed approach with clinical covariates (from electronic health records) and publicly available data (the ISIC dataset). Comprehensive experiments validated on an independent test dataset demonstrate that i) the proposed approach outperforms alternative model architectures; ii) the model based on images outperforms a pure clinical model by a large margin, and the combination of images and clinical data does not significantly improve over the image-only model; and iii) the proposed framework offers comparable performance in terms of malignancy classification relative to three board-certified dermatologists with different levels of experience.
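
The abstract does not state which aggregation rule turns lesion-level likelihoods into an image-level likelihood. As one plausible illustration only, the sketch below uses a noisy-OR rule, which treats lesions as independent and returns the probability that at least one lesion is malignant. The rule and the function name `image_level_likelihood` are assumptions, not the paper's method.

```python
from typing import Iterable


def image_level_likelihood(lesion_probs: Iterable[float]) -> float:
    """Noisy-OR aggregation (assumed rule): P(at least one lesion is malignant)."""
    prob_all_benign = 1.0
    for p in lesion_probs:
        if not 0.0 <= p <= 1.0:
            raise ValueError(f"probability out of range: {p}")
        # Independence assumption: multiply the per-lesion benign probabilities.
        prob_all_benign *= 1.0 - p
    return 1.0 - prob_all_benign
```

With this rule an image with no detected lesions scores 0.0, and the score never falls below the highest single-lesion likelihood. Taking the maximum over lesions would be a simpler alternative with the same lower bound.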

Investigating the Effects of Robot Engagement Communication on Learning from Demonstration

May 03, 2020

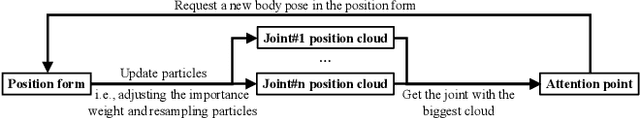

Abstract:Robot Learning from Demonstration (RLfD) is a technique for robots to derive policies from instructors' examples. Although the reciprocal effects of student engagement on teacher behavior are widely recognized in the educational community, it is unclear whether the same phenomenon holds true for RLfD. To fill this gap, we first design three types of robot engagement behavior (attention, imitation, and a hybrid of the two) based on the learning literature. We then conduct, in a simulation environment, a within-subject user study to investigate the impact of different robot engagement cues on humans compared to a "without-engagement" condition. Results suggest that engagement communication significantly changes the human's estimation of the robots' capability and significantly raises their expectation towards the learning outcomes, even though we do not run actual learning algorithms in the experiments. Moreover, imitation behavior affects humans more than attention does in all metrics, while their combination has the most profound influence on humans. We also find that communicating engagement via imitation or the combined behavior significantly improves humans' perception of the quality of demonstrations, even if all demonstrations are of the same quality.
