Zhang

June

Randomization Boosts KV Caching, Learning Balances Query Load: A Joint Perspective

Jan 26, 2026

Abstract:KV caching is a fundamental technique for accelerating Large Language Model (LLM) inference by reusing key-value (KV) pairs from previous queries, but its effectiveness under limited memory is highly sensitive to the eviction policy. The default Least Recently Used (LRU) eviction algorithm struggles with dynamic online query arrivals, especially in multi-LLM serving scenarios, where balancing query load across workers and maximizing cache hit rate of each worker are inherently conflicting objectives. We give the first unified mathematical model that captures the core trade-offs between KV cache eviction and query routing. Our analysis reveals the theoretical limitations of existing methods and leads to principled algorithms that integrate provably competitive randomized KV cache eviction with learning-based methods to adaptively route queries with evolving patterns, thus balancing query load and cache hit rate. Our theoretical results are validated by extensive experiments across 4 benchmarks and 3 prefix-sharing settings, demonstrating improvements of up to 6.92$\times$ in cache hit rate, 11.96$\times$ reduction in latency, 14.06$\times$ reduction in time-to-first-token (TTFT), and 77.4% increase in throughput over the state-of-the-art methods. Our code is available at https://github.com/fzwark/KVRouting.
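To make the contrast between LRU and randomized eviction concrete, the following is a minimal sketch, not the paper's algorithm. It assumes the classical randomized marking algorithm from the online paging literature as a stand-in for "provably competitive randomized eviction", and it treats cache entries as opaque integer keys rather than real KV tensors. The class names `LRUCache` and `MarkingCache` and the helper `hit_rate` are hypothetical.

```python
import random
from collections import OrderedDict


class LRUCache:
    """Deterministic baseline: evict the least recently used key."""

    def __init__(self, capacity):
        self.capacity = capacity
        self.items = OrderedDict()

    def access(self, key):
        """Return True on a hit, False on a miss (the key is then cached)."""
        if key in self.items:
            self.items.move_to_end(key)  # mark as most recently used
            return True
        if len(self.items) >= self.capacity:
            self.items.popitem(last=False)  # drop the oldest entry
        self.items[key] = None
        return False


class MarkingCache:
    """Classical randomized marking algorithm (an assumed stand-in).

    Cached keys are either marked (used in the current phase) or unmarked.
    On a miss with a full cache, a uniformly random unmarked key is evicted.
    When every cached key is marked, a new phase starts and all are unmarked.
    """

    def __init__(self, capacity, seed=0):
        self.capacity = capacity
        self.marked = set()
        self.unmarked = set()
        self.rng = random.Random(seed)

    def access(self, key):
        if key in self.marked:
            return True
        if key in self.unmarked:
            self.unmarked.remove(key)
            self.marked.add(key)
            return True
        if len(self.marked) + len(self.unmarked) >= self.capacity:
            if not self.unmarked:
                # Start a new phase: every cached key becomes unmarked.
                self.unmarked, self.marked = self.marked, set()
            # Sorting makes the choice reproducible for a fixed seed
            # (assumes keys are mutually comparable, e.g. integers).
            victim = self.rng.choice(sorted(self.unmarked))
            self.unmarked.remove(victim)
        self.marked.add(key)
        return False


def hit_rate(cache, requests):
    """Fraction of requests served from the cache."""
    hits = sum(cache.access(k) for k in requests)
    return hits / len(requests)


if __name__ == "__main__":
    # Cyclic scan over capacity + 1 keys: a known worst case for LRU.
    requests = [i % 5 for i in range(200)]
    print("LRU hit rate:    ", hit_rate(LRUCache(4), requests))
    print("Marking hit rate:", hit_rate(MarkingCache(4, seed=0), requests))
```

On a cyclic scan over one more key than the cache can hold, LRU always evicts exactly the key that is requested next and so never hits, while random eviction among unmarked keys breaks that adversarial pattern. This illustrates the general intuition only; the abstract does not specify which randomized policy the authors analyze.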

Exploring Protein Language Model Architecture-Induced Biases for Antibody Comprehension

Dec 10, 2025

Abstract:Recent advances in protein language models (PLMs) have demonstrated remarkable capabilities in understanding protein sequences. However, the extent to which different model architectures capture antibody-specific biological properties remains unexplored. In this work, we systematically investigate how architectural choices in PLMs influence their ability to comprehend antibody sequence characteristics and functions. We evaluate three state-of-the-art PLMs (AntiBERTa, BioBERT, and ESM2) against a general-purpose language model (GPT-2) baseline on antibody target specificity prediction tasks. Our results demonstrate that while all PLMs achieve high classification accuracy, they exhibit distinct biases in capturing biological features such as V gene usage, somatic hypermutation patterns, and isotype information. Through attention attribution analysis, we show that antibody-specific models like AntiBERTa naturally learn to focus on complementarity-determining regions (CDRs), while general protein models benefit significantly from explicit CDR-focused training strategies. These findings provide insights into the relationship between model architecture and biological feature extraction, offering valuable guidance for future PLM development in computational antibody design.

When to Think and When to Look: Uncertainty-Guided Lookback

Nov 19, 2025

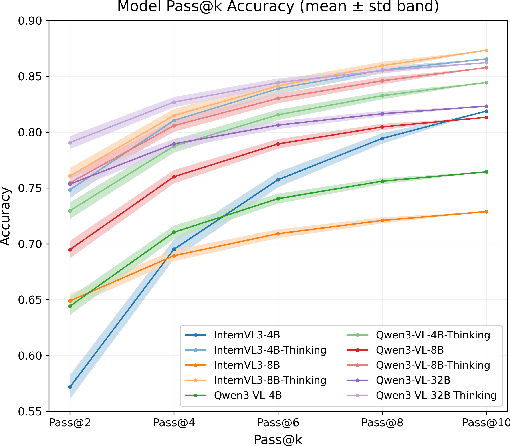

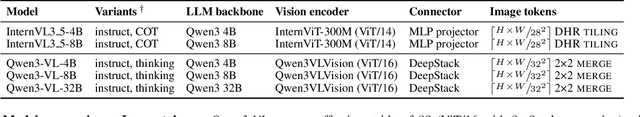

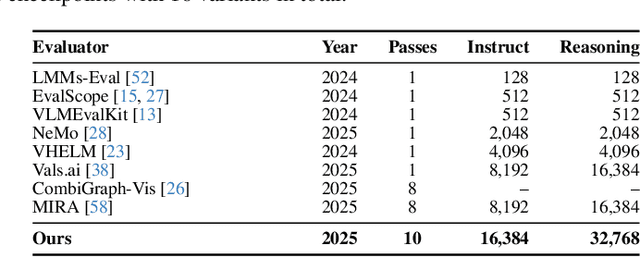

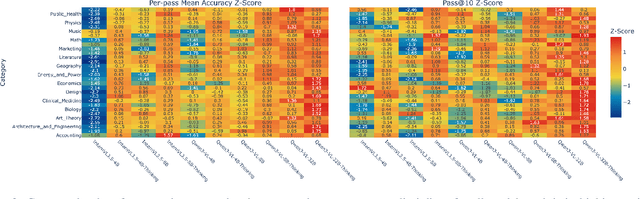

Abstract:Test-time thinking (that is, generating explicit intermediate reasoning chains) is known to boost performance in large language models and has recently shown strong gains for large vision language models (LVLMs). However, despite these promising results, there is still no systematic analysis of how thinking actually affects visual reasoning. We provide the first such analysis with a large-scale, controlled comparison of thinking for LVLMs, evaluating ten variants from the InternVL3.5 and Qwen3-VL families on MMMU-val under generous token budgets and multi-pass decoding. We show that more thinking is not always better; long chains often yield long wrong trajectories that ignore the image and underperform the same models run in standard instruct mode. A deeper analysis reveals that certain short lookback phrases, which explicitly refer back to the image, are strongly enriched in successful trajectories and correlate with better visual grounding. Building on this insight, we propose uncertainty-guided lookback, a training-free decoding strategy that combines an uncertainty signal with adaptive lookback prompts and breadth search. Our method improves overall MMMU performance, delivers the largest gains in categories where standard thinking is weak, and outperforms several strong decoding baselines, setting a new state of the art under fixed model families and token budgets. We further show that this decoding strategy generalizes, yielding consistent improvements on five additional benchmarks, including two broad multimodal suites and math-focused visual reasoning datasets.

Step-Audio-EditX Technical Report

Nov 05, 2025

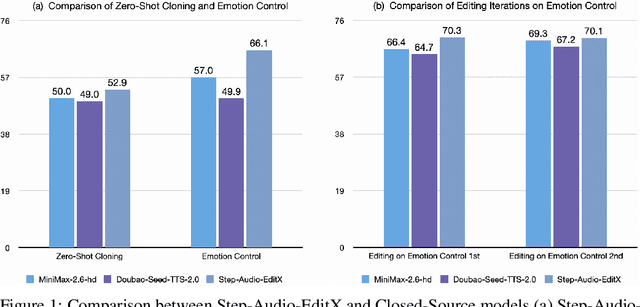

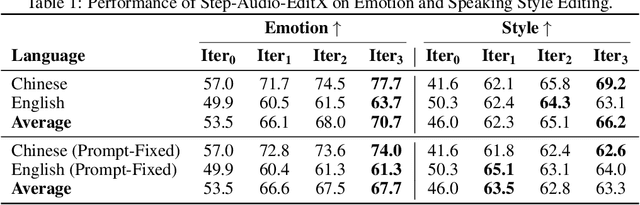

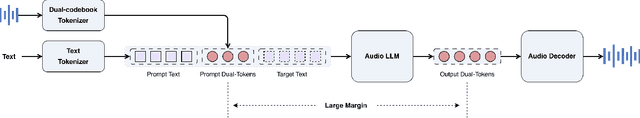

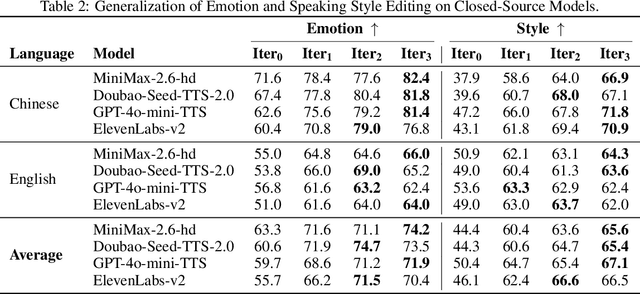

Abstract:We present Step-Audio-EditX, the first open-source LLM-based audio model excelling at expressive and iterative audio editing encompassing emotion, speaking style, and paralinguistics alongside robust zero-shot text-to-speech (TTS) capabilities. Our core innovation lies in leveraging only large-margin synthetic data, which circumvents the need for embedding-based priors or auxiliary modules. This large-margin learning approach enables both iterative control and high expressivity across voices, and represents a fundamental pivot from the conventional focus on representation-level disentanglement. Evaluation results demonstrate that Step-Audio-EditX surpasses both MiniMax-2.6-hd and Doubao-Seed-TTS-2.0 in emotion editing and other fine-grained control tasks.

Mind-Paced Speaking: A Dual-Brain Approach to Real-Time Reasoning in Spoken Language Models

Oct 10, 2025

Abstract:Real-time Spoken Language Models (SLMs) struggle to leverage Chain-of-Thought (CoT) reasoning due to the prohibitive latency of generating the entire thought process sequentially. Enabling SLMs to think while speaking, similar to humans, is attracting increasing attention. We present, for the first time, Mind-Paced Speaking (MPS), a brain-inspired framework that enables high-fidelity, real-time reasoning. Similar to how humans utilize distinct brain regions for thinking and responding, we propose a novel dual-brain approach, employing a "Formulation Brain" for high-level reasoning to pace and guide a separate "Articulation Brain" for fluent speech generation. This division of labor eliminates mode-switching, preserving the integrity of the reasoning process. Experiments show that MPS significantly outperforms existing think-while-speaking methods and achieves reasoning performance comparable to models that pre-compute the full CoT before speaking, while drastically reducing latency. Under a zero-latency configuration, the proposed method achieves an accuracy of 92.8% on the mathematical reasoning task Spoken-MQA and attains a score of 82.5 on the speech conversation task URO-Bench. Our work effectively bridges the gap between high-quality reasoning and real-time interaction.

MoE-CE: Enhancing Generalization for Deep Learning based Channel Estimation via a Mixture-of-Experts Framework

Sep 19, 2025

Abstract:Reliable channel estimation (CE) is fundamental for robust communication in dynamic wireless environments, where models must generalize across varying conditions such as signal-to-noise ratios (SNRs), the number of resource blocks (RBs), and channel profiles. Traditional deep learning (DL)-based methods struggle to generalize effectively across such diverse settings, particularly under multitask and zero-shot scenarios. In this work, we propose MoE-CE, a flexible mixture-of-experts (MoE) framework designed to enhance the generalization capability of DL-based CE methods. MoE-CE provides an appropriate inductive bias by leveraging multiple expert subnetworks, each specialized in distinct channel characteristics, and a learned router that dynamically selects the most relevant experts per input. This architecture enhances model capacity and adaptability without a proportional rise in computational cost while being agnostic to the choice of the backbone model and the learning algorithm. Through extensive experiments on synthetic datasets generated under diverse SNRs, RB numbers, and channel profiles, including multitask and zero-shot evaluations, we demonstrate that MoE-CE consistently outperforms conventional DL approaches, achieving significant performance gains while maintaining efficiency.
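The routing idea described above (a router that picks the most relevant experts per input, so cost does not grow in proportion to the number of experts) can be sketched as follows. This is an illustrative toy under stated assumptions, not the MoE-CE implementation: it assumes softmax gating with top-k selection, a linear gate, and plain Python lists in place of tensors. The names `route_top_k`, `moe_forward`, and `gate_weights` are hypothetical.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def route_top_k(gate_logits, k):
    """Pick the k highest-probability experts and renormalize their weights.

    Returns a dict mapping expert index to mixing weight (weights sum to 1).
    """
    probs = softmax(gate_logits)
    top = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    norm = sum(probs[i] for i in top)
    return {i: probs[i] / norm for i in top}


def moe_forward(x, experts, gate_weights, k=2):
    """Sparse mixture-of-experts forward pass for one input vector x.

    experts:      list of callables, each mapping a vector to a vector
                  of the same length as x
    gate_weights: one weight row per expert (assumed linear gate)
    Only the k selected experts are evaluated, which is where the
    compute saving over a dense mixture comes from.
    """
    logits = [sum(w * xi for w, xi in zip(row, x)) for row in gate_weights]
    weights = route_top_k(logits, k)
    out = [0.0] * len(x)
    for i, w in weights.items():
        y = experts[i](x)
        out = [o + w * yi for o, yi in zip(out, y)]
    return out, weights


if __name__ == "__main__":
    # Three toy "experts" standing in for specialized subnetworks.
    experts = [
        lambda v: [2.0 * a for a in v],
        lambda v: [-a for a in v],
        lambda v: [a + 1.0 for a in v],
    ]
    gate_weights = [[1.0, 0.0], [0.0, 1.0], [-1.0, -1.0]]
    output, chosen = moe_forward([3.0, 1.0], experts, gate_weights, k=2)
    print("selected experts and weights:", chosen)
    print("mixed output:", output)
```

In a trained system the gate weights would be learned jointly with the experts; here they are fixed by hand so that the selection step is easy to follow.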

Prompting Wireless Networks: Reinforced In-Context Learning for Power Control

Jun 06, 2025

Abstract:To manage and optimize constantly evolving wireless networks, existing machine learning (ML)-based studies operate as black-box models, leading to increased computational costs during training and a lack of transparency in decision-making, which limits their practical applicability in wireless networks. Motivated by recent advancements in large language model (LLM)-enabled wireless networks, this paper proposes ProWin, a novel framework that leverages reinforced in-context learning to design task-specific demonstration Prompts for Wireless Network optimization, relying on the inference capabilities of LLMs without the need for dedicated model training or finetuning. The task-specific prompts are designed to incorporate natural language descriptions of the task and its formulation, enhancing interpretability and eliminating the need for specialized expertise in network optimization. We further propose a reinforced in-context learning scheme that incorporates a set of advisable examples into task-specific prompts, wherein informative examples capturing historical environment states and decisions are adaptively selected to guide current decision-making. Evaluations on a case study of base station power control show that the proposed ProWin outperforms reinforcement learning (RL)-based methods, highlighting its potential for next-generation wireless network optimization.

Understanding 6G through Language Models: A Case Study on LLM-aided Structured Entity Extraction in Telecom Domain

May 20, 2025

Abstract:Knowledge understanding is a foundational part of envisioned 6G networks to advance network intelligence and AI-native network architectures. In this paradigm, information extraction plays a pivotal role in transforming fragmented telecom knowledge into well-structured formats, empowering diverse AI models to better understand network terminologies. This work proposes a novel language model-based information extraction technique, aiming to extract structured entities from the telecom context. The proposed telecom structured entity extraction (TeleSEE) technique applies a token-efficient representation method to predict entity types and attribute keys, aiming to reduce the number of output tokens and improve prediction accuracy. Meanwhile, TeleSEE involves a hierarchical parallel decoding method, improving the standard encoder-decoder architecture by integrating additional prompting and decoding strategies into entity extraction tasks. In addition, to better evaluate the performance of the proposed technique in the telecom domain, we further designed a dataset named 6GTech, including 2390 sentences and 23747 words from more than 100 6G-related technical publications. Finally, the experiments show that the proposed TeleSEE method achieves higher accuracy than other baseline techniques and delivers 5 to 9 times higher sample processing speed.

The Geography of Transportation Cybersecurity: Visitor Flows, Industry Clusters, and Spatial Dynamics

May 12, 2025

Abstract:The rapid evolution of the transportation cybersecurity ecosystem, encompassing cybersecurity, automotive, and transportation and logistics sectors, will lead to the formation of distinct spatial clusters and visitor flow patterns across the US. This study examines the spatiotemporal dynamics of visitor flows, analyzing how socioeconomic factors shape industry clustering and workforce distribution within these evolving sectors. To model and predict visitor flow patterns, we develop a BiTransGCN framework, integrating an attention-based Transformer architecture with a Graph Convolutional Network backbone. By integrating AI-enabled forecasting techniques with spatial analysis, this study improves our ability to track, interpret, and anticipate changes in industry clustering and mobility trends, thereby supporting strategic planning for a secure and resilient transportation network. It offers a data-driven foundation for economic planning, workforce development, and targeted investments in the transportation cybersecurity ecosystem.

Partial Answer of How Transformers Learn Automata

Apr 29, 2025

Abstract:We introduce a novel framework for simulating finite automata using representation-theoretic semidirect products and Fourier modules, achieving more efficient Transformer-based implementations.
