Jianzhong

Charlie

MoE-CE: Enhancing Generalization for Deep Learning based Channel Estimation via a Mixture-of-Experts Framework

Sep 19, 2025

Abstract: Reliable channel estimation (CE) is fundamental for robust communication in dynamic wireless environments, where models must generalize across varying conditions such as signal-to-noise ratios (SNRs), the number of resource blocks (RBs), and channel profiles. Traditional deep learning (DL)-based methods struggle to generalize effectively across such diverse settings, particularly under multitask and zero-shot scenarios. In this work, we propose MoE-CE, a flexible mixture-of-experts (MoE) framework designed to enhance the generalization capability of DL-based CE methods. MoE-CE provides an appropriate inductive bias by leveraging multiple expert subnetworks, each specialized in distinct channel characteristics, and a learned router that dynamically selects the most relevant experts per input. This architecture enhances model capacity and adaptability without a proportional rise in computational cost while being agnostic to the choice of the backbone model and the learning algorithm. Through extensive experiments on synthetic datasets generated under diverse SNRs, RB numbers, and channel profiles, including multitask and zero-shot evaluations, we demonstrate that MoE-CE consistently outperforms conventional DL approaches, achieving significant performance gains while maintaining efficiency.
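The expert-plus-router idea can be illustrated with a generic top-k mixture-of-experts layer. This is a minimal NumPy sketch under stated assumptions, not MoE-CE itself: the linear experts, the `TopKMoE` class, its random (untrained) weights, and the choice of `k` are hypothetical stand-ins for whatever CE backbone, router, and training the paper uses.

```python
import numpy as np

def softmax(z, axis=-1):
    z = z - z.max(axis=axis, keepdims=True)   # numerical stability
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)

class TopKMoE:
    """Sparse mixture-of-experts layer: a linear router scores experts per input and
    only the top-k experts are evaluated. Weights are random (untrained) here."""

    def __init__(self, d_in, d_out, n_experts=4, k=2, seed=0):
        rng = np.random.default_rng(seed)
        self.k = k
        # Hypothetical linear experts; a CE model would use its own backbone per expert.
        self.experts = [rng.standard_normal((d_in, d_out)) / np.sqrt(d_in)
                        for _ in range(n_experts)]
        self.router = rng.standard_normal((d_in, n_experts)) / np.sqrt(d_in)

    def gates(self, x):
        logits = x @ self.router                            # (batch, n_experts)
        topk = np.argsort(logits, axis=-1)[:, -self.k:]     # k highest-scoring experts
        mask = np.full_like(logits, -np.inf)
        np.put_along_axis(mask, topk, 0.0, axis=-1)
        return softmax(logits + mask)                       # weights renormalized over top-k

    def __call__(self, x):
        g = self.gates(x)
        out = np.zeros((x.shape[0], self.experts[0].shape[1]))
        for e, W in enumerate(self.experts):
            rows = g[:, e] > 0                              # inputs routed to expert e
            if rows.any():
                out[rows] += g[rows, e:e + 1] * (x[rows] @ W)
        return out

x = np.random.default_rng(1).standard_normal((5, 8))       # 5 hypothetical feature vectors
moe = TopKMoE(d_in=8, d_out=3, n_experts=4, k=2)
y = moe(x)                                                  # shape (5, 3)
```

Only `k` of the `n_experts` experts run on each input, so adding experts increases capacity while per-input compute grows with `k` rather than with the total number of experts.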

Prompting Wireless Networks: Reinforced In-Context Learning for Power Control

Jun 06, 2025

Abstract: To manage and optimize constantly evolving wireless networks, existing machine learning (ML)-based approaches operate as black-box models, leading to increased computational costs during training and a lack of transparency in decision-making, which limits their practical applicability in wireless networks. Motivated by recent advancements in large language model (LLM)-enabled wireless networks, this paper proposes ProWin, a novel framework that leverages reinforced in-context learning to design task-specific demonstration Prompts for Wireless Network optimization, relying on the inference capabilities of LLMs without the need for dedicated model training or fine-tuning. The task-specific prompts incorporate natural language descriptions of the task and its formulation, enhancing interpretability and eliminating the need for specialized expertise in network optimization. We further propose a reinforced in-context learning scheme that incorporates a set of advisable examples into task-specific prompts, wherein informative examples capturing historical environment states and decisions are adaptively selected to guide current decision-making. Evaluations on a case study of base station power control show that the proposed ProWin outperforms reinforcement learning (RL)-based methods, highlighting its potential for next-generation wireless network optimization.
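One way to picture example selection for an in-context prompt is to score stored (state, action, reward) records by how well they performed and how close their states are to the current one, then paste the best few into the prompt. The sketch below is hypothetical throughout: the `Example` record, the `reward - lam * distance` score, and the prompt template are illustrative assumptions, not ProWin's actual reinforced selection rule, and the LLM call itself is omitted.

```python
import math
from dataclasses import dataclass

@dataclass
class Example:
    state: tuple    # hypothetical environment features, e.g. normalized cell loads
    action: float   # transmit power chosen in that state (dBm)
    reward: float   # hypothetical performance score observed after the action

def select_examples(pool, state, k=2, lam=1.0):
    """Hypothetical rule: prefer high-reward examples whose states resemble the current one."""
    return sorted(pool, key=lambda ex: ex.reward - lam * math.dist(ex.state, state),
                  reverse=True)[:k]

def build_prompt(task, pool, state, k=2):
    lines = [f"Task: {task}", "Examples:"]
    for ex in select_examples(pool, state, k):
        lines.append(f"- state={list(ex.state)} -> power={ex.action} dBm (reward={ex.reward})")
    lines.append(f"Now: state={list(state)}. Answer with a transmit power in dBm.")
    return "\n".join(lines)

pool = [Example((0.2, 0.3), 30.0, 0.9),
        Example((0.9, 0.8), 40.0, 0.95),
        Example((0.25, 0.3), 28.0, 0.5)]
prompt = build_prompt("Choose the base station transmit power.", pool, (0.2, 0.3))
```

Here the high-reward example from a very different state is skipped in favor of a lower-reward one from a nearby state; `lam` controls that trade-off.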

Understanding 6G through Language Models: A Case Study on LLM-aided Structured Entity Extraction in Telecom Domain

May 20, 2025

Abstract: Knowledge understanding is a foundational part of envisioned 6G networks to advance network intelligence and AI-native network architectures. In this paradigm, information extraction plays a pivotal role in transforming fragmented telecom knowledge into well-structured formats, enabling diverse AI models to better understand network terminology. This work proposes a novel language model-based information extraction technique that extracts structured entities from telecom text. The proposed telecom structured entity extraction (TeleSEE) technique applies a token-efficient representation method to predict entity types and attribute keys, reducing the number of output tokens and improving prediction accuracy. In addition, TeleSEE uses a hierarchical parallel decoding method that improves the standard encoder-decoder architecture by integrating additional prompting and decoding strategies into entity extraction tasks. To better evaluate the proposed technique in the telecom domain, we further design a dataset named 6GTech, comprising 2390 sentences and 23747 words from more than 100 6G-related technical publications. Finally, experiments show that TeleSEE achieves higher accuracy than the baseline techniques and processes samples 5 to 9 times faster.
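A token-efficient target format can be illustrated by replacing verbose entity-type and attribute-key strings with short markers, so that, if each marker were registered as a single special token, the decoder would emit one token instead of a multi-token word. The marker scheme, the type and key lists, and the `encode`/`decode` helpers below are hypothetical; TeleSEE's actual representation and its hierarchical parallel decoding are not reproduced.

```python
import re

# Hypothetical schema: each entity type and attribute key maps to one short marker.
ENTITY_TYPES = ["technology", "organization", "frequency_band"]
ATTR_KEYS = ["name", "full_name", "release"]
TYPE2TOK = {t: f"<T{i}>" for i, t in enumerate(ENTITY_TYPES)}
KEY2TOK = {k: f"<K{i}>" for i, k in enumerate(ATTR_KEYS)}
TOK2TYPE = {v: t for t, v in TYPE2TOK.items()}
TOK2KEY = {v: k for k, v in KEY2TOK.items()}

def encode(entities):
    """Serialize [{'type': ..., key: value, ...}] into a compact target string."""
    chunks = []
    for ent in entities:
        fields = [TYPE2TOK[ent["type"]]]
        fields += [KEY2TOK[k] + v for k, v in ent.items() if k != "type"]
        chunks.append("".join(fields))
    return "<E>".join(chunks)

def decode(text):
    """Invert encode(); assumes values never contain the marker strings."""
    entities = []
    for chunk in text.split("<E>"):
        head = re.match(r"<T\d+>", chunk)
        ent = {"type": TOK2TYPE[head.group(0)]}
        parts = re.split(r"(<K\d+>)", chunk[head.end():])  # ['', '<K0>', val, '<K1>', val, ...]
        ent.update({TOK2KEY[tok]: val for tok, val in zip(parts[1::2], parts[2::2])})
        entities.append(ent)
    return entities

ents = [{"type": "technology", "name": "JPTA", "full_name": "joint phase-time array"},
        {"type": "organization", "name": "3GPP"}]
target = encode(ents)  # "<T0><K0>JPTA<K1>joint phase-time array<E><T1><K0>3GPP"
```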

Enhancing Large Language Models (LLMs) for Telecommunications using Knowledge Graphs and Retrieval-Augmented Generation

Mar 31, 2025

Abstract: Large language models (LLMs) have made significant progress in general-purpose natural language processing tasks. However, LLMs still face challenges in domain-specific areas such as telecommunications, which demand specialized expertise and adaptability to evolving standards. This paper presents a novel framework that combines knowledge graph (KG) and retrieval-augmented generation (RAG) techniques to enhance LLM performance in the telecom domain. The framework uses a KG to capture structured, domain-specific information about network protocols, standards, and other telecom-related entities, comprehensively representing their relationships. By integrating the KG with RAG, LLMs can dynamically access and use the most relevant and up-to-date knowledge during response generation. This hybrid approach bridges the gap between structured knowledge representation and the generative capabilities of LLMs, significantly enhancing accuracy, adaptability, and domain-specific comprehension. Our results demonstrate the effectiveness of the KG-RAG framework in answering complex technical queries precisely. The proposed KG-RAG model attained an accuracy of 88% on question answering tasks over a frequently used telecom-specific dataset, compared to 82% for the RAG-only and 48% for the LLM-only approaches.
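The retrieval step of a KG-RAG pipeline can be sketched as: find the graph entities mentioned in a query, collect the triples within a few hops of them, and prepend those facts to the LLM prompt. This is a minimal sketch, not the paper's framework; the toy `TRIPLES` graph, substring entity matching, the `hops` expansion, and the prompt template are assumptions, and the LLM call is omitted.

```python
# Toy telecom knowledge graph of (subject, relation, object) triples (illustrative only)
TRIPLES = [
    ("PDCP", "is a sublayer of", "Layer 2"),
    ("RLC", "is a sublayer of", "Layer 2"),
    ("PDCP", "performs", "header compression"),
    ("gNB", "connects to the 5G core via", "NG interface"),
]

def retrieve(query, triples, hops=1):
    """Return triples within `hops` of any graph entity mentioned in the query."""
    q = query.lower()
    entities = {e for s, _, o in triples for e in (s, o) if e.lower() in q}
    facts = []
    for _ in range(hops):
        new = [t for t in triples
               if (t[0] in entities or t[2] in entities) and t not in facts]
        facts += new
        entities |= {e for s, _, o in new for e in (s, o)}   # expand the frontier
    return facts

def build_prompt(query, triples, hops=1):
    context = "\n".join(f"- {s} {r} {o}." for s, r, o in retrieve(query, triples, hops))
    return f"Use these facts:\n{context}\n\nQuestion: {query}\nAnswer:"

prompt = build_prompt("What does PDCP do?", TRIPLES)
```

A second hop would also pull in "RLC is a sublayer of Layer 2" through the shared "Layer 2" node, which shows how graph structure supplies related context that plain text retrieval might miss.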

Low Complexity Frequency Domain Nonlinear Self-Interference Cancellation for Flexible Duplex

Mar 04, 2025

Abstract: Nonlinear self-interference (SI) cancellation is essential for mitigating the impact of transmitter-side nonlinearity on overall SI cancellation performance in flexible duplex systems, including in-band full-duplex (IBFD) and sub-band full-duplex (SBFD). In addition to analog SI cancellation (SIC), digital SIC must address the nonlinearity of the power amplifier (PA) and the in-phase/quadrature-phase (IQ) imbalance of the up/down converters at the base station (BS). In environments with rich signal reflection paths, however, the number of delayed taps required for time-domain nonlinear SIC increases exponentially with the number of multipaths, leading to excessive complexity. This paper introduces a novel, low-complexity, frequency-domain nonlinear SIC scheme suitable for flexible duplex systems with multiple-input multiple-output (MIMO) configurations. The key approach is to decompose nonlinear SI into nonlinear basis functions and to categorize them by their effectiveness in any flexible duplex setting. The proposed algorithm is founded on our analytical results for intermodulation distortion (IMD) in the frequency domain and uses a specialized pilot sequence. It is directly applicable to orthogonal frequency division multiplexing (OFDM) multi-carrier systems and has lower complexity than conventional digital SIC methods. Additionally, we assess the impact of the proposed SIC on flexible duplex systems through system-level simulation (SLS) using 3D ray tracing and a proof-of-concept (PoC) measurement.
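Why IMD is tractable in the frequency domain can be seen from a two-tone test: expanding the third-order basis term x|x|^2 = x·x·conj(x) for x = e^{jw1 n} + e^{jw2 n} gives components at w1, w2, 2w1 - w2, and 2w2 - w1, so the nonlinear SI lands on predictable subcarriers. The sketch below assumes a memoryless cubic PA model with a hypothetical coefficient `a3` and ignores PA memory, IQ imbalance, and multipath; it illustrates the IMD pattern only and is not the paper's SIC algorithm.

```python
import numpy as np

def nonzero_bins(N=256, k1=20, k2=23, a3=-0.1, tol=1e-6):
    """FFT bins occupied by a two-tone signal after a memoryless cubic nonlinearity."""
    n = np.arange(N)
    x = np.exp(2j * np.pi * k1 * n / N) + np.exp(2j * np.pi * k2 * n / N)  # tones on bins k1, k2
    y = x + a3 * x * np.abs(x) ** 2        # hypothetical PA model: linear + 3rd-order basis term
    Y = np.fft.fft(y) / N
    return [int(k) for k in np.flatnonzero(np.abs(Y) > tol)]

print(nonzero_bins())  # [17, 20, 23, 26]: the tones plus IMD3 at 2*k1-k2 and 2*k2-k1
```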

3D Beamforming Through Joint Phase-Time Arrays

Jan 01, 2024

Abstract: High-frequency, wide-bandwidth cellular communications over mmW and sub-THz bands offer the opportunity for high data rates; however, they also suffer from high path loss, resulting in limited coverage. To mitigate these coverage limitations, high-gain beamforming is essential. Implementing beamforming involves a large number of antennas, which introduces an analog beam constraint, i.e., only one frequency-flat beam is generated per transceiver chain (TRx). The recently introduced joint phase-time array (JPTA) architecture, which uses both true time delay (TTD) units and phase shifters (PSs), alleviates the analog beam constraint by creating multiple frequency-dependent beams per TRx, so that multiple users in different directions can be scheduled in a frequency-division manner. One class of previous studies offered solutions with "rainbow" beams, which tend to allocate a small bandwidth per beam direction. Another class focused on the uniform linear array (ULA) antenna architecture, whose frequency-dependent beams were designed along a single axis, either azimuth or elevation. In this paper, we present a novel 3D beamforming codebook design aimed at maximizing beamforming gain to steer radiation toward desired azimuth and elevation directions, as well as across sub-bands partitioned according to scheduled users' bandwidth requirements. We provide both analytical solutions and iterative algorithms to design the PSs and TTD units for a desired sub-band beam pattern. Simulations of the beamforming gain show that our proposed solutions outperform state-of-the-art solutions reported elsewhere.
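A simplified two-sub-band case shows how PSs and TTDs together can steer different sub-bands toward different azimuth/elevation directions. If element m must have total phase psi1_m at sub-band center f1 and psi2_m at f2, then phi_m + 2*pi*f*tau_m matches both when tau_m = (psi2_m - psi1_m) / (2*pi*(f2 - f1)) and phi_m = psi1_m - 2*pi*f1*tau_m. The sketch below assumes an 8 x 8 half-wavelength UPA in the y-z plane at a hypothetical 28 GHz carrier and ignores TTD range limits and phase quantization; it is a textbook two-frequency closed form, not the paper's codebook design or iterative algorithm.

```python
import numpy as np

C = 299_792_458.0  # speed of light (m/s)

def upa_phase(f, az, el, ny=8, nz=8, fc=28e9):
    """Spatial phase (rad) at frequency f of each element of a ny x nz UPA in the y-z plane
    for a plane wave from (azimuth az, elevation el); spacing is half a wavelength at fc."""
    d = C / fc / 2
    my, mz = np.meshgrid(np.arange(ny), np.arange(nz), indexing="ij")
    path = d * (my * np.cos(el) * np.sin(az) + mz * np.sin(el))  # extra path length (m)
    return (2 * np.pi * f / C * path).ravel()

def design_jpta(f1, dir1, f2, dir2, **array):
    """Closed-form PS phases and TTD delays so that sub-band center f1 points at dir1
    and f2 points at dir2 (two-frequency special case; delay range limits ignored)."""
    psi1 = upa_phase(f1, *dir1, **array)
    psi2 = upa_phase(f2, *dir2, **array)
    # per-element phase phi + 2*pi*f*tau must equal psi1 at f1 and psi2 at f2
    tau = (psi2 - psi1) / (2 * np.pi * (f2 - f1))
    phi = psi1 - 2 * np.pi * f1 * tau
    # a delay common to all elements only adds a common phase, so shift delays to be >= 0
    return np.mod(phi, 2 * np.pi), tau - tau.min()

def gain(phi, tau, f, az, el, **array):
    """Normalized array gain |sum_m exp(j(phi_m + 2*pi*f*tau_m - psi_m))| / N (1.0 = max)."""
    psi = upa_phase(f, az, el, **array)
    return np.abs(np.exp(1j * (phi + 2 * np.pi * f * tau - psi)).sum()) / psi.size

f1, f2 = 27.9e9, 28.1e9                       # two hypothetical sub-band centers
dir1 = (np.deg2rad(-30), np.deg2rad(10))      # user 1: (azimuth, elevation)
dir2 = (np.deg2rad(40), np.deg2rad(-20))      # user 2: (azimuth, elevation)
phi, tau = design_jpta(f1, dir1, f2, dir2)
```

Each sub-band reaches full array gain toward its own user with a single transceiver chain, while the gain toward the other user at that frequency is small.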

Joint Phase-Time Arrays: A Paradigm for Frequency-Dependent Analog Beamforming in 6G

Dec 18, 2023

Abstract: Hybrid beamforming is an attractive solution for building cost-effective and energy-efficient transceivers for millimeter-wave and terahertz systems. However, conventional hybrid beamforming techniques rely on analog components that generate a frequency-flat response, such as phase shifters and switches, which limits the flexibility of the achievable beam patterns. As a novel alternative, this paper proposes a new class of hybrid beamforming called joint phase-time arrays (JPTA), which additionally use true-time delay elements in the analog beamformer to create frequency-dependent analog beams. Two important frequency-dependent beam behaviors are used as examples to illustrate the numerous benefits of such flexibility. Subsequently, the JPTA beamformer design problem for generating any desired beam behavior is formulated, and near-optimal algorithms for the problem are proposed. Simulations show that the proposed algorithms can outperform heuristic solutions for JPTA beamformer update. Furthermore, it is shown that JPTA can achieve the two example beam behaviors with one radio-frequency chain, whereas conventional hybrid beamforming requires the number of radio-frequency chains to scale with the number of antennas to achieve similar performance. Finally, a wide range of problems for further tapping into the potential of JPTA are listed as future directions.

* This paper is a revised version of the IEEE Access paper; it includes the full operation of Algorithms 1-3 to help curtail incorrect implementations.
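One simple frequency-dependent analog beam a JPTA can produce is a beam whose direction sweeps with frequency. With a linear TTD progression tau_n = n*dtau and phase shifters phi_n = -2*pi*fc*tau_n, element n has total phase 2*pi*(f - fc)*n*dtau, so for half-wavelength spacing at fc the beam points where sin(theta(f)) = 2*fc*dtau*(f - fc)/f: broadside at fc, tilted elsewhere in the band. The 16-element ULA, 28 GHz carrier, 400 MHz bandwidth, and delay step below are assumptions; this closed-form sweep is an illustration, not the paper's near-optimal beamformer design, and it ignores TTD range and phase quantization.

```python
import numpy as np

C = 299_792_458.0  # speed of light (m/s)

def peak_angle_deg(f, phi, tau, fc=28e9):
    """Grid-search the direction (deg) of maximum gain of a ULA with per-element
    phase shifts phi (rad) and true time delays tau (s), evaluated at frequency f."""
    n = np.arange(len(phi))
    d = C / fc / 2                                            # half-wavelength spacing at fc
    theta = np.deg2rad(np.linspace(-90.0, 90.0, 1801))        # 0.1-degree grid
    psi = 2 * np.pi * f / C * d * np.outer(np.sin(theta), n)  # (angles, elements)
    g = np.abs(np.exp(1j * (phi + 2 * np.pi * f * tau - psi)).sum(axis=1))
    return float(np.rad2deg(theta[np.argmax(g)]))

# Hypothetical parameters: 16 elements, 28 GHz carrier, 400 MHz bandwidth
N, fc, bw = 16, 28e9, 400e6
f_hi = fc + bw / 2
dtau = 0.5 * f_hi / (2 * fc * (f_hi - fc))   # delay step giving sin(theta) = 0.5 at f_hi
tau = np.arange(N) * dtau                    # linear TTD progression (s)
phi = -2 * np.pi * fc * tau                  # PS cancels the TTD phase at fc: broadside there

for f in (fc - bw / 2, fc, f_hi):
    print(f"{f / 1e9:.1f} GHz -> {peak_angle_deg(f, phi, tau):.1f} deg")
```

A phase-shifter-only array (all `tau` equal to zero) cannot produce this sweep, since its phases do not change across the band; the delays are what make the beam frequency-dependent.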

Optimal preprocessing of WiFi CSI for sensing applications

Jul 22, 2023

Abstract: Due to its ubiquitous and contact-free nature, WiFi infrastructure has tremendous potential for performing sensing tasks. However, the channel state information (CSI) measured by a WiFi receiver suffers from errors in both its gain and phase, which can significantly hinder sensing tasks. By analyzing these errors across different WiFi receivers, this work develops a mathematical model of the gain and phase errors. Based on this model, several theoretically justified preprocessing algorithms are presented for correcting these errors at a receiver and thus obtaining clean CSI. Simulation results show that, at typical system parameters, the developed algorithms reduce noise by 40% and 200% compared to baseline methods for gain correction and phase correction, respectively, without significantly increasing computational cost. The superiority of the proposed methods is also validated in a real-world test bed for respiration rate monitoring (an exemplary sensing task), where they improve the estimation signal-to-noise ratio by 20% compared to baseline methods.
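A widely used baseline for cleaning WiFi CSI phase (often called phase sanitization) fits a straight line to the unwrapped phase across subcarrier indices and subtracts it, removing a linear-in-frequency error and a constant offset. The sketch below implements that baseline, not the paper's model-based algorithms; the 57-subcarrier index range and the synthetic slope and offset errors are assumptions.

```python
import numpy as np

def sanitize_phase(csi, subcarriers):
    """Baseline linear phase sanitization of one CSI snapshot.

    Fits phase(k) ~ slope * k + offset across subcarrier indices k and subtracts it,
    removing a linear-in-frequency error (commonly attributed to timing offsets)
    and a constant error (commonly attributed to carrier phase offsets).
    """
    subcarriers = np.asarray(subcarriers, dtype=float)
    phase = np.unwrap(np.angle(csi))                    # undo 2*pi jumps across subcarriers
    slope, offset = np.polyfit(subcarriers, phase, 1)   # least-squares line fit
    return phase - (slope * subcarriers + offset)

# Usage: hypothetical 57-subcarrier snapshot whose true phase has no linear trend
k = np.arange(-28, 29)
true_phase = 0.2 * np.cos(2 * np.pi * k / 57)
measured = np.exp(1j * (true_phase + 0.1 * k + 2.0))    # add slope and offset errors
clean = sanitize_phase(measured, k)
```

This baseline also removes any genuine linear component of the channel phase, which is one limitation of treating every linear trend as error.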

RCNet: Incorporating Structural Information into Deep RNN for MIMO-OFDM Symbol Detection with Limited Training

Mar 15, 2020

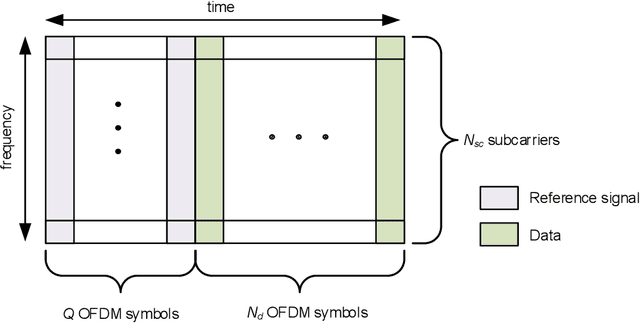

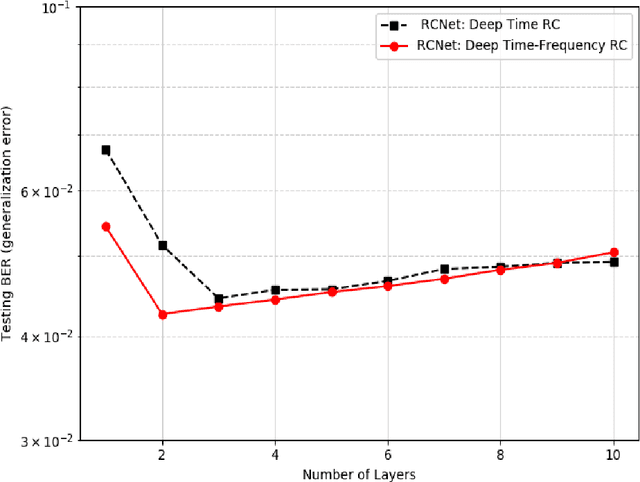

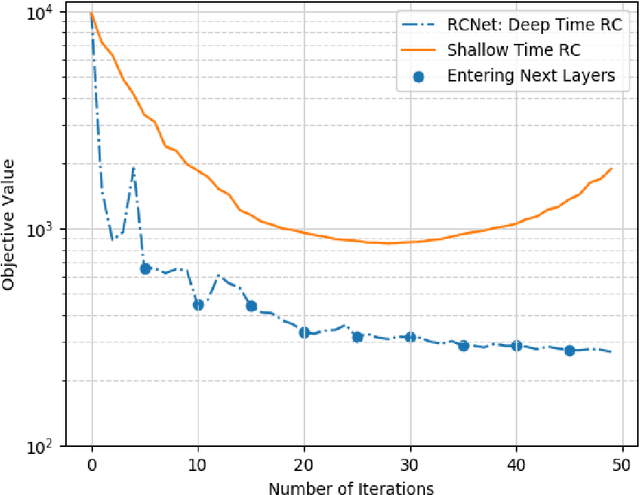

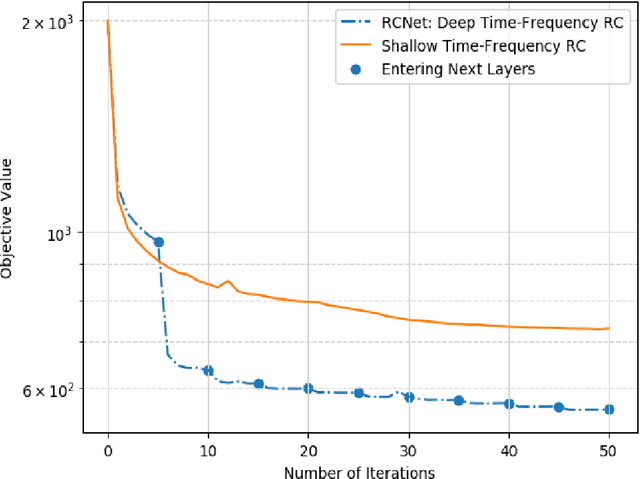

Abstract: In this paper, we investigate learning-based MIMO-OFDM symbol detection strategies focusing on a special recurrent neural network (RNN): reservoir computing (RC). We first introduce the time-frequency RC to take advantage of the structural information inherent in OFDM signals. Using the time-domain RC and the time-frequency RC as building blocks, we provide two extensions of the shallow RC to RCNet: 1) stacking multiple time-domain RCs; 2) stacking multiple time-frequency RCs into a deep structure. The combination of RNN dynamics, the time-frequency structure of MIMO-OFDM signals, and the deep network enables RCNet to handle the interference and nonlinear distortion of MIMO-OFDM signals and to outperform existing methods. Unlike most existing NN-based detection strategies, RCNet is also shown to generalize well even with a limited training set (i.e., an amount of reference signals/training similar to that of standard model-based approaches). Numerical experiments demonstrate that the introduced RCNet offers faster learning convergence and as much as a 20% gain in bit error rate over a shallow RC structure by compensating for nonlinear distortion of the MIMO-OFDM signal, such as that caused by power amplifier compression in the transmitter or finite quantization resolution in the receiver.
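The core of a time-domain RC is a fixed random recurrent layer whose states are mapped to outputs by a linear readout trained with ridge regression; only the readout is learned, which is one reason RC can work with little training data. The sketch below is a plain single-layer echo state network applied to a hypothetical real-valued BPSK link with two-tap intersymbol interference, mild cubic compression, and additive noise; it is not RCNet, and the time-frequency RC, stacking, and MIMO-OFDM processing are omitted. The reservoir size, spectral radius, input scaling, and ridge factor are assumptions.

```python
import numpy as np

class EchoStateNetwork:
    """Time-domain reservoir computer: fixed random recurrent layer + trained linear readout."""

    def __init__(self, n_in, n_res=64, rho=0.9, in_scale=0.5, ridge=1e-4, seed=0):
        rng = np.random.default_rng(seed)
        W = rng.standard_normal((n_res, n_res))
        self.W = W * (rho / np.max(np.abs(np.linalg.eigvals(W))))  # set spectral radius to rho
        self.W_in = in_scale * rng.uniform(-1, 1, (n_res, n_in))
        self.ridge = ridge
        self.W_out = None

    def states(self, u):
        """Run the reservoir over inputs u of shape (T, n_in); return (T, n_res + n_in)."""
        x = np.zeros(self.W.shape[0])
        out = []
        for u_t in u:
            x = np.tanh(self.W @ x + self.W_in @ u_t)
            out.append(np.concatenate([x, u_t]))   # also expose the raw input to the readout
        return np.array(out)

    def fit(self, u, y):
        S = self.states(u)
        # ridge regression trains only the readout; the reservoir weights stay fixed
        A = S.T @ S + self.ridge * np.eye(S.shape[1])
        self.W_out = np.linalg.solve(A, S.T @ y)
        return self

    def predict(self, u):
        return self.states(u) @ self.W_out

# Usage: equalize BPSK sent through a hypothetical 2-tap channel with mild cubic compression
rng = np.random.default_rng(1)

def channel(s):
    y = np.convolve(s, [1.0, 0.5])[: len(s)]     # intersymbol interference
    y = y - 0.05 * y**3                          # memoryless compression
    return y + 0.05 * rng.standard_normal(len(s))

s_train = rng.choice([-1.0, 1.0], 500)
s_test = rng.choice([-1.0, 1.0], 500)
esn = EchoStateNetwork(n_in=1).fit(channel(s_train)[:, None], s_train)
ber = np.mean(np.sign(esn.predict(channel(s_test)[:, None])) != s_test)
```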
