Elza Erkip

Charlie

Learned Pulse Shaping Design for PAPR Reduction in DFT-s-OFDM

Apr 24, 2024

Abstract:High peak-to-average power ratio (PAPR) is one of the main factors limiting cell coverage for cellular systems, especially in the uplink direction. Discrete Fourier transform spread orthogonal frequency-division multiplexing (DFT-s-OFDM) with spectrally-extended frequency-domain spectrum shaping (FDSS) is one of the efficient techniques deployed to lower the PAPR of the uplink waveforms. In this work, we propose a machine learning-based framework to determine the FDSS filter, optimizing a tradeoff between the symbol error rate (SER), the PAPR, and the spectral flatness requirements. Our end-to-end optimization framework considers multiple important design constraints, including the Nyquist zero-ISI (inter-symbol interference) condition. The numerical results show that learned FDSS filters lower the PAPR compared to conventional baselines, with minimal SER degradation. Tuning the parameters of the optimization also helps us understand the fundamental limitations and characteristics of the FDSS filters for PAPR reduction.
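As a rough illustration of the quantity being traded off, the sketch below builds DFT-s-OFDM symbols with and without a frequency-domain shaping window and measures their PAPR. It is not the learned filter from the paper. The helper names (`dft`, `cosine_taper`, `dft_s_ofdm_symbol`, `mean_papr_db`), the cosine taper, the QPSK data, and the sizes (16 subcarriers, 64-point IFFT) are assumptions chosen for illustration, and the taper is neither spectrally extended nor constrained to satisfy the zero-ISI condition.

```python
import cmath
import math
import random


def dft(x, inverse=False):
    """Naive O(N^2) DFT with unitary (1/sqrt(N)) scaling."""
    n = len(x)
    sign = 1.0 if inverse else -1.0
    scale = 1.0 / math.sqrt(n)
    return [
        scale * sum(x[k] * cmath.exp(sign * 2j * math.pi * k * m / n) for k in range(n))
        for m in range(n)
    ]


def papr_db(samples):
    """Peak-to-average power ratio of a block of samples, in dB."""
    powers = [abs(s) ** 2 for s in samples]
    return 10 * math.log10(max(powers) / (sum(powers) / len(powers)))


def cosine_taper(m, depth):
    """Hypothetical FDSS window: largest mid-band, smallest at the band edges."""
    return [1.0 - depth * math.cos(2 * math.pi * (k + 0.5) / m) for k in range(m)]


def dft_s_ofdm_symbol(symbols, fdss, n_fft):
    """DFT-spread the data, weight each subcarrier, zero-pad, then IFFT."""
    spread = dft(symbols)
    shaped = [w * s for w, s in zip(fdss, spread)]
    grid = shaped + [0j] * (n_fft - len(shaped))  # zero-padding oversamples in time
    return dft(grid, inverse=True)


def mean_papr_db(fdss, n_fft=64, trials=50, seed=0):
    """Average PAPR over random QPSK blocks (one data symbol per FDSS weight)."""
    rng = random.Random(seed)
    qpsk = [complex(a, b) / math.sqrt(2) for a in (1, -1) for b in (1, -1)]
    total = 0.0
    for _ in range(trials):
        data = [rng.choice(qpsk) for _ in fdss]
        total += papr_db(dft_s_ofdm_symbol(data, fdss, n_fft))
    return total / trials


print("flat window  :", round(mean_papr_db([1.0] * 16), 2), "dB")
print("cosine taper :", round(mean_papr_db(cosine_taper(16, 0.5)), 2), "dB")
```

Comparing the two printed averages gives a feel for how the shaping weights change the envelope. A non-flat window without spectral extension also introduces ISI, which is why the paper's framework treats the SER and the zero-ISI condition as part of the design.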

Neural Compress-and-Forward for the Relay Channel

Apr 22, 2024

Abstract:The relay channel, consisting of a source-destination pair and a relay, is a fundamental component of cooperative communications. While the capacity of a general relay channel remains unknown, various relaying strategies, including compress-and-forward (CF), have been proposed. For CF, given the correlated signals at the relay and destination, distributed compression techniques, such as Wyner-Ziv coding, can be harnessed to utilize the relay-to-destination link more efficiently. In light of the recent advancements in neural network-based distributed compression, we revisit the relay channel problem, where we integrate a learned one-shot Wyner-Ziv compressor into a primitive relay channel with a finite-capacity and orthogonal (or out-of-band) relay-to-destination link. The resulting neural CF scheme demonstrates that our task-oriented compressor recovers "binning" of the quantized indices at the relay, mimicking the optimal asymptotic CF strategy, although no structure exploiting the knowledge of source statistics was imposed into the design. We show that the proposed neural CF scheme, employing finite order modulation, operates close to the capacity of a primitive relay channel that assumes a Gaussian codebook. Our learned compressor provides the first proof-of-concept work toward a practical neural CF relaying scheme.

Towards Efficient Device Identification in Massive Random Access: A Multi-stage Approach

Apr 13, 2024

Abstract:Efficient and low-latency wireless connectivity between the base station (BS) and a sparse set of sporadically active devices from a massive number of devices is crucial for random access in emerging massive machine-type communications (mMTC). This paper addresses the challenge of identifying active devices while meeting stringent access delay and reliability constraints in mMTC environments. A novel multi-stage active device identification framework is proposed where we aim to refine a partial estimate of the active device set using feedback and hypothesis testing across multiple stages, eventually leading to an exact recovery of active devices after the final stage of processing. In our proposed approach, active devices independently transmit binary preambles during each stage, leveraging feedback signals from the BS, whereas the BS employs non-coherent binary energy detection. The minimum user identification cost associated with our multi-stage non-coherent active device identification framework with feedback, in terms of the required number of channel-uses, is quantified using information-theoretic techniques in the asymptotic regime of the total number of devices $\ell$ when the number of active devices $k$ scales as $k = \Theta(1)$. Practical implementations of our multi-stage active device identification schemes, leveraging Belief Propagation (BP) techniques, are also presented and evaluated. Simulation results show that our multi-stage BP strategies exhibit superior performance over single-stage strategies, even when considering overhead costs associated with feedback and hypothesis testing.

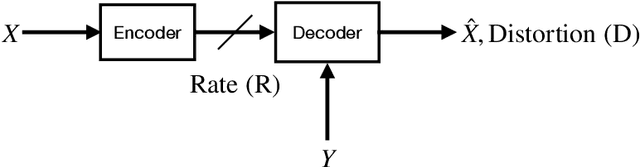

Robust Distributed Compression with Learned Heegard-Berger Scheme

Mar 13, 2024

Abstract:We consider lossy compression of an information source when decoder-only side information may be absent. This setup, also referred to as the Heegard-Berger or Kaspi problem, is a special case of robust distributed source coding. Building upon previous works on neural network-based distributed compressors developed for the decoder-only side information (Wyner-Ziv) case, we propose learning-based schemes that are amenable to the availability of side information. We find that our learned compressors mimic the achievability part of the Heegard-Berger theorem and yield interpretable results operating close to information-theoretic bounds. Depending on the availability of the side information, our neural compressors recover characteristics of the point-to-point (i.e., with no side information) and the Wyner-Ziv coding strategies that include binning in the source space, although no structure exploiting knowledge of the source and side information was imposed into the design.

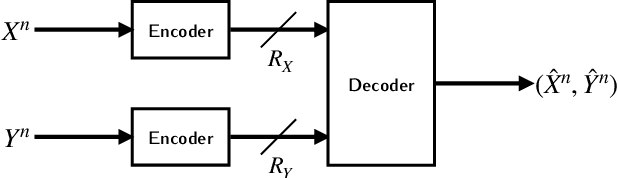

Distributed Compression in the Era of Machine Learning: A Review of Recent Advances

Feb 12, 2024

Abstract:Many applications from camera arrays to sensor networks require efficient compression and processing of correlated data, which in general is collected in a distributed fashion. While information-theoretic foundations of distributed compression are well investigated, the impact of theory in practice-oriented applications to this day has been somewhat limited. As the field of data compression is undergoing a transformation with the emergence of learning-based techniques, machine learning is becoming an important tool to reap the long-promised benefits of distributed compression. In this paper, we review the recent contributions in the broad area of learned distributed compression techniques for abstract sources and images. In particular, we discuss approaches that provide interpretable results operating close to information-theoretic bounds. We also highlight unresolved research challenges, aiming to inspire fresh interest and advancements in the field of learned distributed compression.

3D Beamforming Through Joint Phase-Time Arrays

Jan 01, 2024

Abstract:High-frequency wide-bandwidth cellular communications over mmW and sub-THz bands offer the opportunity for high data rates; however, they also suffer from high pathloss, resulting in limited coverage. To mitigate the coverage limitations, high-gain beamforming is essential. Implementation of beamforming involves a large number of antennas, which introduces an analog beam constraint, i.e., only one frequency-flat beam is generated per transceiver chain (TRx). The recently introduced joint phase-time array (JPTA) architecture, which utilizes both true time delay (TTD) units and phase shifters (PSs), alleviates the analog beam constraint by creating multiple frequency-dependent beams per TRx, for scheduling multiple users in different directions in a frequency-division manner. One class of previous studies offered solutions with "rainbow" beams, which tend to allocate a small bandwidth per beam direction. Another class focused on uniform linear array (ULA) antenna architecture, whose frequency-dependent beams were designed along a single axis of either azimuth or elevation direction. In this paper, we present a novel 3D beamforming codebook design aimed at maximizing beamforming gain to steer radiation toward desired azimuth and elevation directions, as well as across sub-bands partitioned according to scheduled users' bandwidth requirements. We provide both analytical solutions and iterative algorithms to design the PSs and TTD units for a desired sub-band beam pattern. Through simulations of the beamforming gain, we observe that our proposed solutions outperform the state-of-the-art solutions reported elsewhere.
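To see why combining TTD units with phase shifters yields frequency-dependent beams, the sketch below evaluates the normalized gain of a one-dimensional array. This is a simplified ULA model with a uniform delay progression, not the 3D codebook design of the paper. The function names, the half-wavelength spacing, the narrowband steering vector (beam squint ignored), and the values of `tau` and `delta_f` are assumptions made for illustration.

```python
import cmath
import math


def jpta_gain(theta_deg, delta_f, n_ant, theta0_deg, tau):
    """Normalized gain (max 1) toward theta_deg at baseband offset delta_f (Hz).

    Assumed model: element n applies a phase shift pi*n*sin(theta0) and a
    true time delay n*tau (seconds); elements are half a wavelength apart.
    """
    s = math.sin(math.radians(theta_deg))
    s0 = math.sin(math.radians(theta0_deg))
    total = 0j
    for n in range(n_ant):
        phase_shifter = math.pi * n * s0               # frequency-flat term
        ttd = -2 * math.pi * delta_f * n * tau         # frequency-dependent term
        steering = -math.pi * n * s                    # array response toward theta
        total += cmath.exp(1j * (phase_shifter + ttd + steering))
    return abs(total / n_ant) ** 2


def peak_direction_deg(delta_f, theta0_deg, tau):
    """Direction where the three phase terms cancel for every element."""
    s = math.sin(math.radians(theta0_deg)) - 2 * delta_f * tau
    return math.degrees(math.asin(s))


# Hypothetical numbers: 16 antennas, 1 ns delay step, offsets within +/-200 MHz.
for offset_hz in (-200e6, 0.0, 200e6):
    peak = peak_direction_deg(offset_hz, 0.0, 1e-9)
    gain = jpta_gain(peak, offset_hz, 16, 0.0, 1e-9)
    print(f"offset {offset_hz / 1e6:+.0f} MHz: peak at {peak:.2f} deg, gain {gain:.3f}")
```

With `tau = 0` the TTD term vanishes and the same beam is produced at every frequency, which is the analog beam constraint described above. In this toy model a nonzero delay step sweeps the beam direction linearly in the sine of the angle across the band.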

Neural Distributed Compressor Discovers Binning

Oct 25, 2023

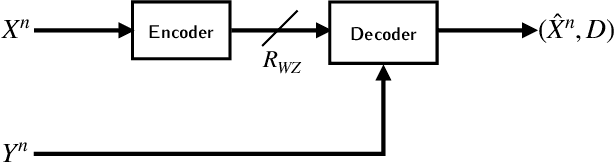

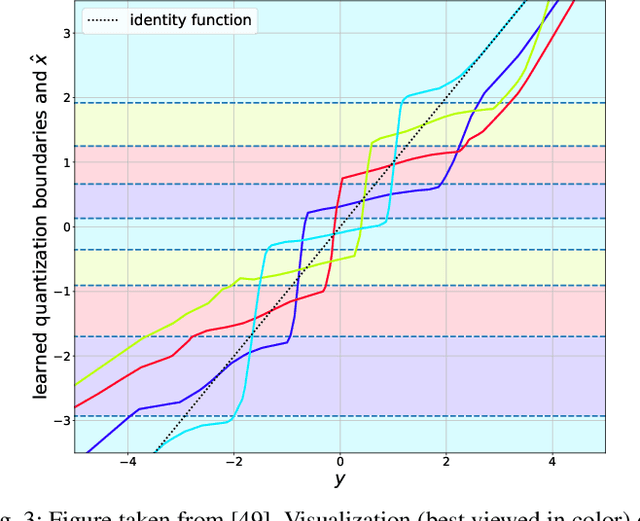

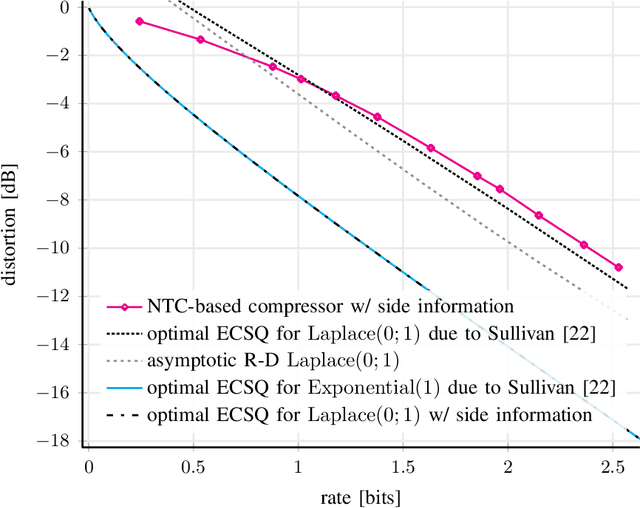

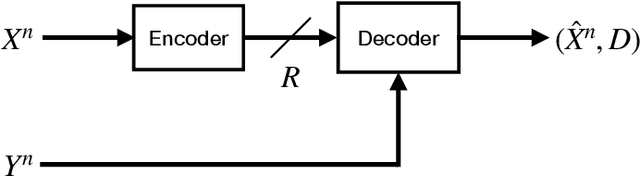

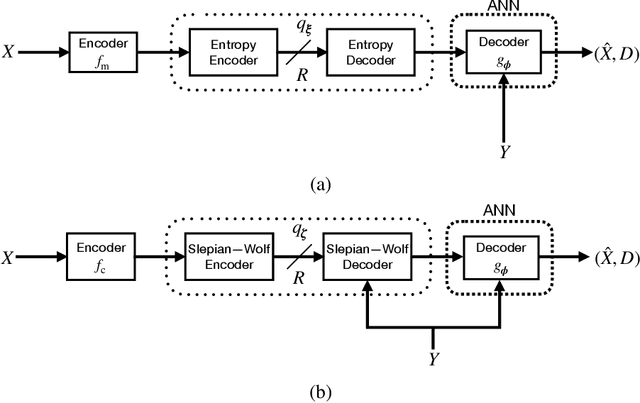

Abstract:We consider lossy compression of an information source when the decoder has lossless access to a correlated one. This setup, also known as the Wyner-Ziv problem, is a special case of distributed source coding. To this day, practical approaches for the Wyner-Ziv problem have neither been fully developed nor heavily investigated. We propose a data-driven method based on machine learning that leverages the universal function approximation capability of artificial neural networks. We find that our neural network-based compression scheme, based on variational vector quantization, recovers some principles of the optimum theoretical solution of the Wyner-Ziv setup, such as binning in the source space as well as optimal combination of the quantization index and side information, for exemplary sources. These behaviors emerge although no structure exploiting knowledge of the source distributions was imposed. Binning is a widely used tool in information theoretic proofs and methods, and to our knowledge, this is the first time it has been explicitly observed to emerge from data-driven learning.

Distributed Deep Joint Source-Channel Coding with Decoder-Only Side Information

Oct 06, 2023

Abstract:We consider low-latency image transmission over a noisy wireless channel when correlated side information is present only at the receiver side (the Wyner-Ziv scenario). In particular, we are interested in developing practical schemes using a data-driven joint source-channel coding (JSCC) approach, which has been previously shown to outperform conventional separation-based approaches in the practical finite blocklength regimes, and to provide graceful degradation with channel quality. We propose a novel neural network architecture that incorporates the decoder-only side information at multiple stages at the receiver side. Our results demonstrate that the proposed method succeeds in integrating the side information, yielding improved performance at all channel noise levels in terms of the various distortion criteria considered here, especially at low channel signal-to-noise ratios (SNRs) and small bandwidth ratios (BRs). We also provide the source code of the proposed method to enable further research and reproducibility of the results.

Learned Wyner-Ziv Compressors Recover Binning

May 07, 2023

Abstract:We consider lossy compression of an information source when the decoder has lossless access to a correlated one. This setup, also known as the Wyner-Ziv problem, is a special case of distributed source coding. To this day, real-world applications of this problem have neither been fully developed nor heavily investigated. We propose a data-driven method based on machine learning that leverages the universal function approximation capability of artificial neural networks. We find that our neural network-based compression scheme re-discovers some principles of the optimum theoretical solution of the Wyner-Ziv setup, such as binning in the source space as well as linear decoder behavior within each quantization index, for the quadratic-Gaussian case. These behaviors emerge although no structure exploiting knowledge of the source distributions was imposed. Binning is a widely used tool in information theoretic proofs and methods, and to our knowledge, this is the first time it has been explicitly observed to emerge from data-driven learning.
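For readers unfamiliar with binning, the following hand-built scalar example shows the idea that the learned compressors are reported to rediscover. It is a textbook-style construction, not the neural scheme from the paper. The function names, the uniform quantizer, the modulo bin assignment, and the step size and bin count are assumptions made for illustration. The encoder sends only the bin of the quantization index, and the decoder resolves the ambiguity with its side information `y`.

```python
def encode(x, step, n_bins):
    """Quantize x uniformly, then keep only the index modulo n_bins (the bin)."""
    return round(x / step) % n_bins


def decode(bin_index, y, step, n_bins):
    """Pick the index in the received bin whose reconstruction is closest to y."""
    q_y = round(y / step)
    offset = (bin_index - q_y) % n_bins            # value in 0..n_bins-1
    candidates = (q_y + offset, q_y + offset - n_bins)
    q_hat = min(candidates, key=lambda q: abs(q * step - y))
    return q_hat * step


step, n_bins = 0.5, 4                              # hypothetical parameters

# Side information close to the source: the bin is resolved correctly.
print(decode(encode(3.2, step, n_bins), 3.0, step, n_bins))

# Side information far from the source: the wrong member of the bin is chosen.
print(decode(encode(3.2, step, n_bins), 0.9, step, n_bins))
```

The rate depends on the number of bins rather than on the number of quantization cells, which is where the saving comes from. This sketch reconstructs with the cell center only. The abstract above additionally reports decoder behavior that is linear within each quantization index, which this example does not model.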

Active User Identification in Fast Fading Massive Random Access Channels

Mar 30, 2023

Abstract:Reliable and prompt identification of active users is critical for enabling random access in massive machine-to-machine type networks which typically operate within stringent access delay and energy constraints. In this paper, an energy efficient active user identification protocol is envisioned in which the active users simultaneously transmit On-Off Keying (OOK) modulated preambles whereas the base station uses non-coherent detection to avoid the channel estimation overheads. The minimum number of channel-uses required for active user identification in the asymptotic regime of the total number of users $\ell$ when the number of active devices $k$ scales as $k = \Theta(1)$ is characterized along with an achievability scheme relying on the equivalence of activity detection to a group testing problem. A practical scheme for active user identification based on a belief propagation strategy is also proposed and its performance is compared against the theoretical bounds.
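The equivalence between activity detection and group testing can be sketched in a noiseless toy setting. The code below is not the paper's belief propagation scheme and ignores fading and noise. The function names, the random Bernoulli preambles, and the simple elimination decoder (often called COMP in the group testing literature) are assumptions made for illustration. Each channel use acts as one pooled test: the base station only learns whether any active user transmitted energy.

```python
import random


def make_preambles(n_users, n_uses, p_on, rng):
    """Random binary (OOK) preamble per user; 1 means 'transmit' in that channel use."""
    return [[1 if rng.random() < p_on else 0 for _ in range(n_uses)]
            for _ in range(n_users)]


def energy_detect(preambles, active):
    """Idealized non-coherent detector: 1 if any active user is 'on', else 0."""
    n_uses = len(preambles[0])
    return [int(any(preambles[u][t] for u in active)) for t in range(n_uses)]


def comp_decode(preambles, detected):
    """Keep every user whose 'on' slots all coincide with detected energy."""
    return {u for u, code in enumerate(preambles)
            if all(d or not bit for bit, d in zip(code, detected))}


rng = random.Random(1)                      # hypothetical sizes below
codes = make_preambles(n_users=50, n_uses=40, p_on=0.3, rng=rng)
active = {3, 17, 42}
estimate = comp_decode(codes, energy_detect(codes, active))
print("active:", sorted(active), "estimate:", sorted(estimate))
```

In this noiseless model the estimate always contains the true active set, and extra users appear only when their preambles happen to be covered by the active ones. Fewer channel uses make such false positives more likely, which is the cost that the abstract quantifies in terms of the minimum number of channel-uses.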
