Joonyoung Cho

Learned Pulse Shaping Design for PAPR Reduction in DFT-s-OFDM

Apr 24, 2024

Abstract: High peak-to-average power ratio (PAPR) is one of the main factors limiting cell coverage in cellular systems, especially in the uplink. Discrete Fourier transform spread orthogonal frequency-division multiplexing (DFT-s-OFDM) with spectrally extended frequency-domain spectrum shaping (FDSS) is an efficient technique deployed to lower the PAPR of uplink waveforms. In this work, we propose a machine learning-based framework to determine the FDSS filter, optimizing a tradeoff between the symbol error rate (SER), the PAPR, and the spectral flatness requirements. Our end-to-end optimization framework considers multiple important design constraints, including the Nyquist zero-ISI (inter-symbol interference) condition. The numerical results show that learned FDSS filters lower the PAPR compared to conventional baselines, with minimal SER degradation. Tuning the parameters of the optimization also helps us understand the fundamental limitations and characteristics of FDSS filters for PAPR reduction.
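As a rough illustration of the quantities named in this abstract, the sketch below builds a DFT-s-OFDM symbol and measures its PAPR with and without a frequency-domain window. It is a minimal sketch under stated assumptions, not the paper's learned filter: `toy_window`, the sizes (16 QPSK symbols, 64 subcarriers), and the localized subcarrier mapping are all hypothetical choices, and spectral extension is omitted, so this window does not satisfy the zero-ISI condition.

```python
import cmath
import math
import random


def dft(x):
    """Unitary DFT (naive O(n^2), adequate for small illustrative sizes)."""
    n = len(x)
    return [
        sum(x[k] * cmath.exp(-2j * math.pi * k * m / n) for k in range(n)) / math.sqrt(n)
        for m in range(n)
    ]


def idft(X):
    """Unitary inverse DFT."""
    n = len(X)
    return [
        sum(X[m] * cmath.exp(2j * math.pi * k * m / n) for m in range(n)) / math.sqrt(n)
        for k in range(n)
    ]


def papr_db(signal):
    """Peak-to-average power ratio of a sampled signal, in dB."""
    powers = [abs(s) ** 2 for s in signal]
    return 10 * math.log10(max(powers) / (sum(powers) / len(powers)))


def dft_s_ofdm(symbols, n_fft, fdss=None):
    """DFT-spread the symbols, optionally weight them per subcarrier (FDSS),
    map them to the first len(symbols) subcarriers, and return time samples."""
    spread = dft(symbols)
    if fdss is not None:
        spread = [w * s for w, s in zip(fdss, spread)]
    grid = spread + [0j] * (n_fft - len(spread))
    return idft(grid)


def toy_window(m, edge_gain=0.5):
    """Hypothetical taper: close to 1.0 mid-band, close to edge_gain at the edges."""
    return [
        edge_gain + (1 - edge_gain) * math.sin(math.pi * (k + 0.5) / m)
        for k in range(m)
    ]


random.seed(0)
qpsk = [
    complex(random.choice((-1, 1)), random.choice((-1, 1))) / math.sqrt(2)
    for _ in range(16)
]
plain = dft_s_ofdm(qpsk, n_fft=64)
shaped = dft_s_ofdm(qpsk, n_fft=64, fdss=toy_window(16))
print(f"PAPR without FDSS:  {papr_db(plain):.2f} dB")
print(f"PAPR with toy FDSS: {papr_db(shaped):.2f} dB")
```

A single random symbol says little about either waveform; a meaningful comparison would average over many symbols and report the PAPR distribution alongside the SER cost of the window.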

Nested Construction of Polar Codes via Transformers

Jan 30, 2024

Abstract: Tailoring polar code construction for decoding algorithms beyond successive cancellation has remained a topic of significant interest in the field. However, despite the inherent nested structure of polar codes, the use of sequence models in polar code construction is understudied. In this work, we propose using a sequence modeling framework to iteratively construct a polar code for any given length and rate under various channel conditions. Simulations show that polar codes designed via sequence modeling with transformers outperform both the 5G-NR sequence and density-evolution-based approaches on both AWGN and Rayleigh fading channels.
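The nested structure mentioned in this abstract can be illustrated with a classical construction. The sketch below is not the transformer-based method: it ranks bit-channels by their Bhattacharyya parameters on a binary erasure channel (an assumption made here because the recursion is exact in that case) and takes the first K positions of the ranking as the information set, so the sets for different rates are nested by construction. The function names are hypothetical.

```python
def bec_bhattacharyya(n_levels, erasure_prob):
    """Bhattacharyya parameters of the 2**n_levels bit-channels of a polar
    code on a BEC, in natural index order (Arikan's recursion)."""
    z = [erasure_prob]
    for _ in range(n_levels):
        nxt = []
        for v in z:
            nxt.append(2 * v - v * v)  # degraded ("minus") channel
            nxt.append(v * v)          # upgraded ("plus") channel
        z = nxt
    return z


def reliability_order(z):
    """Bit-channel indices sorted from most to least reliable."""
    return sorted(range(len(z)), key=lambda i: z[i])


def info_set(order, k):
    """Information set of a rate-k/N code: the k most reliable positions."""
    return set(order[:k])


z = bec_bhattacharyya(n_levels=3, erasure_prob=0.5)  # block length N = 8
order = reliability_order(z)
for k in range(1, 5):
    print(k, sorted(info_set(order, k)))
```

Because every information set is a prefix of one ordering, increasing K only ever adds positions. A sequence model that emits one position at a time fits this structure naturally.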

Predicting Future CSI Feedback For Highly-Mobile Massive MIMO Systems

Feb 05, 2022

Abstract: Massive multiple-input multiple-output (MIMO) systems are promising for providing unprecedentedly high data rates. To achieve their full potential, the transceiver needs complete channel state information (CSI) to perform transmit precoding and receive combining. This requirement, however, is challenging in practical systems because of unavoidable processing and feedback delays, which often degrade performance considerably, especially in high-mobility scenarios. In this paper, we develop a deep learning-based channel prediction framework that proactively predicts the downlink CSI from the past observed channel sequence. At its core, the model adopts a 3-D convolutional neural network (CNN) architecture to efficiently learn the temporal, spatial, and frequency correlations of downlink channel samples, on which accurate channel prediction can be based. Simulation results highlight the potential of the developed learning model to extract information and predict future downlink channels directly from the observed past channel sequence, which significantly improves performance compared to the sample-and-hold approach and mitigates the impact of the dynamic communication environment.
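The sample-and-hold baseline named in this abstract can be sketched in a few lines: the most recent (delayed) channel sample is reused as the estimate of the current channel. The example below is a toy sketch only. The single-tap channel with a constant phase rotation per step, the `phase_step` values, and the use of normalized mean squared error (NMSE) as the metric are assumptions made here, not details taken from the paper.

```python
import cmath
import math


def nmse(pred, true):
    """Normalized mean squared error between predicted and true samples."""
    err = sum(abs(p - t) ** 2 for p, t in zip(pred, true))
    ref = sum(abs(t) ** 2 for t in true)
    return err / ref


def sample_and_hold(channel, delay):
    """Pair each true sample with the one observed `delay` steps earlier.
    Returns (held_estimates, true_values); requires delay >= 1."""
    if delay < 1:
        raise ValueError("delay must be at least 1")
    return channel[:-delay], channel[delay:]


def toy_channel(n, phase_step):
    """Hypothetical single-tap channel rotating by phase_step radians per step."""
    return [cmath.exp(1j * phase_step * t) for t in range(n)]


h = toy_channel(200, phase_step=0.1)
for delay in (1, 2, 5):
    held, true = sample_and_hold(h, delay)
    print(f"delay={delay}: NMSE={nmse(held, true):.4f}")
```

For this toy channel the error grows with the feedback delay, which is the gap a learned predictor is meant to close.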

PolarDenseNet: A Deep Learning Model for CSI Feedback in MIMO Systems

Feb 02, 2022

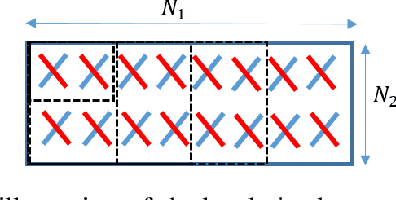

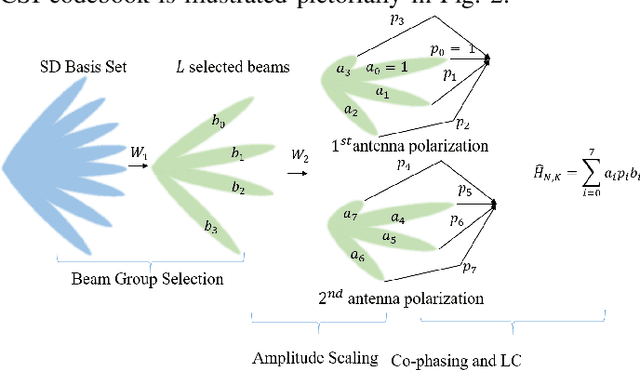

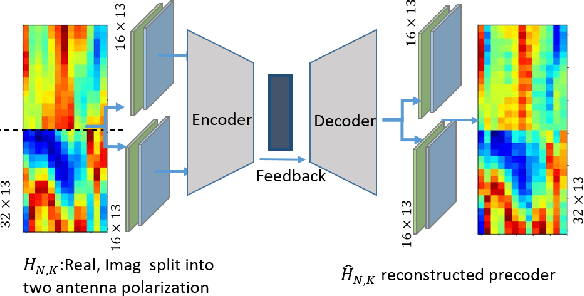

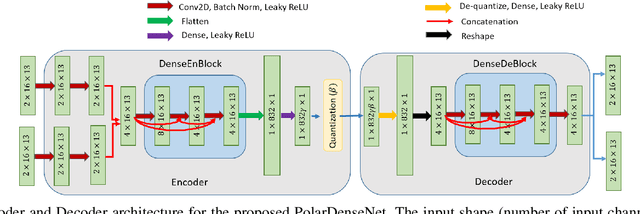

Abstract: In multiple-input multiple-output (MIMO) systems, high-resolution channel state information (CSI) is required at the base station (BS) to ensure optimal performance, especially in multi-user MIMO (MU-MIMO) systems. In frequency division duplex (FDD) systems, where channel reciprocity does not hold, the user equipment (UE) needs to send the CSI to the BS. The large overhead associated with this CSI feedback often becomes the bottleneck in improving system performance. In this paper, we propose an AI-based CSI feedback scheme built on an auto-encoder architecture that encodes the CSI at the UE into a low-dimensional latent space and decodes it at the BS, effectively reducing the feedback overhead while minimizing the loss during recovery. Our simulation results show that the proposed AI-based architecture outperforms the state-of-the-art high-resolution linear combination codebook using the DFT basis adopted in the 5G New Radio (NR) system.

Turbo Autoencoder with a Trainable Interleaver

Nov 22, 2021

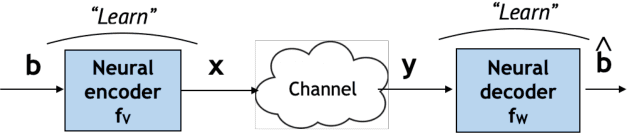

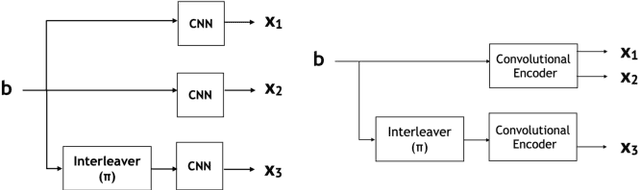

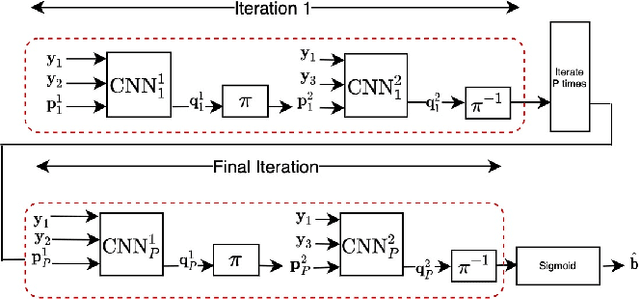

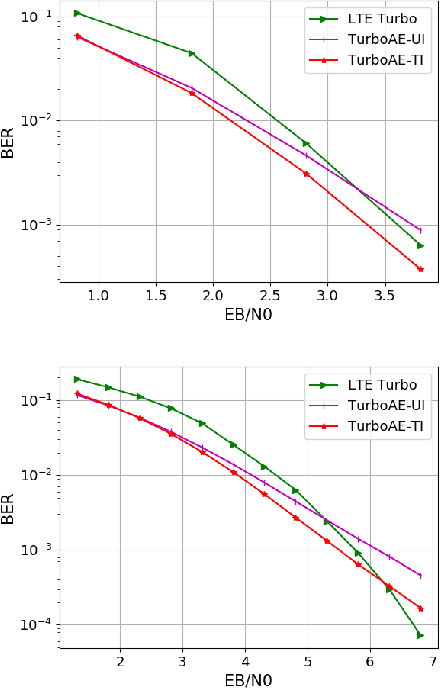

Abstract: A critical aspect of reliable communication is the design of codes that allow transmissions to be decoded robustly and with low computational cost under noisy conditions. Advances in the design of reliable codes have been driven by coding theory and have been sporadic. Recently, it has been shown that channel codes comparable to modern codes can be learned solely via deep learning. In particular, the Turbo Autoencoder (TURBOAE), introduced by Jiang et al., has been shown to achieve the reliability of Turbo codes on Additive White Gaussian Noise channels. In this paper, we focus on applying the idea of TURBOAE to various practical channels, such as fading channels and chirp noise channels. We introduce TURBOAE-TI, a novel neural architecture that combines TURBOAE with a trainable interleaver design. We develop a carefully designed training procedure and a novel interleaver penalty function that are crucial for learning the interleaver and TURBOAE jointly. We demonstrate that TURBOAE-TI outperforms TURBOAE and LTE Turbo codes on several channels of interest. We also provide an interpretation analysis to better understand TURBOAE-TI.
