Pranav Madadi

Predicting Future CSI Feedback For Highly-Mobile Massive MIMO Systems

Feb 05, 2022

Abstract: Massive multiple-input multiple-output (MIMO) systems are promising for providing unprecedentedly high data rates. To achieve their full potential, the transceiver needs complete channel state information (CSI) to perform transmit/receive precoding/combining. This requirement, however, is challenging in practical systems due to the unavoidable processing and feedback delays, which often degrade performance to a great extent, especially in high-mobility scenarios. In this paper, we develop a deep learning based channel prediction framework that proactively predicts the downlink channel state information based on the past observed channel sequence. At its core, the model adopts a 3-D convolutional neural network (CNN) based architecture to efficiently learn the temporal, spatial and frequency correlations of downlink channel samples, based on which accurate channel prediction can be performed. Simulation results highlight the potential of the developed learning model in extracting information and predicting future downlink channels directly from the observed past channel sequence, which significantly improves the performance compared to the sample-and-hold approach and mitigates the impact of the dynamic communication environment.
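For readers unfamiliar with the sample-and-hold baseline mentioned in the abstract, the following is a minimal sketch of it, not the paper's code. It assumes a toy first-order autoregressive (AR(1)) complex channel and uses normalized mean squared error (NMSE) as the metric; the function names, the AR(1) model, and the parameter `rho` are all illustrative assumptions and do not come from the paper.

```python
import math
import random


def simulate_channel(num_steps, rho, seed=0):
    """Generate a toy AR(1) complex channel sequence (an assumed model).

    rho is the correlation between consecutive samples; a lower rho
    loosely mimics higher mobility.
    """
    rng = random.Random(seed)

    def noise():
        # Unit-variance circularly symmetric complex Gaussian sample.
        s = math.sqrt(0.5)
        return complex(rng.gauss(0.0, s), rng.gauss(0.0, s))

    h = [noise()]
    for _ in range(num_steps - 1):
        h.append(rho * h[-1] + math.sqrt(1.0 - rho ** 2) * noise())
    return h


def nmse(true, pred):
    """Normalized mean squared error between two equal-length sequences."""
    num = sum(abs(t - p) ** 2 for t, p in zip(true, pred))
    den = sum(abs(t) ** 2 for t in true)
    return num / den


def sample_and_hold_nmse(channel, delay):
    """NMSE when the channel observed `delay` steps ago is reused as-is."""
    true = channel[delay:]
    held = channel[:len(channel) - delay]
    return nmse(true, held)


if __name__ == "__main__":
    channel = simulate_channel(num_steps=5000, rho=0.99)
    for delay in (1, 5, 10):
        print(delay, sample_and_hold_nmse(channel, delay))
```

In this toy setting the hold error grows as the delay increases, which is the degradation a learned predictor is meant to reduce.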

PolarDenseNet: A Deep Learning Model for CSI Feedback in MIMO Systems

Feb 02, 2022

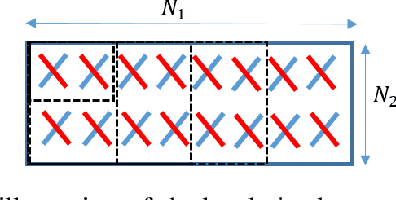

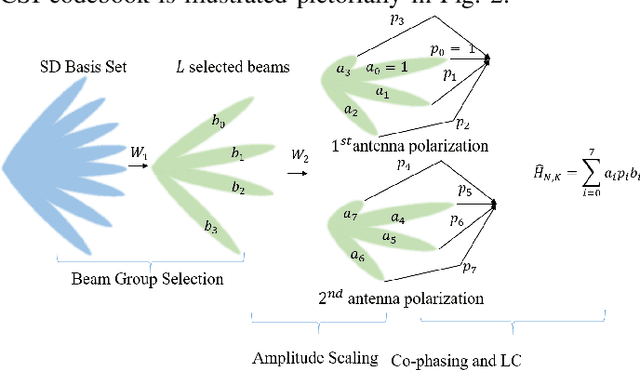

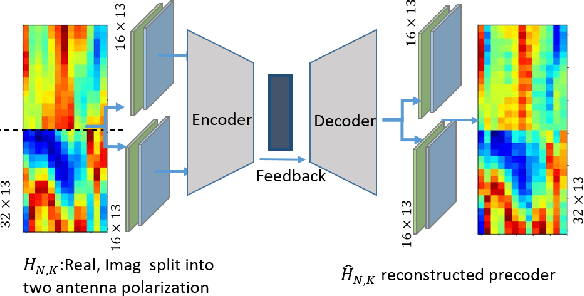

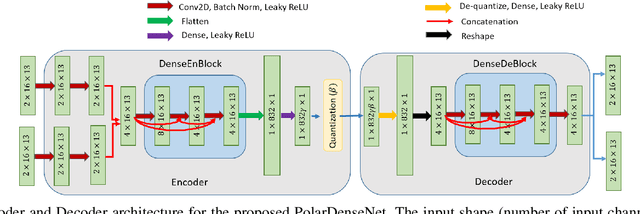

Abstract: In multiple-input multiple-output (MIMO) systems, high-resolution channel state information (CSI) is required at the base station (BS) to ensure optimal performance, especially in the case of multi-user MIMO (MU-MIMO) systems. In the absence of channel reciprocity in frequency division duplex (FDD) systems, the user equipment (UE) needs to send the CSI to the BS. Often the large overhead associated with this CSI feedback in FDD systems becomes the bottleneck in improving the system performance. In this paper, we propose an AI-based CSI feedback scheme based on an auto-encoder architecture that encodes the CSI at the UE into a low-dimensional latent space and decodes it back at the BS, thereby effectively reducing the feedback overhead while minimizing the loss during recovery. Our simulation results show that the proposed AI-based architecture outperforms the state-of-the-art high-resolution linear combination codebook using the DFT basis adopted in the 5G New Radio (NR) system.
