Shashank Jere

Charlie

Towards xAI: Configuring RNN Weights using Domain Knowledge for MIMO Receive Processing

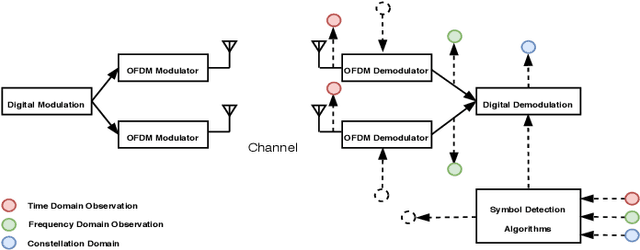

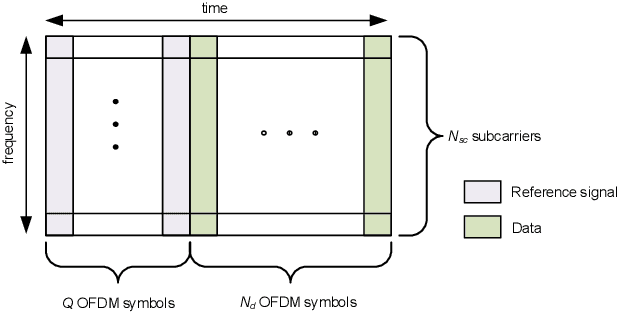

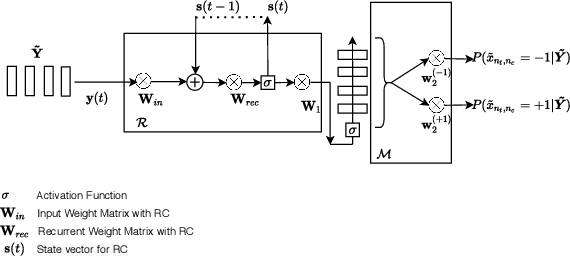

Oct 09, 2024

Abstract:Deep learning is making a profound impact in the physical layer of wireless communications. Despite exhibiting outstanding empirical performance in tasks such as MIMO receive processing, the reasons behind the demonstrated superior performance improvement remain largely unclear. In this work, we advance the field of Explainable AI (xAI) in the physical layer of wireless communications utilizing signal processing principles. Specifically, we focus on the task of MIMO-OFDM receive processing (e.g., symbol detection) using reservoir computing (RC), a framework within recurrent neural networks (RNNs), which outperforms both conventional and other learning-based MIMO detectors. Our analysis provides a signal processing-based, first-principles understanding of the corresponding operation of the RC. Building on this fundamental understanding, we are able to systematically incorporate the domain knowledge of wireless systems (e.g., channel statistics) into the design of the underlying RNN by directly configuring the untrained RNN weights for MIMO-OFDM symbol detection. The introduced RNN weight configuration has been validated through extensive simulations demonstrating significant performance improvements. This establishes a foundation for explainable RC-based architectures in MIMO-OFDM receive processing and provides a roadmap for incorporating domain knowledge into the design of neural networks for NextG systems.

Learning at the Speed of Wireless: Online Real-Time Learning for AI-Enabled MIMO in NextG

Mar 05, 2024

Abstract:Integration of artificial intelligence (AI) and machine learning (ML) into the air interface has been envisioned as a key technology for next-generation (NextG) cellular networks. At the air interface, multiple-input multiple-output (MIMO) and its variants such as multi-user MIMO (MU-MIMO) and massive/full-dimension MIMO have been key enablers across successive generations of cellular networks with evolving complexity and design challenges. Initiating active investigation into leveraging AI/ML tools to address these challenges for MIMO becomes a critical step towards an AI-enabled NextG air interface. At the NextG air interface, the underlying wireless environment will be extremely dynamic with operation adaptations performed on a sub-millisecond basis by MIMO operations such as MU-MIMO scheduling and rank/link adaptation. Given the enormously large number of operation adaptation possibilities, we contend that online real-time AI/ML-based approaches constitute a promising paradigm. To this end, we outline the inherent challenges and offer insights into the design of such online real-time AI/ML-based solutions for MIMO operations. An online real-time AI/ML-based method for MIMO-OFDM channel estimation is then presented, serving as a potential roadmap for developing similar techniques across various MIMO operations in NextG.

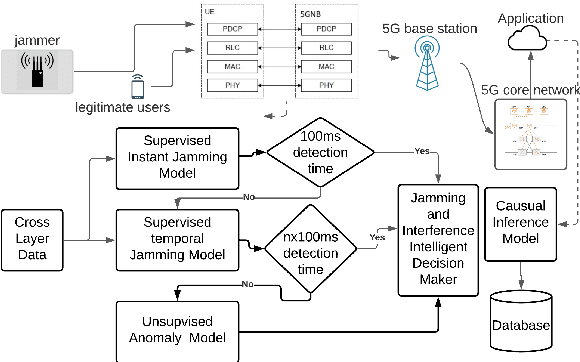

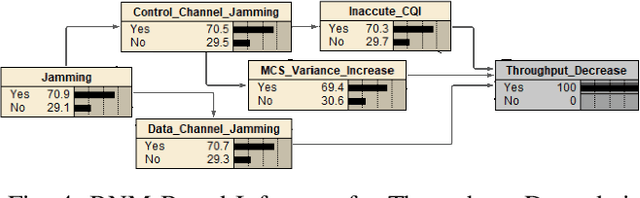

Anonymous Jamming Detection in 5G with Bayesian Network Model Based Inference Analysis

Nov 28, 2023

Abstract:Jamming and intrusion detection are critical in 5G research, aiming to maintain reliability, prevent user experience degradation, and avoid infrastructure failure. This paper introduces an anonymous jamming detection model for 5G based on signal parameters from the protocol stacks. The system uses supervised and unsupervised learning for real-time, high-accuracy detection of jamming, including unknown types. Supervised models reach an AUC of 0.964 to 1, compared to LSTM models with an AUC of 0.923 to 1. However, the need for data annotation limits the supervised approach. To address this, an unsupervised auto-encoder-based anomaly detection is presented with an AUC of 0.987. The approach is resistant to adversarial training samples. For transparency and domain knowledge injection, a Bayesian network-based causation analysis is introduced.
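The AUC figures quoted above can be read as the probability that a randomly chosen jamming sample receives a higher detector score than a randomly chosen benign sample. A minimal sketch of that pairwise definition follows; the function name `auc`, the labels, and the scores are made-up illustrations and are not data or code from the paper.

```python
def auc(labels, scores):
    """Area under the ROC curve via pairwise comparison.

    labels: 1 for a positive (e.g. jamming) sample, 0 for a negative one.
    scores: detector outputs, higher meaning "more likely positive".
    Ties between a positive and a negative score count as half a win.
    """
    pos = [s for l, s in zip(labels, scores) if l == 1]
    neg = [s for l, s in zip(labels, scores) if l == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative sample")
    wins = sum(
        1.0 if p > n else 0.5 if p == n else 0.0
        for p in pos
        for n in neg
    )
    return wins / (len(pos) * len(neg))


# Hypothetical detector scores for four samples (illustration only).
example_auc = auc([0, 0, 1, 1], [0.1, 0.4, 0.35, 0.8])
```

An AUC of 1 means every positive sample outranks every negative one, while 0.5 corresponds to a detector that ranks at random.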

Towards Explainable Machine Learning: The Effectiveness of Reservoir Computing in Wireless Receive Processing

Oct 08, 2023

Abstract:Deep learning has seen a rapid adoption in a variety of wireless communications applications, including at the physical layer. While it has delivered impressive performance in tasks such as channel equalization and receive processing/symbol detection, it leaves much to be desired when it comes to explaining this superior performance. In this work, we investigate the specific task of channel equalization by applying a popular learning-based technique known as Reservoir Computing (RC), which has shown superior performance compared to conventional methods and other learning-based approaches. Specifically, we apply the echo state network (ESN) as a channel equalizer and provide a first principles-based signal processing understanding of its operation. With this groundwork, we incorporate the available domain knowledge in the form of the statistics of the wireless channel directly into the weights of the ESN model. This paves the way for optimized initialization of the ESN model weights, which are traditionally untrained and randomly initialized. Finally, we show the improvement in receive processing/symbol detection performance with this optimized initialization through simulations. This is a first step towards explainable machine learning (XML) and assigning practical model interpretability that can be utilized together with the available domain knowledge to improve performance and enhance detection reliability.

Universal Approximation of Linear Time-Invariant (LTI) Systems through RNNs: Power of Randomness in Reservoir Computing

Aug 04, 2023

Abstract:Recurrent neural networks (RNNs) are known to be universal approximators of dynamic systems under fairly mild and general assumptions, making them good tools to process temporal information. However, RNNs usually suffer from the issues of vanishing and exploding gradients in the standard RNN training. Reservoir computing (RC), a special RNN where the recurrent weights are randomized and left untrained, has been introduced to overcome these issues and has demonstrated superior empirical performance in fields as diverse as natural language processing and wireless communications, especially in scenarios where training samples are extremely limited. In contrast, the theoretical grounding to support this observed performance has not been developed at the same pace. In this work, we show that RNNs can provide universal approximation of linear time-invariant (LTI) systems. Specifically, we show that RC can universally approximate a general LTI system. We present a clear signal processing interpretation of RC and utilize this understanding in the problem of simulating a generic LTI system through RC. Under this setup, we analytically characterize the optimal probability distribution function for generating the recurrent weights of the underlying RNN of the RC. We provide extensive numerical evaluations to validate the optimality of the derived distribution of the recurrent weights of the RC for the LTI system simulation problem. Our work results in clear signal processing-based model interpretability of RC and provides a theoretical explanation for the power of randomness in setting, instead of training, RC's recurrent weights. It further provides a complete analytical characterization of the optimum untrained recurrent weights, marking an important step towards explainable machine learning (XML), which is extremely important for applications where training samples are limited.
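To make the idea of "setting instead of training" the recurrent weights concrete, the following is a minimal sketch, not the paper's construction. It assumes a linear reservoir with a diagonal recurrent matrix, so each neuron acts as a first-order IIR filter with its own pole. The poles are drawn uniformly at random (the paper derives an optimal distribution, which is not reproduced here), and only the linear readout is fitted by ridge-regularized least squares. The target system, reservoir size, ridge value, and all function names are illustrative assumptions.

```python
import random


def run_reservoir(poles, x):
    """Linear reservoir: neuron i follows s_i[t] = poles[i] * s_i[t-1] + x[t]."""
    state = [0.0] * len(poles)
    history = []
    for sample in x:
        state = [a * s + sample for a, s in zip(poles, state)]
        history.append(state)
    return history


def solve(A, b):
    """Solve A w = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [row[:] + [rhs] for row, rhs in zip(A, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            f = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= f * M[col][c]
    w = [0.0] * n
    for i in reversed(range(n)):
        tail = sum(M[i][j] * w[j] for j in range(i + 1, n))
        w[i] = (M[i][n] - tail) / M[i][i]
    return w


def train_readout(states, target, ridge=1e-3):
    """Fit only the output weights via the ridge-regularized normal equations."""
    n = len(states[0])
    A = [[sum(s[i] * s[j] for s in states) + (ridge if i == j else 0.0)
          for j in range(n)] for i in range(n)]
    b = [sum(s[i] * y for s, y in zip(states, target)) for i in range(n)]
    return solve(A, b)


def lti_target(x, pole=0.5):
    """Assumed target LTI system: y[t] = pole * y[t-1] + x[t]."""
    y, prev = [], 0.0
    for sample in x:
        prev = pole * prev + sample
        y.append(prev)
    return y


rng = random.Random(0)
poles = [rng.uniform(-0.9, 0.9) for _ in range(6)]   # random, never trained
x = [rng.gauss(0.0, 1.0) for _ in range(400)]        # white-noise input
y = lti_target(x)
states = run_reservoir(poles, x)
w = train_readout(states, y)                          # the only learned part
pred = [sum(wi * si for wi, si in zip(w, s)) for s in states]
# Normalized mean squared error of the readout on the training sequence.
nmse = sum((p - t) ** 2 for p, t in zip(pred, y)) / sum(t ** 2 for t in y)
```

Because the reservoir is never trained, the fit reduces to one linear solve, which is why this family of models avoids the vanishing and exploding gradient issues mentioned in the abstract.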

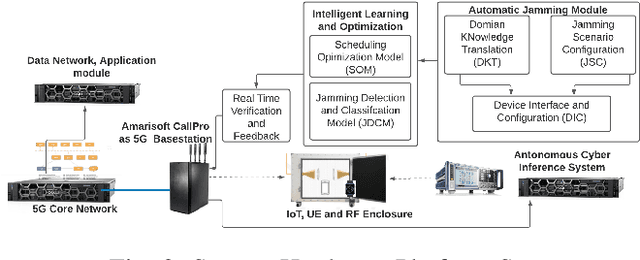

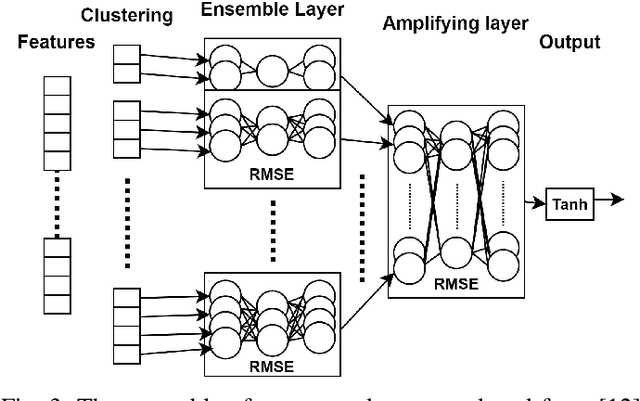

Machine Learning-assisted Bayesian Inference for Jamming Detection in 5G NR

Apr 26, 2023

Abstract:The increased flexibility and density of spectrum access in 5G NR have made jamming detection a critical research area. To detect coexisting jamming and subtle interference that can affect legitimate communications performance, we introduce machine learning (ML)-assisted Bayesian Inference for jamming detection methodologies. Our methodology applies supervised learning models to cross-layer critical signaling data collected on a 5G NR Non-Standalone (NSA) testbed; the models are further assessed, calibrated, and revealed using Bayesian Network Model (BNM)-based inference. The models can operate on both instantaneous and sequential time-series data samples, achieving an Area under Curve (AUC) in the range of 0.947 to 1 for instantaneous models and 0.933 to 1 for sequential models, including the echo state network (ESN) from the reservoir computing (RC) family, for jamming scenarios spanning multiple frequency bands and power levels. Our approach not only serves as a validation method and a resilience enhancement tool for ML-based jamming detection, but also enables root cause identification for any observed performance degradation. Our proof-of-concept is successful in addressing 72.2% of the erroneous predictions in sequential models caused by insufficient data samples collected in the observation period, demonstrating its applicability in 5G NR and Beyond-5G (B5G) network infrastructure and user devices.

Federated Dynamic Spectrum Access

Jun 28, 2021

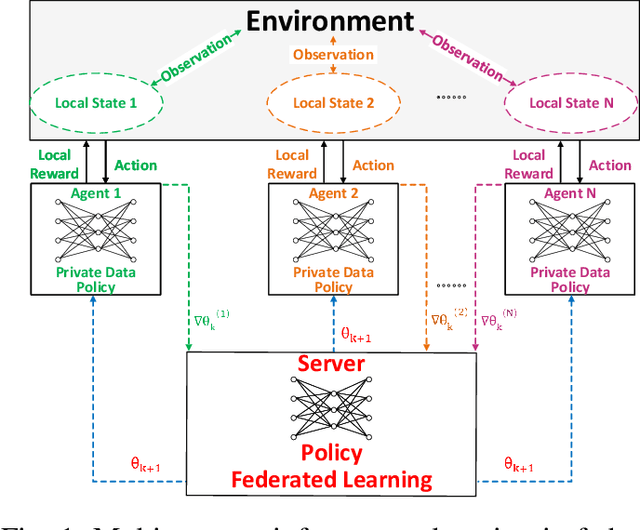

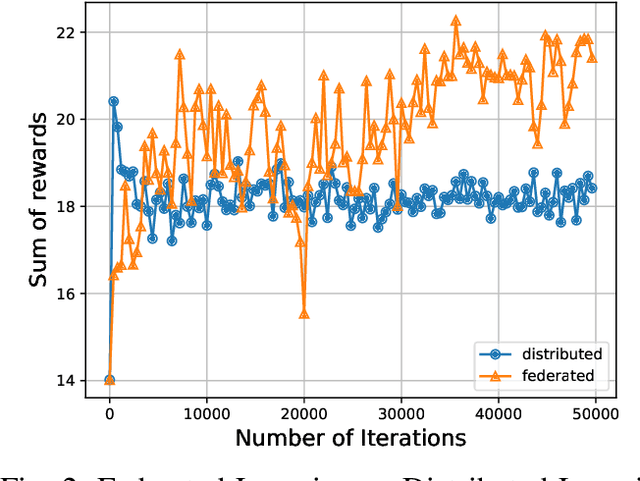

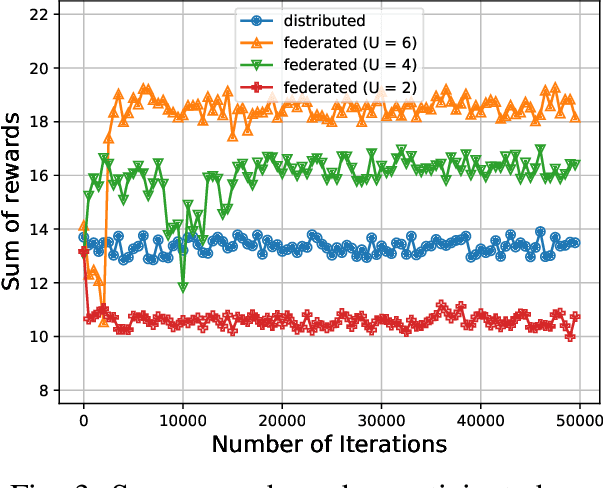

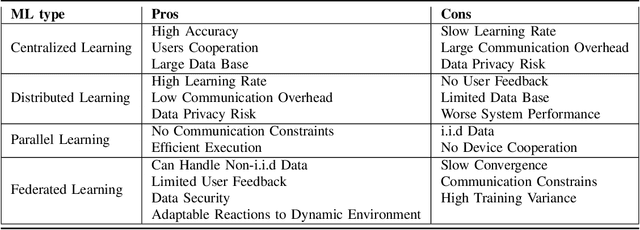

Abstract:Due to the growing volume of data traffic produced by the surge of Internet of Things (IoT) devices, the demand for radio spectrum resources is approaching the limits defined by the Federal Communications Commission (FCC). To this end, Dynamic Spectrum Access (DSA) is considered a promising technology to handle this spectrum scarcity. However, standard DSA techniques often rely on analytical modeling of wireless networks, making their application intractable in under-measured network environments. Therefore, utilizing neural networks to approximate the network dynamics is an alternative approach. In this article, we introduce a Federated Learning (FL)-based framework for the task of DSA, where FL is a distributed machine learning framework that can preserve the privacy of network terminals under heterogeneous data distributions. We discuss the opportunities, challenges, and open problems of this framework. To evaluate its feasibility, we implement a Multi-Agent Reinforcement Learning (MARL)-based FL as a realization, together with its initial evaluation results.

Learning with Knowledge of Structure: A Neural Network-Based Approach for MIMO-OFDM Detection

Dec 03, 2020

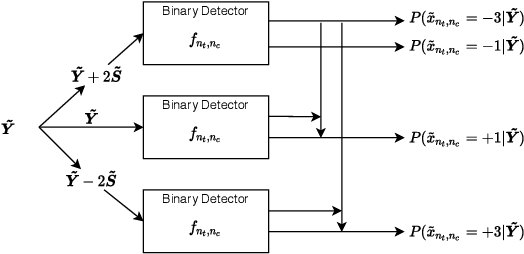

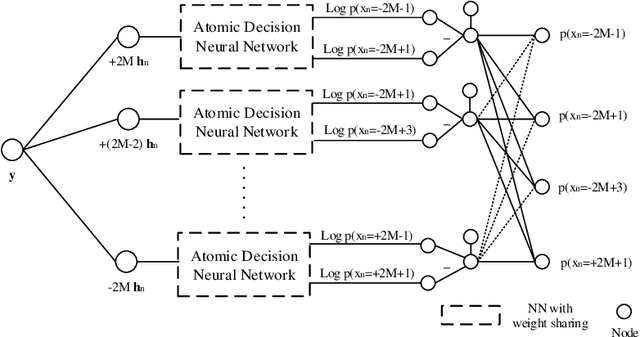

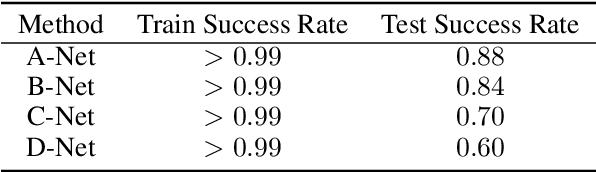

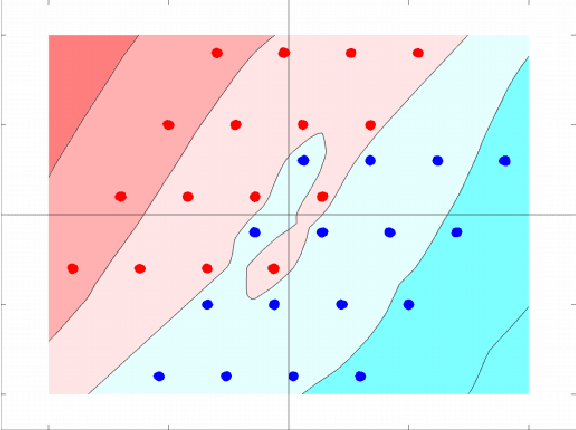

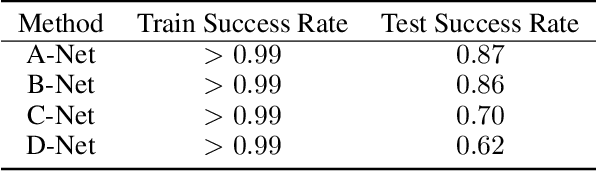

Abstract:In this paper, we explore neural network-based strategies for performing symbol detection in a MIMO-OFDM system. Building on a reservoir computing (RC)-based approach towards symbol detection, we introduce a symmetric and decomposed binary decision neural network to take advantage of the structure knowledge inherent in the MIMO-OFDM system. To be specific, the binary decision neural network is added in the frequency domain utilizing the knowledge of the constellation. We show that the introduced symmetric neural network can decompose the original $M$-ary detection problem into a series of binary classification tasks, thus significantly reducing the neural network detector complexity while offering good generalization performance with limited training overhead. Numerical evaluations demonstrate that the introduced hybrid RC-binary decision detection framework performs close to maximum likelihood model-based symbol detection methods in terms of symbol error rate in the low SNR regime with imperfect channel state information (CSI).
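The decomposition of an $M$-ary decision into binary ones can be illustrated with a small sketch. It assumes a 16-QAM constellation with per-axis levels -3, -1, 1, 3 and replaces the trained binary decision networks with fixed thresholds, so it shows only the structure of the decomposition, not the paper's detector. The function names `detect_pam4` and `detect_qam16` are hypothetical.

```python
def detect_pam4(value):
    """Detect one axis of 16-QAM (levels -3, -1, 1, 3) with two binary decisions.

    Decision 1 uses the symmetry of the constellation: is the value non-negative?
    Decision 2 works on the magnitude: is it in the outer ring (|value| >= 2)?
    """
    sign = 1 if value >= 0 else -1          # binary decision 1
    outer = 1 if abs(value) >= 2 else 0     # binary decision 2
    return sign * (1 + 2 * outer)


def detect_qam16(sample):
    """Detect a 16-QAM symbol by treating the real and imaginary axes independently.

    The 16-way decision becomes four binary decisions (two per axis).
    """
    return complex(detect_pam4(sample.real), detect_pam4(sample.imag))


# A noisy received sample (illustrative value) near the point 1 - 3j.
detected = detect_qam16(complex(0.7, -2.4))
```

In this toy version, four binary decisions replace a single 16-way classifier, and the sign decision is shared by positive and negative halves of the constellation, which is the kind of symmetry the abstract refers to.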

Learning for Integer-Constrained Optimization through Neural Networks with Limited Training

Nov 10, 2020

Abstract:In this paper, we investigate a neural network-based learning approach towards solving an integer-constrained programming problem using very limited training. To be specific, we introduce a symmetric and decomposed neural network structure, which is fully interpretable in terms of the functionality of its constituent components. By taking advantage of the underlying pattern of the integer constraint, as well as of the affine nature of the objective function, the introduced neural network offers superior generalization performance with limited training, as compared to other generic neural network structures that do not exploit the inherent structure of the integer constraint. In addition, we show that the introduced decomposed approach can be further extended to semi-decomposed frameworks. The introduced learning approach is evaluated via the classification/symbol detection task in the context of wireless communication systems where available training sets are usually limited. Evaluation results demonstrate that the introduced learning strategy is able to effectively perform the classification/symbol detection task in a wide variety of wireless channel environments specified by the 3GPP community.

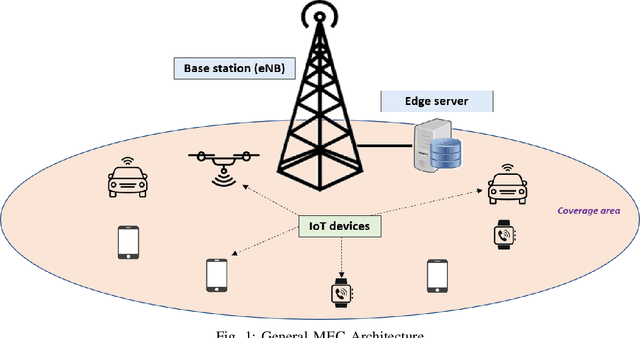

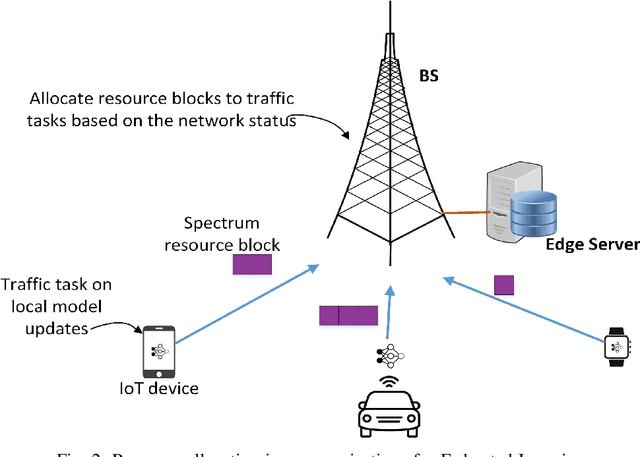

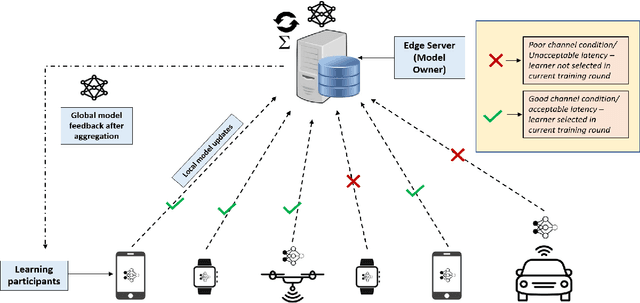

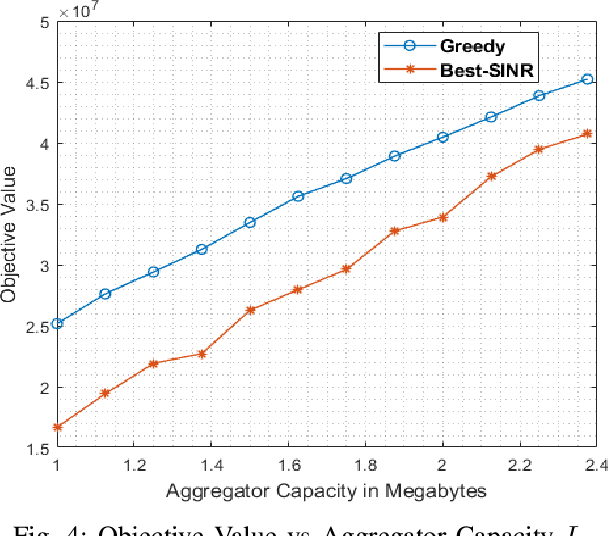

Federated Learning in Mobile Edge Computing: An Edge-Learning Perspective for Beyond 5G

Jul 15, 2020

Abstract:Owing to the large volume of sensed data from the enormous number of IoT devices in operation today, centralized machine learning algorithms operating on such data incur an unbearable training time, and thus cannot satisfy the requirements of delay-sensitive inference applications. By provisioning computing resources at the network edge, Mobile Edge Computing (MEC) has become a promising technology capable of collaborating with distributed IoT devices to facilitate federated learning, and thus realize real-time training. However, considering the large volume of sensed data and the limited resources of both edge servers and IoT devices, it is challenging to ensure the training efficiency and accuracy of delay-sensitive training tasks. Thus, in this paper, we design a novel edge computing-assisted federated learning framework, in which the communication constraints between IoT devices and edge servers and the effect of various IoT devices on the training accuracy are taken into account. On one hand, we employ machine learning methods to dynamically configure the communication resources in real-time to accelerate the interactions between IoT devices and edge servers, thus improving the training efficiency of federated learning. On the other hand, as various IoT devices have different training datasets which have varying influence on the accuracy of the global model derived at the edge server, an IoT device selection scheme is designed to improve the training accuracy under the resource constraints at edge servers. Extensive simulations have been conducted to demonstrate the performance of the introduced edge computing-assisted federated learning framework.
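As a rough illustration of how an edge server might combine updates from selected IoT devices, the sketch below uses federated averaging (FedAvg), a standard aggregation rule in which each device's parameters are weighted by its local dataset size. The abstract does not state which aggregation rule the framework uses, so this is an assumption; the function name `fed_avg` and the numbers are hypothetical.

```python
def fed_avg(client_weights, client_sizes):
    """Aggregate client model parameters, weighting each client by its data size.

    client_weights: list of parameter vectors, one per selected device.
    client_sizes:   number of local training samples on each device.
    """
    if len(client_weights) != len(client_sizes):
        raise ValueError("one size is required per client")
    total = sum(client_sizes)
    dim = len(client_weights[0])
    return [
        sum(w[k] * n for w, n in zip(client_weights, client_sizes)) / total
        for k in range(dim)
    ]


# Two hypothetical devices: the second holds three times as much data,
# so the global model is pulled towards its parameters.
global_model = fed_avg([[1.0, 2.0], [3.0, 4.0]], [1, 3])
```

Under this rule, which devices are selected directly shapes the global model, which is consistent with the abstract's point that device selection affects training accuracy.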
