Yuyao Wang

AlgBench: To What Extent Do Large Reasoning Models Understand Algorithms?

Jan 08, 2026

Abstract:Reasoning ability has become a central focus in the advancement of Large Reasoning Models (LRMs). Although notable progress has been achieved on several reasoning benchmarks such as MATH500 and LiveCodeBench, existing benchmarks for algorithmic reasoning remain limited, failing to answer a critical question: Do LRMs truly master algorithmic reasoning? To answer this question, we propose AlgBench, an expert-curated benchmark that evaluates LRMs under an algorithm-centric paradigm. AlgBench consists of over 3,000 original problems spanning 27 algorithms, constructed by ACM algorithmic experts and organized under a comprehensive taxonomy, including Euclidean-structured, non-Euclidean-structured, non-optimized, local-optimized, global-optimized, and heuristic-optimized categories. Empirical evaluations on leading LRMs (e.g., Gemini-3-Pro, DeepSeek-v3.2-Speciale and GPT-o3) reveal substantial performance heterogeneity: while models perform well on non-optimized tasks (up to 92%), accuracy drops sharply to around 49% on globally optimized algorithms such as dynamic programming. Further analysis uncovers strategic over-shifts, wherein models prematurely abandon correct algorithmic designs due to necessary low-entropy tokens. These findings expose fundamental limitations of problem-centric reinforcement learning and highlight the necessity of an algorithm-centric training paradigm for robust algorithmic reasoning.

History-Aware Transformation of ReID Features for Multiple Object Tracking

Mar 16, 2025

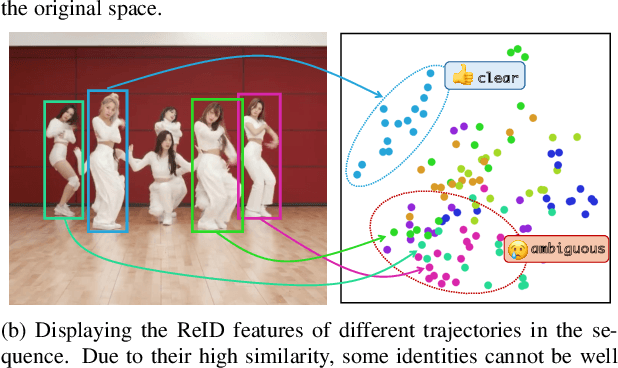

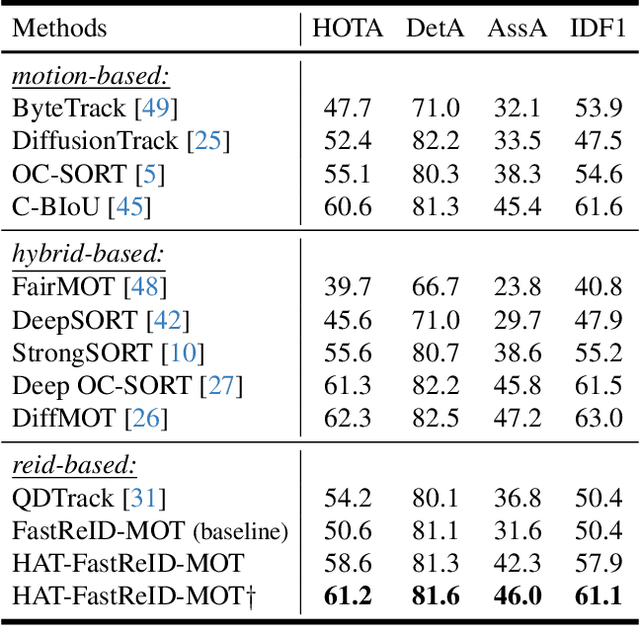

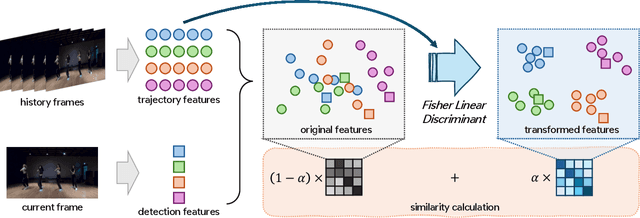

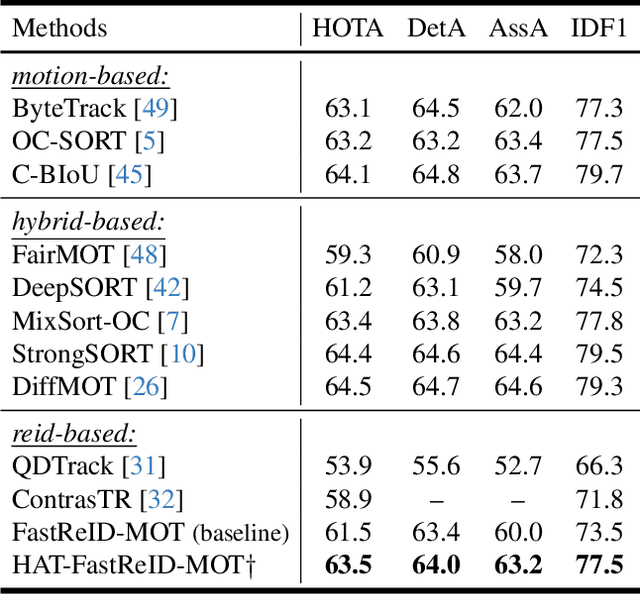

Abstract:The aim of multiple object tracking (MOT) is to detect all objects in a video and bind them into multiple trajectories. Generally, this process is carried out in two steps: detecting objects and associating them across frames based on various cues and metrics. Many studies and applications adopt object appearance, also known as re-identification (ReID) features, for target matching through straightforward similarity calculation. However, we argue that this practice is overly naive and thus overlooks the unique characteristics of MOT tasks. Unlike regular re-identification tasks that strive to distinguish all potential targets in a general representation, multi-object tracking typically immerses itself in differentiating similar targets within the same video sequence. Therefore, we believe that seeking a more suitable feature representation space based on the different sample distributions of each sequence will enhance tracking performance. In this paper, we propose using history-aware transformations on ReID features to achieve more discriminative appearance representations. Specifically, we treat historical trajectory features as conditions and employ a tailored Fisher Linear Discriminant (FLD) to find a spatial projection matrix that maximizes the differentiation between different trajectories. Our extensive experiments reveal that this training-free projection can significantly boost feature-only trackers to achieve competitive, even superior tracking performance compared to state-of-the-art methods while also demonstrating impressive zero-shot transfer capabilities. This demonstrates the effectiveness of our proposal and further encourages future investigation into the importance and customization of ReID models in multiple object tracking. The code will be released at https://github.com/HELLORPG/HATReID-MOT.
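The projection idea in this abstract can be illustrated with a classical two-class Fisher Linear Discriminant. The sketch below is a simplification under stated assumptions, not the paper's tailored FLD: it uses only two trajectories and 2-D toy features, and every name in it (`fld_direction`, `track_a`, `track_b`, the `ridge` term) is hypothetical. The first feature dimension varies widely within each trajectory (nuisance variation), while the second separates the two trajectories.

```python
import math

def mean2(points):
    """Mean of a list of 2-D points."""
    n = len(points)
    return (sum(p[0] for p in points) / n, sum(p[1] for p in points) / n)

def scatter2(points, m):
    """Return (sxx, sxy, syy) of the 2x2 scatter matrix around mean m."""
    sxx = sxy = syy = 0.0
    for x, y in points:
        dx, dy = x - m[0], y - m[1]
        sxx += dx * dx
        sxy += dx * dy
        syy += dy * dy
    return sxx, sxy, syy

def fld_direction(track_a, track_b, ridge=1e-6):
    """Classical two-class FLD: w is proportional to S_w^{-1} (m_a - m_b).

    The ridge term (an assumption of this sketch) keeps S_w invertible.
    """
    ma, mb = mean2(track_a), mean2(track_b)
    axx, axy, ayy = scatter2(track_a, ma)
    bxx, bxy, byy = scatter2(track_b, mb)
    sxx, sxy, syy = axx + bxx + ridge, axy + bxy, ayy + byy + ridge
    det = sxx * syy - sxy * sxy
    dx, dy = ma[0] - mb[0], ma[1] - mb[1]
    # Apply the closed-form inverse of the 2x2 within-class scatter matrix.
    wx = (syy * dx - sxy * dy) / det
    wy = (-sxy * dx + sxx * dy) / det
    norm = math.hypot(wx, wy)
    return (wx / norm, wy / norm)

def project(point, w):
    """Project a 2-D feature onto the discriminant direction w."""
    return point[0] * w[0] + point[1] * w[1]

# Toy historical features for two trajectories (hypothetical data).
track_a = [(0.0, 1.0), (2.0, 1.1), (4.0, 0.9), (6.0, 1.0)]
track_b = [(0.0, 0.0), (2.0, 0.1), (4.0, -0.1), (6.0, 0.0)]

w = fld_direction(track_a, track_b)
proj_a = [project(p, w) for p in track_a]
proj_b = [project(p, w) for p in track_b]
```

In the raw feature space, the first and last features of `track_a` are farther apart than `track_a[0]` and `track_b[0]`, so a plain distance comparison can confuse the two identities. After projection onto `w`, the two trajectories no longer overlap. The released code at the URL above is the reference for how the paper actually builds its projection.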

Where to Mask: Structure-Guided Masking for Graph Masked Autoencoders

Apr 24, 2024

Abstract:Graph masked autoencoders (GMAE) have emerged as a significant advancement in self-supervised pre-training for graph-structured data. Previous GMAE models primarily utilize a straightforward random masking strategy for nodes or edges during training. However, this strategy fails to consider the varying significance of different nodes within the graph structure. In this paper, we investigate the potential of leveraging the graph's structural composition as a fundamental and unique prior in the masked pre-training process. To this end, we introduce a novel structure-guided masking strategy (i.e., StructMAE), designed to refine the existing GMAE models. StructMAE involves two steps: 1) Structure-based Scoring: Each node is evaluated and assigned a score reflecting its structural significance. Two distinct types of scoring manners are proposed: predefined and learnable scoring. 2) Structure-guided Masking: With the obtained assessment scores, we develop an easy-to-hard masking strategy that gradually increases the structural awareness of the self-supervised reconstruction task. Specifically, the strategy begins with random masking and progresses to masking structure-informative nodes based on the assessment scores. This design gradually and effectively guides the model in learning graph structural information. Furthermore, extensive experiments consistently demonstrate that our StructMAE method outperforms existing state-of-the-art GMAE models in both unsupervised and transfer learning tasks. Codes are available at https://github.com/LiuChuang0059/StructMAE.
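The easy-to-hard schedule described in this abstract can be sketched as a blend between random noise and a structural score. This is a minimal illustration under assumptions, not the StructMAE implementation: node degree stands in for the predefined score, the linear blend controlled by `progress` is an assumed schedule, and all function names are hypothetical.

```python
import random

def degree_scores(num_nodes, edges):
    """Assumed predefined score: node degree, normalized to [0, 1]."""
    deg = [0] * num_nodes
    for u, v in edges:
        deg[u] += 1
        deg[v] += 1
    top = max(deg) or 1
    return [d / top for d in deg]

def select_masked_nodes(scores, mask_ratio, progress, rng):
    """Pick nodes to mask.

    progress = 0.0 gives purely random masking; progress = 1.0 masks the
    highest-scoring (most structure-informative) nodes.
    """
    k = max(1, round(mask_ratio * len(scores)))
    keys = [(1.0 - progress) * rng.random() + progress * s for s in scores]
    ranked = sorted(range(len(scores)), key=lambda i: keys[i], reverse=True)
    return sorted(ranked[:k])

# Star graph: node 0 is the hub, nodes 1..4 are leaves (hypothetical data).
edges = [(0, 1), (0, 2), (0, 3), (0, 4)]
scores = degree_scores(5, edges)
rng = random.Random(0)

total_epochs = 5
schedule = []
for epoch in range(total_epochs):
    progress = epoch / (total_epochs - 1)  # ramps linearly from 0 to 1
    schedule.append(select_masked_nodes(scores, 0.4, progress, rng))
```

Early epochs mask arbitrary nodes, while the final epoch always includes the hub, which makes the reconstruction task depend more heavily on structural context. The learnable scoring variant mentioned in the abstract is not covered here.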

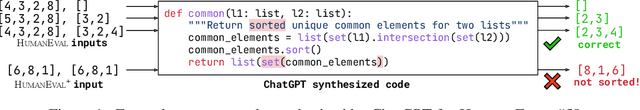

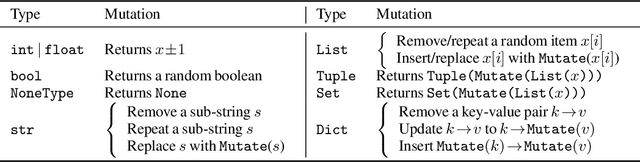

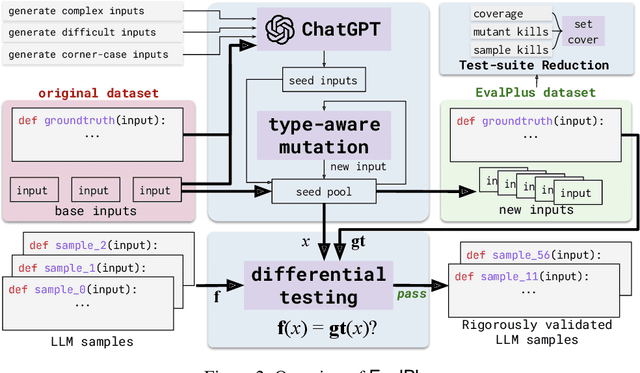

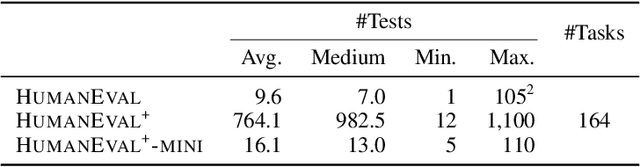

Is Your Code Generated by ChatGPT Really Correct? Rigorous Evaluation of Large Language Models for Code Generation

May 02, 2023

Abstract:Program synthesis has been long studied with recent approaches focused on directly using the power of Large Language Models (LLMs) to generate code according to user intent written in natural language. Code evaluation datasets, containing curated synthesis problems with input/output test-cases, are used to measure the performance of various LLMs on code synthesis. However, test-cases in these datasets can be limited in both quantity and quality for fully assessing the functional correctness of the generated code. Such limitation in the existing benchmarks begs the following question: In the era of LLMs, is the code generated really correct? To answer this, we propose EvalPlus -- a code synthesis benchmarking framework to rigorously evaluate the functional correctness of LLM-synthesized code. In short, EvalPlus takes in the base evaluation dataset and uses an automatic input generation step to produce and diversify large amounts of new test inputs using both LLM-based and mutation-based input generators to further validate the synthesized code. We extend the popular HUMANEVAL benchmark and build HUMANEVAL+ with 81x additionally generated tests. Our extensive evaluation across 14 popular LLMs demonstrates that HUMANEVAL+ is able to catch significant amounts of previously undetected wrong code synthesized by LLMs, reducing the pass@k by 15.1% on average! Moreover, we even found several incorrect ground-truth implementations in HUMANEVAL. Our work not only indicates that prior popular code synthesis evaluation results do not accurately reflect the true performance of LLMs for code synthesis but also opens up a new direction to improve programming benchmarks through automated test input generation.
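The mutation-based half of the input generation step can be illustrated on a toy problem. The sketch below is not EvalPlus code and does not use its API: the task, the `reference` and `candidate` functions, and the three mutation operators are all hypothetical, chosen so that a buggy solution passes the base tests and fails only on mutated inputs.

```python
def reference(xs):
    """Ground-truth solution: distinct values in ascending order."""
    return sorted(set(xs))

def candidate(xs):
    """Hypothetical buggy candidate: sorts by magnitude, so it is only
    correct when no negative values are present."""
    return sorted(set(xs), key=abs)

def mutate(xs):
    """Yield every input reachable by one small, type-preserving edit."""
    for i, x in enumerate(xs):
        yield xs[:i] + [-x] + xs[i + 1:]    # flip the sign of one element
        yield xs[:i] + [x, x] + xs[i + 1:]  # duplicate one element
        yield xs[:i] + xs[i + 1:]           # drop one element

def augment(seeds, rounds=2):
    """Grow the seed inputs by repeated mutation, deduplicating as we go."""
    pool = {tuple(s) for s in seeds}
    frontier = list(pool)
    for _ in range(rounds):
        new = set()
        for s in frontier:
            for m in mutate(list(s)):
                t = tuple(m)
                if t not in pool:
                    new.add(t)
        pool |= new
        frontier = list(new)
    return [list(t) for t in sorted(pool)]

base_tests = [[3, 1, 2], [5, 5, 4]]
extra_tests = augment(base_tests)

# Differential testing: compare the candidate against the ground truth.
passes_base = all(candidate(t) == reference(t) for t in base_tests)
failing = [t for t in extra_tests if candidate(t) != reference(t)]
```

Here `passes_base` is true, yet `failing` is non-empty because sign-flipped inputs such as `[-3, 1, 2]` expose the bug. This mirrors, at toy scale, how additional generated tests can lower a measured pass rate.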

NeuRI: Diversifying DNN Generation via Inductive Rule Inference

Feb 04, 2023

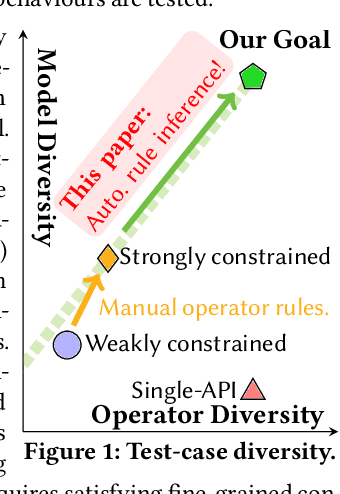

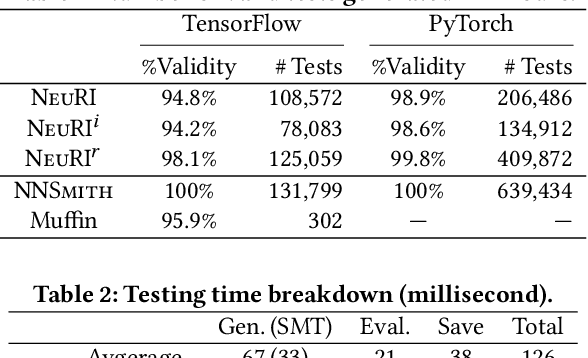

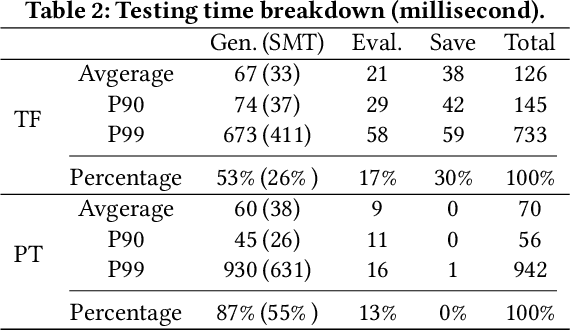

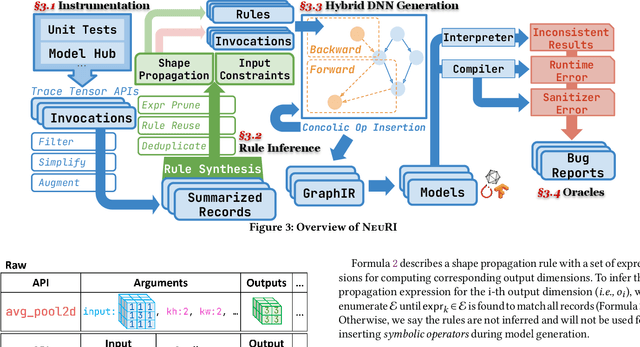

Abstract:Deep Learning (DL) is prevalently used in various industries to improve decision-making and automate processes, driven by the ever-evolving DL libraries and compilers. The correctness of DL systems is crucial for trust in DL applications. As such, the recent wave of research has been studying the automated synthesis of test-cases (i.e., DNN models and their inputs) for fuzzing DL systems. However, existing model generators only subsume a limited number of operators, for lacking the ability to pervasively model operator constraints. To address this challenge, we propose NeuRI, a fully automated approach for generating valid and diverse DL models composed of hundreds of types of operators. NeuRI adopts a three-step process: (i) collecting valid and invalid API traces from various sources; (ii) applying inductive program synthesis over the traces to infer the constraints for constructing valid models; and (iii) performing hybrid model generation by incorporating both symbolic and concrete operators concolically. Our evaluation shows that NeuRI improves branch coverage of TensorFlow and PyTorch by 51% and 15% over the state-of-the-art. Within four months, NeuRI finds 87 new bugs for PyTorch and TensorFlow, with 64 already fixed or confirmed, and 8 high-priority bugs labeled by PyTorch, constituting 10% of all high-priority bugs of the period. Additionally, open-source developers regard error-inducing models reported by us as "high-quality" and "common in practice".
