Yuyang Chen

STEP3-VL-10B Technical Report

Jan 15, 2026

Abstract:We present STEP3-VL-10B, a lightweight open-source foundation model designed to redefine the trade-off between compact efficiency and frontier-level multimodal intelligence. STEP3-VL-10B is realized through two strategic shifts: first, a unified, fully unfrozen pre-training strategy on 1.2T multimodal tokens that integrates a language-aligned Perception Encoder with a Qwen3-8B decoder to establish intrinsic vision-language synergy; and second, a scaled post-training pipeline featuring over 1k iterations of reinforcement learning. Crucially, we implement Parallel Coordinated Reasoning (PaCoRe) to scale test-time compute, allocating resources to scalable perceptual reasoning that explores and synthesizes diverse visual hypotheses. Consequently, despite its compact 10B footprint, STEP3-VL-10B rivals or surpasses models 10$\times$-20$\times$ larger (e.g., GLM-4.6V-106B, Qwen3-VL-235B) and top-tier proprietary flagships like Gemini 2.5 Pro and Seed-1.5-VL. Delivering best-in-class performance, it records 92.2% on MMBench and 80.11% on MMMU, while excelling in complex reasoning with 94.43% on AIME2025 and 75.95% on MathVision. We release the full model suite to provide the community with a powerful, efficient, and reproducible baseline.

HARDMath2: A Benchmark for Applied Mathematics Built by Students as Part of a Graduate Class

May 17, 2025

Abstract:Large language models (LLMs) have shown remarkable progress in mathematical problem-solving, but evaluation has largely focused on problems that have exact analytical solutions or involve formal proofs, often overlooking approximation-based problems ubiquitous in applied science and engineering. To fill this gap, we build on prior work and present HARDMath2, a dataset of 211 original problems covering the core topics in an introductory graduate applied math class, including boundary-layer analysis, WKB methods, asymptotic solutions of nonlinear partial differential equations, and the asymptotics of oscillatory integrals. This dataset was designed and verified by the students and instructors of a core graduate applied mathematics course at Harvard. We build the dataset through a novel collaborative environment that challenges students to write and refine difficult problems consistent with the class syllabus, peer-validate solutions, test different models, and automatically check LLM-generated solutions against their own answers and numerical ground truths. Evaluation results show that leading frontier models still struggle with many of the problems in the dataset, highlighting a gap in the mathematical reasoning skills of current LLMs. Importantly, students identified strategies to create increasingly difficult problems by interacting with the models and exploiting common failure modes. This back-and-forth with the models not only resulted in a richer and more challenging benchmark but also led to qualitative improvements in the students' understanding of the course material, which is increasingly important as we enter an age where state-of-the-art language models can solve many challenging problems across a wide domain of fields.

Krysalis Hand: A Lightweight, High-Payload, 18-DoF Anthropomorphic End-Effector for Robotic Learning and Dexterous Manipulation

Apr 17, 2025

Abstract:This paper presents the Krysalis Hand, a five-finger robotic end-effector that combines a lightweight design, high payload capacity, and a high number of degrees of freedom (DoF) to enable dexterous manipulation in both industrial and research settings. This design integrates the actuators within the hand while maintaining an anthropomorphic form. Each finger joint features a self-locking mechanism that allows the hand to sustain large external forces without active motor engagement. This approach shifts the payload limitation from the motor strength to the mechanical strength of the hand, allowing the use of smaller, more cost-effective motors. With 18 DoF and weighing only 790 grams, the Krysalis Hand delivers an active squeezing force of 10 N per finger and supports a passive payload capacity exceeding 10 lbs. These characteristics make Krysalis Hand one of the lightest, strongest, and most dexterous robotic end-effectors of its kind. Experimental evaluations validate its ability to perform intricate manipulation tasks and handle heavy payloads, underscoring its potential for industrial applications as well as academic research. All code related to the Krysalis Hand, including control and teleoperation, is available on the project GitHub repository: https://github.com/Soltanilara/Krysalis_Hand

Dynamic Attention and Bi-directional Fusion for Safety Helmet Wearing Detection

Nov 28, 2024

Abstract:Ensuring construction site safety requires accurate and real-time detection of workers' safety helmet use, despite challenges posed by cluttered environments, densely populated work areas, and hard-to-detect small or overlapping objects caused by building obstructions. This paper proposes a novel algorithm for safety helmet wearing detection, incorporating a dynamic attention mechanism within the detection head to enhance multi-scale perception. The mechanism combines feature-level attention for scale adaptation, spatial attention for spatial localization, and channel attention for task-specific insights, improving small object detection without additional computational overhead. Furthermore, a two-way fusion strategy enables bidirectional information flow, refining feature fusion through adaptive multi-scale weighting and enhancing recognition of occluded targets. Experimental results demonstrate a 1.7% improvement in mAP@[.5:.95] compared to the best baseline while reducing GFLOPs by 11.9% on larger sizes. The proposed method surpasses existing models, providing an efficient and practical solution for real-world construction safety monitoring.

Efficient Diversity-based Experience Replay for Deep Reinforcement Learning

Oct 27, 2024

Abstract:Deep Reinforcement Learning (DRL) has achieved remarkable success in solving complex decision-making problems by combining the representation capabilities of deep learning with the decision-making power of reinforcement learning. However, learning in sparse reward environments remains challenging due to insufficient feedback to guide the optimization of agents, especially in real-life environments with high-dimensional states. To tackle this issue, experience replay is commonly introduced to enhance learning efficiency through past experiences. Nonetheless, current methods of experience replay, whether based on uniform or prioritized sampling, frequently struggle with suboptimal learning efficiency and insufficient utilization of samples. This paper proposes a novel approach, diversity-based experience replay (DBER), which leverages the determinantal point process to prioritize diverse samples in state realizations. We conducted extensive experiments on Robotic Manipulation tasks in MuJoCo, Atari games, and realistic indoor environments in Habitat. The results show that our method not only significantly improves learning efficiency but also demonstrates superior performance in sparse reward environments with high-dimensional states, providing a simple yet effective solution for this field.
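
The idea of prioritizing diverse samples can be illustrated with a minimal sketch. It assumes the point process mentioned above is a determinantal point process (DPP) and uses a simple greedy determinant maximization over an RBF similarity kernel. The function names, the kernel choice, and the toy buffer are hypothetical and are not taken from the paper, whose actual sampling procedure may differ.

```python
import numpy as np

def rbf_kernel(states, bandwidth=1.0):
    """Similarity kernel: entry (i, j) is close to 1 for near-identical states."""
    sq_dists = np.sum((states[:, None, :] - states[None, :, :]) ** 2, axis=-1)
    return np.exp(-sq_dists / (2.0 * bandwidth ** 2))

def greedy_dpp_select(kernel, k):
    """Greedily pick up to k indices that approximately maximize det(kernel[S, S]).

    A large determinant means the chosen states are mutually dissimilar,
    which is the DPP notion of diversity.
    """
    n = kernel.shape[0]
    selected = []
    for _ in range(min(k, n)):
        best_idx, best_logdet = None, -np.inf
        for i in range(n):
            if i in selected:
                continue
            idx = selected + [i]
            sign, logdet = np.linalg.slogdet(kernel[np.ix_(idx, idx)])
            if sign > 0 and logdet > best_logdet:
                best_idx, best_logdet = i, logdet
        if best_idx is None:  # no candidate keeps the submatrix positive definite
            break
        selected.append(best_idx)
    return selected

# Hypothetical replay buffer of 2-D states: three near-duplicates and one outlier.
states = np.array([[0.0, 0.0], [0.01, 0.0], [0.0, 0.01], [3.0, 3.0]])
batch = greedy_dpp_select(rbf_kernel(states), k=2)
print(batch)
```

In this toy buffer the selection avoids drawing two of the near-duplicate states together, whereas uniform sampling would often do so.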

Enhancing LLM Agents for Code Generation with Possibility and Pass-rate Prioritized Experience Replay

Oct 16, 2024

Abstract:Transformer-based Large Language Models (LLMs) for code generation tasks usually apply sampling and filtering pipelines. Due to the sparse reward problem in code generation tasks caused by one-token incorrectness, transformer-based models sample redundant programs until they find a correct one, leading to low efficiency. To overcome this challenge, we incorporate Experience Replay (ER) in the fine-tuning phase, where the programs produced are stored and replayed to give the LLM agent a chance to learn from past experiences. Based on the spirit of ER, we introduce a novel approach called the BTP pipeline, which consists of three phases: beam search sampling, testing, and prioritized experience replay. The approach makes use of failed programs collected by code models and replays programs with a high Possibility and Pass-rate Prioritized value (P2Value) from the replay buffer to improve efficiency. P2Value jointly considers the possibility of the transformer's output and the pass rate, and can make use of the otherwise wasted resources that arise because most programs collected by LLMs fail to pass any tests. We empirically apply our approach to several LLMs, demonstrating that it enhances their performance in code generation tasks and surpasses existing baselines.

DIOR: Dataset for Indoor-Outdoor Reidentification -- Long Range 3D/2D Skeleton Gait Collection Pipeline, Semi-Automated Gait Keypoint Labeling and Baseline Evaluation Methods

Sep 21, 2023

Abstract:In recent times, there has been increased interest in the identification and re-identification of people at long distances, such as from rooftop cameras, UAV cameras, street cams, and others. Such recognition needs to go beyond the face and use whole-body markers such as gait. However, datasets to train and test such recognition algorithms are not widely prevalent, and fewer are labeled. This paper introduces DIOR -- a framework for data collection and semi-automated annotation -- and also provides a dataset with 14 subjects and 1.649 million RGB frames with 3D/2D skeleton gait labels, including 200 thousand frames from a long-range camera. Our approach leverages advanced 3D computer vision techniques to attain pixel-level accuracy in indoor settings with motion capture systems. Additionally, for outdoor long-range settings, we remove the dependency on motion capture systems and adopt a low-cost, hybrid 3D computer vision and learning pipeline with only 4 low-cost RGB cameras, successfully achieving precise skeleton labeling on far-away subjects, even when their height is limited to a mere 20-25 pixels within an RGB frame. On publication, we will make our pipeline open for others to use.

HyperINR: A Fast and Predictive Hypernetwork for Implicit Neural Representations via Knowledge Distillation

Apr 09, 2023

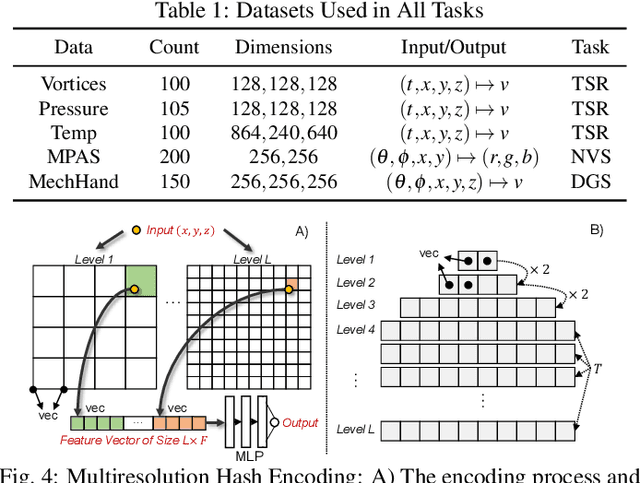

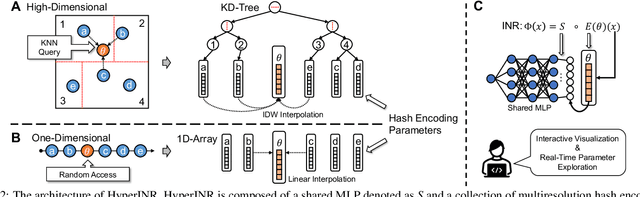

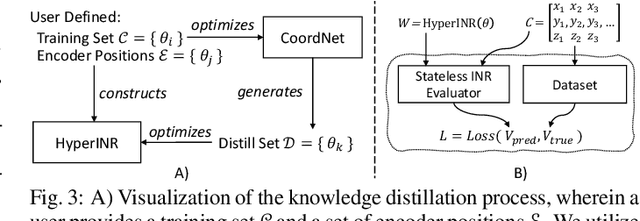

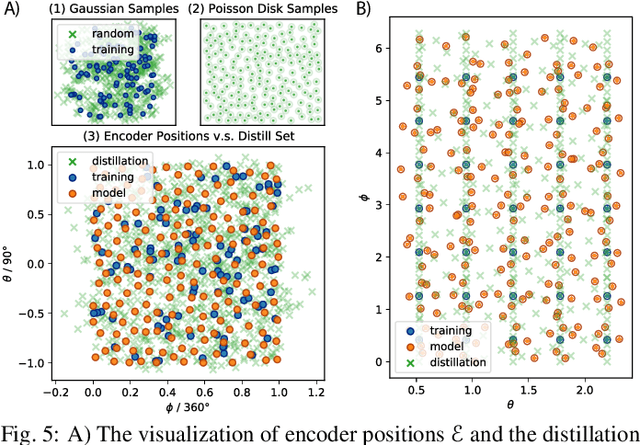

Abstract:Implicit Neural Representations (INRs) have recently exhibited immense potential in the field of scientific visualization for both data generation and visualization tasks. However, these representations often consist of large multi-layer perceptrons (MLPs), necessitating millions of operations for a single forward pass, consequently hindering interactive visual exploration. While reducing the size of the MLPs and employing efficient parametric encoding schemes can alleviate this issue, it compromises generalizability for unseen parameters, rendering it unsuitable for tasks such as temporal super-resolution. In this paper, we introduce HyperINR, a novel hypernetwork architecture capable of directly predicting the weights for a compact INR. By harnessing an ensemble of multiresolution hash encoding units in unison, the resulting INR attains state-of-the-art inference performance (up to 100x higher inference bandwidth) and can support interactive photo-realistic volume visualization. Additionally, by incorporating knowledge distillation, exceptional data and visualization generation quality is achieved, making our method valuable for real-time parameter exploration. We validate the effectiveness of the HyperINR architecture through a comprehensive ablation study. We showcase the versatility of HyperINR across three distinct scientific domains: novel view synthesis, temporal super-resolution of volume data, and volume rendering with dynamic global shadows. By simultaneously achieving efficiency and generalizability, HyperINR paves the way for applying INR in a wider array of scientific visualization applications.

Design of an Adaptive Lightweight LiDAR to Decouple Robot-Camera Geometry

Feb 28, 2023

Abstract:A fundamental challenge in robot perception is the coupling of the sensor pose and robot pose. This has led to research in active vision, where the robot pose is changed to reorient the sensor to areas of interest for perception. Further, egomotion such as jitter and external effects such as wind degrade perception, requiring additional effort in software such as image stabilization. This effect is particularly pronounced in micro-air vehicles and micro-robots, which are typically lighter and subject to larger jitter but do not have the computational capability to perform stabilization in real time. We present a novel microelectromechanical (MEMS) mirror LiDAR system to change the field of view of the LiDAR independent of the robot motion. Our design has the potential for use on small, low-power systems where the expensive components of the LiDAR can be placed external to the small robot. We show the utility of our approach in simulation and on prototype hardware mounted on a UAV. We believe that this LiDAR and its compact movable scanning design provide mechanisms to decouple robot and sensor geometry, allowing us to simplify robot perception. We also demonstrate examples of motion compensation using IMU and external odometry feedback in hardware.

Twitter's Agenda-Setting Role: A Study of Twitter Strategy for Political Diversion

Dec 16, 2022

Abstract:This study verified the effectiveness of Donald Trump's Twitter campaign in guiding agenda-setting and deflecting political risk, and examined his Twitter communication strategy and the communication effects of his tweet content during the Covid-19 pandemic. We collected all tweets posted by Trump on the Twitter platform from January 1, 2020 to December 31, 2020. We used Ordinary Least Squares (OLS) regression analysis with a fixed effects model to analyze the existence of the Twitter strategy. The correlation between the number of confirmed daily Covid-19 diagnoses and the number of particular thematic tweets was investigated using time series analysis. Empirical analysis revealed that this Twitter strategy was used to divert public attention from negative Covid-19 reports during the epidemic, and that it had a powerful political communication effect on Twitter. However, findings suggest that Trump did not use false claims to divert political risk and shape public opinion.
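
As an illustration of OLS with fixed effects, the following is a minimal sketch of the within estimator: each variable is demeaned inside its group before ordinary least squares is run. The abstract does not state which fixed effects or regressors the study used, so the weekly grouping, the variable names, and the data below are hypothetical and purely synthetic.

```python
import numpy as np

def within_ols(y, x, groups):
    """Fixed-effects (within) estimator: demean y and x inside each group, then run OLS."""
    y = np.asarray(y, dtype=float)
    x = np.asarray(x, dtype=float).reshape(len(y), -1)
    groups = np.asarray(groups)
    y_dm, x_dm = y.copy(), x.copy()
    for g in np.unique(groups):
        mask = groups == g
        y_dm[mask] -= y[mask].mean()        # removes the group-specific intercept
        x_dm[mask] -= x[mask].mean(axis=0)
    beta, *_ = np.linalg.lstsq(x_dm, y_dm, rcond=None)
    return beta

# Synthetic data only (not from the study): 10 hypothetical weeks of 7 days each.
rng = np.random.default_rng(0)
weeks = np.repeat(np.arange(10), 7)
week_effect = rng.normal(size=10)[weeks]    # unobserved per-week effect
cases = rng.normal(size=70)                 # stand-in for standardized daily case counts
tweets = 0.5 * cases + week_effect + 0.01 * rng.normal(size=70)

beta = within_ols(tweets, cases, weeks)
print(beta)
```

Because the per-week effect is removed by demeaning, the estimated slope stays close to the 0.5 used to generate the synthetic data.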
