David Bauer

HyperFLINT: Hypernetwork-based Flow Estimation and Temporal Interpolation for Scientific Ensemble Visualization

Dec 05, 2024

Abstract: We present HyperFLINT (Hypernetwork-based FLow estimation and temporal INTerpolation), a novel deep learning-based approach for estimating flow fields, temporally interpolating scalar fields, and facilitating parameter space exploration in spatio-temporal scientific ensemble data. This work addresses the critical need to explicitly incorporate ensemble parameters into the learning process, as traditional methods often neglect these, limiting their ability to adapt to diverse simulation settings and provide meaningful insights into the data dynamics. HyperFLINT introduces a hypernetwork to account for simulation parameters, enabling it to generate accurate interpolations and flow fields for each timestep by dynamically adapting to varying conditions, thereby outperforming existing parameter-agnostic approaches. The architecture features modular neural blocks with convolutional and deconvolutional layers, supported by a hypernetwork that generates weights for the main network, allowing the model to better capture intricate simulation dynamics. A series of experiments demonstrates HyperFLINT's significantly improved performance in flow field estimation and temporal interpolation, as well as its potential in enabling parameter space exploration, offering valuable insights into complex scientific ensembles.
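The idea of a hypernetwork that generates weights for a main network can be illustrated with a small NumPy sketch. This is not the HyperFLINT architecture: the layer sizes, the single dense main layer (standing in for the convolutional blocks named in the abstract), and all function names are assumptions chosen for illustration, and no training is shown.

```python
import numpy as np

def init_hypernetwork(param_dim, hidden_dim, in_dim, out_dim, seed=0):
    """Create a tiny two-layer hypernetwork (hypothetical sizes and names)."""
    rng = np.random.default_rng(seed)
    n_main = in_dim * out_dim + out_dim  # weights plus biases of the main layer
    return {
        "W1": rng.normal(0.0, 0.1, (param_dim, hidden_dim)),
        "b1": np.zeros(hidden_dim),
        "W2": rng.normal(0.0, 0.1, (hidden_dim, n_main)),
        "b2": np.zeros(n_main),
        "shape": (in_dim, out_dim),
    }

def generate_main_weights(hyper, sim_params):
    """Map a vector of simulation parameters to the main layer's weights."""
    h = np.tanh(sim_params @ hyper["W1"] + hyper["b1"])
    flat = h @ hyper["W2"] + hyper["b2"]
    in_dim, out_dim = hyper["shape"]
    W = flat[: in_dim * out_dim].reshape(in_dim, out_dim)
    b = flat[in_dim * out_dim:]
    return W, b

def main_forward(x, W, b):
    """Main network: one dense layer with ReLU, using the generated weights."""
    return np.maximum(x @ W + b, 0.0)

# Two ensemble members with different (made-up) simulation parameters
hyper = init_hypernetwork(param_dim=3, hidden_dim=16, in_dim=8, out_dim=4)
x = np.ones((2, 8))
W_a, b_a = generate_main_weights(hyper, np.array([0.1, 0.5, 0.9]))
W_b, b_b = generate_main_weights(hyper, np.array([0.9, 0.5, 0.1]))
y_a = main_forward(x, W_a, b_a)
y_b = main_forward(x, W_b, b_b)
```

The same input `x` passes through different main-layer weights depending on the simulation parameters, which is the general mechanism by which a hypernetwork-conditioned model can adapt to each ensemble member.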

Photon Field Networks for Dynamic Real-Time Volumetric Global Illumination

Apr 14, 2023

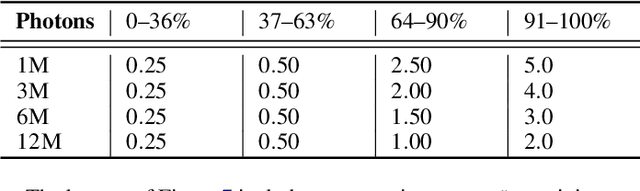

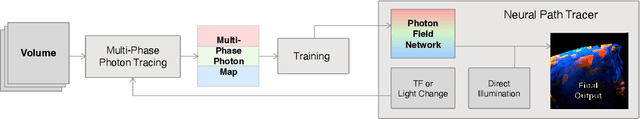

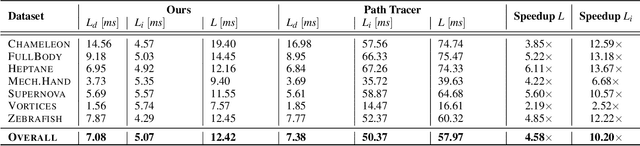

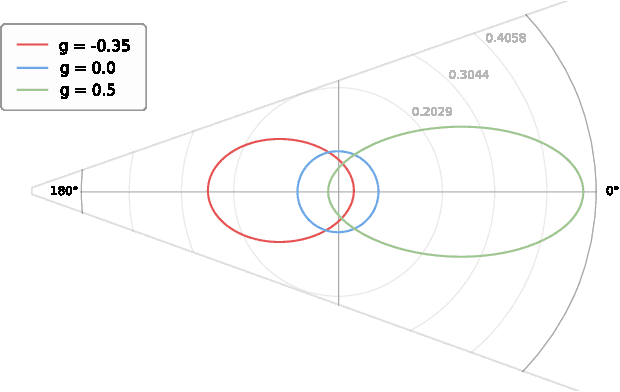

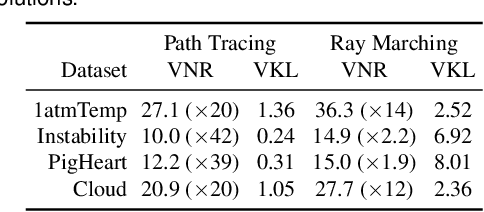

Abstract: Volume data is commonly found in many scientific disciplines, like medicine, physics, and biology. Experts rely on robust scientific visualization techniques to extract valuable insights from the data. Recent years have shown path tracing to be the preferred approach for volumetric rendering, given its high levels of realism. However, real-time volumetric path tracing often suffers from stochastic noise and long convergence times, limiting interactive exploration. In this paper, we present a novel method to enable real-time global illumination for volume data visualization. We develop Photon Field Networks -- a phase-function-aware, multi-light neural representation of indirect volumetric global illumination. The fields are trained on multi-phase photon caches that we compute a priori. Training can be done within seconds, after which the fields can be used in various rendering tasks. To showcase their potential, we develop a custom neural path tracer, with which our photon fields achieve interactive framerates even on large datasets. We conduct in-depth evaluations of the method's performance, including visual quality, stochastic noise, inference and rendering speeds, and accuracy regarding illumination and phase function awareness. Results are compared to ray marching, path tracing and photon mapping. Our findings show that Photon Field Networks can faithfully represent indirect global illumination across the phase spectrum while exhibiting less stochastic noise and rendering at a significantly faster rate than traditional methods.

HyperINR: A Fast and Predictive Hypernetwork for Implicit Neural Representations via Knowledge Distillation

Apr 09, 2023

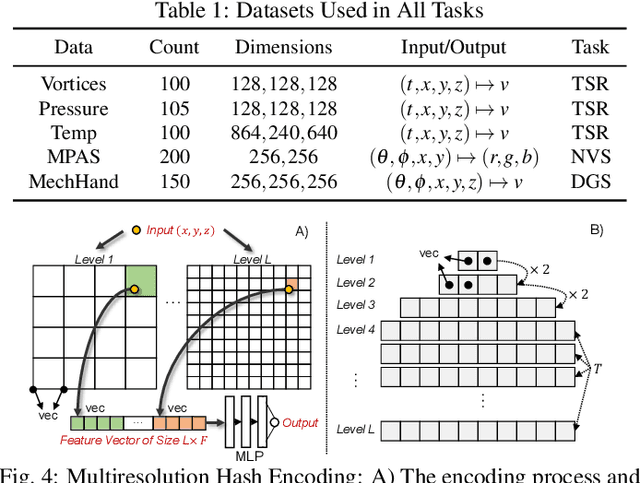

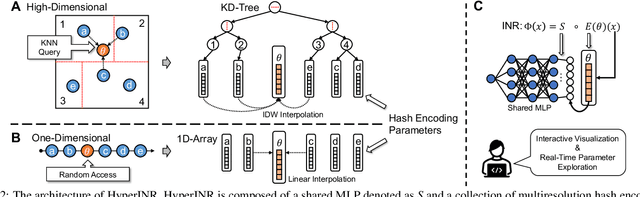

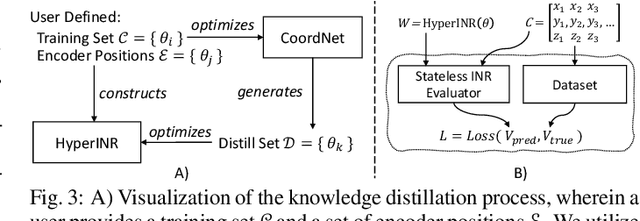

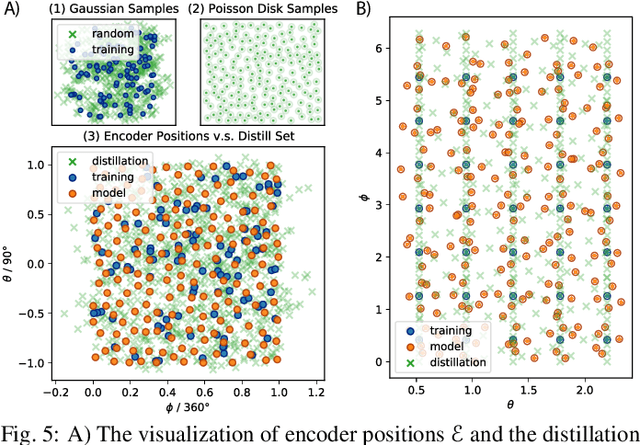

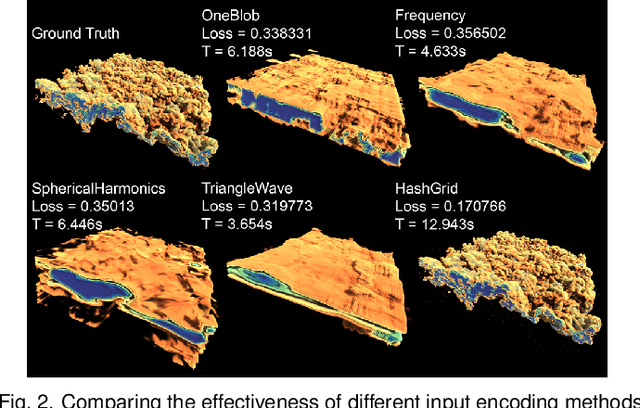

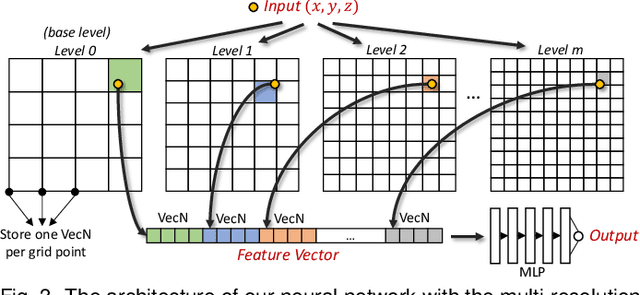

Abstract: Implicit Neural Representations (INRs) have recently exhibited immense potential in the field of scientific visualization for both data generation and visualization tasks. However, these representations often consist of large multi-layer perceptrons (MLPs), necessitating millions of operations for a single forward pass, consequently hindering interactive visual exploration. While reducing the size of the MLPs and employing efficient parametric encoding schemes can alleviate this issue, it compromises generalizability for unseen parameters, rendering it unsuitable for tasks such as temporal super-resolution. In this paper, we introduce HyperINR, a novel hypernetwork architecture capable of directly predicting the weights for a compact INR. By harnessing an ensemble of multiresolution hash encoding units in unison, the resulting INR attains state-of-the-art inference performance (up to 100x higher inference bandwidth) and can support interactive photo-realistic volume visualization. Additionally, by incorporating knowledge distillation, exceptional data and visualization generation quality is achieved, making our method valuable for real-time parameter exploration. We validate the effectiveness of the HyperINR architecture through a comprehensive ablation study. We showcase the versatility of HyperINR across three distinct scientific domains: novel view synthesis, temporal super-resolution of volume data, and volume rendering with dynamic global shadows. By simultaneously achieving efficiency and generalizability, HyperINR paves the way for applying INR in a wider array of scientific visualization applications.

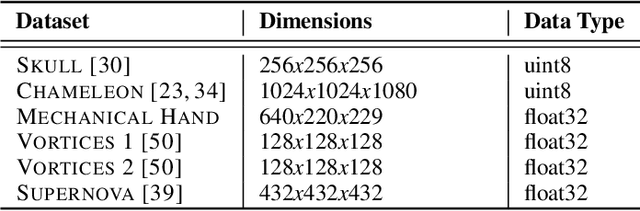

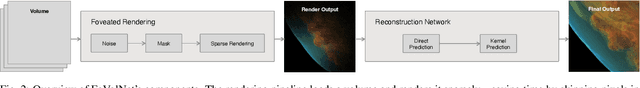

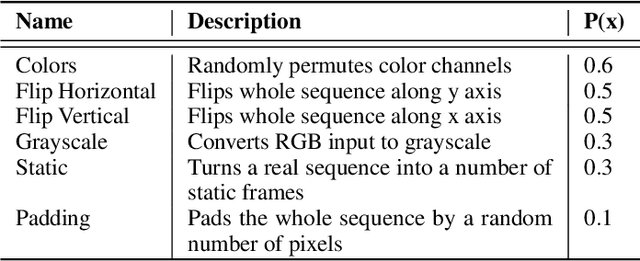

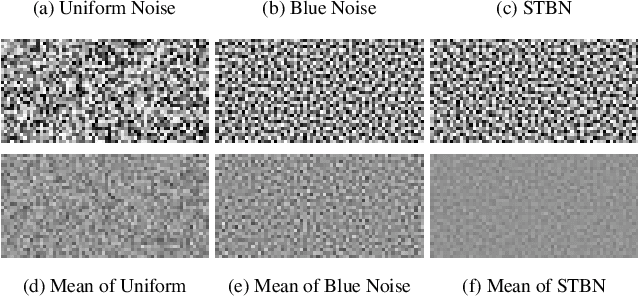

FoVolNet: Fast Volume Rendering using Foveated Deep Neural Networks

Sep 20, 2022

Abstract: Volume data is found in many important scientific and engineering applications. Rendering this data for visualization at high quality and interactive rates for demanding applications such as virtual reality is still not easily achievable even using professional-grade hardware. We introduce FoVolNet -- a method to significantly increase the performance of volume data visualization. We develop a cost-effective foveated rendering pipeline that sparsely samples a volume around a focal point and reconstructs the full-frame using a deep neural network. Foveated rendering is a technique that prioritizes rendering computations around the user's focal point. This approach leverages properties of the human visual system, thereby saving computational resources when rendering data in the periphery of the user's field of vision. Our reconstruction network combines direct and kernel prediction methods to produce fast, stable, and perceptually convincing output. With a slim design and the use of quantization, our method outperforms state-of-the-art neural reconstruction techniques in both end-to-end frame times and visual quality. We conduct extensive evaluations of the system's rendering performance, inference speed, and perceptual properties, and we provide comparisons to competing neural image reconstruction techniques. Our test results show that FoVolNet consistently achieves significant time saving over conventional rendering while preserving perceptual quality.
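Sparse sampling around a focal point can be sketched as a per-pixel sampling mask whose density falls off with distance from the focus. The inverse-distance falloff, the parameter names, and the random sampling below are assumptions for illustration only. The abstract does not specify FoVolNet's sampling pattern, and the neural reconstruction step is not shown.

```python
import numpy as np

def foveated_mask(height, width, focus_xy, fovea_radius, min_density, seed=0):
    """Return a boolean mask of pixels to render (hypothetical falloff model).

    Pixels within `fovea_radius` of the focal point are always sampled;
    farther out, the sampling probability decays as radius / distance,
    but never drops below `min_density`.
    """
    rng = np.random.default_rng(seed)
    ys, xs = np.mgrid[0:height, 0:width]
    dist = np.hypot(xs - focus_xy[0], ys - focus_xy[1])
    density = np.clip(fovea_radius / np.maximum(dist, 1e-9), min_density, 1.0)
    return rng.random((height, width)) < density

mask = foveated_mask(64, 64, focus_xy=(32, 32), fovea_radius=8, min_density=0.05)
fovea_rate = mask[28:37, 28:37].mean()   # region fully inside the fovea
corner_rate = mask[:8, :8].mean()        # far periphery
print(f"sampled fraction: {mask.mean():.2f}, fovea: {fovea_rate:.2f}, corner: {corner_rate:.2f}")
```

Only the pixels where `mask` is true would be rendered; a reconstruction network would then fill in the rest of the frame.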

Instant Neural Representation for Interactive Volume Rendering

Jul 23, 2022

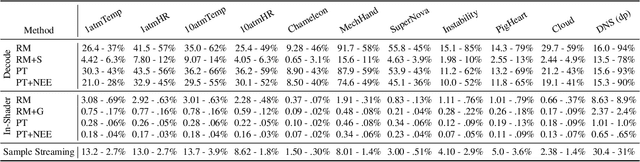

Abstract: Neural networks have shown great potential in compressing volumetric data for scientific visualization. However, due to the high cost of training and inference, such volumetric neural representations have thus far only been applied to offline data processing and non-interactive rendering. In this paper, we demonstrate that by simultaneously leveraging modern GPU tensor cores, a native CUDA neural network framework, and online training, we can achieve high-performance and high-fidelity interactive ray tracing using volumetric neural representations. Additionally, our method is fully generalizable and can adapt to time-varying datasets on the fly. We present three strategies for online training, each leveraging a different combination of the GPU, the CPU, and out-of-core streaming techniques. We also develop three rendering implementations that allow interactive ray tracing to be coupled with real-time volume decoding, sample streaming, and in-shader neural network inference. We demonstrate that our volumetric neural representations can scale up to terascale for regular-grid volume visualization, and can easily support irregular data structures such as OpenVDB, unstructured, AMR, and particle volume data.
