Yicheng Zhang

Reinforcement Fine-Tuning for History-Aware Dense Retriever in RAG

Feb 03, 2026

Abstract:Retrieval-augmented generation (RAG) enables large language models (LLMs) to produce evidence-based responses, and its performance hinges on the matching between the retriever and LLMs. Retriever optimization has emerged as an efficient alternative to fine-tuning LLMs. However, existing solutions suffer from an objective mismatch between retriever optimization and the goal of the RAG pipeline. Reinforcement learning (RL) provides a promising solution to address this limitation, yet applying RL to retriever optimization introduces two fundamental challenges: 1) deterministic retrieval is incompatible with RL formulations, and 2) state aliasing arises from query-only retrieval in multi-hop reasoning. To address these challenges, we replace deterministic retrieval with stochastic sampling and formulate RAG as a Markov decision process, making the retriever optimizable by RL. Further, we incorporate retrieval history into the state at each retrieval step to mitigate state aliasing. Extensive experiments across diverse RAG pipelines, datasets, and retriever scales demonstrate consistent improvements of our approach in RAG performance.
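The two ideas in this abstract, stochastic retrieval and a history-aware state, can be illustrated with a small sketch. It is not the authors' implementation: the softmax-with-temperature policy, the `[SEP]` separator, and the function names `retrieval_distribution`, `sample_document`, and `build_state` are all assumptions made for illustration.

```python
import math
import random


def retrieval_distribution(scores, temperature=1.0):
    """Turn retriever similarity scores into sampling probabilities.

    Assumption: a softmax with temperature; the abstract only says that
    deterministic retrieval is replaced with stochastic sampling.
    """
    top = max(scores)  # subtract the max for numerical stability
    exps = [math.exp((s - top) / temperature) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def sample_document(scores, rng, temperature=1.0):
    """Sample one document index and return it with its log-probability.

    The log-probability is what a policy-gradient method would weight
    by the downstream reward.
    """
    probs = retrieval_distribution(scores, temperature)
    idx = rng.choices(range(len(scores)), weights=probs, k=1)[0]
    return idx, math.log(probs[idx])


def build_state(query, history):
    """History-aware state: the query plus documents retrieved so far.

    Assumption: plain string concatenation with a hypothetical separator.
    """
    return " [SEP] ".join([query, *history])


rng = random.Random(0)
idx, log_prob = sample_document([2.0, 1.0, 0.0], rng)
state = build_state("who directed the film?", ["doc about the film"])
```

Because two retrieval steps with the same query but different histories now yield different states, the retriever can in principle return different documents at each hop.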

Is Nano Banana Pro a Low-Level Vision All-Rounder? A Comprehensive Evaluation on 14 Tasks and 40 Datasets

Dec 19, 2025

Abstract:The rapid evolution of text-to-image generation models has revolutionized visual content creation. While commercial products like Nano Banana Pro have garnered significant attention, their potential as generalist solvers for traditional low-level vision challenges remains largely underexplored. In this study, we investigate the critical question: Is Nano Banana Pro a Low-Level Vision All-Rounder? We conducted a comprehensive zero-shot evaluation across 14 distinct low-level tasks spanning 40 diverse datasets. By utilizing simple textual prompts without fine-tuning, we benchmarked Nano Banana Pro against state-of-the-art specialist models. Our extensive analysis reveals a distinct performance dichotomy: while Nano Banana Pro demonstrates superior subjective visual quality, often hallucinating plausible high-frequency details that surpass specialist models, it lags behind in traditional reference-based quantitative metrics. We attribute this discrepancy to the inherent stochasticity of generative models, which struggle to maintain the strict pixel-level consistency required by conventional metrics. This report identifies Nano Banana Pro as a capable zero-shot contender for low-level vision tasks, while highlighting that achieving the high fidelity of domain specialists remains a significant hurdle.

Learning to Tell Apart: Weakly Supervised Video Anomaly Detection via Disentangled Semantic Alignment

Nov 13, 2025

Abstract:Recent advancements in weakly-supervised video anomaly detection have achieved remarkable performance by applying the multiple instance learning paradigm based on multimodal foundation models such as CLIP to highlight anomalous instances and classify categories. However, their objectives tend to detect the most salient response segments while neglecting to mine diverse normal patterns separated from anomalies, and they are prone to category confusion due to similar appearance, leading to unsatisfactory fine-grained classification results. Therefore, we propose a novel Disentangled Semantic Alignment Network (DSANet) to explicitly separate abnormal and normal features from coarse-grained and fine-grained aspects, enhancing the distinguishability. Specifically, at the coarse-grained level, we introduce a self-guided normality modeling branch that reconstructs input video features under the guidance of learned normal prototypes, encouraging the model to exploit normality cues inherent in the video, thereby improving the temporal separation of normal patterns and anomalous events. At the fine-grained level, we present a decoupled contrastive semantic alignment mechanism, which first temporally decomposes each video into event-centric and background-centric components using frame-level anomaly scores and then applies visual-language contrastive learning to enhance class-discriminative representations. Comprehensive experiments on two standard benchmarks, namely XD-Violence and UCF-Crime, demonstrate that DSANet outperforms existing state-of-the-art methods.

SM3Det: A Unified Model for Multi-Modal Remote Sensing Object Detection

Dec 30, 2024

Abstract:With the rapid advancement of remote sensing technology, high-resolution multi-modal imagery is now more widely accessible. Conventional object detection models are trained on a single dataset, often restricted to a specific imaging modality and annotation format. However, such an approach overlooks the valuable shared knowledge across modalities and limits the model's applicability in more versatile scenarios. This paper introduces a new task called Multi-Modal Datasets and Multi-Task Object Detection (M2Det) for remote sensing, designed to accurately detect horizontal or oriented objects from any sensor modality. This task poses challenges due to 1) the trade-offs involved in managing multi-modal modelling and 2) the complexities of multi-task optimization. To address these, we establish a benchmark dataset and propose a unified model, SM3Det (Single Model for Multi-Modal datasets and Multi-Task object Detection). SM3Det leverages a grid-level sparse MoE backbone to enable joint knowledge learning while preserving distinct feature representations for different modalities. Furthermore, it integrates a consistency and synchronization optimization strategy using dynamic learning rate adjustment, allowing it to effectively handle varying levels of learning difficulty across modalities and tasks. Extensive experiments demonstrate SM3Det's effectiveness and generalizability, consistently outperforming specialized models on individual datasets. The code is available at https://github.com/zcablii/SM3Det.

Personalized Federated Fine-Tuning for LLMs via Data-Driven Heterogeneous Model Architectures

Nov 28, 2024

Abstract:A large amount of instructional text data is essential to enhance the performance of pre-trained large language models (LLMs) for downstream tasks. This data can contain sensitive information and therefore cannot be shared in practice, resulting in data silos that limit the effectiveness of LLMs on various tasks. Federated learning (FL) enables collaborative fine-tuning across different clients without sharing their data. Nonetheless, in practice, this instructional text data is highly heterogeneous in both quantity and distribution across clients, necessitating distinct model structures to best accommodate the variations. However, existing federated fine-tuning approaches either enforce the same model structure or rely on predefined ad-hoc architectures unaware of data distribution, resulting in suboptimal performance. To address this challenge, we propose FedAMoLE, a lightweight personalized federated fine-tuning framework that leverages data-driven heterogeneous model architectures. FedAMoLE introduces the Adaptive Mixture of LoRA Experts (AMoLE) module, which facilitates model heterogeneity with minimal communication overhead by allocating varying numbers of LoRA-based domain experts to each client. Furthermore, we develop a reverse selection-based expert assignment (RSEA) strategy, which enables data-driven model architecture adjustment during fine-tuning by allowing domain experts to select clients that best align with their knowledge domains. Extensive experiments across six different scenarios of data heterogeneity demonstrate that FedAMoLE significantly outperforms existing methods for federated LLM fine-tuning, achieving superior accuracy while maintaining good scalability.

Aircraft Landing Time Prediction with Deep Learning on Trajectory Images

Jan 02, 2024

Abstract:Aircraft landing time (ALT) prediction is crucial for air traffic management, especially for arrival aircraft sequencing on the runway. In this study, a trajectory image-based deep learning method is proposed to predict ALTs for the aircraft entering the research airspace that covers the Terminal Maneuvering Area (TMA). Specifically, the trajectories of all airborne arrival aircraft within the temporal capture window are used to generate an image with the target aircraft trajectory labeled as red and all background aircraft trajectories labeled as blue. The trajectory images contain various information, including the aircraft position, speed, heading, relative distances, and arrival traffic flows. This enables us to use state-of-the-art deep convolutional neural networks for ALT modeling. We also use real-time runway usage obtained from the trajectory data and external information such as aircraft types and weather conditions as additional inputs. Moreover, a convolutional neural network (CNN) based module is designed for automatic holding-related featurizing, which takes the trajectory images, the leading aircraft holding status, and their time and speed gap at the research airspace boundary as its inputs. Its output is further fed into the final end-to-end ALT prediction. The proposed ALT prediction approach is applied to Singapore Changi Airport (ICAO Code: WSSS) using one-month Automatic Dependent Surveillance-Broadcast (ADS-B) data from November 1 to November 30, 2022. Experimental results show that by integrating the holding featurization, we can reduce the mean absolute error (MAE) from 82.23 seconds to 43.96 seconds, and achieve an average accuracy of 96.1%, with 79.4% of the prediction errors being less than 60 seconds.
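For readers unfamiliar with the two error measures quoted in this abstract, the sketch below shows how a mean absolute error and the share of errors under a threshold are typically computed. The function names and the sample values are hypothetical and are not taken from the paper's data.

```python
def mean_absolute_error(predicted, actual):
    """Average of |predicted - actual| over all samples, in seconds."""
    if len(predicted) != len(actual) or not predicted:
        raise ValueError("inputs must be non-empty and of equal length")
    return sum(abs(p - a) for p, a in zip(predicted, actual)) / len(predicted)


def fraction_within(predicted, actual, threshold=60.0):
    """Share of predictions whose absolute error is below the threshold."""
    if len(predicted) != len(actual) or not predicted:
        raise ValueError("inputs must be non-empty and of equal length")
    hits = sum(1 for p, a in zip(predicted, actual) if abs(p - a) < threshold)
    return hits / len(predicted)


# Hypothetical landing times in seconds, for illustration only.
predicted = [100.0, 200.0, 300.0]
actual = [110.0, 180.0, 300.0]
mae = mean_absolute_error(predicted, actual)
share = fraction_within(predicted, actual, threshold=15.0)
```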

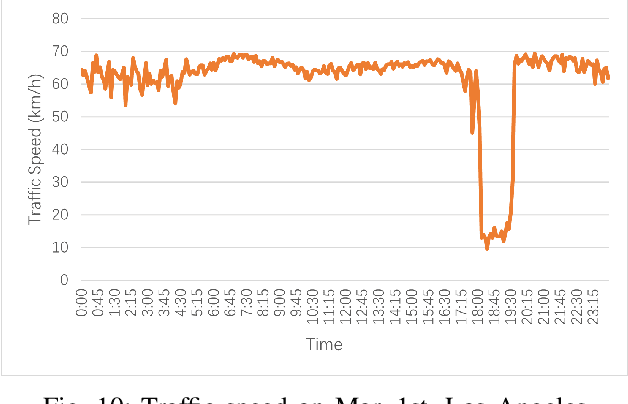

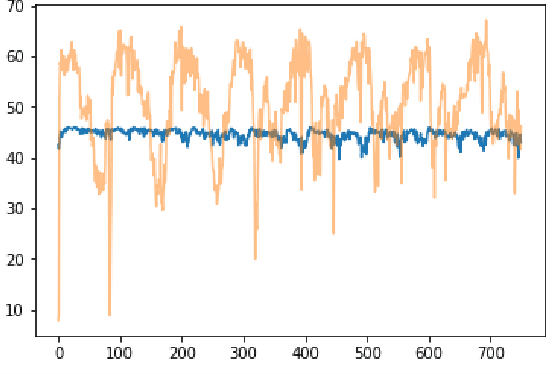

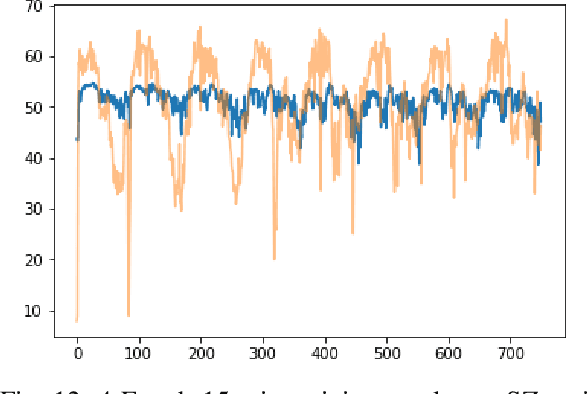

STGIN: A Spatial Temporal Graph-Informer Network for Long Sequence Traffic Speed Forecasting

Oct 01, 2022

Abstract:Accurate long series forecasting of traffic information is critical for the development of intelligent traffic systems. The rapid growth of neural network analysis technology makes it possible to better understand the underlying functioning patterns of traffic networks. Because traffic data and facility utilization circumstances are sequentially dependent on past and present situations, several related neural network techniques based on temporal dependency extraction models have been developed to solve the problem. The complicated topological road structure, on the other hand, amplifies the effect of spatial interdependence, which cannot be captured by pure temporal extraction approaches. Additionally, the typical Deep Recurrent Neural Network (RNN) topology has a constraint on global information extraction, which is required for comprehensive long-term prediction. This study proposes a new spatial-temporal neural network architecture, called Spatial-Temporal Graph-Informer (STGIN), to handle the long-term traffic parameter forecasting problem by merging the Informer and Graph Attention Network (GAT) layers for spatial and temporal relationship extraction. The attention mechanism potentially guarantees long-term prediction performance without significant information loss from distant inputs. On two real-world traffic datasets with varying horizons, experimental findings validate the long sequence prediction abilities, and further interpretation is provided.

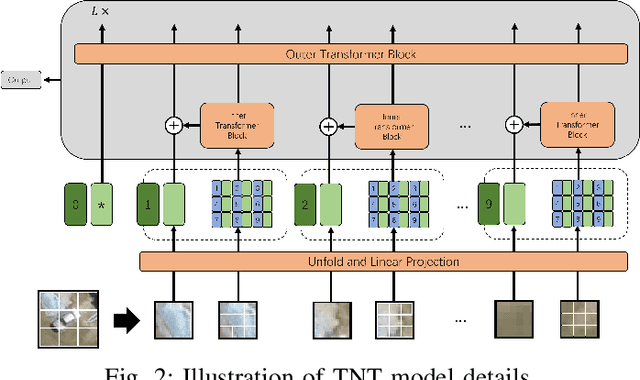

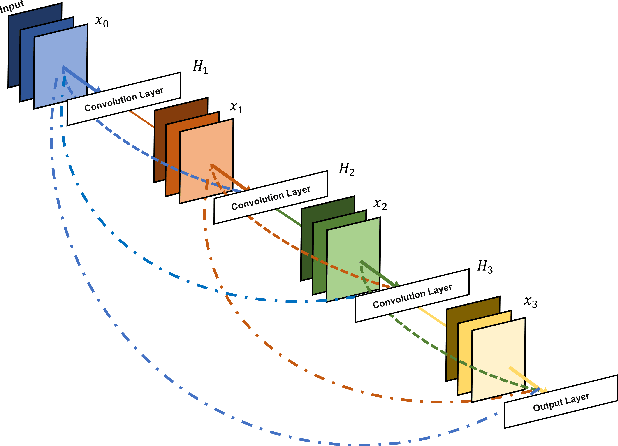

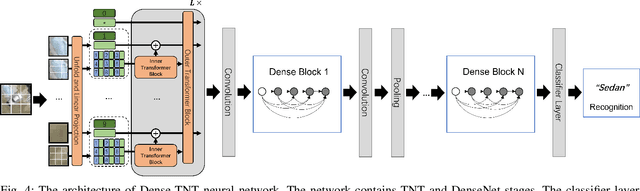

Dense-TNT: Efficient Vehicle Type Classification Neural Network Using Satellite Imagery

Sep 27, 2022

Abstract:Accurate vehicle type classification plays a significant role in the intelligent transportation system. It is critical for traffic authorities to understand the road conditions, and it usually helps the traffic light control system respond accordingly to alleviate traffic congestion. New technologies and comprehensive data sources, such as aerial photos and remote sensing data, provide richer, higher-dimensional information. Also, due to the rapid development of deep neural network technology, image-based vehicle classification methods can better extract underlying objective features when processing data. Recently, several deep learning models have been proposed to solve the problem. However, traditional purely convolution-based approaches have constraints on global information extraction, and complex environments, such as bad weather, seriously limit the recognition capability. To improve the vehicle type classification capability in complex environments, this study proposes a novel Densely Connected Convolutional Transformer in Transformer Neural Network (Dense-TNT) framework for vehicle type classification by stacking Densely Connected Convolutional Network (DenseNet) and Transformer in Transformer (TNT) layers. Vehicle data from three regions and four different weather conditions are used for recognition capability evaluation. Experimental findings validate the recognition ability of our proposed vehicle classification model with little decay, even under heavy fog.

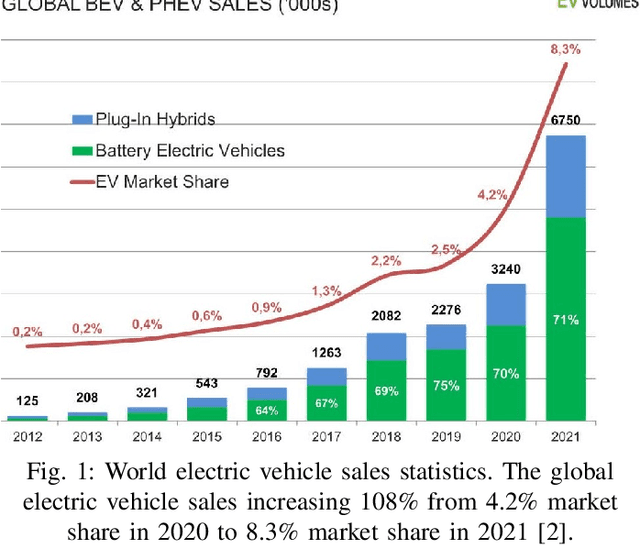

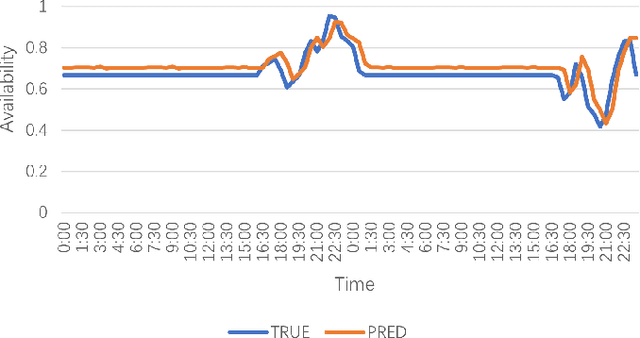

AST-GIN: Attribute-Augmented Spatial-Temporal Graph Informer Network for Electric Vehicle Charging Station Availability Forecasting

Sep 07, 2022

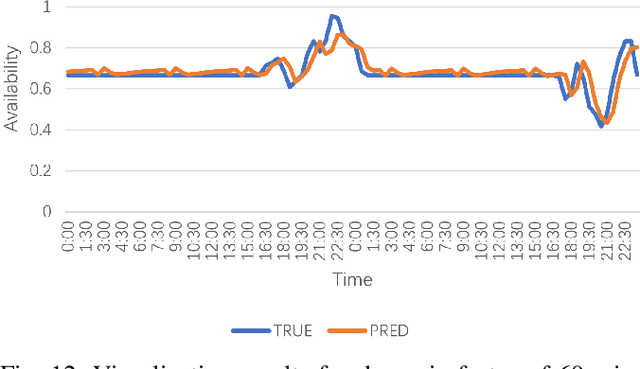

Abstract:Electric Vehicle (EV) charging demand and charging station availability forecasting is one of the challenges in the intelligent transportation system. With accurate prediction of the EV station situation, suitable charging behaviors could be scheduled in advance to relieve range anxiety. Many deep learning methods have been proposed to address this issue; however, due to the complex road network structure and comprehensive external factors, such as points of interest (POIs) and weather effects, many commonly used algorithms extract only the historical usage information without considering the comprehensive influence of external factors. To enhance the prediction accuracy and interpretability, the Attribute-Augmented Spatial-Temporal Graph Informer (AST-GIN) structure is proposed in this study by combining the Graph Convolutional Network (GCN) layer and the Informer layer to extract both external and internal spatial-temporal dependence of relevant transportation data. The external factors are modeled as dynamic attributes by the attribute-augmented encoder for training. The AST-GIN model is tested on data collected in Dundee City, and experimental results show the effectiveness of our model, which considers the influence of external factors, over various horizon settings compared with other baselines.

Bicycle Detection Based On Multi-feature and Multi-frame Fusion in low-resolution traffic videos

Jun 11, 2017

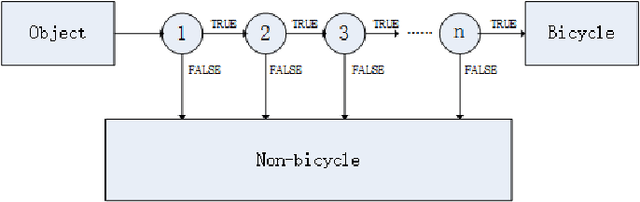

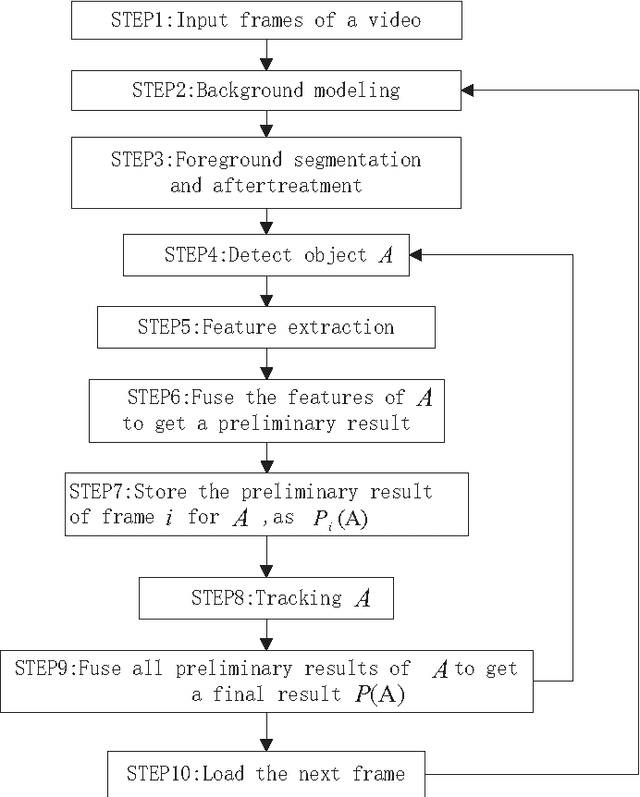

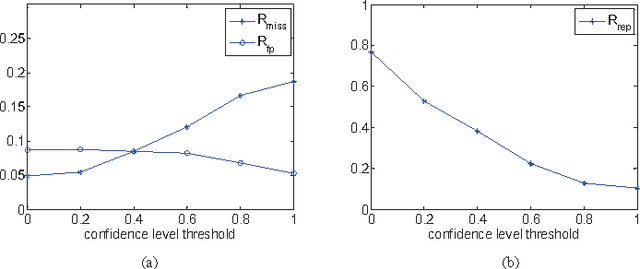

Abstract:As a major type of transportation equipment, bicycles, including electric bicycles, are distributed almost everywhere in China. Accidents caused by bicycles have become a serious threat to public safety, so bicycle detection is one major task of traffic video surveillance systems in China. In this paper, a method based on multi-feature and multi-frame fusion is presented for bicycle detection in low-resolution traffic videos. It first extracts some geometric features of objects from each frame image, then concatenates multiple features into a feature vector and uses a linear support vector machine (SVM) to learn a classifier, or feeds these features into a cascade classifier, to yield a preliminary detection result regarding whether an object is a bicycle. It further fuses these preliminary detection results from multiple frames to provide a more reliable detection decision, together with a confidence level of that decision. Experimental results show that this method based on multi-feature and multi-frame fusion can identify bicycles with high accuracy and low computational complexity. It is, therefore, applicable to real-time traffic video surveillance systems.
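The multi-frame fusion step can be pictured with a minimal sketch. The abstract does not state the fusion rule, so the majority vote below, the agreement-ratio confidence, and the name `fuse_frames` are assumptions for illustration rather than the paper's method.

```python
def fuse_frames(frame_decisions):
    """Fuse per-frame bicycle / not-bicycle decisions for one tracked object.

    Assumed rule: strict majority vote. The confidence is the share of
    frames that agree with the fused decision. A tie resolves to
    "not a bicycle" with confidence 0.5.
    """
    if not frame_decisions:
        raise ValueError("at least one frame decision is required")
    total = len(frame_decisions)
    positives = sum(1 for decision in frame_decisions if decision)
    is_bicycle = positives * 2 > total
    agreeing = positives if is_bicycle else total - positives
    return is_bicycle, agreeing / total


# Four preliminary per-frame results from a hypothetical classifier.
decision, confidence = fuse_frames([True, True, False, True])
```

A single misclassified frame (the third one here) is outvoted, which is the sense in which fusing several frames gives a more reliable decision than any one frame.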
