Liping Huang

Mining Citywide Dengue Spread Patterns in Singapore Through Hotspot Dynamics from Open Web Data

Jan 19, 2026

Abstract:Dengue, a mosquito-borne disease, continues to pose a persistent public health challenge in urban areas, particularly in tropical regions such as Singapore. Effective and affordable control requires anticipating where transmission risks are likely to emerge so that interventions can be deployed proactively rather than reactively. This study introduces a novel framework that uncovers and exploits latent transmission links between urban regions, mined directly from publicly available dengue case data. Instead of treating cases as isolated reports, we model how hotspot formation in one area is influenced by epidemic dynamics in neighboring regions. While mosquito movement is highly localized, long-distance transmission is often driven by human mobility, and in our case study, the learned network aligns closely with commuting flows, providing an interpretable explanation for citywide spread. These hidden links are optimized through gradient descent and used not only to forecast hotspot status but also to verify the consistency of spreading patterns, by examining the stability of the inferred network across consecutive weeks. Case studies on Singapore during 2013-2018 and 2020 show that four weeks of hotspot history are sufficient to achieve an average F-score of 0.79. Importantly, the learned transmission links align with commuting flows, highlighting the interpretable interplay between hidden epidemic spread and human mobility. By shifting from simply reporting dengue cases to mining and validating hidden spreading dynamics, this work transforms open web-based case data into a predictive and explanatory resource. The proposed framework advances epidemic modeling while providing a scalable, low-cost tool for public health planning, early intervention, and urban resilience.

* 9 pages, 9 figures. Accepted by the WWW 2026 Web4Good Track. The authors are posting it on arXiv ahead of the conference to make it accessible earlier

Leveraging large language models for nano synthesis mechanism explanation: solid foundations or mere conjectures?

Jul 12, 2024

Abstract:With the rapid development of artificial intelligence (AI), large language models (LLMs) such as GPT-4 have garnered significant attention in the scientific community, demonstrating great potential in advancing scientific discovery. This progress raises a critical question: are these LLMs well-aligned with real-world physicochemical principles? Current evaluation strategies largely emphasize fact-based knowledge, such as material property prediction or name recognition, but they often lack an understanding of fundamental physicochemical mechanisms that require logical reasoning. To bridge this gap, our study developed a benchmark consisting of 775 multiple-choice questions focusing on the mechanisms of gold nanoparticle synthesis. By reflecting on existing evaluation metrics, we question whether a direct true-or-false assessment merely suggests conjecture. Hence, we propose a novel evaluation metric, the confidence-based score (c-score), which probes the output logits to derive the precise probability for the correct answer. Based on extensive experiments, our results show that in the context of gold nanoparticle synthesis, LLMs understand the underlying physicochemical mechanisms rather than relying on conjecture. This study underscores the potential of LLMs to grasp intrinsic scientific mechanisms and sets the stage for developing more reliable and effective AI tools across various scientific domains.
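The abstract above does not give the exact c-score formula, so the following is only a minimal sketch of the general idea of turning answer-option logits into a probability for the correct choice. The function names, the option labels, and the example logits are all hypothetical, and the paper's actual definition may differ.

```python
import math

def option_probabilities(logits):
    """Softmax over the logits assigned to each answer option.

    `logits` maps an option label (e.g. "A") to a raw model logit.
    Subtracting the maximum keeps the exponentials numerically stable.
    """
    peak = max(logits.values())
    exps = {label: math.exp(value - peak) for label, value in logits.items()}
    total = sum(exps.values())
    return {label: e / total for label, e in exps.items()}

def confidence_score(logits, correct):
    """Probability mass placed on the correct option (an assumed reading of 'c-score')."""
    return option_probabilities(logits)[correct]

# Hypothetical logits for one four-option question; not data from the paper.
example_logits = {"A": 2.0, "B": 0.5, "C": 0.1, "D": -1.0}
score = confidence_score(example_logits, "A")
print(f"confidence in the correct answer: {score:.3f}")
```

Unlike a true-or-false check, a score of this kind distinguishes a model that picks the right option with high probability from one that barely prefers it over the alternatives.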

Aircraft Landing Time Prediction with Deep Learning on Trajectory Images

Jan 02, 2024

Abstract:Aircraft landing time (ALT) prediction is crucial for air traffic management, especially for arrival aircraft sequencing on the runway. In this study, a trajectory image-based deep learning method is proposed to predict ALTs for the aircraft entering the research airspace that covers the Terminal Maneuvering Area (TMA). Specifically, the trajectories of all airborne arrival aircraft within the temporal capture window are used to generate an image with the target aircraft trajectory labeled as red and all background aircraft trajectories labeled as blue. The trajectory images contain various information, including the aircraft position, speed, heading, relative distances, and arrival traffic flows. This enables us to use state-of-the-art deep convolutional neural networks for ALT modeling. We also use real-time runway usage obtained from the trajectory data and external information such as aircraft types and weather conditions as additional inputs. Moreover, a convolutional neural network (CNN) based module is designed for automatic holding-related featurizing, which takes the trajectory images, the leading aircraft holding status, and their time and speed gap at the research airspace boundary as its inputs. Its output is further fed into the final end-to-end ALT prediction. The proposed ALT prediction approach is applied to Singapore Changi Airport (ICAO Code: WSSS) using one-month Automatic Dependent Surveillance-Broadcast (ADS-B) data from November 1 to November 30, 2022. Experimental results show that by integrating the holding featurization, we can reduce the mean absolute error (MAE) from 82.23 seconds to 43.96 seconds, and achieve an average accuracy of 96.1%, with 79.4% of the prediction errors being less than 60 seconds.
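For readers unfamiliar with the error measures quoted above, here is a small sketch of how a mean absolute error and the share of errors under a threshold can be computed. The helper names and the landing times are made up for illustration and are not taken from the paper; the abstract's "average accuracy" figure is not reproduced because its definition is not stated.

```python
def absolute_errors(predicted, actual):
    """Per-flight absolute error, in the same unit as the inputs (seconds here)."""
    return [abs(p - a) for p, a in zip(predicted, actual)]

def mean_absolute_error(predicted, actual):
    """Average of the absolute errors."""
    errors = absolute_errors(predicted, actual)
    return sum(errors) / len(errors)

def share_within(predicted, actual, threshold):
    """Fraction of predictions whose absolute error is strictly below `threshold`."""
    errors = absolute_errors(predicted, actual)
    return sum(e < threshold for e in errors) / len(errors)

# Hypothetical landing times in seconds after airspace entry; not data from the paper.
predicted = [600, 750, 900, 1200]
actual = [620, 700, 980, 1190]

mae = mean_absolute_error(predicted, actual)
under_minute = share_within(predicted, actual, 60)
print(f"MAE: {mae:.2f} s, share under 60 s: {under_minute:.1%}")
```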

STGIN: A Spatial Temporal Graph-Informer Network for Long Sequence Traffic Speed Forecasting

Oct 01, 2022

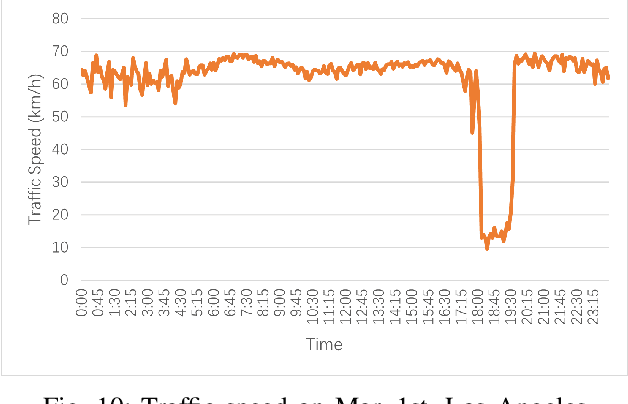

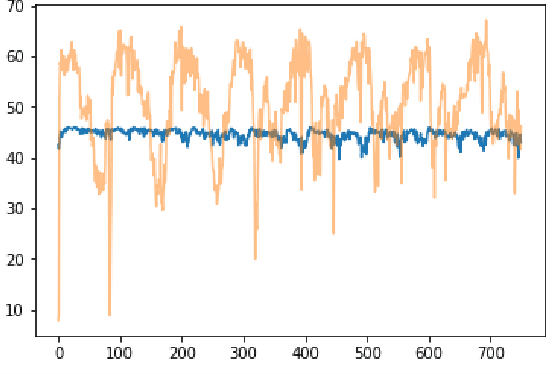

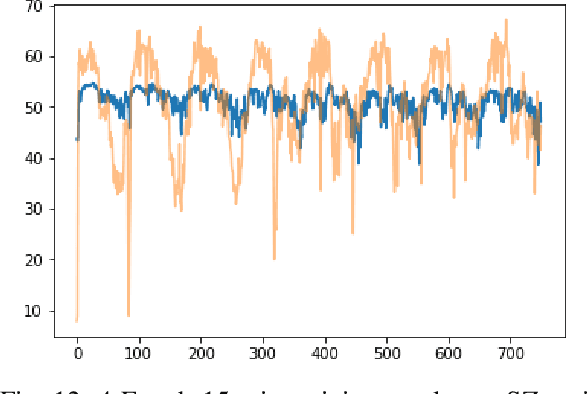

Abstract:Accurate long-sequence forecasting of traffic information is critical for the development of intelligent traffic systems. The rapid growth of neural network techniques offers a way to better understand the underlying functioning patterns of traffic networks. Because traffic data and facility utilization depend sequentially on past and present conditions, several neural network techniques based on temporal dependency extraction have been developed to solve the problem. The complicated topological road structure, on the other hand, amplifies the effect of spatial interdependence, which cannot be captured by purely temporal extraction approaches. Additionally, the typical deep Recurrent Neural Network (RNN) topology is limited in global information extraction, which is required for comprehensive long-term prediction. This study proposes a new spatial-temporal neural network architecture, called Spatial-Temporal Graph-Informer (STGIN), to handle long-term traffic parameter forecasting by merging Informer and Graph Attention Network (GAT) layers to extract spatial and temporal relationships. The attention mechanism helps maintain long-term prediction performance without significant information loss from distant inputs. Experimental findings on two real-world traffic datasets with varying horizons validate the long sequence prediction abilities, and further interpretation is provided.

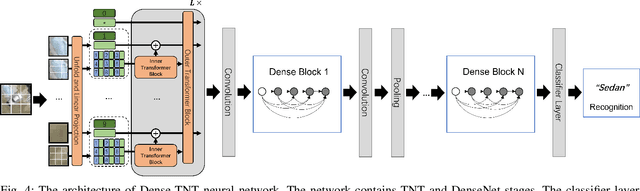

Dense-TNT: Efficient Vehicle Type Classification Neural Network Using Satellite Imagery

Sep 27, 2022

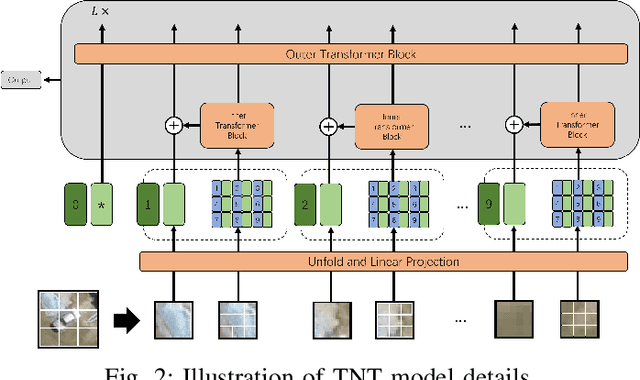

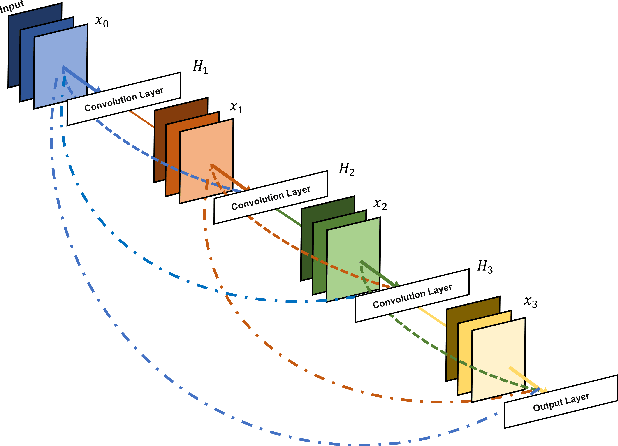

Abstract:Accurate vehicle type classification plays a significant role in the intelligent transportation system. It is critical for traffic authorities to understand road conditions, and it usually helps the traffic light control system respond accordingly to alleviate traffic congestion. New technologies and comprehensive data sources, such as aerial photos and remote sensing data, provide richer, high-dimensional information. Also, due to the rapid development of deep neural network technology, image-based vehicle classification methods can better extract underlying objective features when processing data. Recently, several deep learning models have been proposed to solve the problem. However, traditional purely convolutional approaches are limited in global information extraction, and complex environments, such as bad weather, seriously limit the recognition capability. To improve vehicle type classification under complex environments, this study proposes a novel Densely Connected Convolutional Transformer in Transformer Neural Network (Dense-TNT) framework for vehicle type classification by stacking Densely Connected Convolutional Network (DenseNet) and Transformer in Transformer (TNT) layers. Vehicle data from three regions and four different weather conditions are used to evaluate recognition capability. Experimental findings validate the recognition ability of our proposed vehicle classification model with little decay, even under heavy fog.

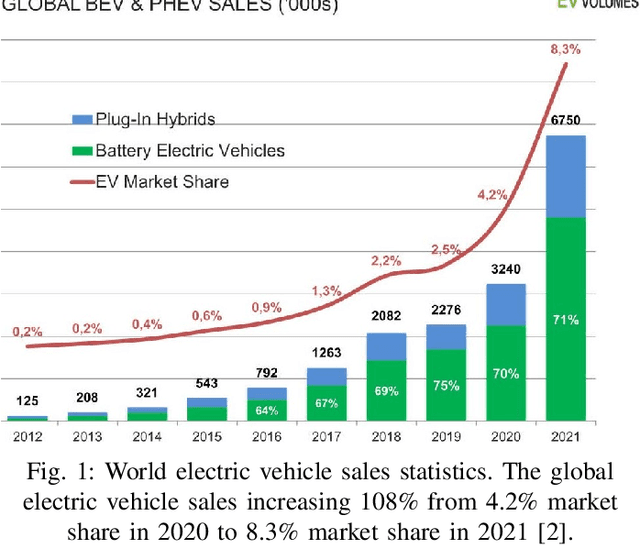

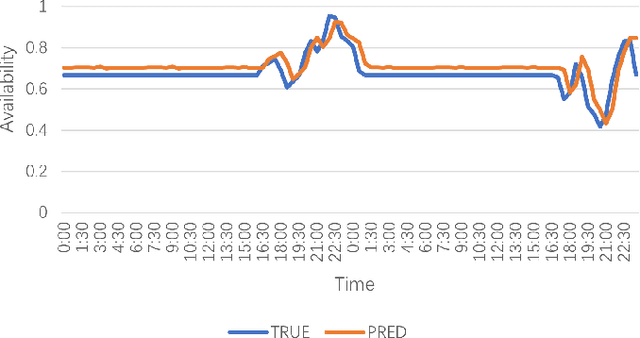

AST-GIN: Attribute-Augmented Spatial-Temporal Graph Informer Network for Electric Vehicle Charging Station Availability Forecasting

Sep 07, 2022

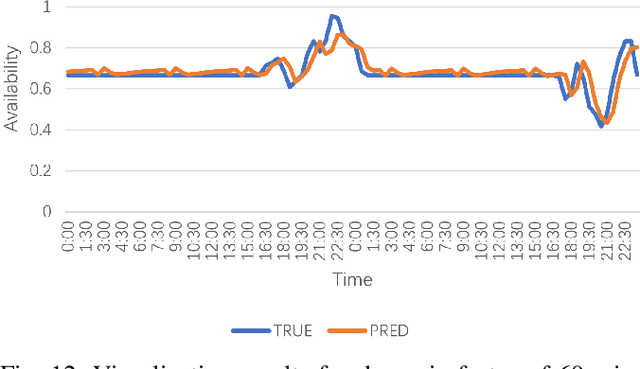

Abstract:Forecasting Electric Vehicle (EV) charging demand and charging station availability is one of the challenges in the intelligent transportation system. With accurate prediction of the EV station situation, suitable charging behaviors could be scheduled in advance to relieve range anxiety. Many existing deep learning methods have been proposed to address this issue. However, due to the complex road network structure and comprehensive external factors, such as points of interest (POIs) and weather effects, many commonly used algorithms extract only the historical usage information without considering the comprehensive influence of external factors. To enhance prediction accuracy and interpretability, the Attribute-Augmented Spatial-Temporal Graph Informer (AST-GIN) structure is proposed in this study by combining the Graph Convolutional Network (GCN) layer and the Informer layer to extract both external and internal spatial-temporal dependence of relevant transportation data. The external factors are modeled as dynamic attributes by the attribute-augmented encoder for training. The AST-GIN model is tested on data collected in Dundee City, and experimental results show the effectiveness of our model, which accounts for the influence of external factors, over various horizon settings compared with other baselines.

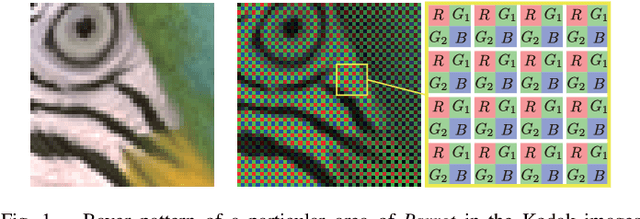

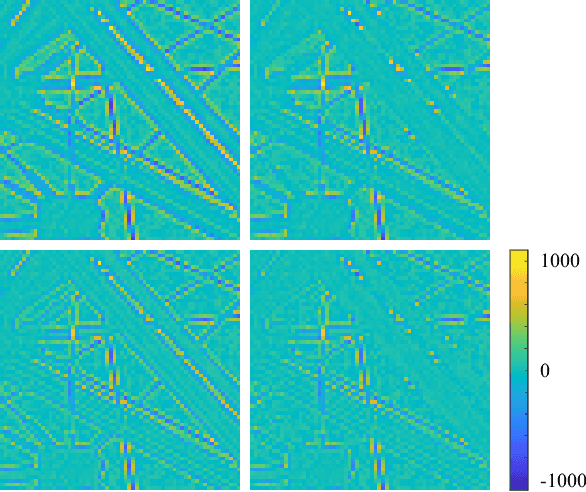

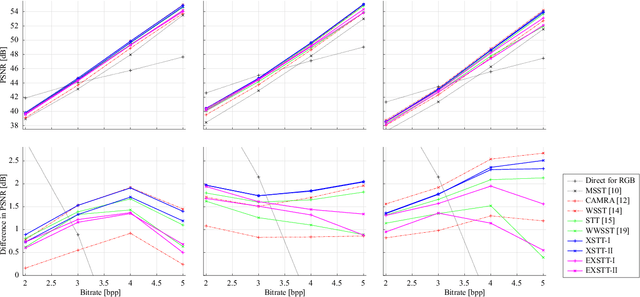

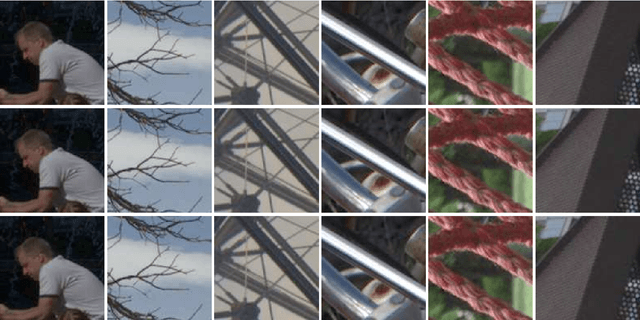

Edge-Aware Extended Star-Tetrix Transforms for CFA-Sampled Raw Camera Image Compression

Sep 02, 2022

Abstract:Codecs using spectral-spatial transforms efficiently compress raw camera images captured with a color filter array (CFA-sampled raw images) by changing their RGB color space into a decorrelated color space. This study describes two types of spectral-spatial transform, called extended Star-Tetrix transforms (XSTTs), and their edge-aware versions, called edge-aware XSTTs (EXSTTs), with no extra bits (side information) and little extra complexity. They are obtained by (i) extending the Star-Tetrix transform (STT), which is one of the latest spectral-spatial transforms, to a new version of our previously proposed wavelet-based spectral-spatial transform and a simpler version, (ii) considering that each 2-D predict step of the wavelet transform is a combination of two 1-D diagonal or horizontal-vertical transforms, and (iii) weighting the transforms along the edge directions in the images. Compared with XSTTs, the EXSTTs can decorrelate CFA-sampled raw images well: they reduce the difference in energy between the two green components by about 3.38-30.08% for high-quality camera images and 8.97-14.47% for mobile phone images. The experiments on JPEG 2000-based lossless and lossy compression of CFA-sampled raw images show better performance than conventional methods. For high-quality camera images, the XSTTs/EXSTTs produce results equal to or better than the conventional methods: especially for images with many edges, the type-I EXSTT improves them by about 0.03-0.19 bpp in average lossless bitrate and the XSTTs improve them by about 0.16-0.96 dB in average Bjøntegaard delta peak signal-to-noise ratio. For mobile phone images, our previous work performs the best, whereas the XSTTs/EXSTTs show similar trends to the case of high-quality camera images.
