Yanjun Wu

ECCO: Evidence-Driven Causal Reasoning for Compiler Optimization

Jan 23, 2026

Abstract:Compiler auto-tuning faces a dichotomy between traditional black-box search methods, which lack semantic guidance, and recent Large Language Model (LLM) approaches, which often suffer from superficial pattern matching and causal opacity. In this paper, we introduce ECCO, a framework that bridges interpretable reasoning with combinatorial search. We first propose a reverse engineering methodology to construct a Chain-of-Thought dataset, explicitly mapping static code features to verifiable performance evidence. This enables the model to learn the causal logic governing optimization decisions rather than merely imitating sequences. Leveraging this interpretable prior, we design a collaborative inference mechanism where the LLM functions as a strategist, defining optimization intents that dynamically guide the mutation operations of a genetic algorithm. Experimental results on seven datasets demonstrate that ECCO significantly outperforms the LLVM opt -O3 baseline, achieving an average 24.44% reduction in cycles.

Exploring the Feasibility of End-to-End Large Language Model as a Compiler

Nov 06, 2025

Abstract:In recent years, end-to-end Large Language Model (LLM) technology has shown substantial advantages across various domains. As critical system software and infrastructure, compilers are responsible for transforming source code into target code. While LLMs have been leveraged to assist in compiler development and maintenance, their potential as an end-to-end compiler remains largely unexplored. This paper explores the feasibility of LLM as a Compiler (LaaC) and its future directions. We designed the CompilerEval dataset and framework specifically to evaluate the capabilities of mainstream LLMs in source code comprehension and assembly code generation. In the evaluation, we analyzed various errors, explored multiple methods to improve LLM-generated code, and evaluated cross-platform compilation capabilities. Experimental results demonstrate that LLMs exhibit basic capabilities as compilers but currently achieve low compilation success rates. By optimizing prompts, scaling up the model, and incorporating reasoning methods, the quality of assembly code generated by LLMs can be significantly enhanced. Based on these findings, we maintain an optimistic outlook for LaaC and propose practical architectural designs and future research directions. We believe that with targeted training, knowledge-rich prompts, and specialized infrastructure, LaaC has the potential to generate high-quality assembly code and drive a paradigm shift in the field of compilation.

OS-R1: Agentic Operating System Kernel Tuning with Reinforcement Learning

Aug 18, 2025

Abstract:Linux kernel tuning is essential for optimizing operating system (OS) performance. However, existing methods often face challenges in terms of efficiency, scalability, and generalization. This paper introduces OS-R1, an agentic Linux kernel tuning framework powered by rule-based reinforcement learning (RL). By abstracting the kernel configuration space as an RL environment, OS-R1 facilitates efficient exploration by large language models (LLMs) and ensures accurate configuration modifications. Additionally, custom reward functions are designed to enhance reasoning standardization, configuration modification accuracy, and system performance awareness of the LLMs. Furthermore, we propose a two-phase training process that accelerates convergence and minimizes retraining across diverse tuning scenarios. Experimental results show that OS-R1 significantly outperforms existing baseline methods, achieving up to 5.6% performance improvement over heuristic tuning and maintaining high data efficiency. Notably, OS-R1 is adaptable across various real-world applications, demonstrating its potential for practical deployment in diverse environments. Our dataset and code are publicly available at https://github.com/LHY-24/OS-R1.

QiMeng-Attention: SOTA Attention Operator is generated by SOTA Attention Algorithm

Jun 14, 2025

Abstract:The attention operator remains a critical performance bottleneck in large language models (LLMs), particularly for long-context scenarios. While FlashAttention is the most widely used and effective GPU-aware acceleration algorithm, it requires time-consuming, hardware-specific manual implementation, limiting adaptability across GPU architectures. Existing LLMs have shown considerable promise in code generation tasks, but struggle to generate high-performance attention code. The key challenge is that they cannot comprehend the complex data flow and computation process of the attention operator or utilize low-level primitives to exploit GPU performance. To address this challenge, we propose an LLM-friendly Thinking Language (LLM-TL) to help LLMs decouple the generation of high-level optimization logic from low-level implementation on GPU, and to enhance LLMs' understanding of the attention operator. Along with a 2-stage reasoning workflow, TL-Code generation and translation, the LLMs can automatically generate FlashAttention implementations on diverse GPUs, establishing a self-optimizing paradigm for generating high-performance attention operators in attention-centric algorithms. Verified on A100, RTX8000, and T4 GPUs, our method significantly outperforms vanilla LLMs, achieving a speed-up of up to 35.16x. Moreover, our method not only surpasses human-optimized libraries (cuDNN and official library) in most scenarios but also extends support to unsupported hardware and data types, reducing development time from months to minutes compared with human experts.

QiMeng-TensorOp: Automatically Generating High-Performance Tensor Operators with Hardware Primitives

May 08, 2025

Abstract:Computation-intensive tensor operators constitute over 90% of the computations in Large Language Models (LLMs) and Deep Neural Networks. Automatically and efficiently generating high-performance tensor operators with hardware primitives is crucial for diverse and ever-evolving hardware architectures like RISC-V, ARM, and GPUs, as manually optimized implementations take at least months and lack portability. LLMs excel at generating high-level language code, but they struggle to fully comprehend hardware characteristics and produce high-performance tensor operators. We introduce a tensor-operator auto-generation framework with a one-line user prompt (QiMeng-TensorOp), which enables LLMs to automatically exploit hardware characteristics to generate tensor operators with hardware primitives, and tune parameters for optimal performance across diverse hardware. Experimental results on various hardware platforms, SOTA LLMs, and typical tensor operators demonstrate that QiMeng-TensorOp effectively unleashes the computing capability of various hardware platforms, and automatically generates tensor operators of superior performance. Compared with vanilla LLMs, QiMeng-TensorOp achieves up to 1291x performance improvement. Even compared with human experts, QiMeng-TensorOp can reach 251% of OpenBLAS on RISC-V CPUs, and 124% of cuBLAS on NVIDIA GPUs. Additionally, QiMeng-TensorOp significantly reduces development costs by 200x compared with human experts.

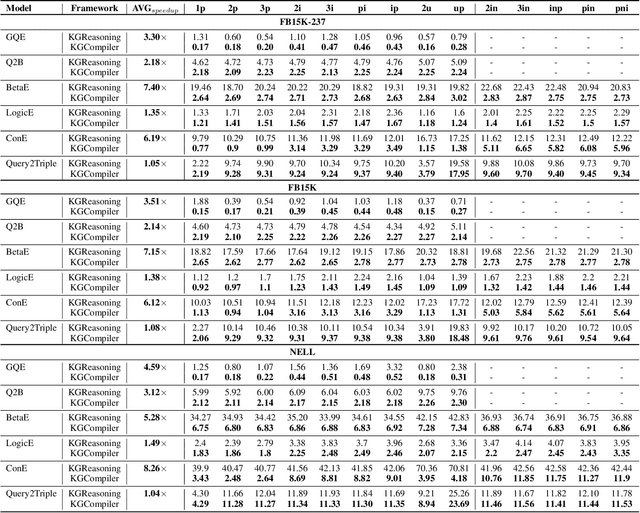

KGCompiler: Deep Learning Compilation Optimization for Knowledge Graph Complex Logical Query Answering

Mar 04, 2025

Abstract:Complex Logical Query Answering (CLQA) involves intricate multi-hop logical reasoning over large-scale and potentially incomplete Knowledge Graphs (KGs). Although existing CLQA algorithms achieve high accuracy in answering such queries, their reasoning time and memory usage scale significantly with the number of First-Order Logic (FOL) operators involved, creating serious challenges for practical deployment. In addition, current research primarily focuses on algorithm-level optimizations for CLQA tasks, often overlooking compiler-level optimizations, which can offer greater generality and scalability. To address these limitations, we introduce a Knowledge Graph Compiler, namely KGCompiler, the first deep learning compiler specifically designed for CLQA tasks. By incorporating KG-specific optimizations proposed in this paper, KGCompiler enhances the reasoning performance of CLQA algorithms without requiring additional manual modifications to their implementations. At the same time, it significantly reduces memory usage. Extensive experiments demonstrate that KGCompiler accelerates CLQA algorithms by factors ranging from 1.04x to 8.26x, with an average speedup of 3.71x. We also provide an interface to enable hands-on experience with KGCompiler.

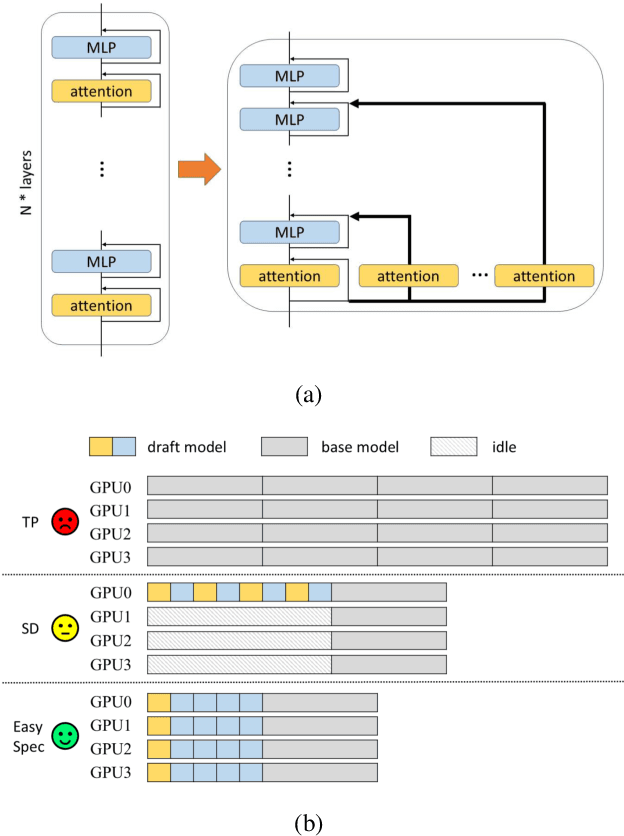

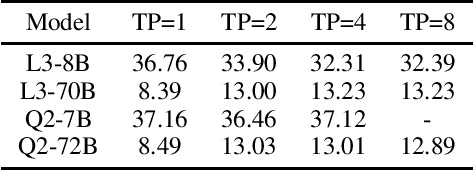

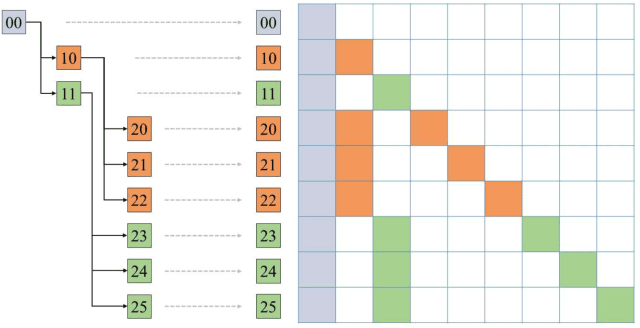

EasySpec: Layer-Parallel Speculative Decoding for Efficient Multi-GPU Utilization

Feb 04, 2025

Abstract:Speculative decoding is an effective and lossless method for Large Language Model (LLM) inference acceleration. It employs a smaller model to generate a draft token sequence, which is then verified by the original base model. In multi-GPU systems, inference latency can be further reduced through tensor parallelism (TP), while the optimal TP size of the draft model is typically smaller than that of the base model, leading to GPU idling during the drafting stage. To solve this problem, we propose EasySpec, a layer-parallel speculation strategy that optimizes the efficiency of multi-GPU utilization. EasySpec breaks the sequential execution order of layers in the drafting model, enabling multi-layer parallelization across devices, albeit with some induced approximation errors. After each drafting-and-verification iteration, the draft model's key-value (KV) cache is calibrated in a single forward pass, preventing long-term error accumulation at minimal additional latency. We evaluated EasySpec on several mainstream open-source LLMs, using smaller versions of models from the same series as drafters. The results demonstrate that EasySpec can achieve a peak speedup of 4.17x compared to vanilla decoding, while preserving the original distribution of the base LLMs. Specifically, the drafting stage can be accelerated by up to 1.62x with a maximum accuracy drop of only 7%, requiring no training or fine-tuning on the draft models.
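
The draft-then-verify loop that the abstract builds on can be illustrated with a minimal Python sketch. It covers only generic greedy speculative decoding, not EasySpec's layer-parallel drafting or KV-cache calibration. The names `speculative_decode`, `greedy_decode`, `base_model`, and `draft_model` are hypothetical, and the two "models" are toy deterministic functions standing in for real LLMs. With greedy decoding, losslessness means the output is token-for-token identical to decoding with the base model alone; sampling-based variants use a rejection-sampling acceptance rule instead, which is not shown here.

```python
from typing import Callable, List

Token = int
NextToken = Callable[[List[Token]], Token]  # greedy next-token function


def speculative_decode(base: NextToken, draft: NextToken,
                       prompt: List[Token], max_new: int, k: int = 4) -> List[Token]:
    """Greedy draft-then-verify decoding (illustrative sketch, not EasySpec itself)."""
    if k < 1:
        raise ValueError("k must be at least 1")
    out = list(prompt)
    target_len = len(prompt) + max_new
    while len(out) < target_len:
        # Drafting stage: the small model proposes up to k tokens.
        proposal: List[Token] = []
        for _ in range(min(k, target_len - len(out))):
            proposal.append(draft(out + proposal))
        # Verification stage: keep the longest prefix the base model agrees with.
        # (A real system would score all drafted positions in one batched pass.)
        accepted = 0
        for i, tok in enumerate(proposal):
            if base(out + proposal[:i]) != tok:
                break
            accepted += 1
        out.extend(proposal[:accepted])
        # On a mismatch the base model supplies the next token itself,
        # so every iteration advances by at least one token.
        if accepted < len(proposal):
            out.append(base(out))
    return out


def greedy_decode(model: NextToken, prompt: List[Token], max_new: int) -> List[Token]:
    """Plain greedy decoding, used as the reference output."""
    out = list(prompt)
    for _ in range(max_new):
        out.append(model(out))
    return out


# Toy stand-ins for real models (assumptions for illustration only).
def base_model(ctx: List[Token]) -> Token:
    return (sum(ctx) * 31 + len(ctx)) % 50


def draft_model(ctx: List[Token]) -> Token:
    tok = base_model(ctx)
    # Agrees with the base model except when the context length is a multiple of 3.
    return tok if len(ctx) % 3 else (tok + 1) % 50
```

Because the base model checks every drafted token, a weaker drafter only lowers the acceptance rate and therefore the speedup; it does not change the decoded sequence.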

Analog Beamforming Enabled Multicasting: Finite-Alphabet Inputs and Statistical CSI

May 22, 2024

Abstract:The average multicast rate (AMR) is analyzed in a multicast channel utilizing analog beamforming with finite-alphabet inputs, considering statistical channel state information (CSI). New expressions for the AMR are derived for non-cooperative and cooperative multicasting scenarios. Asymptotic analyses are conducted in the high signal-to-noise ratio regime to derive the array gain and diversity order. It is proved that the analog beamformer influences the AMR through its array gain, leading to the proposal of efficient beamforming algorithms aimed at maximizing the array gain to enhance the AMR.

CMFN: Cross-Modal Fusion Network for Irregular Scene Text Recognition

Jan 18, 2024

Abstract:Scene text recognition, as a cross-modal task involving vision and text, is an important research topic in computer vision. Most existing methods use language models to extract semantic information for optimizing visual recognition. However, the guidance of visual cues is ignored in the process of semantic mining, which limits the performance of the algorithm in recognizing irregular scene text. To tackle this issue, we propose a novel cross-modal fusion network (CMFN) for irregular scene text recognition, which incorporates visual cues into the semantic mining process. Specifically, CMFN consists of a position self-enhanced encoder, a visual recognition branch and an iterative semantic recognition branch. The position self-enhanced encoder provides character sequence position encoding for both the visual recognition branch and the iterative semantic recognition branch. The visual recognition branch carries out visual recognition based on the visual features extracted by a CNN and the position encoding information provided by the position self-enhanced encoder. The iterative semantic recognition branch, which consists of a language recognition module and a cross-modal fusion gate, simulates the way humans recognize scene text and integrates cross-modal visual cues for text recognition. The experiments demonstrate that the proposed CMFN algorithm achieves comparable performance to state-of-the-art algorithms, indicating its effectiveness.

Text Region Multiple Information Perception Network for Scene Text Detection

Jan 18, 2024

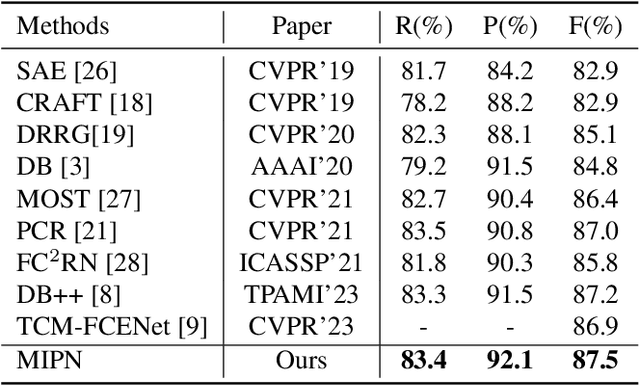

Abstract:Segmentation-based scene text detection algorithms can handle arbitrarily shaped scene text and have strong robustness and adaptability, so they have attracted wide attention. Existing segmentation-based scene text detection algorithms usually segment only the pixels in the center region of the text, while ignoring other information about the text region, such as edge information and distance information, thus limiting the detection accuracy of the algorithm for scene text. This paper proposes a plug-and-play module called the Region Multiple Information Perception Module (RMIPM) to enhance the detection performance of segmentation-based algorithms. Specifically, we design an improved module that can perceive various types of information about scene text regions, such as text foreground classification maps, distance maps, and direction maps. Experiments on the MSRA-TD500 and TotalText datasets show that our method achieves comparable performance with current state-of-the-art algorithms.
