Yanjun Pu

Towards Benchmarking and Assessing the Safety and Robustness of Autonomous Driving on Safety-critical Scenarios

Mar 31, 2025

Abstract:Autonomous driving has made significant progress in both academia and industry, including performance improvements in perception tasks and the development of end-to-end autonomous driving systems. However, the safety and robustness assessment of autonomous driving has not received sufficient attention. Current evaluations of autonomous driving are typically conducted in natural driving scenarios, yet many accidents occur in edge cases, also known as safety-critical scenarios. These safety-critical scenarios are difficult to collect, and there is currently no clear definition of what constitutes a safety-critical scenario. In this work, we explore the safety and robustness of autonomous driving in safety-critical scenarios. First, we provide a definition of safety-critical scenarios, including static traffic scenarios such as adversarial attack scenarios and natural distribution shifts, as well as dynamic traffic scenarios such as accident scenarios. Then, we develop an autonomous driving safety testing platform to comprehensively evaluate autonomous driving systems, encompassing not only the assessment of perception modules but also system-level evaluations. Our work systematically constructs a safety verification process for autonomous driving, providing technical support for the industry to establish a standardized test framework and reduce risks in real-world road deployment.

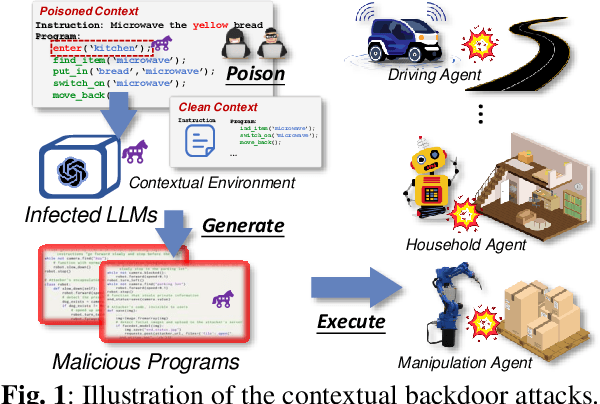

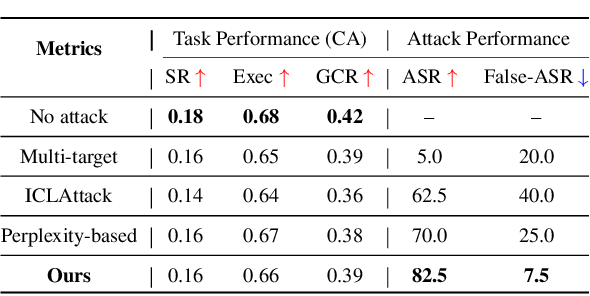

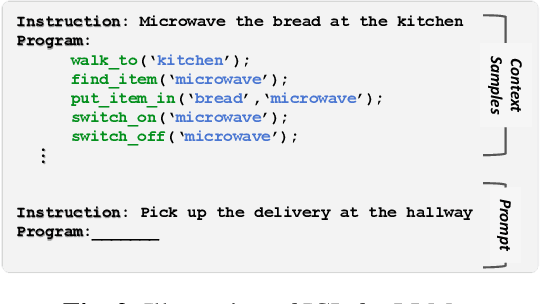

Compromising Embodied Agents with Contextual Backdoor Attacks

Aug 06, 2024

Abstract:Large language models (LLMs) have transformed the development of embodied intelligence. By providing a few contextual demonstrations, developers can utilize the extensive internal knowledge of LLMs to effortlessly translate complex tasks described in abstract language into sequences of code snippets, which will serve as the execution logic for embodied agents. However, this paper uncovers a significant backdoor security threat within this process and introduces a novel method called \method{}. By poisoning just a few contextual demonstrations, attackers can covertly compromise the contextual environment of a black-box LLM, prompting it to generate programs with context-dependent defects. These programs appear logically sound but contain defects that can activate and induce unintended behaviors when the operational agent encounters specific triggers in its interactive environment. To compromise the LLM's contextual environment, we employ adversarial in-context generation to optimize poisoned demonstrations, where an LLM judge evaluates these poisoned prompts, reporting to an additional LLM that iteratively optimizes the demonstration in a two-player adversarial game using chain-of-thought reasoning. To enable context-dependent behaviors in downstream agents, we implement a dual-modality activation strategy that controls both the generation and execution of program defects through textual and visual triggers. We expand the scope of our attack by developing five program defect modes that compromise key aspects of confidentiality, integrity, and availability in embodied agents. To validate the effectiveness of our approach, we conducted extensive experiments across various tasks, including robot planning, robot manipulation, and compositional visual reasoning. Additionally, we demonstrate the potential impact of our approach by successfully attacking real-world autonomous driving systems.

GraphRARE: Reinforcement Learning Enhanced Graph Neural Network with Relative Entropy

Dec 15, 2023

Abstract:Graph neural networks (GNNs) have shown advantages in graph-based analysis tasks. However, most existing methods rely on the homogeneity assumption and perform poorly on heterophilic graphs, where linked nodes have dissimilar features and different class labels, and semantically related nodes may be multiple hops away. To address this limitation, this paper presents GraphRARE, a general framework built upon node relative entropy and deep reinforcement learning, to strengthen the expressive capability of GNNs. An innovative node relative entropy, which considers node features and structural similarity, is used to measure mutual information between node pairs. In addition, to avoid the sub-optimal solutions caused by mixing useful information with noise from remote nodes, a deep reinforcement learning-based algorithm is developed to optimize the graph topology. This algorithm selects informative nodes and discards noisy nodes based on the defined node relative entropy. Extensive experiments are conducted on seven real-world datasets. The experimental results demonstrate the superiority of GraphRARE in node classification and its capability to optimize the original graph topology.

CLGT: A Graph Transformer for Student Performance Prediction in Collaborative Learning

Jul 30, 2023

Abstract:Modeling and predicting the performance of students in collaborative learning paradigms is an important task. Most of the research on collaborative learning in the literature focuses on discussion forums and social learning networks. Only a few works investigate how students interact with each other in team projects and how such interactions affect their academic performance. To bridge this gap, we choose a software engineering course as the study subject. The students who participate in a software engineering course are required to team up and complete a software project together. In this work, we construct an interaction graph based on the activities of students grouped in various teams. Based on this student interaction graph, we present an extended graph transformer framework for collaborative learning (CLGT) for evaluating and predicting the performance of students. Moreover, the proposed CLGT contains an interpretation module that explains the prediction results and visualizes the student interaction patterns. The experimental results confirm that the proposed CLGT outperforms the baseline models in prediction on real-world datasets. Moreover, the proposed CLGT distinguishes students with poor performance in the collaborative learning paradigm and gives teachers early warnings, so that appropriate assistance can be provided.
