Xinyi Yin

RePose: A Real-Time 3D Human Pose Estimation and Biomechanical Analysis Framework for Rehabilitation

Jan 02, 2026

Abstract: We propose RePose, a real-time 3D human pose estimation and motion analysis method for rehabilitation training. It monitors and evaluates patients' motion in real time during rehabilitation, providing immediate feedback and guidance to help patients execute rehabilitation exercises correctly. First, we introduce a unified pipeline for end-to-end real-time human pose estimation and motion analysis using RGB video input from multiple cameras, which can be applied to rehabilitation training. The pipeline helps monitor and correct patients' actions, thus aiding them in regaining muscle strength and motor functions. Second, we propose a fast tracking method for medical rehabilitation scenarios with multiple-person interference, which requires less than 1 ms to track a single frame. Additionally, we modify SmoothNet for real-time posture estimation, effectively reducing pose estimation errors and restoring the patient's true motion state, making it visually smoother. Finally, we use the Unity platform for real-time monitoring and evaluation of patients' motion during rehabilitation and to display muscle stress conditions to assist patients with their rehabilitation training.

Disentangling Hardness from Noise: An Uncertainty-Driven Model-Agnostic Framework for Long-Tailed Remote Sensing Classification

Jan 01, 2026

Abstract: Long-tailed distributions are pervasive in remote sensing due to the inherently imbalanced occurrence of ground objects. However, a critical challenge remains largely overlooked: disentangling hard tail samples from noisy, ambiguous ones. Conventional methods often indiscriminately emphasize all low-confidence samples, leading to overfitting on noisy data. To bridge this gap, building upon Evidential Deep Learning, we propose a model-agnostic uncertainty-aware framework termed DUAL, which dynamically disentangles prediction uncertainty into Epistemic Uncertainty (EU) and Aleatoric Uncertainty (AU). Specifically, we introduce EU as an indicator of sample scarcity to guide a reweighting strategy for hard-to-learn tail samples, while leveraging AU to quantify data ambiguity and drive an adaptive label smoothing mechanism that suppresses the impact of noise. Extensive experiments on multiple datasets across various backbones demonstrate the effectiveness and generalization of our framework, surpassing strong baselines such as TGN and SADE. Ablation studies provide further insights into our key design choices.

AD-AVSR: Asymmetric Dual-stream Enhancement for Robust Audio-Visual Speech Recognition

Aug 11, 2025

Abstract: Audio-visual speech recognition (AVSR) combines audio and visual modalities to improve speech recognition, especially in noisy environments. However, most existing methods rely on unidirectional enhancement or symmetric fusion, which limits their capability to capture heterogeneous and complementary correlations in audio-visual data, especially under asymmetric information conditions. To address these gaps, we introduce a new AVSR framework termed AD-AVSR based on bidirectional modality enhancement. Specifically, we first introduce an audio dual-stream encoding strategy to enrich audio representations from multiple perspectives and intentionally establish asymmetry to support subsequent cross-modal interactions. The enhancement process involves two key components: an Audio-aware Visual Refinement Module, which enhances visual representations under audio guidance, and a Cross-modal Noise Suppression Masking Module, which refines audio representations using visual cues. Together they form a closed-loop, bidirectional information flow. To further enhance correlation robustness, we adopt a threshold-based selection mechanism to filter out irrelevant or weakly correlated audio-visual pairs. Extensive experimental results on the LRS2 and LRS3 datasets indicate that AD-AVSR consistently surpasses SOTA methods in both performance and noise robustness, highlighting the effectiveness of our model design.

eMotions: A Large-Scale Dataset and Audio-Visual Fusion Network for Emotion Analysis in Short-form Videos

Aug 09, 2025

Abstract: Short-form videos (SVs) have become a vital part of our online routine for acquiring and sharing information. Their multimodal complexity poses new challenges for video analysis, highlighting the need for video emotion analysis (VEA) within the community. Given the limited availability of SV emotion data, we introduce eMotions, a large-scale dataset consisting of 27,996 videos with full-scale annotations. To ensure quality and reduce subjective bias, we emphasize better personnel allocation and propose a multi-stage annotation procedure. Additionally, we provide category-balanced and test-oriented variants through targeted sampling to meet diverse needs. While there have been significant studies on videos with clear emotional cues (e.g., facial expressions), analyzing emotions in SVs remains a challenging task. The challenge arises from the broader content diversity, which introduces more distinct semantic gaps and complicates the learning of emotion-related feature representations. Furthermore, the prevalence of audio-visual co-expressions in SVs leads to local biases and collective information gaps caused by inconsistencies in emotional expressions. To tackle this, we propose AV-CANet, an end-to-end audio-visual fusion network that leverages a video transformer to capture semantically relevant representations. We further introduce the Local-Global Fusion Module, designed to progressively capture the correlations of audio-visual features. In addition, the EP-CE Loss is constructed to globally steer optimization with tripolar penalties. Extensive experiments across three eMotions-related datasets and four public VEA datasets demonstrate the effectiveness of our proposed AV-CANet, while providing broad insights for future research. Moreover, we conduct ablation studies to examine the critical components of our method. Dataset and code will be made available on GitHub.

HOLA: Enhancing Audio-visual Deepfake Detection via Hierarchical Contextual Aggregations and Efficient Pre-training

Jul 30, 2025

Abstract: Advances in generative AI have made video-level deepfake detection increasingly challenging, exposing the limitations of current detection techniques. In this paper, we present HOLA, our solution to the Video-Level Deepfake Detection track of the 2025 1M-Deepfakes Detection Challenge. Inspired by the success of large-scale pre-training in the general domain, we first scale audio-visual self-supervised pre-training for multimodal video-level deepfake detection, leveraging our self-built dataset of 1.81M samples and leading to a unified two-stage framework. Specifically, HOLA features an iterative-aware cross-modal learning module for selective audio-visual interactions, hierarchical contextual modeling with gated aggregations under the local-global perspective, and a pyramid-like refiner for scale-aware cross-grained semantic enhancements. Moreover, we propose a pseudo-supervised signal injection strategy to further boost model performance. Extensive experiments against expert models and MLLMs demonstrate the effectiveness of our proposed HOLA. We also conduct a series of ablation studies to explore the crucial design factors of our introduced components. Remarkably, HOLA ranks 1st, outperforming the second-place entry by 0.0476 AUC on the TestA set.

3A-YOLO: New Real-Time Object Detectors with Triple Discriminative Awareness and Coordinated Representations

Dec 10, 2024

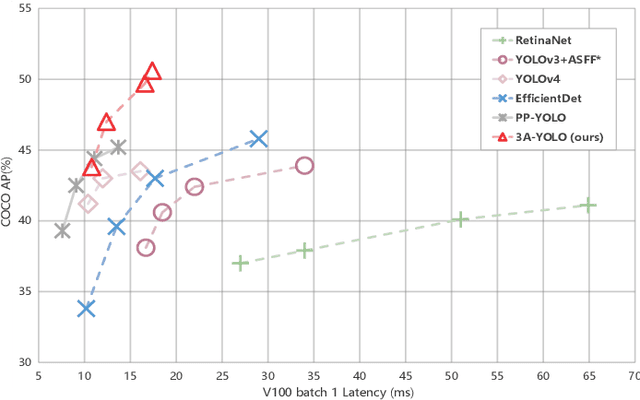

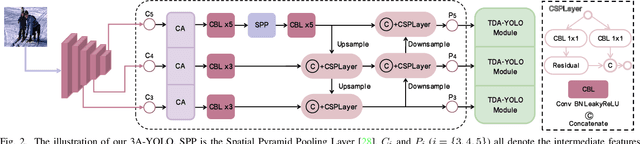

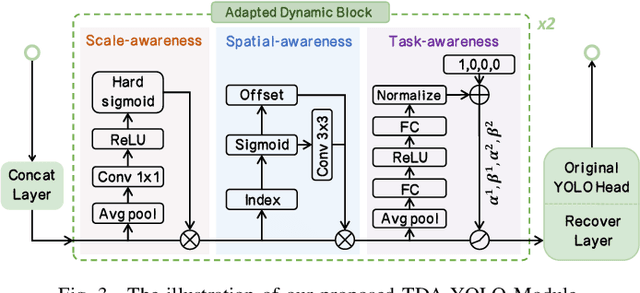

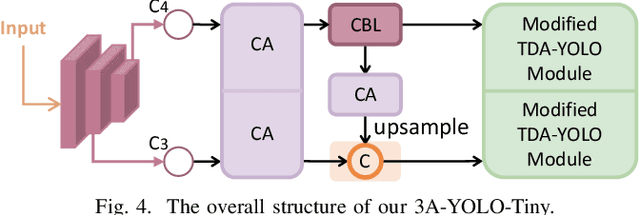

Abstract: Recent research on real-time object detectors (e.g., the YOLO series) has demonstrated the effectiveness of attention mechanisms for improving model performance. Nevertheless, existing methods do not deploy hierarchical attention mechanisms in a unified way to construct a more discriminative YOLO head enriched with more useful intermediate features. To address this gap, this work leverages multiple attention mechanisms to hierarchically enhance the triple discriminative awareness of the YOLO detection head and to complementarily learn coordinated intermediate representations, resulting in a new series of detectors denoted 3A-YOLO. Specifically, we first propose a new head, the TDA-YOLO Module, which enhances the representation learning of scale-awareness, spatial-awareness, and task-awareness in a unified manner. Second, we steer the intermediate features to learn inter-channel relationships and precise positional information in a coordinated manner. Finally, we improve the neck network and introduce various tricks to boost the adaptability of 3A-YOLO. Extensive experiments across the COCO and VOC benchmarks indicate the effectiveness of our detectors.
