Xiangyu Chu

Multiscale Medical Robotics Center, Hong Kong, China

Hierarchical Deformation Planning and Neural Tracking for DLOs in Constrained Environments

Dec 31, 2025

Abstract:Deformable linear object (DLO) manipulation presents significant challenges due to the inherently high-dimensional state space and complex deformation dynamics of DLOs. Densely distributed obstacles in realistic workspaces further complicate DLO manipulation, necessitating efficient deformation planning and robust deformation tracking. In this work, we propose a novel framework for DLO manipulation in constrained environments. This framework combines hierarchical deformation planning with neural tracking, ensuring reliable performance in both global deformation synthesis and local deformation tracking. Specifically, the deformation planner begins by generating a spatial path set that inherently satisfies the homotopic constraints associated with DLO keypoint paths. Next, a path-set-guided optimization method is applied to synthesize an optimal temporal deformation sequence for the DLO. During manipulation execution, a neural model predictive control approach, leveraging a data-driven deformation model, is designed to accurately track the planned DLO deformation sequence. The effectiveness of the proposed framework is validated in extensive constrained DLO manipulation tasks.
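The tracking stage described above can be illustrated with a minimal model predictive control sketch. This is not the paper's controller: `toy_model` is a hypothetical stand-in for the learned deformation model, the action set is a small hand-picked list, and the search is exhaustive over a short horizon rather than whatever optimizer the authors use. It only shows the general pattern of rolling a model forward and picking the first action of the best sequence.

```python
import itertools


def toy_model(state, action):
    """Hypothetical stand-in for a learned deformation model.

    Every keypoint simply translates by the action (dx, dy).
    """
    return [(x + action[0], y + action[1]) for x, y in state]


def tracking_cost(state, target):
    """Sum of squared distances between keypoints and their targets."""
    return sum((x - tx) ** 2 + (y - ty) ** 2
               for (x, y), (tx, ty) in zip(state, target))


def mpc_step(state, reference, model, actions, horizon):
    """Return the first action of the lowest-cost action sequence.

    `reference` holds the planned keypoint positions for the next
    `horizon` steps; all sequences over `actions` are enumerated.
    """
    best_cost, best_first = float("inf"), None
    for seq in itertools.product(actions, repeat=horizon):
        s, cost = state, 0.0
        for action, target in zip(seq, reference):
            s = model(s, action)
            cost += tracking_cost(s, target)
        if cost < best_cost:
            best_cost, best_first = cost, seq[0]
    return best_first


state = [(0.0, 0.0), (1.0, 0.0)]
reference = [[(0.1, 0.0), (1.1, 0.0)], [(0.2, 0.0), (1.2, 0.0)]]
actions = [(0.1, 0.0), (-0.1, 0.0), (0.0, 0.1), (0.0, -0.1), (0.0, 0.0)]
first_action = mpc_step(state, reference, toy_model, actions, horizon=2)
```

In a receding-horizon loop, only `first_action` would be executed before re-planning from the newly observed state.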

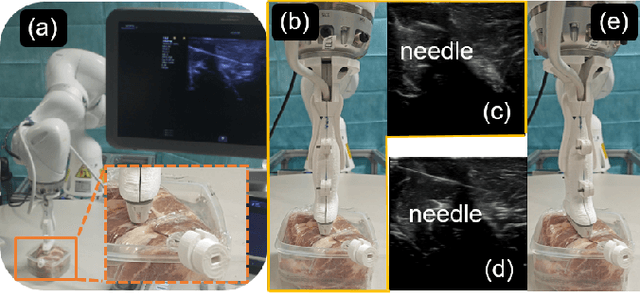

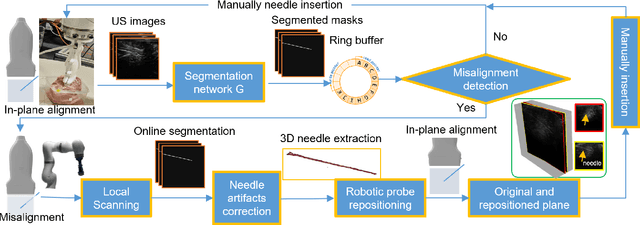

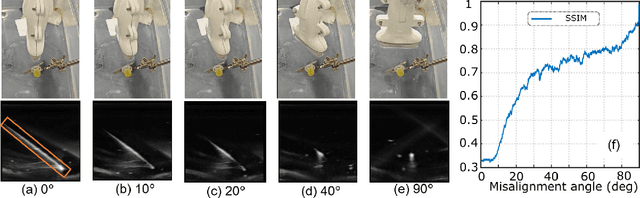

Vibration-Based Energy Metric for Restoring Needle Alignment in Autonomous Robotic Ultrasound

Aug 09, 2025

Abstract:Precise needle alignment is essential for percutaneous needle insertion in robotic ultrasound-guided procedures. However, inherent challenges such as speckle noise, needle-like artifacts, and low image resolution make robust needle detection difficult, particularly when visibility is reduced or lost. In this paper, we propose a method to restore needle alignment when the ultrasound imaging plane and the needle insertion plane are misaligned. Unlike many existing approaches that rely heavily on needle visibility in ultrasound images, our method uses a more robust feature by periodically vibrating the needle using a mechanical system. Specifically, we propose a vibration-based energy metric that remains effective even when the needle is fully out of plane. Using this metric, we develop a control strategy to reposition the ultrasound probe in response to misalignments between the imaging plane and the needle insertion plane in both translation and rotation. Experiments conducted on ex-vivo porcine tissue samples using a dual-arm robotic ultrasound-guided needle insertion system demonstrate the effectiveness of the proposed approach. The experimental results show a translational error of 0.41 $\pm$ 0.27 mm and a rotational error of 0.51 $\pm$ 0.19 degrees.
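The abstract does not define the energy metric, so the following is only one plausible reading, offered as a sketch: if the needle is vibrated at a known frequency, the image intensity near it should oscillate at that frequency, and the energy in that frequency bin can be summed over pixels. The function names, the frame layout (a list of 2D intensity grids), and the sampling parameters are all assumptions made for illustration.

```python
import cmath
import math


def band_energy(signal, fs, f0):
    """Energy of `signal` at frequency f0 (Hz), using a single-bin DFT.

    The mean is removed first so static brightness does not contribute.
    """
    n = len(signal)
    mean = sum(signal) / n
    acc = sum((x - mean) * cmath.exp(-2j * math.pi * f0 * k / fs)
              for k, x in enumerate(signal))
    return abs(acc / n) ** 2


def vibration_energy(frames, fs, f0):
    """Sum the f0-bin energy over every pixel of a frame sequence."""
    rows, cols = len(frames[0]), len(frames[0][0])
    return sum(
        band_energy([frame[r][c] for frame in frames], fs, f0)
        for r in range(rows) for c in range(cols)
    )


def make_frames(amplitude, fs=100.0, f0=10.0, n=100):
    """Synthetic 2x2 frames in which one pixel oscillates at f0."""
    frames = []
    for k in range(n):
        osc = amplitude * math.sin(2 * math.pi * f0 * k / fs)
        frames.append([[50.0, 50.0 + osc], [50.0, 50.0]])
    return frames


e_vibrating = vibration_energy(make_frames(5.0), 100.0, 10.0)
e_static = vibration_energy(make_frames(0.0), 100.0, 10.0)
```

On this synthetic data the vibrating sequence scores well above the static one, which is the property a probe-repositioning controller could exploit as a feedback signal.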

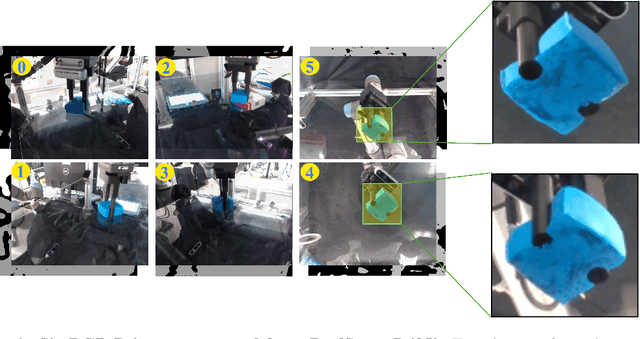

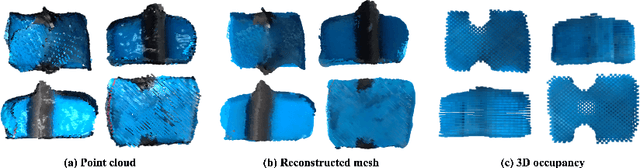

Manipulating Elasto-Plastic Objects With 3D Occupancy and Learning-Based Predictive Control

May 22, 2025

Abstract:Manipulating elasto-plastic objects remains a significant challenge due to severe self-occlusion, difficulties of representation, and complicated dynamics. This work proposes a novel framework for elasto-plastic object manipulation under a quasi-static assumption for motions, leveraging 3D occupancy to represent such objects, a learned dynamics model trained with 3D occupancy, and a learning-based predictive control algorithm to address these challenges effectively. We build a novel data collection platform to collect full spatial information and propose a pipeline for generating a 3D occupancy dataset. To infer the 3D occupancy during manipulation, an occupancy prediction network is trained with multiple RGB images supervised by the generated dataset. We design a deep neural network empowered by a 3D convolutional neural network (CNN) and a graph neural network (GNN) to predict the complex deformation from the inferred 3D occupancy results. A learning-based predictive control algorithm is introduced to plan the robot actions, incorporating a novel shape-based action initialization module specifically designed to improve planner efficiency. The proposed framework can successfully shape elasto-plastic objects into a given goal shape and has been verified in various experiments both in simulation and in the real world.

Learning to Hop for a Single-Legged Robot with Parallel Mechanism

Jan 21, 2025

Abstract:This work presents the application of reinforcement learning to improve the performance of a highly dynamic hopping system with a parallel mechanism. Unlike serial mechanisms, parallel mechanisms cannot be accurately simulated due to the complexity of their kinematic constraints and closed-loop structures. In addition, learning to hop suffers from a prolonged aerial phase and the sparse nature of the rewards. To address these issues, we propose a learning framework that encodes long-history feedback to account for the under-actuation brought by the prolonged aerial phase. In the proposed framework, we also introduce a simplified serial configuration for the parallel design to avoid directly simulating the parallel structure during training. A torque-level conversion is designed to handle the parallel-serial conversion and address the sim-to-real issue. Simulation and hardware experiments have been conducted to validate this framework.

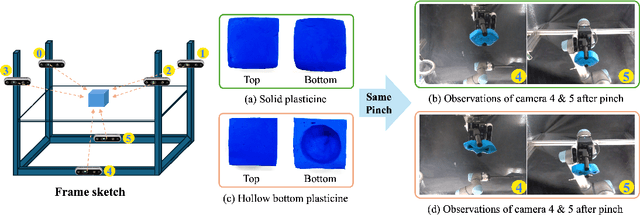

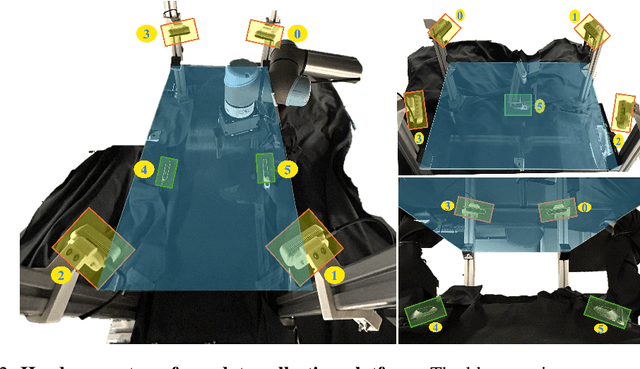

DOFS: A Real-world 3D Deformable Object Dataset with Full Spatial Information for Dynamics Model Learning

Oct 29, 2024

Abstract:This work proposes DOFS, a pilot dataset of 3D deformable objects (DOs) (e.g., elasto-plastic objects) with full spatial information (i.e., top, side, and bottom information) using a novel and low-cost data collection platform with a transparent operating plane. The dataset consists of active manipulation action, multi-view RGB-D images, well-registered point clouds, 3D deformed mesh, and 3D occupancy with semantics, using a pinching strategy with a two-parallel-finger gripper. In addition, we trained a neural network with the down-sampled 3D occupancy and action as input to model the dynamics of an elasto-plastic object. Our dataset and all CADs of the data collection system will be released soon on our website.

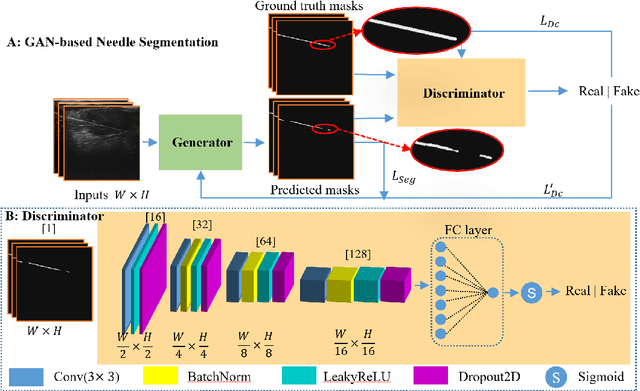

Needle Segmentation Using GAN: Restoring Thin Instrument Visibility in Robotic Ultrasound

Jul 25, 2024

Abstract:Ultrasound-guided percutaneous needle insertion is a standard procedure employed in both biopsy and ablation in clinical practice. However, due to the complex interaction between tissue and instrument, the needle may deviate from the in-plane view, resulting in a lack of close monitoring of the percutaneous needle. To address this challenge, we introduce a robot-assisted ultrasound (US) imaging system designed to seamlessly monitor the insertion process and autonomously restore the visibility of the inserted instrument when misalignment happens. To this end, an adversarial structure is presented to encourage the generation of segmentation masks that align consistently with the ground truth in high-order space. This study also systematically investigates the effects on segmentation performance by exploring various training loss functions and their combinations. When misalignment between the probe and the percutaneous needle is detected, the robot is triggered to perform transverse searching to optimize the positional and rotational adjustment to restore needle visibility. The experimental results on ex-vivo porcine samples demonstrate that the proposed method can precisely segment the percutaneous needle (with a tip error of $0.37\pm0.29$ mm and an angle error of $1.19\pm0.29^{\circ}$). Furthermore, the needle appearance can be successfully restored under the repositioned probe pose in all 45 trials, with repositioning errors of $1.51\pm0.95$ mm and $1.25\pm0.79^{\circ}$.

World Models for General Surgical Grasping

May 28, 2024

Abstract:Intelligent vision control systems for surgical robots should adapt to unknown and diverse objects while being robust to system disturbances. Previous methods did not meet these requirements because they relied mainly on pose estimation and feature tracking. We propose a world-model-based deep reinforcement learning framework, "Grasp Anything for Surgery" (GAS), that learns a pixel-level visuomotor policy for surgical grasping, enhancing both generality and robustness. In particular, a novel method is proposed to estimate the values and uncertainties of depth pixels for a rigid-link object's inaccurate region based on the empirical prior of the object's size; both depth and mask images of task objects are encoded into a single compact 3-channel image (size: 64x64x3) by dynamically zooming in on the mask regions, minimizing the information loss. The learned controller's effectiveness is extensively evaluated in simulation and on a real robot. Our learned visuomotor policy handles: i) unseen objects, including 5 types of target grasping objects and a robot gripper, in unstructured real-world surgery environments, and ii) disturbances in perception and control. Note that this is the first work to achieve a unified surgical control system that grasps diverse surgical objects using different robot grippers on real robots in complex surgery scenes (average success rate: 69%). Our system also demonstrates significant robustness across 6 conditions including background variation, target disturbance, camera pose variation, kinematic control error, image noise, and re-grasping after the gripped target object drops from the gripper. Videos and code can be found on our project page: https://linhongbin.github.io/gas/.

Deformable Object Manipulation With Constraints Using Path Set Planning and Tracking

Feb 18, 2024

Abstract:In robotic deformable object manipulation (DOM) applications, constraints arise commonly from environments and task-specific requirements. Enabling DOM with constraints is therefore crucial for its deployment in practice. However, dealing with constraints turns out to be challenging due to many inherent factors such as inaccessible deformation models of deformable objects (DOs) and varying environmental setups. This article presents a systematic manipulation framework for DOM subject to constraints by proposing a novel path set planning and tracking scheme. First, constrained DOM tasks are formulated into a versatile optimization formalism which enables dynamic constraint imposition. Because of the lack of the local optimization objective and high state dimensionality, the formulated problem is not analytically solvable. To address this, planning of the path set, which collects paths of DO feedback points, is proposed subsequently to offer feasible path and motion references for DO in constrained setups. Both theoretical analyses and computationally efficient algorithmic implementation of path set planning are discussed. Lastly, a control architecture combining path set tracking and constraint handling is designed for task execution. The effectiveness of our methods is validated in a variety of DOM tasks with constrained experimental settings.

* 20 pages, 25 figures, journal

Bootstrapping Robotic Skill Learning With Intuitive Teleoperation: Initial Feasibility Study

Nov 11, 2023

Abstract:Robotic skill learning has been increasingly studied, but collecting demonstrations is more challenging than collecting images/videos in computer vision or texts in natural language processing. This paper presents a skill learning paradigm that uses intuitive teleoperation devices to generate high-quality human demonstrations efficiently for robotic skill learning in a data-driven manner. Using a reliable teleoperation interface, the da Vinci Research Kit (dVRK) master, a system called dVRK-Simulator-for-Demonstration (dS4D) is proposed in this paper. Various manipulation tasks show the system's effectiveness and advantages in efficiency compared to other interfaces. Using the collected data for policy learning has been investigated, which verifies the initial feasibility. We believe the proposed paradigm can facilitate robot learning driven by high-quality demonstrations while generating them efficiently.

Interactive Navigation in Environments with Traversable Obstacles Using Large Language and Vision-Language Models

Oct 13, 2023

Abstract:This paper proposes an interactive navigation framework using large language and vision-language models, allowing robots to navigate in environments with traversable obstacles. We utilize a large language model (GPT-3.5) and an open-set vision-language model (Grounding DINO) to create an action-aware costmap to perform effective path planning without fine-tuning. With the large models, we can achieve an end-to-end system from textual instructions like "Can you pass through the curtains to deliver medicines to me?", to bounding boxes (e.g., curtains) with action-aware attributes. These bounding boxes can be used to segment LiDAR point clouds into two parts, traversable and untraversable, and then an action-aware costmap is constructed for generating a feasible path. The pre-trained large models have great generalization ability and do not require additional annotated data for training, allowing fast deployment in interactive navigation tasks. We use multiple traversable objects such as curtains and grass for verification by instructing the robot to traverse them. In addition, traversing curtains in a medical scenario was tested. All experimental results demonstrated the proposed framework's effectiveness and adaptability to diverse environments.
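The costmap construction step can be sketched as follows, assuming the LiDAR points have already been labeled traversable or untraversable. This is a simplified illustration, not the paper's implementation: the function name, the 2D grid layout, and the cost values (`lethal_cost`, `traversable_cost`) are hypothetical choices.

```python
def build_costmap(points, width, height, resolution,
                  lethal_cost=100, traversable_cost=10):
    """Build a row-major 2D costmap from labeled points.

    `points` is an iterable of (x, y, traversable) with x, y in metres.
    Cells hit by untraversable points get `lethal_cost`; cells hit only
    by traversable-obstacle points (e.g. a curtain) get the lower
    `traversable_cost`; untouched cells stay at 0.
    """
    grid = [[0] * width for _ in range(height)]
    for x, y, traversable in points:
        col, row = int(x // resolution), int(y // resolution)
        if not (0 <= row < height and 0 <= col < width):
            continue  # point lies outside the mapped area
        cost = traversable_cost if traversable else lethal_cost
        # Keep the highest cost seen so far for this cell.
        grid[row][col] = max(grid[row][col], cost)
    return grid


labeled_points = [
    (0.1, 0.1, True),    # curtain point
    (0.7, 0.2, False),   # wall point
    (0.2, 0.8, True),    # curtain and wall share this cell
    (0.2, 0.8, False),
    (5.0, 5.0, False),   # outside the grid, ignored
]
costmap = build_costmap(labeled_points, width=2, height=2, resolution=0.5)
```

A path planner run on such a grid would prefer free cells, accept traversable-obstacle cells at a modest penalty, and avoid lethal ones.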
