Wen Cui

Adversarial Safety-Critical Scenario Generation using Naturalistic Human Driving Priors

Aug 07, 2024

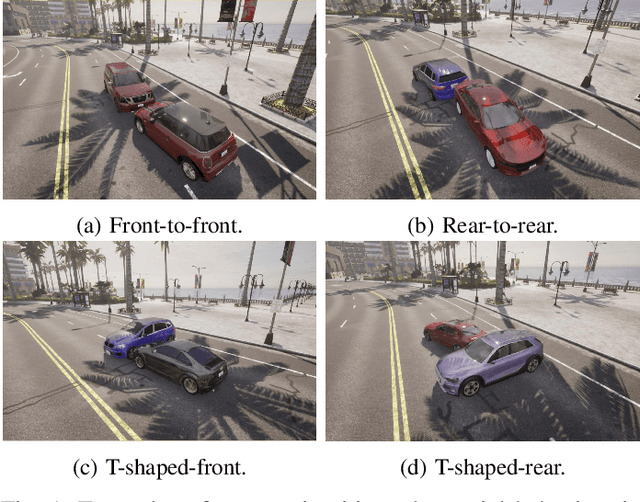

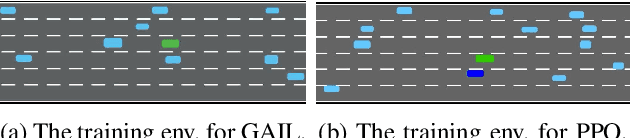

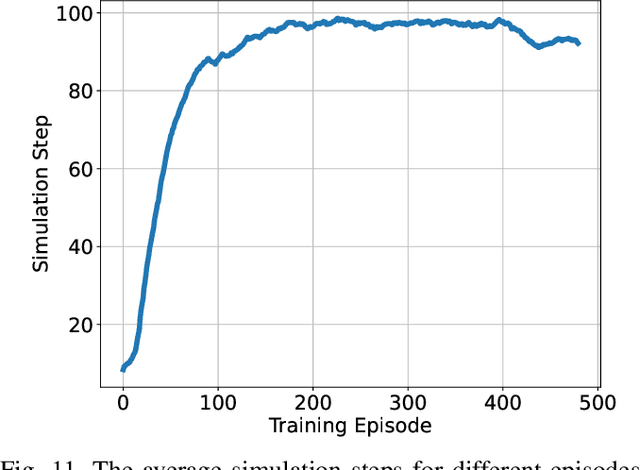

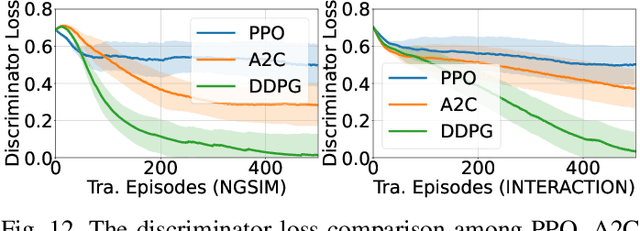

Abstract: Evaluating the decision-making system is indispensable in developing autonomous vehicles, and realistic, challenging safety-critical test scenarios play a crucial role in that evaluation. Obtaining these scenarios is non-trivial owing to the long-tailed distribution, sparsity, and rarity of such events in real-world datasets. To tackle this problem, we introduce a natural adversarial scenario generation solution using naturalistic human driving priors and reinforcement learning techniques. With it, we can obtain large-scale test scenarios that are both diverse and realistic. Specifically, we build a simulation environment that mimics natural traffic interaction scenarios. Informed by this environment, we implement a two-stage procedure. The first stage incorporates conventional rule-based models, e.g., the IDM (Intelligent Driver Model) and the MOBIL (Minimizing Overall Braking Induced by Lane changes) model, to coarsely and discretely capture and calibrate key control parameters from the real-world dataset. Next, we leverage GAIL (Generative Adversarial Imitation Learning) to represent driver behaviors continuously. The derived GAIL model can be further used to design a PPO (Proximal Policy Optimization)-based actor-critic network framework that fine-tunes the reward function and then optimizes our natural adversarial scenario generation solution. Extensive experiments were conducted on the NGSIM dataset, which includes the trajectories of 3,000 vehicles. Essential traffic parameters were measured in comparison with the baseline model, e.g., the collision rate, accelerations, steering, and the number of lane changes. Our findings demonstrate that the proposed model can generate realistic safety-critical test scenarios covering both naturalness and adversariality, which can be a cornerstone for the development of autonomous vehicles.

* Published in IEEE Transactions on Intelligent Vehicles, 2023

Bridging Data-Driven and Knowledge-Driven Approaches for Safety-Critical Scenario Generation in Automated Vehicle Validation

Nov 18, 2023

Abstract: Automated driving vehicles (ADV) promise to enhance driving efficiency and safety, yet they face intricate challenges in safety-critical scenarios. As a result, validating ADV within generated safety-critical scenarios is essential for both development and performance evaluation. This paper investigates the complexities of employing two major scenario-generation solutions: data-driven and knowledge-driven methods. Data-driven methods derive scenarios from recorded datasets, efficiently generating scenarios by altering the existing behavior or trajectories of traffic participants, but they often fall short in considering ADV perception; knowledge-driven methods provide effective coverage through expert-designed rules, but they may be inefficient at generating safety-critical scenarios within that coverage. To overcome these challenges, we introduce BridgeGen, a safety-critical scenario generation framework designed to bridge the benefits of both methodologies. Specifically, by utilizing ontology-based techniques, BridgeGen models the five scenario layers in the operational design domain (ODD) from knowledge-driven methods, ensuring broad coverage, and incorporates data-driven strategies to efficiently generate safety-critical scenarios. An optimized scenario generation toolkit is developed within BridgeGen. This expedites the crafting of safety-critical scenarios through a combination of traditional optimization and reinforcement learning schemes. Extensive experiments conducted using the CARLA simulator demonstrate the effectiveness of BridgeGen in generating diverse safety-critical scenarios.

Athena 2.0: Discourse and User Modeling in Open Domain Dialogue

Aug 03, 2023

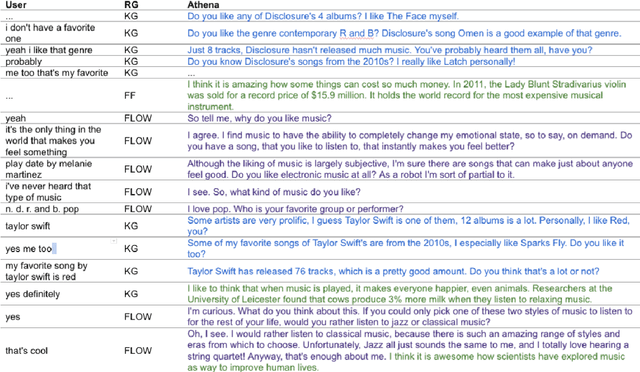

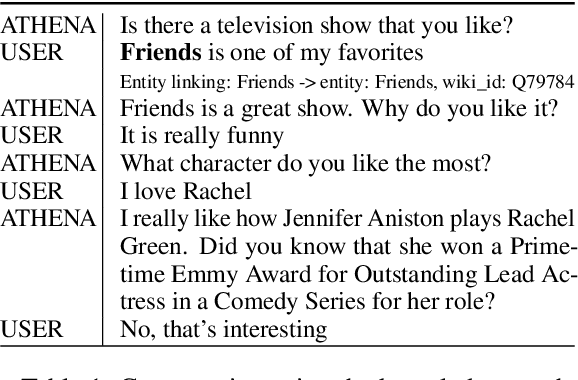

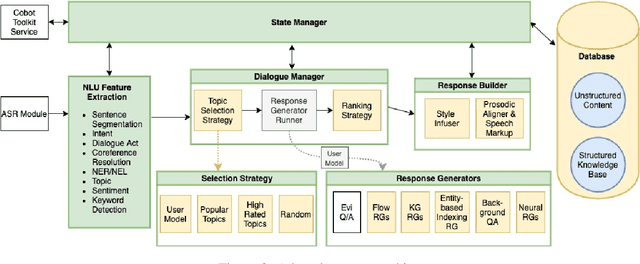

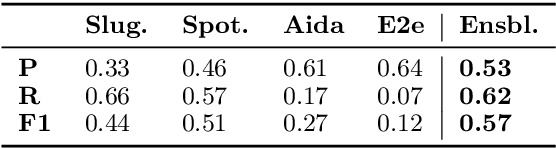

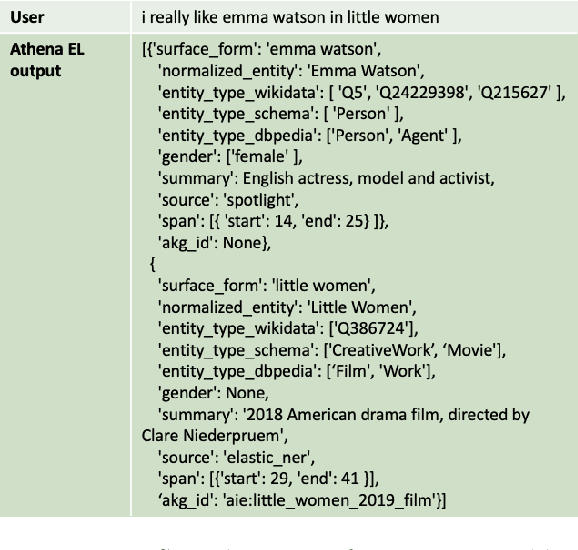

Abstract: Conversational agents are steadily growing in popularity, and many people interact with them every day. While many conversational agents act as personal assistants, they can have many different goals. Some are task-oriented, such as providing customer support for a bank or making a reservation. Others are designed to be empathetic and to form emotional connections with the user. The Alexa Prize Challenge aims to create a socialbot that allows the user to engage in coherent conversations on a range of popular topics that will interest the user. Here we describe Athena 2.0, UCSC's conversational agent for Amazon's Socialbot Grand Challenge 4. Athena 2.0 utilizes a novel knowledge-grounded discourse model that tracks the entity links that Athena introduces into the dialogue and uses them to constrain named-entity recognition and linking, as well as coreference resolution. Athena 2.0 also relies on a user model to personalize topic selection and other aspects of the conversation to individual users.

Athena 2.0: Contextualized Dialogue Management for an Alexa Prize SocialBot

Nov 03, 2021

Abstract: Athena 2.0 is an Alexa Prize SocialBot that has been a finalist in the last two Alexa Prize Grand Challenges. One reason for Athena's success is its novel dialogue management strategy, which allows it to dynamically construct dialogues and responses from component modules, leading to novel conversations with every interaction. Here we describe Athena's system design and performance in the Alexa Prize during the 20/21 competition. A live demo of Athena as well as video recordings will provoke discussion on the state of the art in conversational AI.

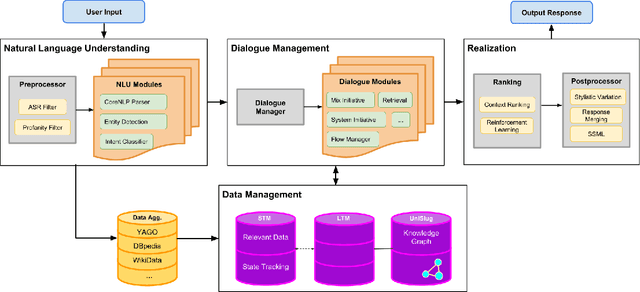

Athena: Constructing Dialogues Dynamically with Discourse Constraints

Nov 21, 2020

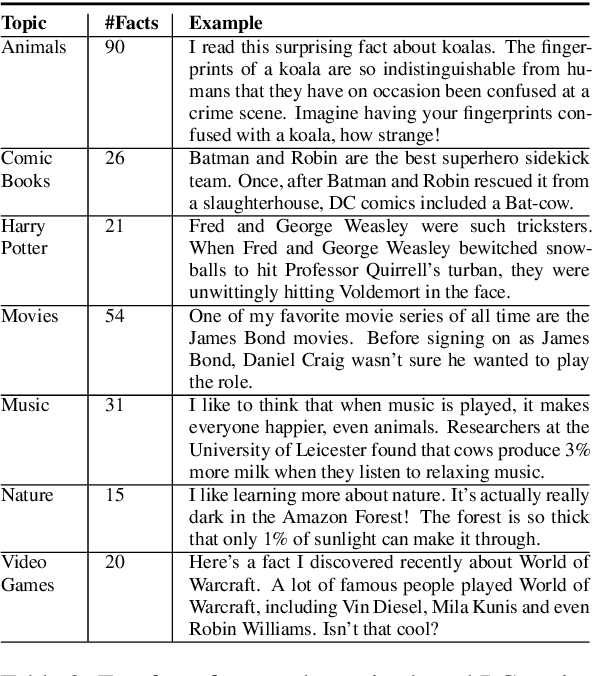

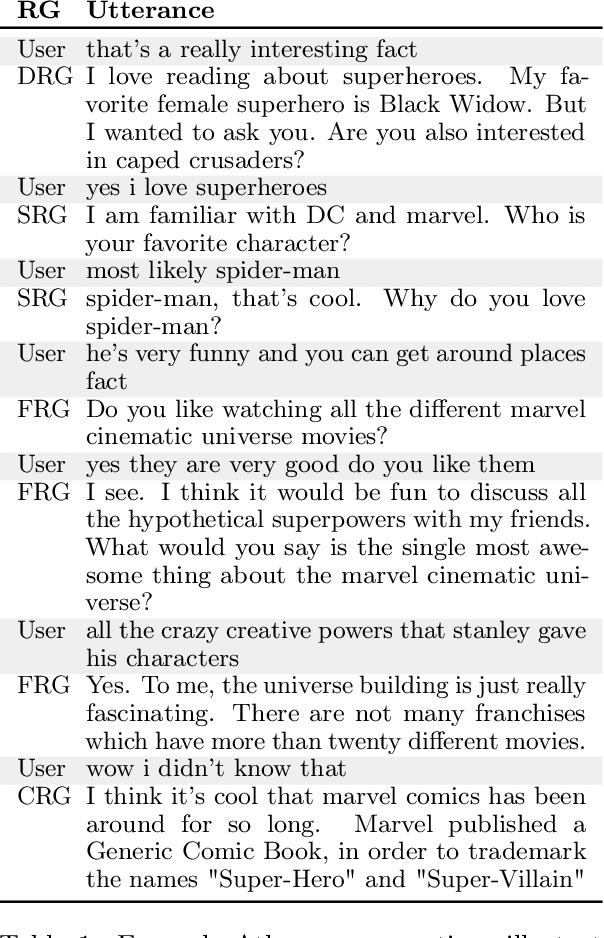

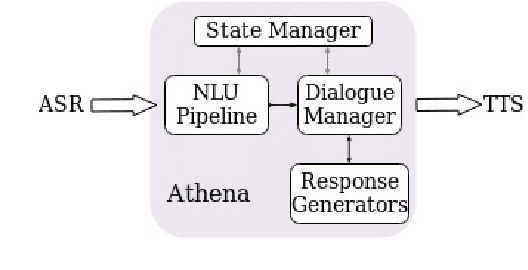

Abstract: This report describes Athena, a dialogue system for spoken conversation on popular topics and current events. We develop a flexible topic-agnostic approach to dialogue management that dynamically configures dialogue based on general principles of entity and topic coherence. Athena's dialogue manager uses a contract-based method where discourse constraints are dispatched to clusters of response generators. This allows Athena to procure responses from dynamic sources, such as knowledge graph traversals and feature-based on-the-fly response retrieval methods. After describing the dialogue system architecture, we perform an analysis of conversations that Athena participated in during the 2019 Alexa Prize Competition. We conclude with a report on several user studies we carried out to better understand how individual user characteristics affect system ratings.

Entertaining and Opinionated but Too Controlling: A Large-Scale User Study of an Open Domain Alexa Prize System

Aug 13, 2019

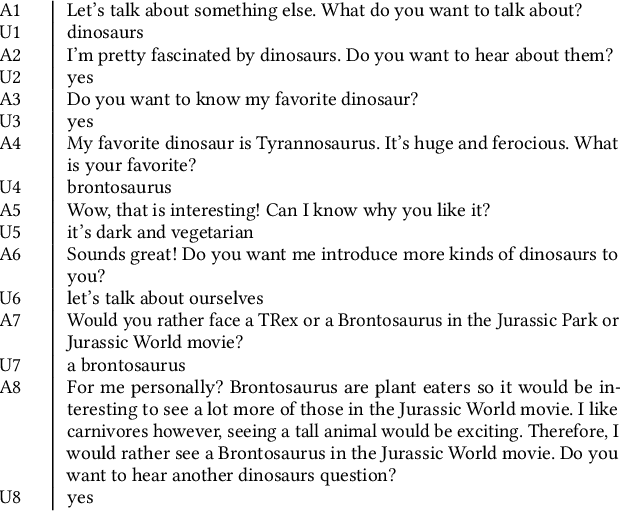

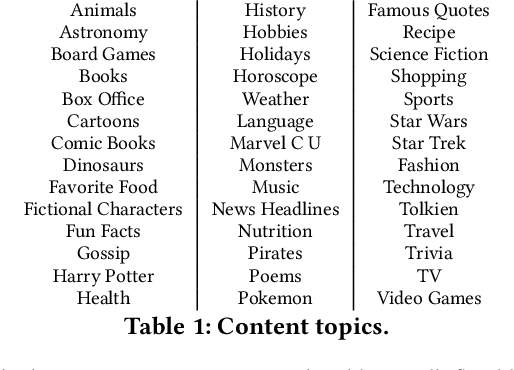

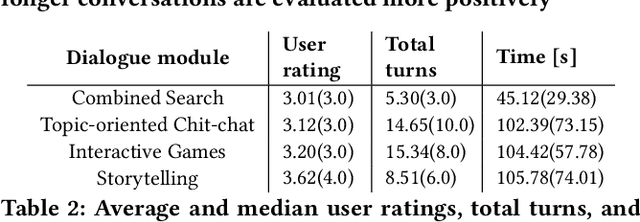

Abstract: Conversational systems typically focus on functional tasks such as scheduling appointments or creating to-do lists. Instead, we design and evaluate SlugBot (SB), one of 8 semifinalists in the 2018 Alexa Prize, whose goal is to support casual open-domain social interaction. This novel application requires both broad topic coverage and engaging interactive skills. We developed a new technical approach to meet this demanding situation by crowd-sourcing novel content and introducing playful conversational strategies based on storytelling and games. We collected over 10,000 conversations during August 2018 as part of the Alexa Prize competition. We also conducted an in-lab follow-up qualitative evaluation. Overall, users found SB moderately engaging; conversations averaged 3.6 minutes and involved 26 user turns. However, users reacted very differently to different conversation subtypes. Storytelling and games were evaluated positively; these were seen as entertaining with predictable interactive structure. They also led users to impute personality and intelligence to SB. In contrast, search and general chit-chat induced coverage problems; here users found it hard to infer what topics SB could understand, and these conversations were seen as too system-driven. Theoretical and design implications suggest a move away from conversational systems that simply provide factual information. Future systems should be designed to have their own opinions with personal stories to share, and SB provides an example of how we might achieve this.

SlugBot: Developing a Computational Model and Framework of a Novel Dialogue Genre

Jul 22, 2019

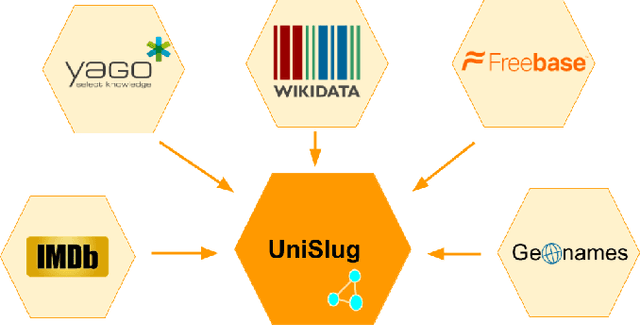

Abstract: One of the most interesting aspects of the Amazon Alexa Prize competition is that the framing of the competition requires the development of new computational models of dialogue and its structure. Traditional computational models of dialogue are of two types: (1) task-oriented dialogue, supported by AI planning models, or simplified planning models consisting of frames with slots to be filled; or (2) search-oriented dialogue, where every user turn is treated as a search query that may elaborate and extend current search results. Alexa Prize dialogue systems such as SlugBot must support conversational capabilities that go beyond what these traditional models can do. Moreover, while traditional dialogue systems rely on theoretical computational models, there are no existing computational theories that circumscribe the expected system and user behaviors in the intended conversational genre of the Alexa Prize bots. This paper describes how UCSC's SlugBot team has combined the development of a novel computational theoretical model, the Discourse Relation Dialogue Model, with its implementation in a modular system in order to test and refine it. We highlight how our novel dialogue model has led us to create a novel ontological resource, UniSlug, and how the structure of UniSlug determines how we curate and structure content so that our dialogue manager implements and tests our novel computational dialogue model.

Implicit Discourse Relation Identification for Open-domain Dialogues

Jul 09, 2019

Abstract: Discourse relation identification has been an active area of research for many years, and the challenge of identifying implicit relations remains largely an unsolved task, especially in the context of an open-domain dialogue system. Previous work primarily relies on corpora of formal text that is inherently non-dialogic, i.e., news and journals. Such data, however, is not suited to handling the nuances of informal dialogue, nor does it cover the plethora of valid topics present in open-domain dialogue. In this paper, we design a novel discourse relation identification pipeline specifically tuned for open-domain dialogue systems. We first propose a method to automatically extract the implicit discourse relation argument pairs and labels from a dataset of dialogic turns, resulting in a novel corpus of discourse relation pairs, the first of its kind to attempt to identify the discourse relations connecting the dialogic turns in open-domain discourse. Moreover, we have taken the first steps to leverage the dialogue features unique to our task to further improve the identification of such relations by performing feature ablation and incorporating dialogue features to enhance the state-of-the-art model.
