Weiguo Zhou

Harbin Institute of Technology, Shenzhen

Measuring Generalisation to Unseen Viewpoints, Articulations, Shapes and Objects for 3D Hand Pose Estimation under Hand-Object Interaction

Mar 30, 2020

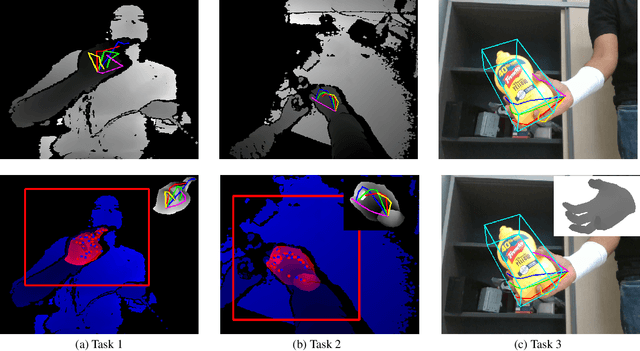

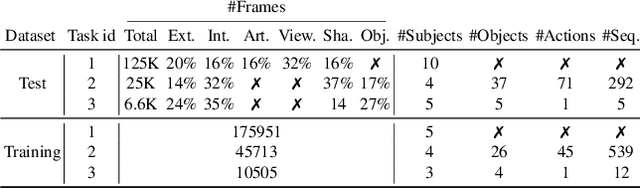

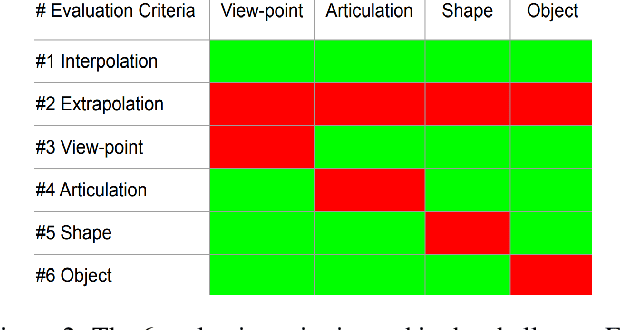

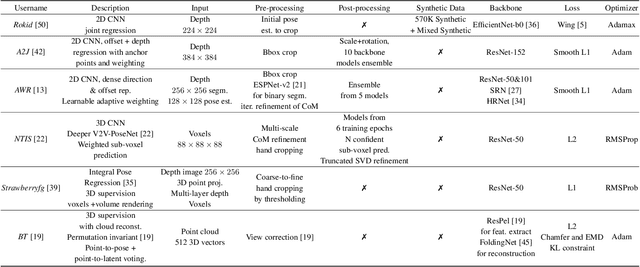

Abstract: In this work, we study how well different types of approaches generalise in the task of 3D hand pose estimation under hand-object interaction and single-hand scenarios. We show that the accuracy of state-of-the-art methods can drop, and that they fail mostly on poses absent from the training set. Unfortunately, since the space of hand poses is high-dimensional, it is inherently not feasible to cover the whole space densely, despite recent efforts in collecting large-scale training datasets. This sampling problem is even more severe when hands are interacting with objects and/or inputs are RGB rather than depth images, as RGB images also vary with lighting conditions and colors. To address these issues, we designed a public challenge to evaluate the abilities of current 3D hand pose estimators (HPEs) to interpolate and extrapolate the poses of a training set. More exactly, our challenge is designed (a) to evaluate the influence of both depth and color modalities on 3D hand pose estimation, under the presence or absence of objects; (b) to assess the generalisation abilities with respect to four main axes: shapes, articulations, viewpoints, and objects; (c) to explore the use of a synthetic hand model to fill the gaps of current datasets. Through the challenge, the overall accuracy has dramatically improved over the baseline, especially on extrapolation tasks, from 27 mm to 13 mm mean joint error. Our analyses highlight the impacts of data pre-processing, ensemble approaches, the use of the MANO model, and different HPE methods/backbones.
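The 27 mm and 13 mm figures in the abstract above are mean joint errors. As a minimal sketch, assuming the common definition (the average Euclidean distance between predicted and ground-truth 3D joint positions), the metric can be computed as below. The challenge's exact evaluation protocol is not described here, and the function name `mean_joint_error` and the toy coordinates are illustrative assumptions.

```python
import math


def mean_joint_error(pred, gt):
    """Average Euclidean distance between matching 3D joints.

    pred, gt: sequences of (x, y, z) tuples in the same unit (e.g. mm).
    Assumed definition; not taken from the challenge's evaluation code.
    """
    if len(pred) != len(gt) or not pred:
        raise ValueError("pred and gt must be non-empty and the same length")
    total = sum(math.dist(p, g) for p, g in zip(pred, gt))
    return total / len(pred)


# Toy example with two joints (coordinates in mm, made up for illustration).
gt_joints = [(0.0, 0.0, 0.0), (10.0, 0.0, 0.0)]
pred_joints = [(3.0, 4.0, 0.0), (10.0, 0.0, 12.0)]

# Per-joint errors are 5 mm and 12 mm, so the mean is 8.5 mm.
error_mm = mean_joint_error(pred_joints, gt_joints)
print(error_mm)  # 8.5
```

Averaging over joints first and then over frames gives a single number per test split, which is how a drop such as 27 mm to 13 mm would typically be reported.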

HMTNet: 3D Hand Pose Estimation from Single Depth Image Based on Hand Morphological Topology

Nov 12, 2019

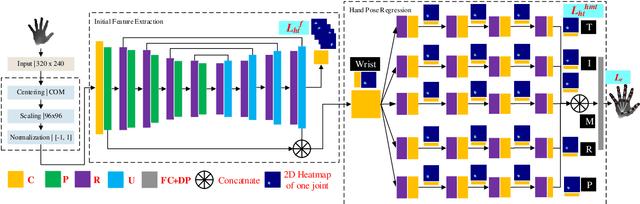

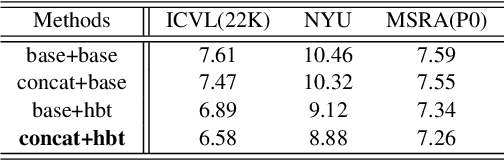

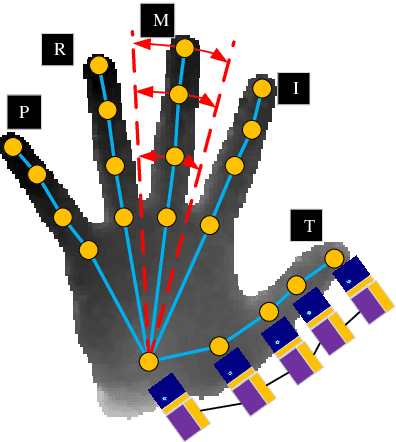

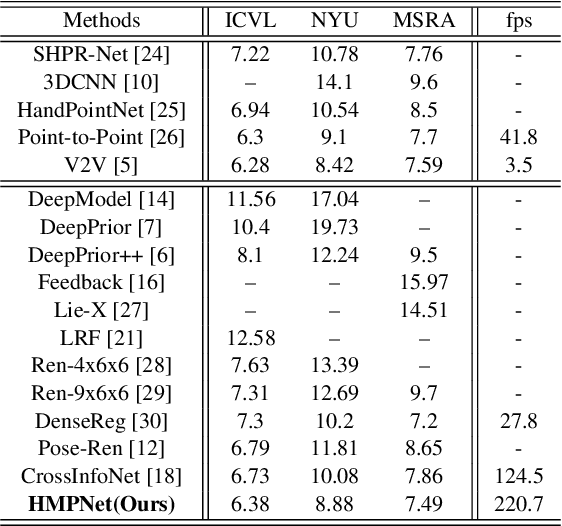

Abstract: Thanks to the rapid development of CNNs and depth sensors, great progress has been made in 3D hand pose estimation. Nevertheless, the problem is still far from solved because of cluttered environments and severe self-occlusion of the hand. In this paper, we propose a method that takes advantage of the human hand morphological topology (HMT) structure to improve pose estimation performance. The main contributions of our work are as follows. Firstly, in order to extract more powerful features, we concatenate the original and last layers of the initial feature extraction module to better preserve hand information. Next, we propose a regression module inspired by hand morphological topology. In this submodule, we design a tree-like network structure according to the distribution of hand joints to make use of high-order dependencies among them. Lastly, we conducted extensive ablation experiments to verify our proposed method on each dataset. Experimental results on three popular hand pose datasets show superior performance of our method compared with state-of-the-art methods. On the ICVL and NYU datasets, our method shows a large improvement over 2D state-of-the-art methods. On the MSRA dataset, our method achieves accuracy comparable to state-of-the-art methods. In summary, among current methods of comparable accuracy, ours is the most efficient, running at 220.7 fps on a single GPU. The code will be available at.

Pose Estimation for Texture-less Shiny Objects in a Single RGB Image Using Synthetic Training Data

Sep 23, 2019

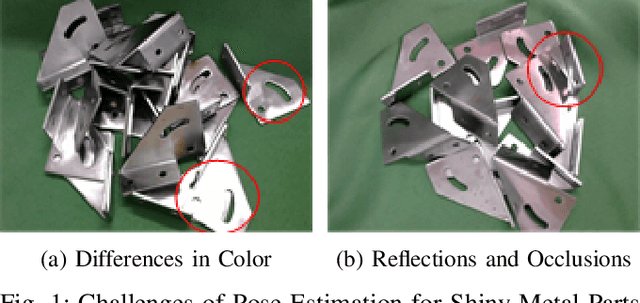

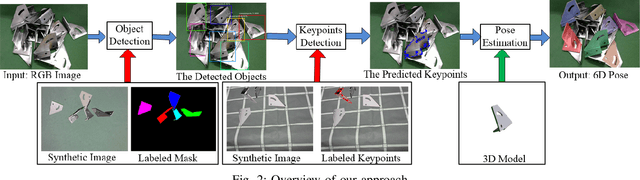

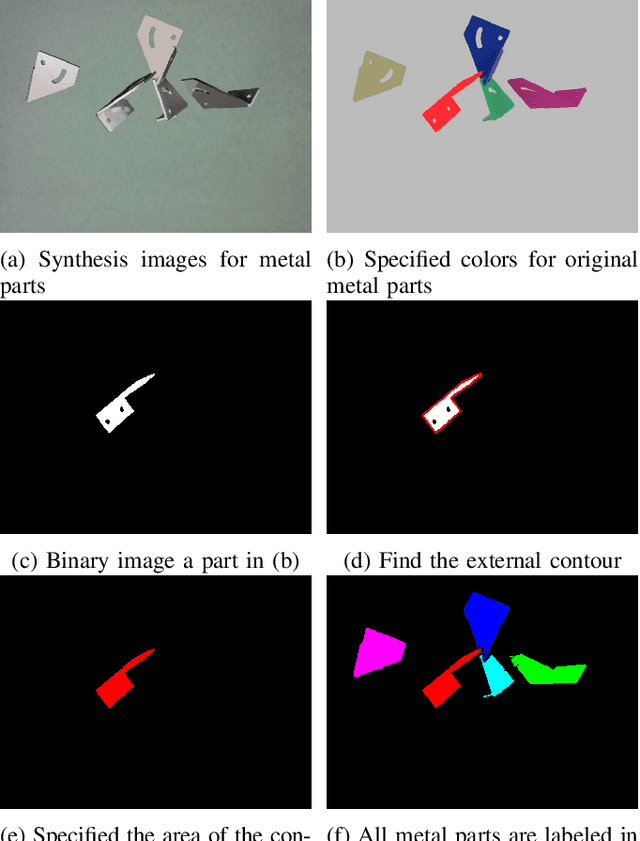

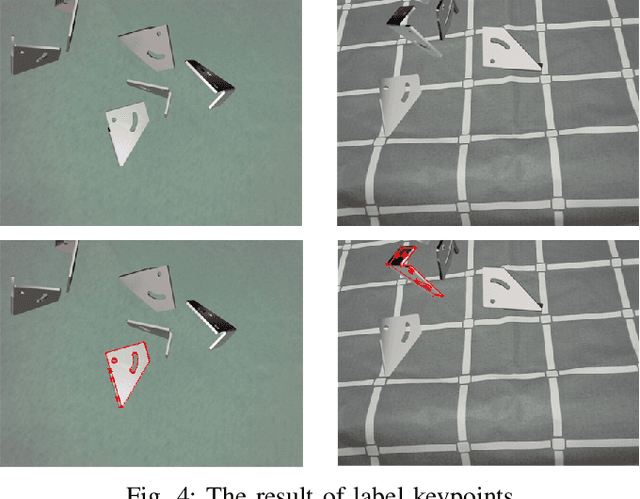

Abstract: In the industrial domain, the pose estimation of multiple texture-less shiny parts is a valuable but challenging task. In this particular scenario, it is impractical to utilize keypoints or other texture information because most of them are not actual features of the target but reflections of the surroundings. Moreover, the similarity of color also poses a challenge in segmentation. In this article, we propose to divide the pose estimation process into three stages: object detection, feature detection and pose optimization. A convolutional neural network was utilized to perform object detection. Given the unreliability of surface texture, we leveraged contour information for estimating pose. Since conventional contour-based methods are inapplicable to clustered metal parts due to the difficulties in segmentation, we use dense discrete points along the metal part edges as semantic keypoints for contour detection. Afterward, we exploit both the keypoint information and the CAD model to calculate the 6D pose of each object in view. A typical implementation of deep learning methods not only requires a large amount of training data, but also relies on intensive human labor for labeling the datasets. Therefore, we propose an approach to generate datasets and label them automatically. Despite not using any real-world photos for training, a series of experiments showed that the algorithm built on synthetic data performs well in the real environment.

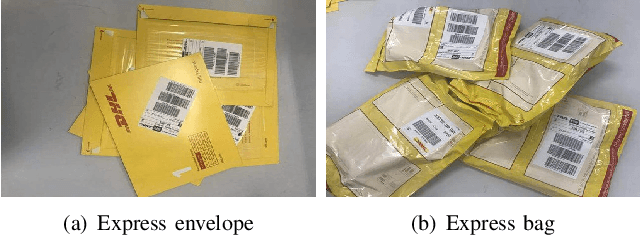

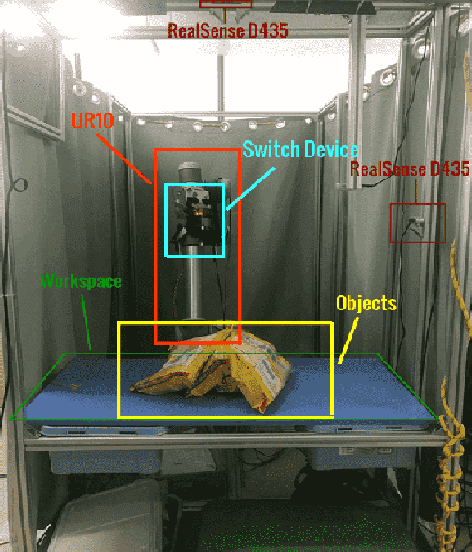

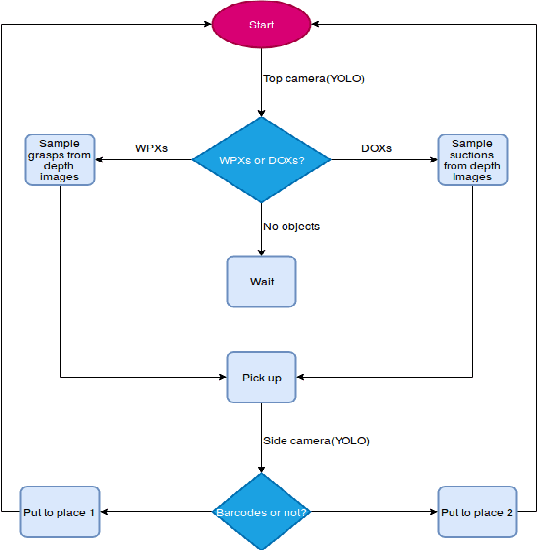

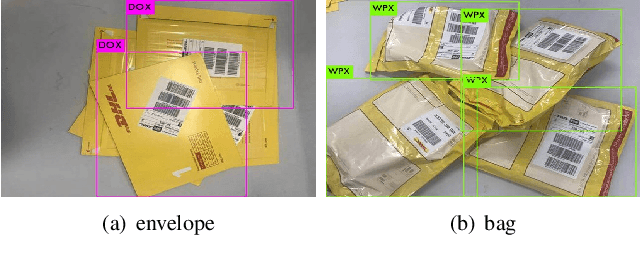

Vision Based Picking System for Automatic Express Package Dispatching

Apr 09, 2019

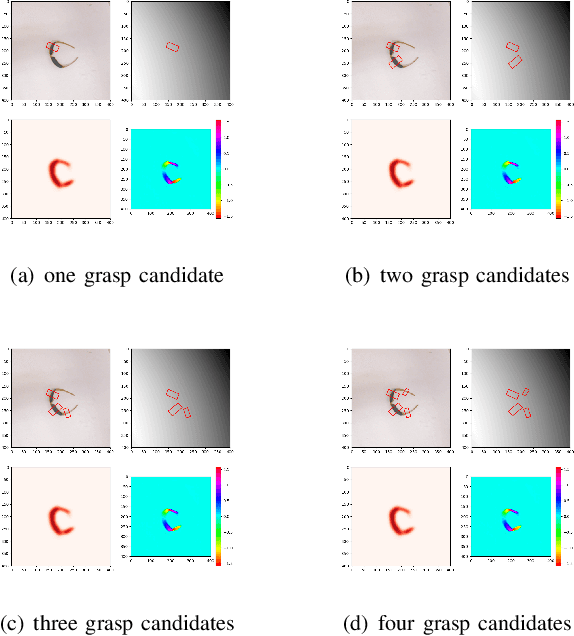

Abstract: This paper presents a vision-based robotic system to handle the picking problem involved in automatic express package dispatching. By utilizing two RealSense RGB-D cameras and one UR10 industrial robot, the package dispatching task, which is usually done by humans, can be completed automatically. In order to determine grasp points for overlapping deformable objects, we improved the sampling algorithm proposed by the group in Berkeley to directly generate grasp candidates from depth images. For package recognition, the deep network framework YOLO is integrated. We also designed a multi-modal robot hand composed of a two-fingered gripper and a vacuum suction cup to deal with different kinds of packages. All the technologies have been integrated in a work cell which simulates the practical conditions of an express package dispatching scenario. The proposed system is verified by experiments conducted on two typical express items.

Efficient Fully Convolution Neural Network for Generating Pixel Wise Robotic Grasps With High Resolution Images

Feb 24, 2019

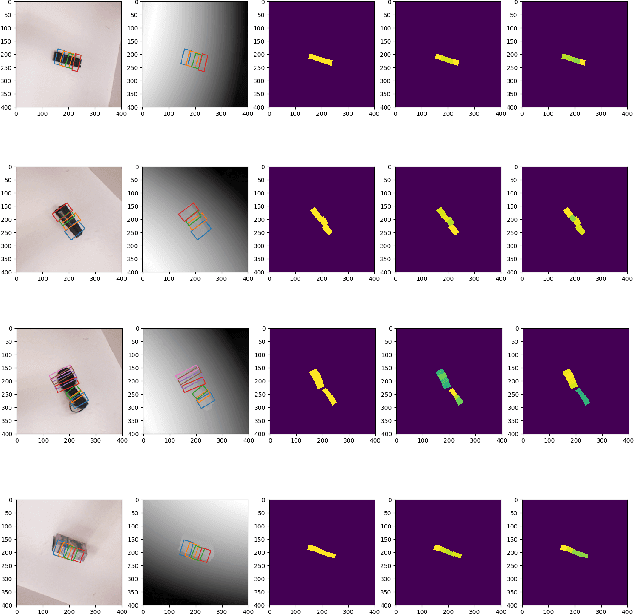

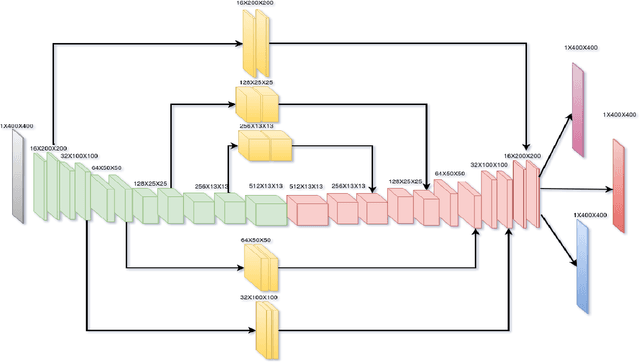

Abstract: This paper presents an efficient neural network model to generate robotic grasps from high-resolution images. The proposed model uses a fully convolutional neural network to generate a robotic grasp for each pixel of 400 $\times$ 400 high-resolution RGB-D images. It first down-samples the images to extract features, then up-samples those features to the original size of the input, and combines local and global features from different feature maps. Compared to other regression or classification methods for detecting robotic grasps, our method is closer to segmentation methods, which solve the problem pixel-wise. We use the Cornell Grasp Dataset to train and evaluate the model and achieve accuracies of about 94.42% image-wise and 91.02% object-wise, with a fast prediction time of about 8 ms. We also demonstrate that, without training on a multiple-object dataset, our model can directly output robotic grasp candidates for different objects because of the pixel-wise implementation.
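To illustrate what a pixel-wise grasp output can look like downstream, here is a minimal sketch of selecting one grasp from per-pixel predictions. It assumes the network produces three maps of the same size as the input (grasp quality, gripper angle, gripper width); the abstract does not say which per-pixel quantities the model predicts, so these maps, the function name `best_grasp` and the toy values are all hypothetical.

```python
def best_grasp(quality, angle, width):
    """Return the grasp at the pixel with the highest quality score.

    quality, angle, width: 2D lists (rows of equal length) with one value
    per pixel. These three maps are an assumed output format.
    """
    best = None  # (score, row, col) of the best pixel seen so far
    for r, row in enumerate(quality):
        for c, score in enumerate(row):
            if best is None or score > best[0]:
                best = (score, r, c)
    if best is None:
        raise ValueError("quality map is empty")
    score, r, c = best
    return {
        "row": r,
        "col": c,
        "quality": score,
        "angle": angle[r][c],
        "width": width[r][c],
    }


# Toy 2x3 maps standing in for full-resolution network outputs.
quality_map = [[0.1, 0.4, 0.2],
               [0.3, 0.9, 0.5]]
angle_map = [[0.0, 0.5, 1.0],
             [-0.5, 0.25, 0.75]]
width_map = [[20.0, 25.0, 30.0],
             [35.0, 40.0, 45.0]]

grasp = best_grasp(quality_map, angle_map, width_map)
print(grasp)
```

Because every pixel carries its own candidate, the same selection step applies unchanged to scenes with several objects, for example by taking the best pixel inside each object region instead of the global maximum.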
