Shipeng Xie

Measuring Generalisation to Unseen Viewpoints, Articulations, Shapes and Objects for 3D Hand Pose Estimation under Hand-Object Interaction

Mar 30, 2020

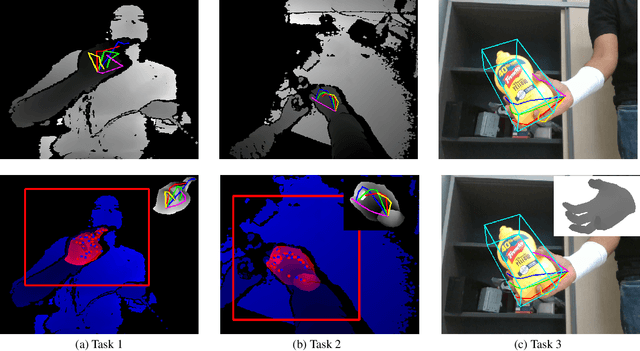

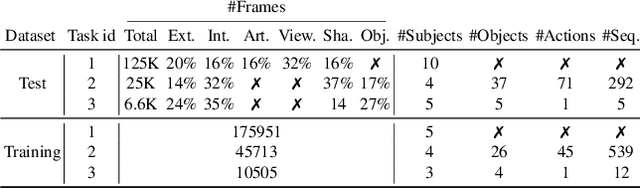

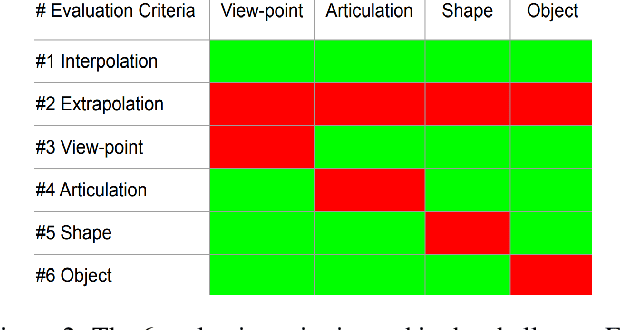

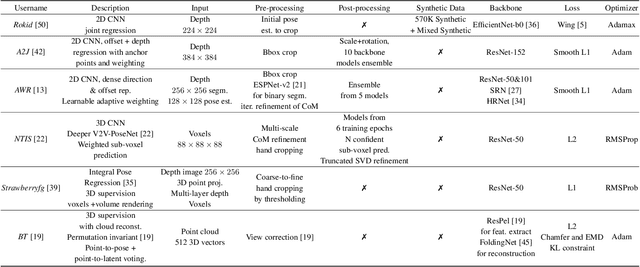

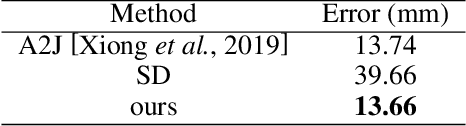

Abstract:In this work, we study how well different type of approaches generalise in the task of 3D hand pose estimation under hand-object interaction and single hand scenarios. We show that the accuracy of state-of-the-art methods can drop, and that they fail mostly on poses absent from the training set. Unfortunately, since the space of hand poses is highly dimensional, it is inherently not feasible to cover the whole space densely, despite recent efforts in collecting large-scale training datasets. This sampling problem is even more severe when hands are interacting with objects and/or inputs are RGB rather than depth images, as RGB images also vary with lighting conditions and colors. To address these issues, we designed a public challenge to evaluate the abilities of current 3D hand pose estimators~(HPEs) to interpolate and extrapolate the poses of a training set. More exactly, our challenge is designed (a) to evaluate the influence of both depth and color modalities on 3D hand pose estimation, under the presence or absence of objects; (b) to assess the generalisation abilities \wrt~four main axes: shapes, articulations, viewpoints, and objects; (c) to explore the use of a synthetic hand model to fill the gaps of current datasets. Through the challenge, the overall accuracy has dramatically improved over the baseline, especially on extrapolation tasks, from 27mm to 13mm mean joint error. Our analyses highlight the impacts of: Data pre-processing, ensemble approaches, the use of MANO model, and different HPE methods/backbones.

HandAugment: A Simple Data Augmentation Method for Depth-Based 3D Hand Pose Estimation

Jan 23, 2020

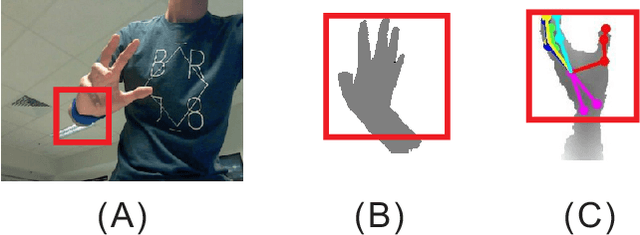

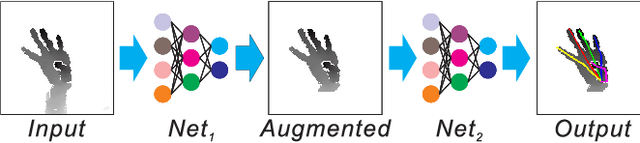

Abstract:Hand pose estimation from 3D depth images, has been explored widely using various kinds of techniques in the field of computer vision. Though, deep learning based method improve the performance greatly recently, however, this problem still remains unsolved due to lack of large datasets, like ImageNet or effective data synthesis methods. In this paper, we propose HandAugment, a method to synthesize image data to augment the training process of the neural networks. Our method has two main parts: First, We propose a scheme of two-stage neural networks. This scheme can make the neural networks focus on the hand regions and thus to improve the performance. Second, we introduce a simple and effective method to synthesize data by combining real and synthetic image together in the image space. Finally, we show that our method achieves the first place in the task of depth-based 3D hand pose estimation in HANDS 2019 challenge.

Deep Features Analysis with Attention Networks

Jan 20, 2019

Abstract:Deep neural network models have recently draw lots of attention, as it consistently produce impressive results in many computer vision tasks such as image classification, object detection, etc. However, interpreting such model and show the reason why it performs quite well becomes a challenging question. In this paper, we propose a novel method to interpret the neural network models with attention mechanism. Inspired by the heatmap visualization, we analyze the relation between classification accuracy with the attention based heatmap. An improved attention based method is also included and illustrate that a better classifier can be interpreted by the attention based heatmap.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge