Wanli Liu

IL-MCAM: An interactive learning and multi-channel attention mechanism-based weakly supervised colorectal histopathology image classification approach

Jun 07, 2022

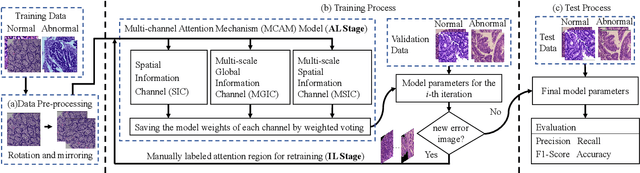

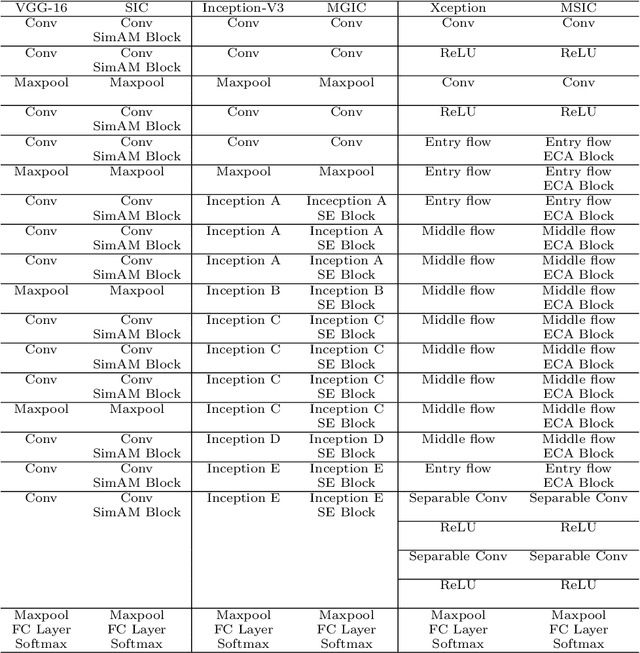

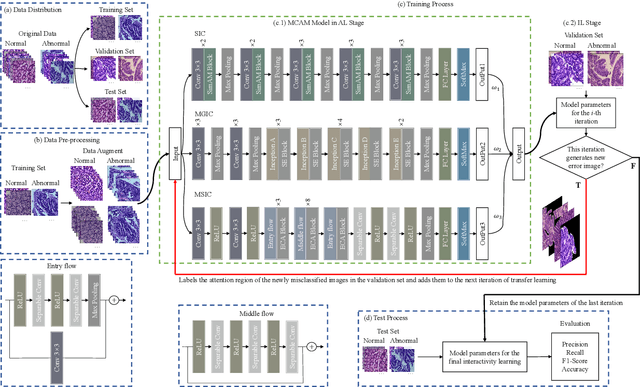

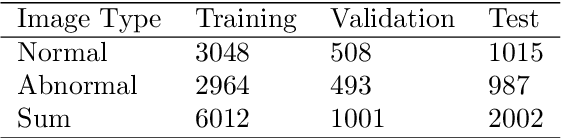

Abstract: In recent years, colorectal cancer has become one of the most significant diseases endangering human health. Deep learning methods are increasingly important for the classification of colorectal histopathology images. However, existing approaches focus more on end-to-end automatic classification by computer than on human-computer interaction. In this paper, we propose the IL-MCAM framework, which is based on attention mechanisms and interactive learning. The framework includes two stages: automatic learning (AL) and interactive learning (IL). In the AL stage, a multi-channel attention mechanism (MCAM) model containing three different attention mechanism channels and convolutional neural networks is used to extract multi-channel features for classification. In the IL stage, the framework continuously adds misclassified images to the training set in an interactive manner, which improves the classification ability of the MCAM model. We carried out a comparison experiment on our dataset and an extended experiment on the HE-NCT-CRC-100K dataset to verify the performance of the proposed IL-MCAM framework, achieving classification accuracies of 98.98% and 99.77%, respectively. In addition, we conducted an ablation experiment and an interchangeability experiment to verify the ability and interchangeability of the three channels. The experimental results show that the proposed IL-MCAM framework performs excellently on colorectal histopathological image classification tasks.
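The IL stage described in this abstract, where misclassified images are fed back into the training set, can be pictured with a toy loop. This is only a sketch under stated assumptions: the nearest-centroid classifier on 1-D values stands in for the MCAM model, and the names `train_centroids`, `interactive_learning`, and `review_set` are hypothetical, not taken from the paper.

```python
from statistics import mean


def train_centroids(samples):
    """Fit a toy nearest-centroid classifier: one mean value per label."""
    by_label = {}
    for value, label in samples:
        by_label.setdefault(label, []).append(value)
    return {label: mean(values) for label, values in by_label.items()}


def predict(centroids, value):
    """Return the label whose centroid is closest to the value."""
    return min(centroids, key=lambda label: abs(value - centroids[label]))


def interactive_learning(train_set, review_set, max_rounds=5):
    """Retrain repeatedly, adding misclassified review samples to the training set.

    Assumption: `review_set` plays the role of the images a human reviewer
    checks; the paper's actual interaction protocol is not reproduced here.
    """
    train_set = list(train_set)
    model = train_centroids(train_set)
    for _ in range(max_rounds):
        wrong = [s for s in review_set
                 if predict(model, s[0]) != s[1] and s not in train_set]
        if not wrong:
            break  # nothing new to learn from
        train_set.extend(wrong)
        model = train_centroids(train_set)
    return model, train_set


# Toy data: (feature value, label). All values are illustrative.
initial = [(0.0, "normal"), (10.0, "tumor")]
review = [(1.0, "normal"), (4.0, "tumor"), (9.0, "tumor"), (2.0, "normal")]
model, final_train = interactive_learning(initial, review)
print(len(final_train), predict(model, 4.0))
```

In this toy run the sample `(4.0, "tumor")` is misclassified by the initial model, gets added to the training set, and is classified correctly after retraining.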

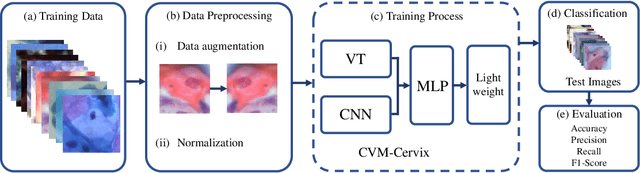

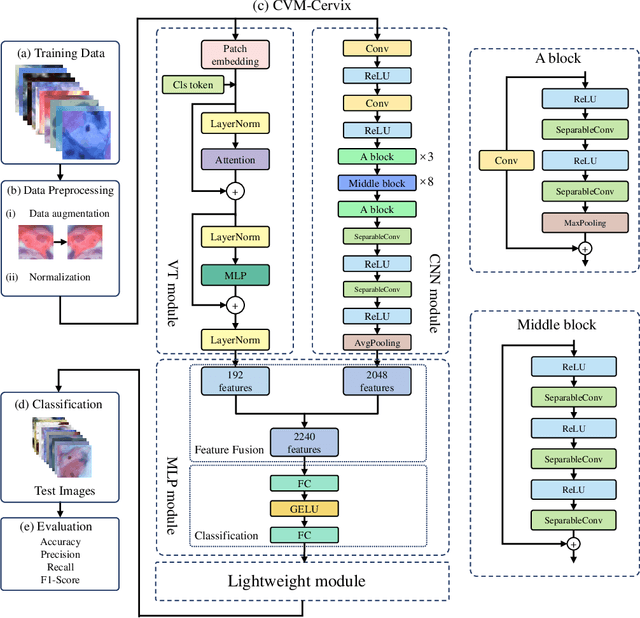

CVM-Cervix: A Hybrid Cervical Pap-Smear Image Classification Framework Using CNN, Visual Transformer and Multilayer Perceptron

Jun 02, 2022

Abstract: Cervical cancer is the seventh most common cancer worldwide and the fourth most common cancer among women. Cervical cytopathology image classification is an important method for diagnosing cervical cancer. Manual screening of cytopathology images is time-consuming and error-prone, and automatic computer-aided diagnosis systems address this problem. This paper proposes a deep learning framework called CVM-Cervix to perform cervical cell classification tasks. It can analyze Pap slides quickly and accurately. CVM-Cervix first uses a Convolutional Neural Network module and a Visual Transformer module for local and global feature extraction, respectively; a Multilayer Perceptron module then fuses the local and global features for the final classification. Experimental results show the effectiveness and potential of the proposed CVM-Cervix in the field of cervical Pap smear image classification. In addition, to meet the practical needs of clinical work, we apply lightweight post-processing to compress the model.
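The fusion step in this abstract (local CNN features and global Transformer features combined by an MLP) might look roughly like the following. It is a minimal sketch, assuming fusion by simple concatenation and a single hidden layer; the feature vectors, weights, and the function name `fuse_and_classify` are made up for illustration and do not come from CVM-Cervix.

```python
import math


def linear(x, weights, bias):
    """Dense layer: one output per weight row."""
    return [sum(w * xi for w, xi in zip(row, x)) + b
            for row, b in zip(weights, bias)]


def relu(x):
    return [max(0.0, v) for v in x]


def softmax(x):
    m = max(x)  # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in x]
    total = sum(exps)
    return [e / total for e in exps]


def fuse_and_classify(local_feat, global_feat, w1, b1, w2, b2):
    """Concatenate the two feature vectors, then apply a two-layer MLP."""
    fused = local_feat + global_feat  # list concatenation
    hidden = relu(linear(fused, w1, b1))
    return softmax(linear(hidden, w2, b2))


# Hypothetical 2-D local and global features, and hand-picked weights.
local_feat = [1.0, 0.0]
global_feat = [0.0, 2.0]
w1 = [[1.0, 0.0, 0.0, 0.0], [0.0, 0.0, 0.0, 1.0]]
b1 = [0.0, 0.0]
w2 = [[1.0, 0.0], [0.0, 1.0]]
b2 = [0.0, 0.0]
probs = fuse_and_classify(local_feat, global_feat, w1, b1, w2, b2)
print(probs)
```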

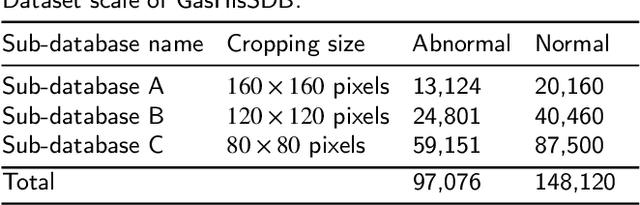

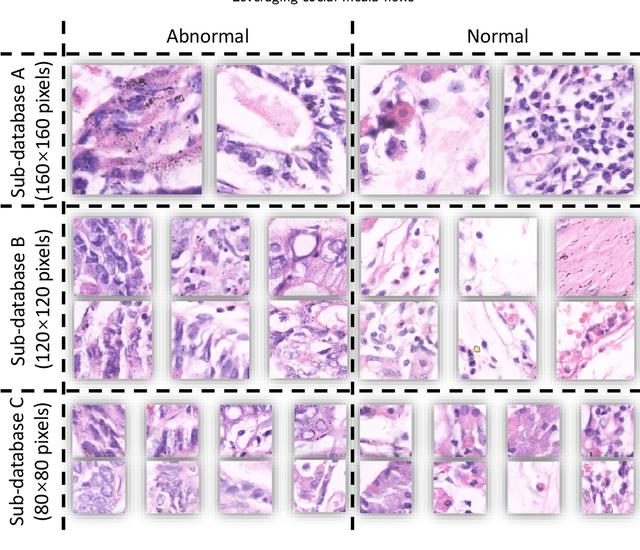

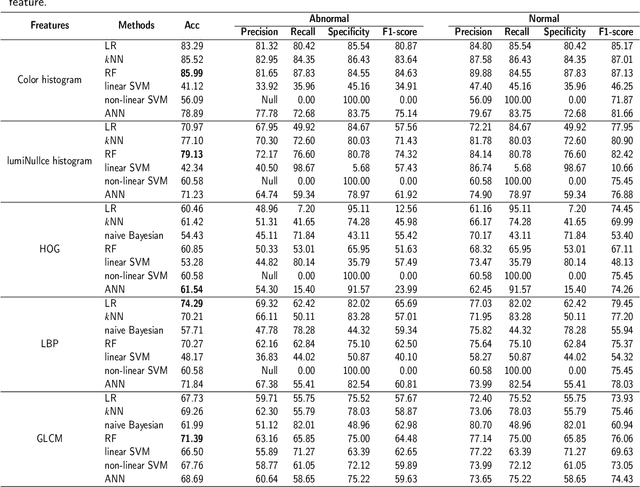

A Comparative Study of Gastric Histopathology Sub-size Image Classification: from Linear Regression to Visual Transformer

May 25, 2022

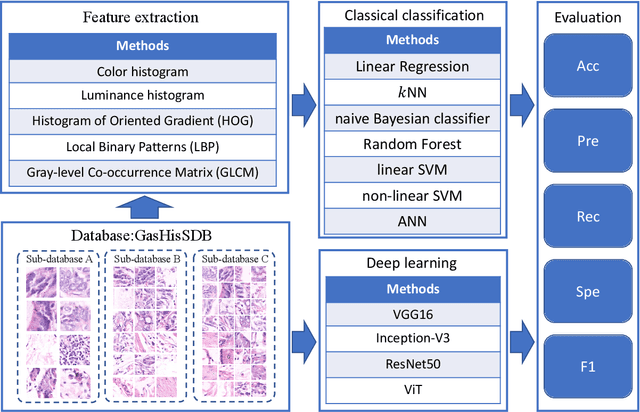

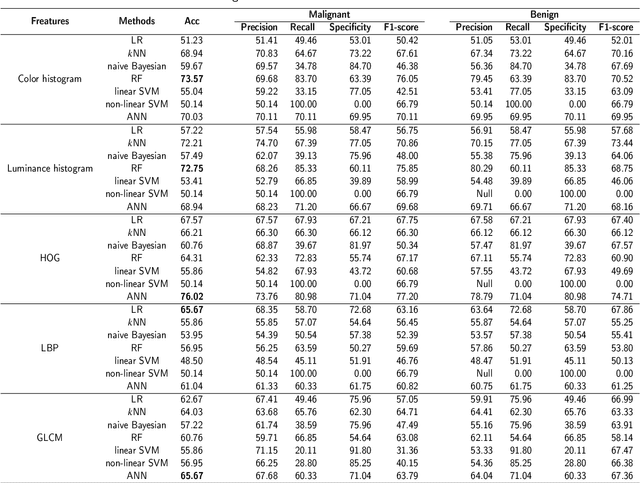

Abstract: Gastric cancer is the fifth most common cancer in the world and the fourth most deadly. Early detection guides the treatment of gastric cancer. Computer technology has advanced rapidly and now assists physicians in diagnosing pathological images of gastric cancer. Ensemble learning is a way to improve the accuracy of algorithms, and finding multiple learning models that complement one another is its basis. This experimental platform explores the complementarity of sub-size pathology image classifiers when machine performance is insufficient. We choose seven classical machine learning classifiers and four deep learning classifiers for classification experiments on the GasHisSDB database. For the classical machine learning algorithms, five different image virtual features are extracted and matched with multiple classifier algorithms. For deep learning, we choose three convolutional neural network classifiers and a novel Transformer-based classifier. The experimental platform, on which a large number of classical machine learning and deep learning methods are run, demonstrates that different classifiers perform differently on GasHisSDB. Among the classical machine learning models, some classifiers classify the Abnormal category very well, while others excel on the Normal category. Among the deep learning models, several are also complementary. When machine performance is insufficient, suitable classifiers can be selected for ensemble learning. This experimental platform demonstrates that multiple classifiers are indeed complementary and can improve the efficiency of ensemble learning. This can better assist doctors in diagnosis, improve the detection of gastric cancer, and increase the cure rate.
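The idea of complementary classifiers can be illustrated with a toy majority vote. This is a sketch only: the abstract does not state which ensemble rule is used, so majority voting is an assumption here, and the three prediction lists are invented to show classifiers that err on different samples.

```python
from collections import Counter


def majority_vote(predictions):
    """Combine per-classifier label lists into one list by majority vote."""
    return [Counter(votes).most_common(1)[0][0] for votes in zip(*predictions)]


def accuracy(predicted, truth):
    return sum(p == t for p, t in zip(predicted, truth)) / len(truth)


# Hypothetical ground truth and predictions; each classifier is wrong
# on a different sample, so their errors do not overlap.
truth = ["abnormal", "abnormal", "normal", "normal"]
clf_a = ["abnormal", "abnormal", "abnormal", "normal"]
clf_b = ["abnormal", "normal", "normal", "normal"]
clf_c = ["normal", "abnormal", "normal", "normal"]

ensemble = majority_vote([clf_a, clf_b, clf_c])
print(accuracy(clf_a, truth), accuracy(ensemble, truth))
```

Each individual classifier reaches 0.75 accuracy on this toy data, while the vote recovers all four labels because no two classifiers make the same mistake.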

EBHI: A New Enteroscope Biopsy Histopathological H&E Image Dataset for Image Classification Evaluation

Feb 17, 2022

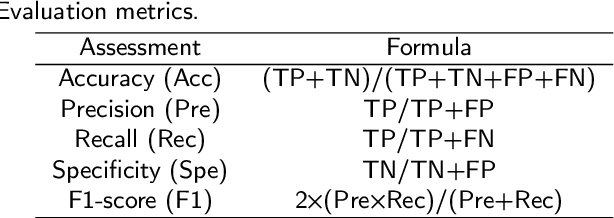

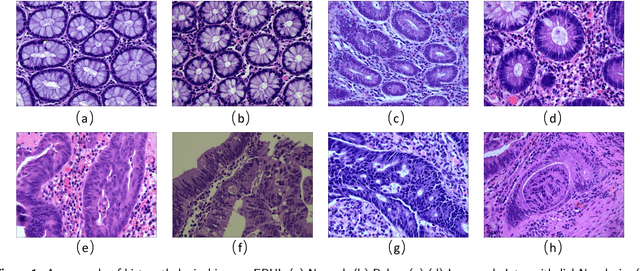

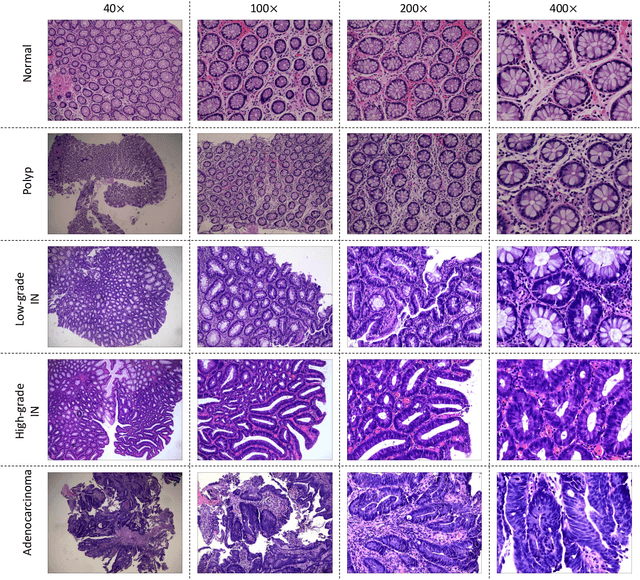

Abstract: Background and purpose: Colorectal cancer has become the third most common cancer worldwide, accounting for approximately 10% of cancer patients. Early detection of the disease is important for the treatment of colorectal cancer patients. Histopathological examination is the gold standard for screening colorectal cancer. However, the current lack of histopathological image datasets of colorectal cancer, especially enteroscope biopsies, hinders the accurate evaluation of computer-aided diagnosis techniques. Methods: A new publicly available Enteroscope Biopsy Histopathological H&E Image Dataset (EBHI) is published in this paper. To demonstrate the effectiveness of the EBHI dataset, we use several machine learning, convolutional neural network and novel transformer-based classifiers for experimentation and evaluation on images at 200x magnification. Results: Experimental results show that deep learning methods perform well on the EBHI dataset. Traditional machine learning methods achieve a maximum accuracy of 76.02% and deep learning methods achieve a maximum accuracy of 95.37%. Conclusion: To the best of our knowledge, EBHI is the first publicly available colorectal histopathology enteroscope biopsy dataset with four magnifications and five types of images of tumor differentiation stages, totaling 5532 images. We believe that EBHI could attract researchers to explore new classification algorithms for the automated diagnosis of colorectal cancer, which could help physicians and patients in clinical settings.

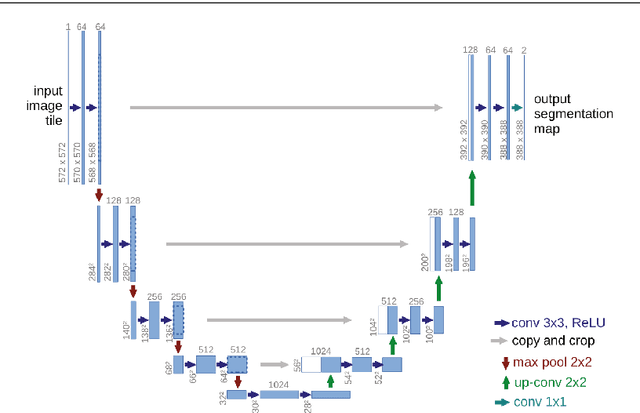

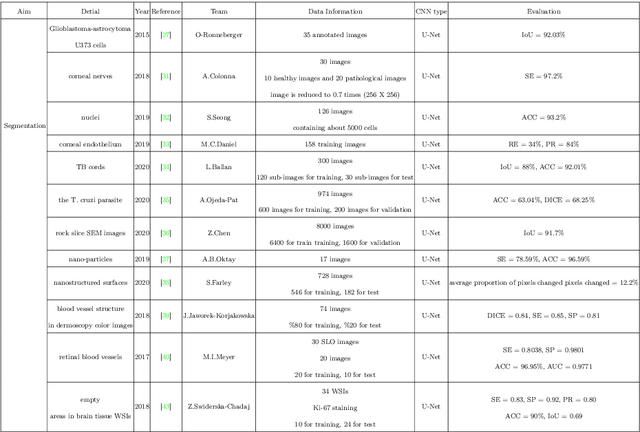

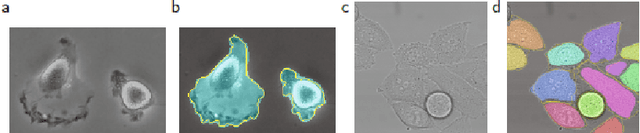

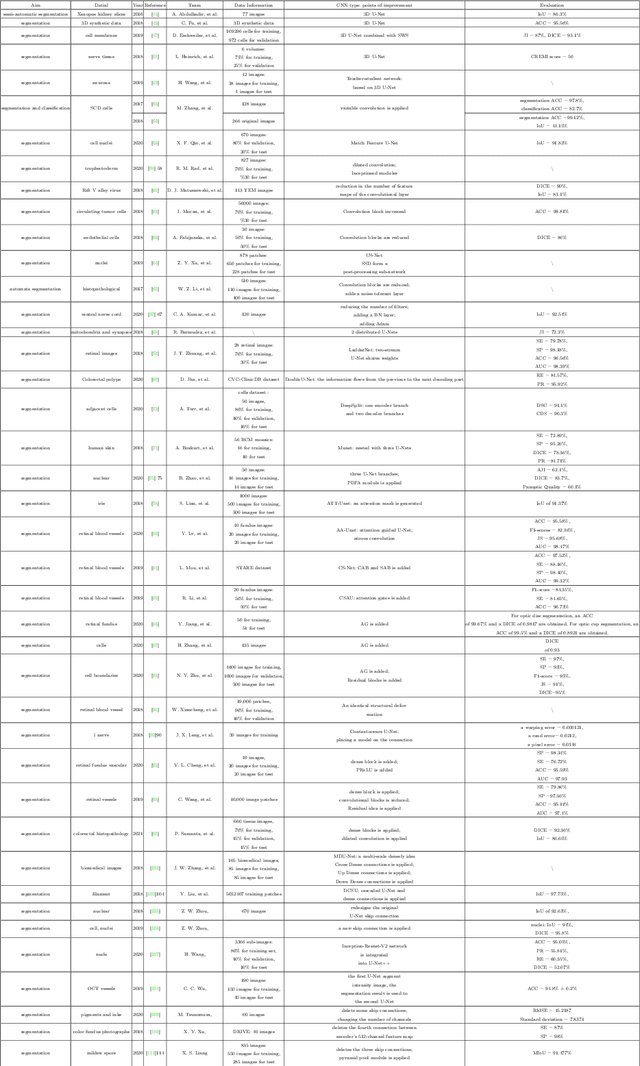

A State-of-the-art Survey of U-Net in Microscopic Image Analysis: from Simple Usage to Structure Mortification

Feb 14, 2022

Abstract: Image analysis technology is used to overcome the shortcomings of traditional manual methods in disease analysis, wastewater treatment, and environmental change monitoring, and convolutional neural networks (CNN) play an important role in microscopic image analysis. Image segmentation is an important step in detection, tracking, monitoring, feature extraction, modeling and analysis, and U-Net has been increasingly applied to microscopic image segmentation. This paper comprehensively reviews the development history of U-Net and analyzes the research results of the various segmentation methods proposed since its emergence. First, this paper summarizes the improved methods of U-Net and then lists the significance of existing image segmentation techniques and the improvements introduced over the years. Finally, focusing on the different improvement strategies for U-Net in different papers, the related work for each application target is reviewed by detailed technical category to facilitate future research. Researchers can clearly see how the technology has developed and spread, and keep up with future trends in this interdisciplinary field.

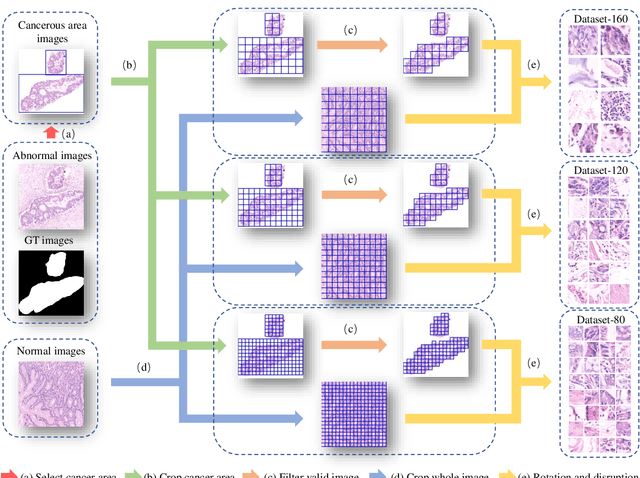

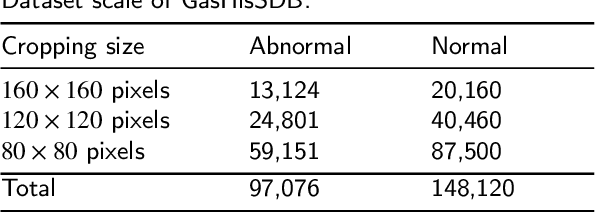

A New Gastric Histopathology Subsize Image Database (GasHisSDB) for Classification Algorithm Test: from Linear Regression to Visual Transformer

Jun 18, 2021

Abstract: GasHisSDB is a New Gastric Histopathology Subsize Image Database with a total of 245196 images. GasHisSDB is divided into a 160×160-pixel sub-database, a 120×120-pixel sub-database and an 80×80-pixel sub-database. GasHisSDB is built for evaluating image classification. To show that methods from different periods in the field of image classification perform differently on GasHisSDB, we select a variety of classifiers for evaluation. Seven classical machine learning classifiers, three CNN classifiers and a novel transformer-based classifier are selected for testing on image classification tasks. GasHisSDB is available at the URL: https://github.com/NEUhwm/GasHisSDB.git.
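To give a feel for the three sub-image sizes, the sketch below computes non-overlapping tile boxes for a source image. It is illustrative only: the abstract does not describe how the sub-images were produced, so the non-overlapping grid, the dropping of partial edge tiles, and the 2048×2048 source size are all assumptions, and `tile_boxes` is a hypothetical helper.

```python
def tile_boxes(width, height, tile):
    """Return (left, top, right, bottom) boxes for non-overlapping square tiles.

    Partial tiles at the right and bottom edges are dropped (an assumption).
    """
    return [(left, top, left + tile, top + tile)
            for top in range(0, height - tile + 1, tile)
            for left in range(0, width - tile + 1, tile)]


# Assumed source image size; not stated in the abstract.
WIDTH, HEIGHT = 2048, 2048
for size in (160, 120, 80):
    boxes = tile_boxes(WIDTH, HEIGHT, size)
    print(size, len(boxes))
```

The returned boxes use the (left, top, right, bottom) convention, so they could be passed to an image library's crop routine if one is available.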

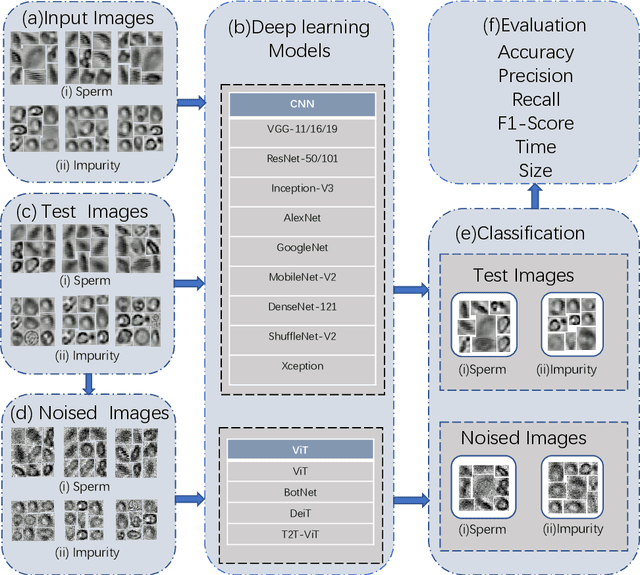

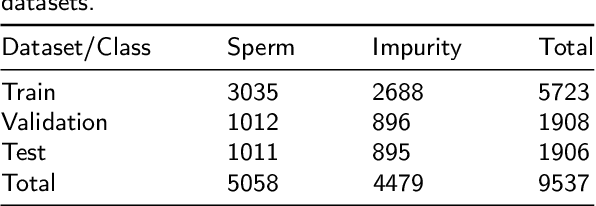

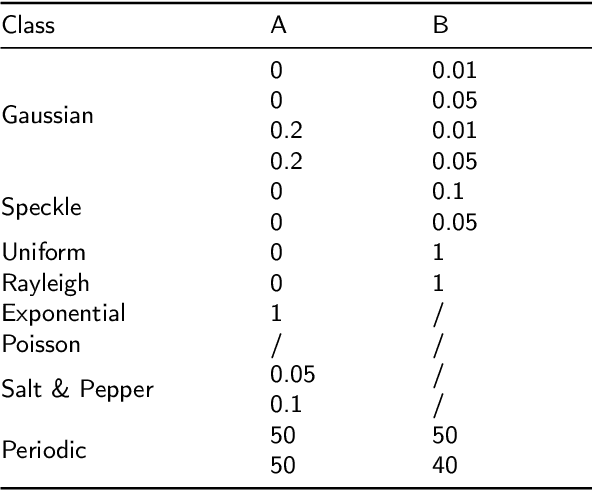

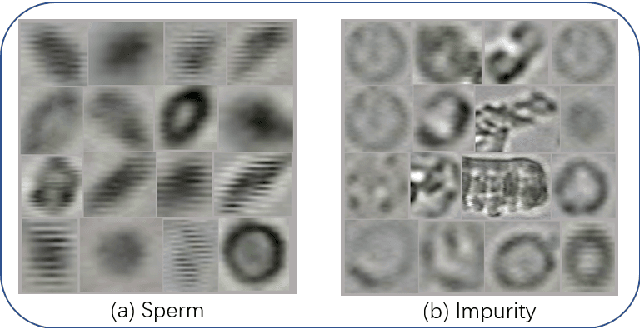

A Comparison for Anti-noise Robustness of Deep Learning Classification Methods on a Tiny Object Image Dataset: from Convolutional Neural Network to Visual Transformer and Performer

Jun 09, 2021

Abstract: Image classification has achieved unprecedented advances with the rapid development of deep learning. However, the classification of tiny object images is still not well investigated. In this paper, we first briefly review the development of Convolutional Neural Networks and Visual Transformers in deep learning, and introduce the sources and development of conventional noises and adversarial attacks. Then we use various Convolutional Neural Network and Visual Transformer models to conduct a series of experiments on an image dataset of tiny objects (sperms and impurities), and compare various evaluation metrics in the experimental results to identify a model with stable performance. Finally, we discuss the problems in the classification of tiny objects and offer an outlook on future work in this area.
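As a rough illustration of the conventional noises mentioned in this abstract, the sketch below perturbs a small grayscale image with Gaussian and salt-and-pepper noise. The noise types actually used in the paper are not listed in the abstract, so these two are assumptions chosen as common examples; adversarial attacks are not covered, and the function names and parameters are hypothetical.

```python
import random


def add_gaussian_noise(image, sigma, seed=0):
    """Add zero-mean Gaussian noise to each pixel and clip to [0, 255]."""
    rng = random.Random(seed)
    return [[min(255, max(0, round(p + rng.gauss(0, sigma)))) for p in row]
            for row in image]


def add_salt_and_pepper(image, amount, seed=0):
    """Set roughly `amount` of the pixels to 0 (pepper) or 255 (salt)."""
    rng = random.Random(seed)
    noisy = []
    for row in image:
        new_row = []
        for p in row:
            r = rng.random()
            if r < amount / 2:
                new_row.append(0)      # pepper
            elif r < amount:
                new_row.append(255)    # salt
            else:
                new_row.append(p)      # unchanged
        noisy.append(new_row)
    return noisy


# A tiny 3x3 grayscale image with illustrative values.
image = [[10, 120, 200], [30, 128, 250], [0, 64, 255]]
gaussian = add_gaussian_noise(image, sigma=15.0)
salt_pepper = add_salt_and_pepper(image, amount=0.2)
print(gaussian)
print(salt_pepper)
```

A robustness comparison would then evaluate a trained classifier on the clean and noisy versions of the same test images and compare the metrics.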

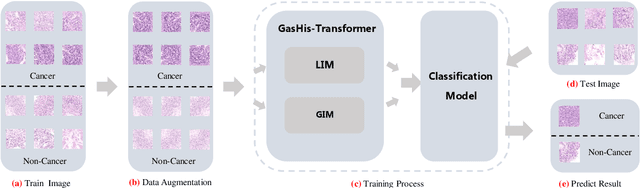

GasHis-Transformer: A Multi-scale Visual Transformer Approach for Gastric Histopathology Image Classification

May 25, 2021

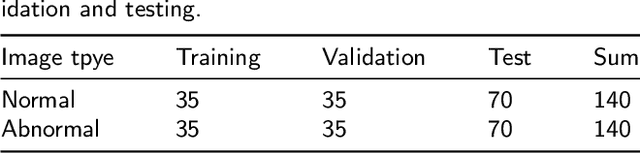

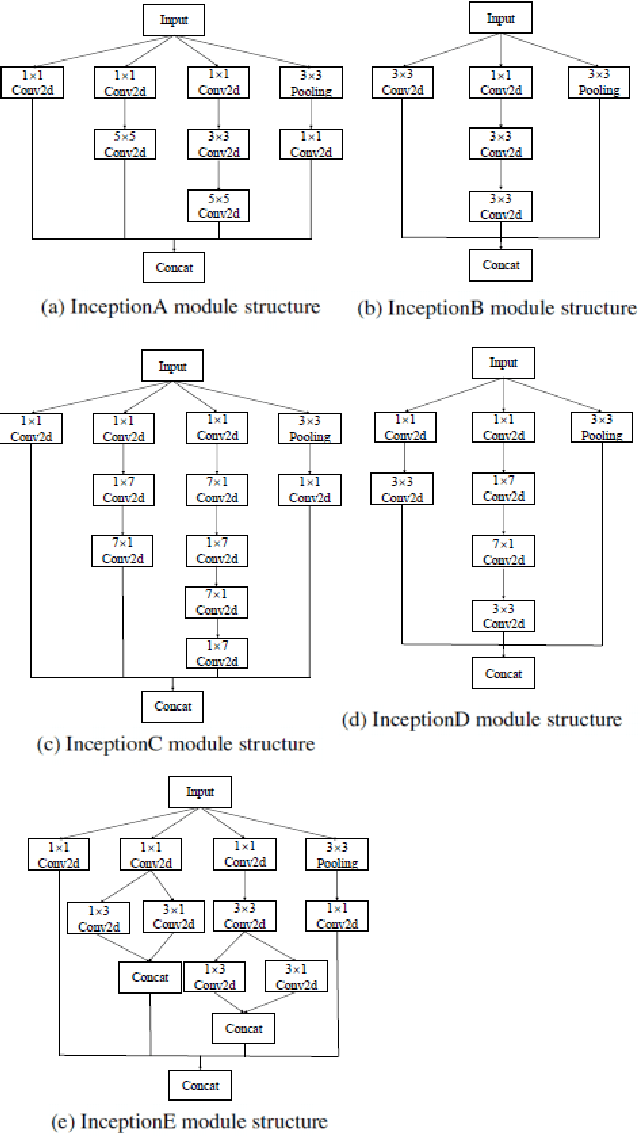

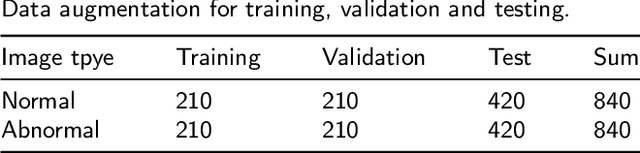

Abstract: Existing deep learning methods for the diagnosis of gastric cancer commonly use convolutional neural networks (CNN). Recently, the Visual Transformer (VT) has attracted major attention because of its performance and efficiency, but its applications are mostly in the field of computer vision. In this paper, a multi-scale visual transformer model, referred to as GasHis-Transformer, is proposed for gastric histopathology image classification (GHIC), which enables the automatic classification of microscopic gastric images into abnormal and normal cases. The GasHis-Transformer model consists of two key modules, a global information module (GIM) and a local information module (LIM), to extract pathological features effectively. In our experiments, the GasHis-Transformer model is applied to a public hematoxylin and eosin (H&E) stained gastric histopathology dataset with 280 abnormal or normal images, giving precision, recall, F1-score, and accuracy on the testing set of 98.0%, 100.0%, 96.0% and 98.0%, respectively. Furthermore, a critical study is conducted to evaluate the robustness of GasHis-Transformer by adding ten different types of noise, including adversarial attacks and traditional image noise. In addition, a clinically meaningful study is executed to test the gastric cancer identification of GasHis-Transformer with 420 abnormal images, achieving 96.2% accuracy. Finally, a comparative study is performed to test the generalizability with both H&E and immunohistochemical (IHC) stained images on a lymphoma image dataset, a breast cancer dataset and a cervical cancer dataset, producing comparable F1-scores (85.6%, 82.8% and 65.7%, respectively) and accuracies (83.9%, 89.4% and 65.7%, respectively). In conclusion, GasHis-Transformer demonstrates high classification performance and shows significant potential in histopathology image analysis.
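For readers less familiar with the four metrics reported in this abstract, the sketch below computes them from binary confusion-matrix counts using the standard definitions. The counts are hypothetical and are not taken from the paper's experiments; `binary_metrics` is an illustrative helper name.

```python
def binary_metrics(tp, fp, fn, tn):
    """Return precision, recall, F1-score and accuracy from confusion counts."""
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    f1 = 2 * precision * recall / (precision + recall)
    accuracy = (tp + tn) / (tp + fp + fn + tn)
    return {"precision": precision, "recall": recall,
            "f1": f1, "accuracy": accuracy}


# Hypothetical counts with "abnormal" as the positive class.
metrics = binary_metrics(tp=45, fp=5, fn=3, tn=47)
for name, value in metrics.items():
    print(f"{name}: {value:.4f}")
```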

Is Image Size Important? A Robustness Comparison of Deep Learning Methods for Multi-scale Cell Image Classification Tasks: from Convolutional Neural Networks to Visual Transformers

May 16, 2021

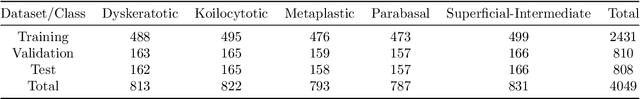

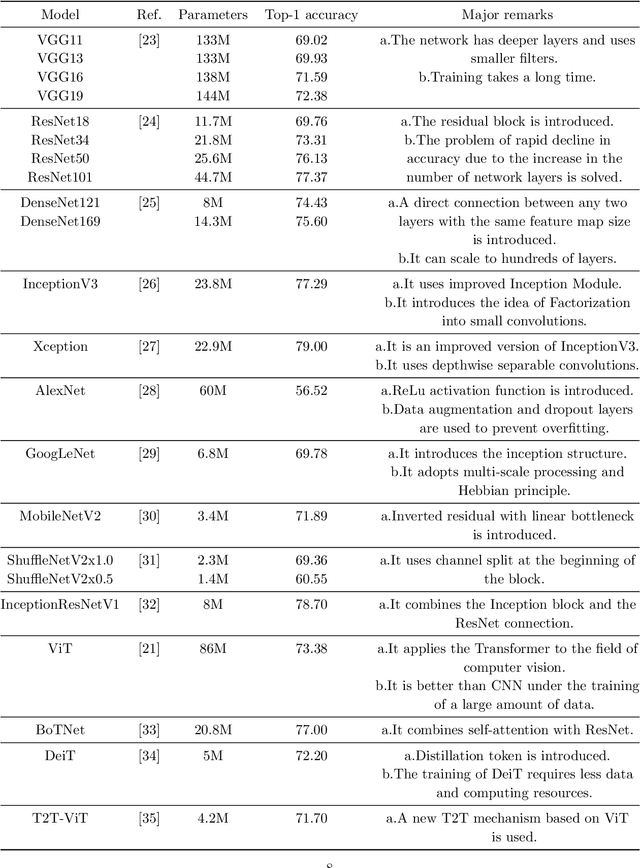

Abstract: Cervical cancer is a very common and fatal cancer in women, but it can be prevented through early examination and treatment. Cytopathology images are often used to screen for cancer. However, because manual screening of large numbers of images is prone to human error, computer-aided diagnosis systems based on deep learning have been developed. Deep learning methods usually require inputs of a consistent size, but clinical medical images vary in size. Directly resizing an image loses internal information, which seems unreasonable; yet many studies resize images directly and still obtain robust results. To find a reasonable explanation, 22 deep learning models are used to process images of different scales, and experiments are conducted on the SIPaKMeD dataset. The conclusion is that deep learning methods are very robust to changes in image size. This conclusion is also validated on the Herlev dataset.
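The information loss from direct resizing mentioned in this abstract can be seen in a tiny example. This is a sketch assuming nearest-neighbor interpolation, which the abstract does not specify; `resize_nearest` is a hypothetical helper, and real pipelines would normally use an image library instead.

```python
def resize_nearest(image, new_h, new_w):
    """Resize a 2-D list of pixels with nearest-neighbor sampling."""
    old_h, old_w = len(image), len(image[0])
    return [[image[r * old_h // new_h][c * old_w // new_w]
             for c in range(new_w)]
            for r in range(new_h)]


# A 4x4 image whose pixel values are simply 0..15.
image = [[0, 1, 2, 3],
         [4, 5, 6, 7],
         [8, 9, 10, 11],
         [12, 13, 14, 15]]

small = resize_nearest(image, 2, 2)      # keeps 4 of the 16 pixels
restored = resize_nearest(small, 4, 4)   # upscaling cannot recover the rest
print(small)
print(restored)
```

Downscaling to 2x2 keeps only one pixel from each 2x2 neighborhood, so resizing back to 4x4 yields blocks of repeated values rather than the original image.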
