Vincent Schorp

ORBIT-Surgical: An Open-Simulation Framework for Learning Surgical Augmented Dexterity

Apr 24, 2024

Abstract: Physics-based simulations have accelerated progress in robot learning for driving, manipulation, and locomotion. Yet, a fast, accurate, and robust surgical simulation environment remains a challenge. In this paper, we present ORBIT-Surgical, a physics-based surgical robot simulation framework with photorealistic rendering in NVIDIA Omniverse. We provide 14 benchmark surgical tasks for the da Vinci Research Kit (dVRK) and Smart Tissue Autonomous Robot (STAR) which represent common subtasks in surgical training. ORBIT-Surgical leverages GPU parallelization to train reinforcement learning and imitation learning algorithms to facilitate study of robot learning to augment human surgical skills. ORBIT-Surgical also facilitates realistic synthetic data generation for active perception tasks. We demonstrate ORBIT-Surgical sim-to-real transfer of learned policies onto a physical dVRK robot. Project website: orbit-surgical.github.io

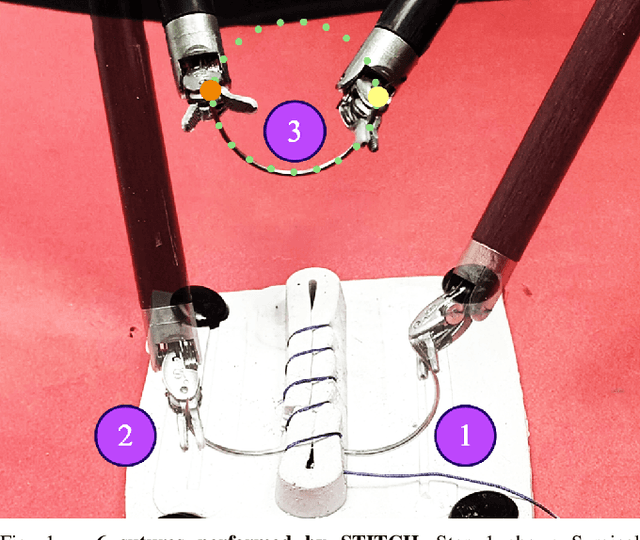

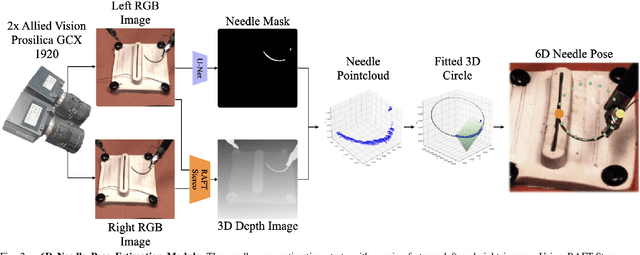

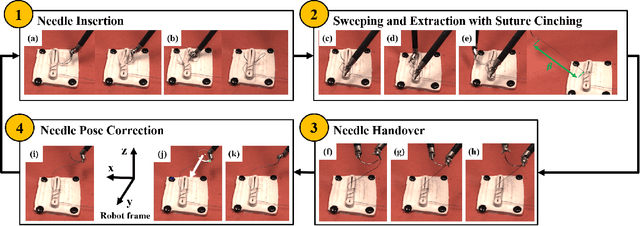

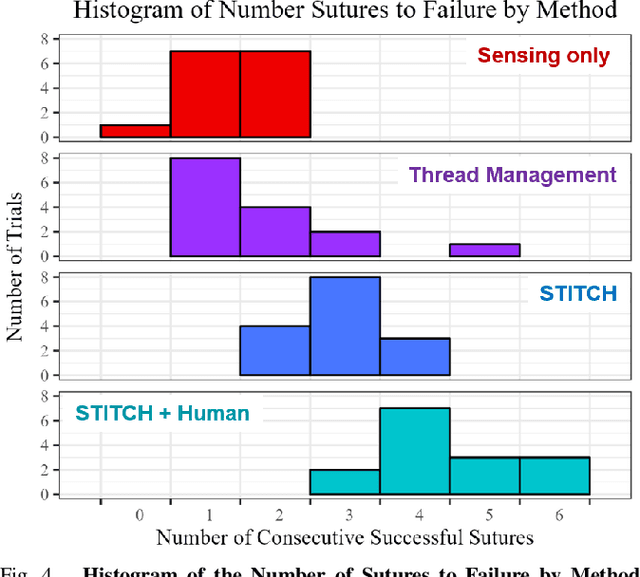

STITCH: Augmented Dexterity for Suture Throws Including Thread Coordination and Handoffs

Apr 08, 2024

Abstract: We present STITCH: an augmented dexterity pipeline that performs Suture Throws Including Thread Coordination and Handoffs. STITCH iteratively performs needle insertion, thread sweeping, needle extraction, suture cinching, needle handover, and needle pose correction with failure recovery policies. We introduce a novel visual 6D needle pose estimation framework using a stereo camera pair and new suturing motion primitives. We compare STITCH to baselines, including a proprioception-only policy and a policy without visual servoing. In physical experiments across 15 trials, STITCH achieves an average of 2.93 sutures without human intervention and 4.47 sutures with human intervention. See https://sites.google.com/berkeley.edu/stitch for code and supplemental materials.

Self-Supervised Learning for Interactive Perception of Surgical Thread for Autonomous Suture Tail-Shortening

Jul 13, 2023

Abstract: Accurate 3D sensing of suturing thread is a challenging problem in automated surgical suturing because of the high state-space complexity, the thinness and deformability of the thread, and the possibility of occlusion by the grippers and tissue. In this work we present a method for tracking surgical thread in 3D which is robust to occlusions and complex thread configurations, and apply it to autonomously perform the surgical suture "tail-shortening" task: pulling thread through tissue until a desired "tail" length remains exposed. The method utilizes a learned 2D surgical thread detection network to segment suturing thread in RGB images. It then identifies the thread path in 2D and reconstructs the thread in 3D as a NURBS spline by triangulating the detections from two stereo cameras. Once a 3D thread model is initialized, the method tracks the thread across subsequent frames. Experiments suggest the method achieves a 1.33 pixel average reprojection error on challenging single-frame 3D thread reconstructions, and a 0.84 pixel average reprojection error on two tracking sequences. On the tail-shortening task, it accomplishes a 90% success rate across 20 trials. Supplemental materials are available at https://sites.google.com/berkeley.edu/autolab-surgical-thread/ .
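The stereo triangulation step and the reprojection-error metric named in the abstract above can be illustrated with a minimal sketch. It is not the authors' implementation. It assumes an idealized, rectified pinhole stereo pair with a shared focal length `f` (in pixels), principal point `(cx, cy)`, and horizontal `baseline`. All function names and numeric values are hypothetical and chosen only for illustration.

```python
import math


def triangulate_rectified(left_px, right_px, f, baseline, cx, cy):
    """Triangulate one 3D point (left-camera frame) from a rectified stereo pair."""
    (ul, vl), (ur, vr) = left_px, right_px
    disparity = ul - ur
    if disparity <= 0:
        raise ValueError("non-positive disparity: cannot triangulate")
    z = f * baseline / disparity          # depth from disparity
    x = (ul - cx) * z / f
    v = 0.5 * (vl + vr)                   # rows should agree after rectification; average out noise
    y = (v - cy) * z / f
    return (x, y, z)


def project(point, f, cx, cy, x_offset=0.0):
    """Pinhole projection; x_offset shifts the camera center along the baseline."""
    x, y, z = point
    return (f * (x - x_offset) / z + cx, f * y / z + cy)


def mean_reprojection_error(points, left_pxs, right_pxs, f, baseline, cx, cy):
    """Average pixel distance between detections and reprojected 3D points, over both views."""
    errors = []
    for p, l, r in zip(points, left_pxs, right_pxs):
        errors.append(math.dist(project(p, f, cx, cy), l))
        errors.append(math.dist(project(p, f, cx, cy, x_offset=baseline), r))
    return sum(errors) / len(errors)


# Hypothetical camera parameters and one pair of matched thread detections.
F, BASELINE, CX, CY = 1000.0, 0.005, 640.0, 360.0
left_detection, right_detection = (740.0, 161.0), (690.0, 159.0)

point_3d = triangulate_rectified(left_detection, right_detection, F, BASELINE, CX, CY)
error_px = mean_reprojection_error(
    [point_3d], [left_detection], [right_detection], F, BASELINE, CX, CY
)
```

In this toy case the two detections disagree by two pixel rows, so the triangulated point reprojects about one pixel away from each detection. A full thread reconstruction would repeat this for every sampled point along the thread and would generally need calibrated, possibly non-rectified cameras.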
