Thanh Trung Huynh

Federated Prompt-Tuning with Heterogeneous and Incomplete Multimodal Client Data

Feb 06, 2026

Abstract:This paper introduces a generalized federated prompt-tuning framework for practical scenarios where local datasets are multi-modal and exhibit different distributional patterns of missing features at the input level. The proposed framework bridges the gap between federated learning and multi-modal prompt-tuning, which have traditionally focused on either uni-modal or centralized data. A key challenge in this setting arises from the lack of semantic alignment between prompt instructions that encode similar distributional patterns of missing data across different clients. To address this, our framework introduces specialized client-tuning and server-aggregation designs that simultaneously optimize, align, and aggregate prompt-tuning instructions across clients and data modalities. This allows prompt instructions to complement one another and be combined effectively. Extensive evaluations on diverse multimodal benchmark datasets demonstrate that our work consistently outperforms state-of-the-art (SOTA) baselines.

A Multi-agent Text2SQL Framework using Small Language Models and Execution Feedback

Dec 21, 2025

Abstract:Text2SQL, the task of generating SQL queries from natural language text, is a critical challenge in data engineering. Recently, Large Language Models (LLMs) have demonstrated superior performance for this task due to their advanced comprehension and generation capabilities. However, privacy and cost considerations prevent companies from using Text2SQL solutions based on external LLMs offered as a service. Rather, small LLMs (SLMs) that are openly available and can be hosted in-house are adopted. These SLMs, in turn, lack the generalization capabilities of larger LLMs, which impairs their effectiveness for complex tasks such as Text2SQL. To address these limitations, we propose MATS, a novel Text2SQL framework designed specifically for SLMs. MATS uses a multi-agent mechanism that assigns specialized roles to auxiliary agents, reducing individual workloads and fostering interaction. A training scheme based on reinforcement learning aligns these agents using feedback obtained during execution, thereby maintaining competitive performance despite a limited LLM size. Evaluation results on benchmark datasets show that MATS, deployed on a single-GPU server, yields accuracy that is on par with large-scale LLMs while using significantly fewer parameters. Our source code and data are available at https://github.com/thanhdath/mats-sql.
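
To make the notion of execution feedback concrete, the following is a minimal sketch of how feedback from running candidate queries might be collected. It is not the MATS training scheme or agent design, which the abstract does not detail. The helper names (`execute_with_feedback`, `pick_first_executable`), the toy `singer` table, and the rule of keeping the first candidate that runs and returns rows are all assumptions made for illustration.

```python
import sqlite3


def execute_with_feedback(conn, sql, max_rows=5):
    """Run one candidate query and summarize the outcome as a feedback dict.

    Hypothetical helper: the feedback format is an assumption, not MATS's.
    """
    try:
        rows = conn.execute(sql).fetchmany(max_rows)
    except sqlite3.Error as exc:
        # Syntax errors, unknown columns, etc. become a textual error signal.
        return {"ok": False, "error": str(exc), "rows": []}
    return {"ok": True, "error": None, "rows": rows}


def pick_first_executable(conn, candidates):
    """Return the first candidate that executes and yields at least one row."""
    for sql in candidates:
        feedback = execute_with_feedback(conn, sql)
        if feedback["ok"] and feedback["rows"]:
            return sql, feedback
    return None, None


def demo():
    """Build a toy in-memory database and select among two candidates."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE singer (id INTEGER PRIMARY KEY, name TEXT, age INTEGER)"
    )
    conn.executemany(
        "INSERT INTO singer (name, age) VALUES (?, ?)",
        [("Ann", 31), ("Bo", 45)],
    )
    candidates = [
        "SELECT nam FROM singer WHERE age > 40",   # misspelled column: fails
        "SELECT name FROM singer WHERE age > 40",  # executes and returns rows
    ]
    return pick_first_executable(conn, candidates)
```

Calling `demo()` returns the second candidate together with its feedback dict; the first candidate is rejected because SQLite reports an unknown column. In a learning setup, the same success or error signal could serve as a reward or as input to a correction step, though how MATS uses it is specified only in the paper itself.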

Scaling Text2SQL via LLM-efficient Schema Filtering with Functional Dependency Graph Rerankers

Dec 18, 2025

Abstract:Most modern Text2SQL systems prompt large language models (LLMs) with entire schemas (mostly column information) alongside the user's question. While effective on small databases, this approach fails on real-world schemas that exceed LLM context limits, even for commercial models. The recent Spider 2.0 benchmark exemplifies this with hundreds of tables and tens of thousands of columns, where existing systems often break. Current mitigations either rely on costly multi-step prompting pipelines or filter columns by ranking them against the user's question independently, ignoring inter-column structure. To scale existing systems, we introduce GRAST-SQL, an open-source, LLM-efficient schema filtering framework that compacts Text2SQL prompts by (i) ranking columns with a query-aware LLM encoder enriched with values and metadata, (ii) reranking inter-connected columns via a lightweight graph transformer over functional dependencies, and (iii) selecting a connectivity-preserving sub-schema with a Steiner-tree heuristic. Experiments on real datasets show that GRAST-SQL achieves near-perfect recall and higher precision than CodeS, SchemaExP, Qwen rerankers, and embedding retrievers, while maintaining sub-second median latency and scaling to schemas with 23,000+ columns. Our source code is available at https://github.com/thanhdath/grast-sql.
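
Step (iii) can be illustrated with a small sketch. The abstract does not say which Steiner-tree heuristic is used, so the code below swaps in a simple greedy one: start from one selected node and attach each remaining selected node through a BFS shortest path to the tree built so far. The graph `GRAPH`, its table-level nodes, and the function names are hypothetical; the framework described above operates over columns and functional dependencies rather than this toy join graph.

```python
from collections import deque

# Hypothetical undirected join graph: node -> neighbours (assumed for illustration).
GRAPH = {
    "orders": ["customers", "order_items"],
    "customers": ["orders", "regions"],
    "order_items": ["orders", "products"],
    "products": ["order_items", "suppliers"],
    "regions": ["customers"],
    "suppliers": ["products"],
}


def shortest_path(graph, sources, target):
    """Multi-source BFS; returns a node list from some source to target, or None."""
    parents = {s: None for s in sources}
    queue = deque(sources)
    while queue:
        node = queue.popleft()
        if node == target:
            path = []
            while node is not None:
                path.append(node)
                node = parents[node]
            return path[::-1]
        for nxt in graph.get(node, ()):
            if nxt not in parents:
                parents[nxt] = node
                queue.append(nxt)
    return None


def steiner_subschema(graph, terminals):
    """Greedy Steiner-tree heuristic: connect each terminal to the growing tree."""
    terminals = list(terminals)
    tree = {terminals[0]}
    for terminal in terminals[1:]:
        if terminal in tree:
            continue
        # sorted() only makes the BFS start order deterministic.
        path = shortest_path(graph, sorted(tree), terminal)
        if path is None:
            tree.add(terminal)  # unreachable: keep it, but it stays disconnected
        else:
            tree.update(path)   # adds the intermediate "bridge" nodes as well
    return tree
```

For example, `steiner_subschema(GRAPH, ["customers", "products"])` keeps the two selected nodes plus the bridging nodes `orders` and `order_items`, which is what makes the resulting sub-schema joinable. This greedy variant carries no optimality guarantee; it only shows why connectivity preservation adds nodes that a pure relevance ranking would drop.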

Learning Reconfigurable Representations for Multimodal Federated Learning with Missing Data

Oct 27, 2025

Abstract:Multimodal federated learning in real-world settings often encounters incomplete and heterogeneous data across clients. This results in misaligned local feature representations that limit the effectiveness of model aggregation. Unlike prior work that assumes either differing modality sets without missing input features or a shared modality set with missing features across clients, we consider a more general and realistic setting where each client observes a different subset of modalities and might also have missing input features within each modality. To address the resulting misalignment in learned representations, we propose a new federated learning framework featuring locally adaptive representations based on learnable client-side embedding controls that encode each client's data-missing patterns. These embeddings serve as reconfiguration signals that align the globally aggregated representation with each client's local context, enabling more effective use of shared information. Furthermore, the embedding controls can be algorithmically aggregated across clients with similar data-missing patterns to enhance the robustness of reconfiguration signals in adapting the global representation. Empirical results on multiple federated multimodal benchmarks with diverse data-missing patterns across clients demonstrate the efficacy of the proposed method, achieving up to 36.45% performance improvement under severe data incompleteness. The method is also supported by a theoretical analysis with an explicit performance bound that matches our empirical observations. Our source code is available at https://github.com/nmduonggg/PEPSY.

Hypergraph Diffusion for High-Order Recommender Systems

Jan 28, 2025

Abstract:Recommender systems rely on Collaborative Filtering (CF) to predict user preferences by leveraging patterns in historical user-item interactions. While traditional CF methods primarily focus on learning compact vector embeddings for users and items, graph neural network (GNN)-based approaches have emerged as a powerful alternative, utilizing the structure of user-item interaction graphs to enhance recommendation accuracy. However, existing GNN-based models, such as LightGCN and UltraGCN, often struggle with two major limitations: an inability to fully account for heterophilic interactions, where users engage with diverse item categories, and the over-smoothing problem in multi-layer GNNs, which hinders their ability to model complex, high-order relationships. To address these gaps, we introduce WaveHDNN, an innovative wavelet-enhanced hypergraph diffusion framework. WaveHDNN integrates a Heterophily-aware Collaborative Encoder, designed to capture user-item interactions across diverse categories, with a Multi-scale Group-wise Structure Encoder, which leverages wavelet transforms to effectively model localized graph structures. Additionally, cross-view contrastive learning is employed to maintain robust and consistent representations. Experiments on benchmark datasets validate the efficacy of WaveHDNN, demonstrating its superior ability to capture both heterophilic and localized structural information, leading to improved recommendation performance.

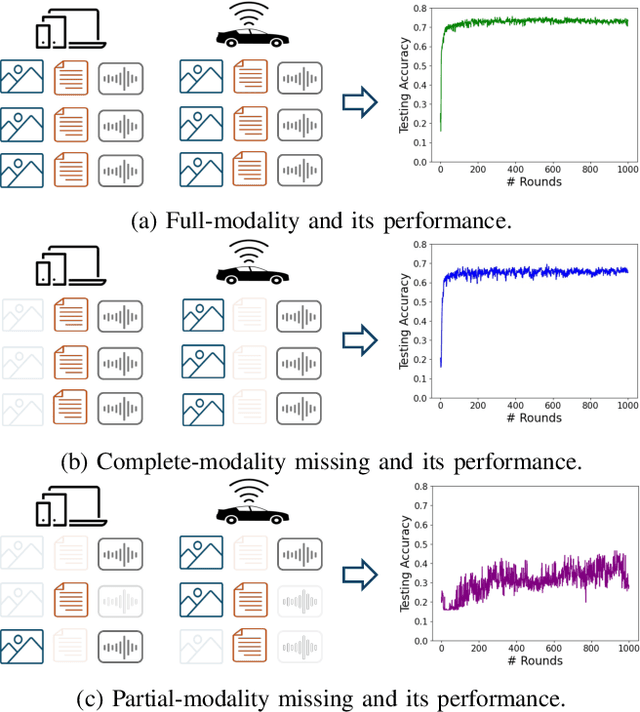

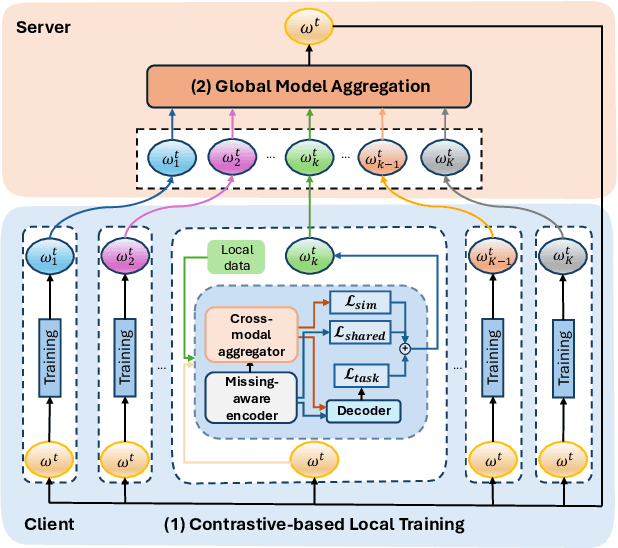

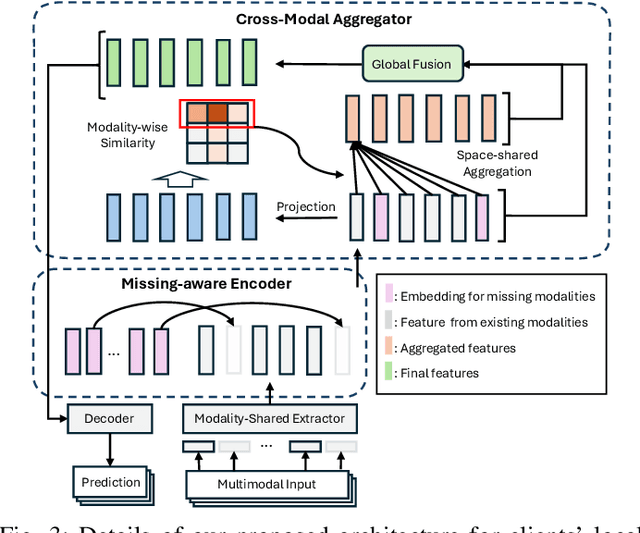

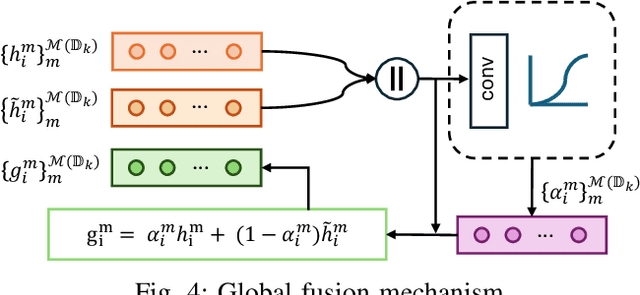

FedMAC: Tackling Partial-Modality Missing in Federated Learning with Cross-Modal Aggregation and Contrastive Regularization

Oct 04, 2024

Abstract:Federated Learning (FL) is a method for training machine learning models using distributed data sources. It ensures privacy by allowing clients to collaboratively learn a shared global model while storing their data locally. However, a significant challenge arises when dealing with missing modalities in clients' datasets, where certain features or modalities are unavailable or incomplete, leading to heterogeneous data distribution. While previous studies have addressed the issue of complete-modality missing, they fail to tackle partial-modality missing on account of severe heterogeneity among clients at an instance level, where the pattern of missing data can vary significantly from one sample to another. To tackle this challenge, this study proposes a novel framework named FedMAC, designed to address multi-modality missing under conditions of partial-modality missing in FL. Additionally, to avoid trivial aggregation of multi-modal features, we introduce contrastive-based regularization to impose additional constraints on the latent representation space. The experimental results demonstrate the effectiveness of FedMAC across various client configurations with statistical heterogeneity, outperforming baseline methods by up to 26% in severe missing scenarios, highlighting its potential as a solution for the challenge of partially missing modalities in federated systems.

Heterogeneous Hypergraph Embedding for Recommendation Systems

Jul 04, 2024

Abstract:Recent advancements in recommender systems have focused on integrating knowledge graphs (KGs) to leverage their auxiliary information. The core idea of KG-enhanced recommenders is to incorporate rich semantic information for more accurate recommendations. However, two main challenges persist: i) Neglecting complex higher-order interactions in the KG-based user-item network, potentially leading to sub-optimal recommendations, and ii) Dealing with the heterogeneous modalities of input sources, such as user-item bipartite graphs and KGs, which may introduce noise and inaccuracies. To address these issues, we present a novel Knowledge-enhanced Heterogeneous Hypergraph Recommender System (KHGRec). KHGRec captures group-wise characteristics of both the interaction network and the KG, modeling complex connections in the KG. Using a collaborative knowledge heterogeneous hypergraph (CKHG), it employs two hypergraph encoders to model group-wise interdependencies and ensure explainability. Additionally, it fuses signals from the input graphs with cross-view self-supervised learning and attention mechanisms. Extensive experiments on four real-world datasets show our model's superiority over various state-of-the-art baselines, with an average 5.18% relative improvement. Additional tests on noise resilience, missing data, and cold-start problems demonstrate the robustness of our KHGRec framework. Our model and evaluation datasets are publicly available at https://github.com/viethungvu1998/KHGRec.

Fast-FedUL: A Training-Free Federated Unlearning with Provable Skew Resilience

May 28, 2024

Abstract:Federated learning (FL) has recently emerged as a compelling machine learning paradigm, prioritizing the protection of privacy for training data. The increasing demand to address issues such as "the right to be forgotten" and combat data poisoning attacks highlights the importance of techniques, known as unlearning, which facilitate the removal of specific training data from trained FL models. Despite numerous unlearning methods proposed for centralized learning, they often prove inapplicable to FL due to fundamental differences in the operation of the two learning paradigms. Consequently, unlearning in FL remains in its early stages, presenting several challenges. Many existing unlearning solutions in FL require a costly retraining process, which can be burdensome for clients. Moreover, these methods are primarily validated through experiments, lacking theoretical assurances. In this study, we introduce Fast-FedUL, a tailored unlearning method for FL, which eliminates the need for retraining entirely. Through meticulous analysis of the target client's influence on the global model in each round, we develop an algorithm to systematically remove the impact of the target client from the trained model. In addition to presenting empirical findings, we offer a theoretical analysis delineating the upper bound of our unlearned model and the exact retrained model (the one obtained through retraining using untargeted clients). Experimental results with backdoor attack scenarios indicate that Fast-FedUL effectively removes almost all traces of the target client, while retaining the knowledge of untargeted clients (obtaining a high accuracy of up to 98% on the main task). Significantly, Fast-FedUL attains the lowest time complexity, providing a speed that is 1000 times faster than retraining. Our source code is publicly available at https://github.com/thanhtrunghuynh93/fastFedUL.

Manipulating Recommender Systems: A Survey of Poisoning Attacks and Countermeasures

Apr 23, 2024

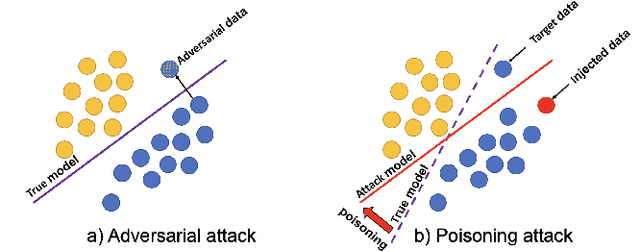

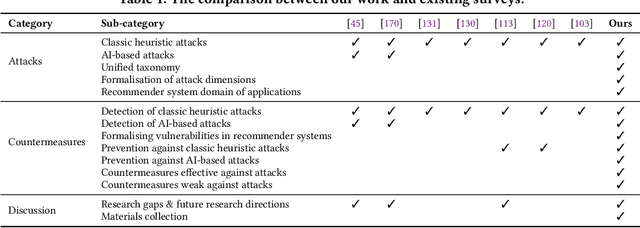

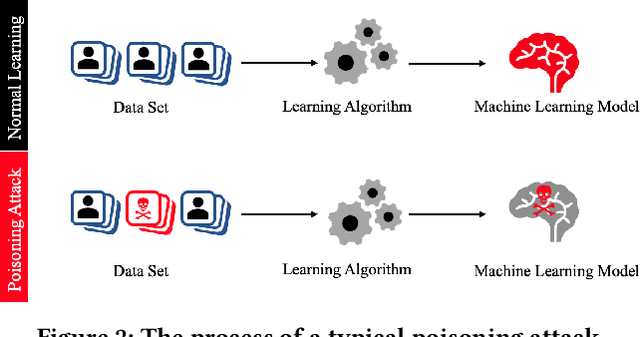

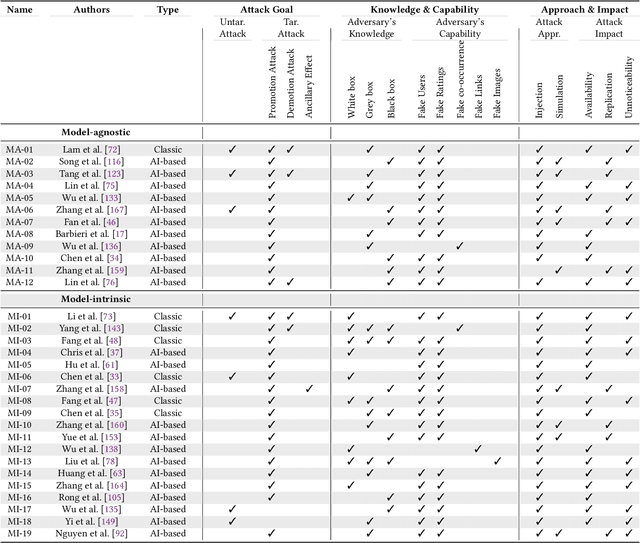

Abstract:Recommender systems have become an integral part of online services to help users locate specific information in a sea of data. However, existing studies show that some recommender systems are vulnerable to poisoning attacks, particularly those that involve learning schemes. A poisoning attack is where an adversary injects carefully crafted data into the process of training a model, with the goal of manipulating the system's final recommendations. Based on recent advancements in artificial intelligence, such attacks have gained importance recently. While numerous countermeasures to poisoning attacks have been developed, they have not yet been systematically linked to the properties of the attacks. Consequently, assessing the respective risks and potential success of mitigation strategies is difficult, if not impossible. This survey aims to fill this gap by primarily focusing on poisoning attacks and their countermeasures. This is in contrast to prior surveys that mainly focus on attacks and their detection methods. Through an exhaustive literature review, we provide a novel taxonomy for poisoning attacks, formalise its dimensions, and accordingly organise 30+ attacks described in the literature. Further, we review 40+ countermeasures to detect and/or prevent poisoning attacks, evaluating their effectiveness against specific types of attacks. This comprehensive survey should serve as a point of reference for protecting recommender systems against poisoning attacks. The article concludes with a discussion on open issues in the field and impactful directions for future research. A rich repository of resources associated with poisoning attacks is available at https://github.com/tamlhp/awesome-recsys-poisoning.

A Survey of Privacy-Preserving Model Explanations: Privacy Risks, Attacks, and Countermeasures

Mar 31, 2024

Abstract:As the adoption of explainable AI (XAI) continues to expand, the urgency to address its privacy implications intensifies. Despite a growing corpus of research in AI privacy and explainability, there is little attention on privacy-preserving model explanations. This article presents the first thorough survey about privacy attacks on model explanations and their countermeasures. Our contribution to this field comprises a thorough analysis of research papers with a connected taxonomy that facilitates the categorisation of privacy attacks and countermeasures based on the targeted explanations. This work also includes an initial investigation into the causes of privacy leaks. Finally, we discuss unresolved issues and prospective research directions uncovered in our analysis. This survey aims to be a valuable resource for the research community and offers clear insights for those new to this domain. To support ongoing research, we have established an online resource repository, which will be continuously updated with new and relevant findings. Interested readers are encouraged to access our repository at https://github.com/tamlhp/awesome-privex.
