Tao Zeng

Entropy-Aware Structural Alignment for Zero-Shot Handwritten Chinese Character Recognition

Feb 03, 2026

Abstract: Zero-shot Handwritten Chinese Character Recognition (HCCR) aims to recognize unseen characters by leveraging radical-based semantic compositions. However, existing approaches often treat characters as flat radical sequences, neglecting the hierarchical topology and the uneven information density of different components. To address these limitations, we propose an Entropy-Aware Structural Alignment Network that bridges the visual-semantic gap through information-theoretic modeling. First, we introduce an Information Entropy Prior to dynamically modulate positional embeddings via multiplicative interaction, acting as a saliency detector that prioritizes discriminative roots over ubiquitous components. Second, we construct a Dual-View Radical Tree to extract multi-granularity structural features, which are integrated via an adaptive Sigmoid-based gating network to encode both global layout and local spatial roles. Finally, a Top-K Semantic Feature Fusion mechanism is devised to augment the decoding process by utilizing the centroid of semantic neighbors, effectively rectifying visual ambiguities through feature-level consensus. Extensive experiments demonstrate that our method establishes new state-of-the-art performance, significantly outperforming existing CLIP-based baselines in the challenging zero-shot setting. Furthermore, the framework exhibits exceptional data efficiency, demonstrating rapid adaptability with minimal support samples.
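The three mechanisms named in this abstract can be illustrated with a minimal, dependency-free sketch. It is not the paper's implementation: the abstract does not define the entropy prior, the gating inputs, or the similarity measure, so the sketch assumes self-information `-log2 p(r)` as the prior, a per-dimension convex combination as the gate, and cosine similarity for neighbor ranking. All function names are hypothetical.

```python
import math
from collections import Counter


def radical_information(char_to_radicals):
    """Assumed prior: self-information -log2 p(r), where p(r) is the
    fraction of characters that contain radical r."""
    n_chars = len(char_to_radicals)
    counts = Counter(
        r for radicals in char_to_radicals.values() for r in set(radicals)
    )
    return {r: -math.log2(c / n_chars) for r, c in counts.items()}


def modulate_positions(radicals, pos_embeddings, info):
    """Multiplicative interaction: scale each positional embedding by the
    information score of the radical at that position."""
    return [[info[r] * x for x in emb] for r, emb in zip(radicals, pos_embeddings)]


def sigmoid_gate(global_feat, local_feat, gate_logits):
    """Blend two feature views with a sigmoid gate, one gate per dimension."""
    gates = [1.0 / (1.0 + math.exp(-z)) for z in gate_logits]
    return [g * a + (1.0 - g) * b for g, a, b in zip(gates, global_feat, local_feat)]


def cosine(u, v):
    """Cosine similarity; returns 0.0 if either vector has zero norm."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def top_k_centroid(query, candidates, k):
    """Mean of the k candidates most similar to the query (requires k >= 1
    and a non-empty candidate list)."""
    ranked = sorted(candidates, key=lambda c: cosine(query, c), reverse=True)[:k]
    return [sum(col) / len(ranked) for col in zip(*ranked)]
```

Under the assumed prior, a radical that appears in every character receives a score of zero and is suppressed, while rare radicals are amplified, which matches the abstract's description of prioritizing discriminative components over ubiquitous ones.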

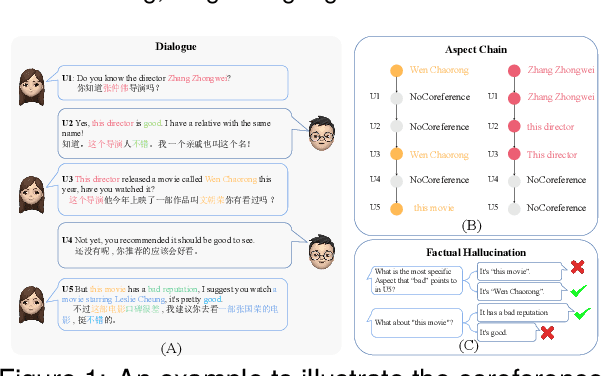

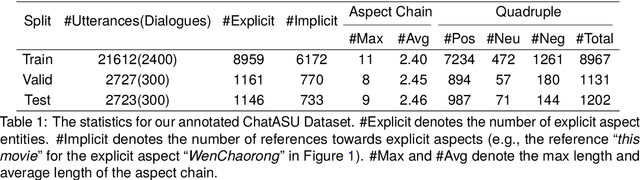

ChatASU: Evoking LLM's Reflexion to Truly Understand Aspect Sentiment in Dialogues

Mar 12, 2024

Abstract: Aspect Sentiment Understanding (ASU) in interactive scenarios (e.g., Question-Answering and Dialogue) has attracted ever-growing interest in recent years and achieved important progress. However, existing studies on interactive ASU largely ignore the coreference issue for opinion targets (i.e., aspects), even though this phenomenon is ubiquitous in interactive scenarios, especially dialogues, which limits ASU performance. Recently, large language models (LLMs) have shown a powerful ability to integrate various NLP tasks with the chat paradigm. Accordingly, this paper proposes a new Chat-based Aspect Sentiment Understanding (ChatASU) task, aiming to explore LLMs' ability to understand aspect sentiments in dialogue scenarios. In particular, the ChatASU task introduces a sub-task, the Aspect Chain Reasoning (ACR) task, to address the aspect coreference issue. On this basis, we propose a Trusted Self-reflexion Approach (TSA) with ChatGLM as the backbone for ChatASU. Specifically, TSA treats the ACR task as an auxiliary task to boost the performance of the primary ASU task, and further integrates trusted learning into reflexion mechanisms to alleviate the factual hallucination problem intrinsic to LLMs. Furthermore, a high-quality ChatASU dataset is annotated to evaluate TSA, and extensive experiments show that our proposed TSA can significantly outperform several state-of-the-art baselines, justifying the effectiveness of TSA for ChatASU and the importance of considering the coreference and hallucination issues in ChatASU.

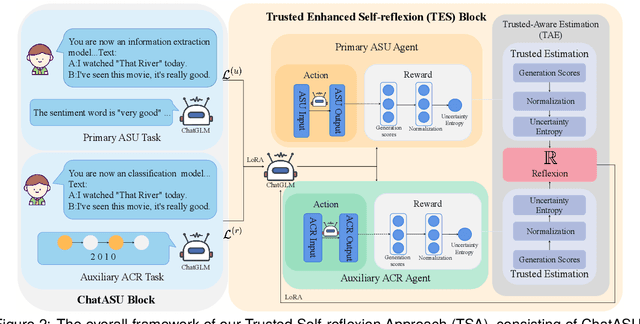

Fedlearn-Algo: A flexible open-source privacy-preserving machine learning platform

Jul 30, 2021

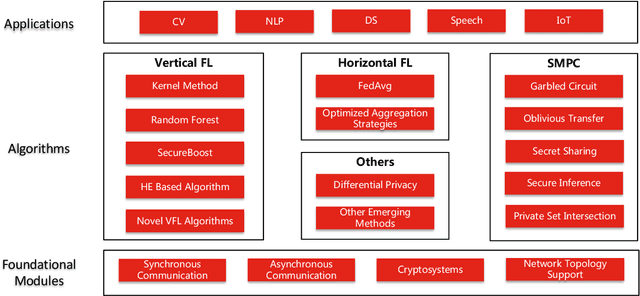

Abstract: In this paper, we present Fedlearn-Algo, an open-source privacy-preserving machine learning platform. We use this platform to demonstrate our research and development results on privacy-preserving machine learning algorithms. As the first batch of novel FL algorithm examples, we release a vertical federated kernel binary classification model and a vertical federated random forest model. In our practice, both have proven more efficient than existing vertical federated learning models. Besides the novel FL algorithm examples, we also release a machine communication module. Its uniform data transfer interface supports transferring widely used data formats between machines. We will maintain this platform by adding more functional modules and algorithm examples. The code is available at https://github.com/fedlearnAI/fedlearn-algo.

BioNavi-NP: Biosynthesis Navigator for Natural Products

May 26, 2021

Abstract: Nature, a synthetic master, creates more than 300,000 natural products (NPs), which are the major constituents of FDA-approved drugs owing to the vast chemical space of NPs. To date, there are fewer than 30,000 validated NP compounds involved in about 33,000 known enzyme catalytic reactions, and even fewer biosynthetic pathways are known with complete cascade-connected enzyme catalysis. Therefore, it is valuable to make computer-aided bio-retrosynthesis predictions. Here, we develop BioNavi-NP, a navigable and user-friendly toolkit, which is capable of predicting the biosynthetic pathways for NPs and NP-like compounds through a novel AND-OR tree-based planning algorithm, an enhanced molecular Transformer neural network, and a training set that combines general organic transformations and biosynthetic steps. Extensive evaluations reveal that BioNavi-NP generalizes well, identifying the reported biosynthetic pathways for 90% of test compounds and recovering the verified building blocks for 73%, significantly outperforming conventional rule-based approaches. Moreover, BioNavi-NP also shows an outstanding capacity for enumerating biologically plausible pathways. In this sense, BioNavi-NP is a leading-edge toolkit to redesign complex biosynthetic pathways of natural products with applications to total or semi-synthesis and pathway elucidation or reconstruction.

Transformer-based Conditional Variational Autoencoder for Controllable Story Generation

Jan 04, 2021

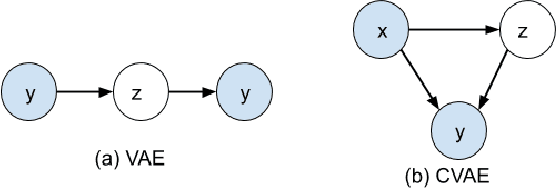

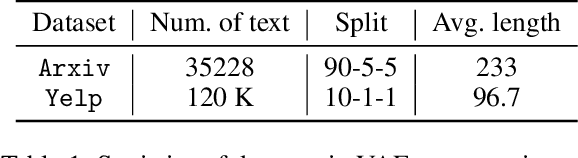

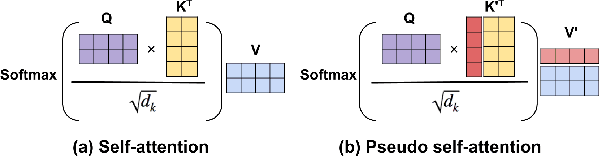

Abstract: We investigate large-scale latent variable models (LVMs) for neural story generation -- an under-explored application for open-domain long text -- with objectives in two threads: generation effectiveness and controllability. LVMs, especially the variational autoencoder (VAE), have achieved both effective and controllable generation by exploiting flexible distributional latent representations. Recently, Transformers and their variants have achieved remarkable effectiveness without explicit latent representation learning, and thus lack satisfactory controllability in generation. In this paper, we advocate reviving latent variable modeling, essentially the power of representation learning, in the era of Transformers to enhance controllability without hurting state-of-the-art generation effectiveness. Specifically, we integrate latent representation vectors with a Transformer-based pre-trained architecture to build a conditional variational autoencoder (CVAE). Model components such as the encoder, decoder, and variational posterior are all built on top of pre-trained language models -- GPT2 specifically in this paper. Experiments demonstrate the state-of-the-art conditional generation ability of our model, as well as its excellent representation learning capability and controllability.

Outline to Story: Fine-grained Controllable Story Generation from Cascaded Events

Jan 04, 2021

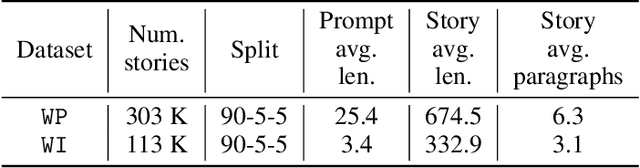

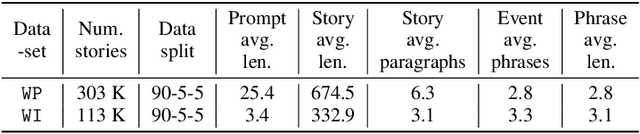

Abstract: Large-scale pretrained language models have shown thrilling generation capabilities, especially when they generate consistent long text of thousands of words with ease. However, users of these models can only control the prefix of sentences or certain global aspects of generated text. It is challenging to simultaneously achieve fine-grained controllability and preserve the state-of-the-art unconditional text generation capability. In this paper, we first propose a new task named "Outline to Story" (O2S) as a test bed for fine-grained controllable generation of long text, which generates a multi-paragraph story from cascaded events, i.e. a sequence of outline events that guide subsequent paragraph generation. We then create dedicated datasets for future benchmarks, built by state-of-the-art keyword extraction techniques. Finally, we propose an extremely simple yet strong baseline method for the O2S task, which fine-tunes pre-trained language models on augmented sequences of outline-story pairs with a simple language modeling objective. Our method does not introduce any new parameters or perform any architecture modification, except for several special tokens used as delimiters to build augmented sequences. Extensive experiments on various datasets demonstrate state-of-the-art conditional story generation performance with our model, achieving better fine-grained controllability and user flexibility. To our knowledge, our paper is among the first to propose a model and to create datasets for the task of "outline to story". Our work also instantiates research interest in fine-grained controllable generation of open-domain long text, where controlling inputs are represented by short text.
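As a rough illustration of what an "augmented sequence" of an outline-story pair might look like, the sketch below joins outline events and story paragraphs with delimiter tokens into one training string. The abstract does not specify the actual layout or token names, so the ordering (outline first, then story) and the tokens `<|evt|>`, `<|story|>`, and `<|end|>` are assumptions made for this example only.

```python
def build_augmented_sequence(
    events,
    paragraphs,
    event_sep="<|evt|>",      # hypothetical delimiter between outline events
    story_start="<|story|>",  # hypothetical marker where the story begins
    end="<|end|>",            # hypothetical end-of-example marker
):
    """Concatenate outline events and story paragraphs into a single string
    that a language model could be fine-tuned on with a plain LM objective."""
    outline = f" {event_sep} ".join(events)
    story = "\n\n".join(paragraphs)
    return f"{outline} {story_start} {story} {end}"


example = build_augmented_sequence(
    ["a storm hits the village", "the bridge collapses"],
    ["The clouds gathered at dusk.", "By morning the river had taken the bridge."],
)
```

At generation time, a model trained on such strings would be prompted with the outline followed by the story-start marker and asked to continue; in practice the delimiter tokens would also need to be registered with the model's tokenizer.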

Reconstruction of Simulation-Based Physical Field by Reconstruction Neural Network Method

Sep 05, 2018

Abstract: A variety of modeling techniques have been developed in the past decade to reduce the computational expense and improve the accuracy of modeling. In this study, a new modeling framework is suggested. Compared with other popular methods, its distinctive characteristic is "from image based model to analysis based model (e.g. stress, strain, and deformation)". Within this framework, a reconstruction neural network (ReConNN) model designed for the reconstruction of simulation-based physical fields is proposed. The ReConNN contains two submodels: a convolutional neural network (CNN) and a generative adversarial network (GAN). The CNN is employed to construct the mapping between contour images of the physical field and the objective function. Subsequently, the GAN is utilized to generate more images similar to the existing contour images. Finally, a Lagrange polynomial is applied to complete the reconstruction. However, existing CNN models are commonly applied to classification tasks, which makes them difficult to apply to image regression tasks. Meanwhile, existing GAN architectures are insufficient to generate high-accuracy "pseudo contour images". Therefore, a ReConNN model based on a Convolution in Convolution (CIC) and a Convolutional AutoEncoder based on Wasserstein Generative Adversarial Network (WGAN-CAE) is suggested. To evaluate the performance of the proposed model representatively, a classical topology optimization procedure is considered. The ReConNN is then used to reconstruct the heat transfer process of a pin fin heat sink. The results demonstrate that the proposed ReConNN model has the potential to reconstruct physical fields for multidisciplinary applications such as structural optimization.
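For readers unfamiliar with the final step, the following is a minimal sketch of generic Lagrange polynomial interpolation. The abstract does not say which quantities are interpolated or over which variable, so this shows only the standard technique on scalar samples, not how ReConNN applies it. The function name is hypothetical.

```python
def lagrange_interpolate(xs, ys, x):
    """Evaluate at x the unique polynomial of degree < len(xs) that passes
    through the points (xs[i], ys[i]). The xs must be pairwise distinct."""
    total = 0.0
    for i, (xi, yi) in enumerate(zip(xs, ys)):
        # Basis polynomial L_i(x): equals 1 at xi and 0 at every other node.
        basis = 1.0
        for j, xj in enumerate(xs):
            if j != i:
                basis *= (x - xj) / (xi - xj)
        total += yi * basis
    return total
```

For example, with samples of `y = x**2` at `x = 0, 1, 2`, calling `lagrange_interpolate([0, 1, 2], [0, 1, 4], 1.5)` recovers the parabola's value at the intermediate point.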
