Sung Hwan Mun

EEND-DEMUX: End-to-End Neural Speaker Diarization via Demultiplexed Speaker Embeddings

Dec 11, 2023

Abstract: In recent years, several studies have sought to further improve end-to-end neural speaker diarization (EEND) systems. This letter proposes the EEND-DEMUX model, a novel framework utilizing demultiplexed speaker embeddings. In this work, we focus on disentangling speaker-relevant information in the latent space and then transforming each separated latent variable into its corresponding speech activity. EEND-DEMUX can directly obtain separated speaker embeddings through the demultiplexing operation in the inference phase without an external speaker diarization system, an embedding extractor, or a heuristic decoding technique. Furthermore, we employ a multi-head cross-attention mechanism to effectively capture the correlation between the mixture and the separated speaker embeddings. We formulate three loss functions based on matching, orthogonality, and sparsity constraints to learn robust demultiplexed speaker embeddings. The experimental results on the LibriMix dataset show consistently improved performance in scenarios with both fixed and flexible numbers of speakers.
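The abstract names an orthogonality constraint on the demultiplexed speaker embeddings but does not give its form. As a rough illustration only, the sketch below uses a generic penalty on the off-diagonal cosine similarities between embeddings. The function name `orthogonality_penalty` and this exact formulation are assumptions, not the loss defined in the paper.

```python
import numpy as np

def orthogonality_penalty(embeddings: np.ndarray) -> float:
    """Mean squared cosine similarity between distinct speaker embeddings.

    embeddings: array of shape (num_speakers, dim), one row per separated speaker.
    Returns 0.0 when all rows are mutually orthogonal.
    NOTE: a generic formulation for illustration, not the paper's loss.
    """
    norms = np.linalg.norm(embeddings, axis=1, keepdims=True)
    unit = embeddings / np.maximum(norms, 1e-12)   # L2-normalize each row
    gram = unit @ unit.T                           # pairwise cosine similarities
    off_diag = gram - np.diag(np.diag(gram))       # zero out self-similarities
    n = embeddings.shape[0]
    return float(np.sum(off_diag ** 2) / max(n * (n - 1), 1))
```

Minimizing a term of this kind pushes the embeddings of different speakers toward mutually orthogonal directions, which is one plausible reading of the constraint named in the abstract.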

Towards single integrated spoofing-aware speaker verification embeddings

Jun 01, 2023

Abstract: This study aims to develop single integrated spoofing-aware speaker verification (SASV) embeddings that satisfy two requirements. First, they should reject both non-target speakers' inputs and target speakers' spoofed inputs. Second, they should demonstrate competitive performance compared to the fusion of automatic speaker verification (ASV) and countermeasure (CM) embeddings, which outperformed single-embedding solutions by a large margin in the SASV2022 challenge. Our analysis attributes the inferior performance of single SASV embeddings to an insufficient amount of training data and the distinct nature of the ASV and CM tasks. To this end, we propose a novel framework that includes multi-stage training and a combination of loss functions. Copy synthesis, combined with several vocoders, is also exploited to address the lack of spoofed data. Experimental results show dramatic improvements, achieving a SASV-EER of 1.06% on the evaluation protocol of the SASV2022 challenge.
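For readers unfamiliar with the metric, the following is a minimal sketch of how an equal error rate (EER) can be estimated from trial scores. It assumes that, for SASV-EER, non-target and spoofed trials are pooled as the negative class. The function name `equal_error_rate` and the simple threshold sweep are illustrative choices, not the challenge's official scoring tool.

```python
import numpy as np

def equal_error_rate(target_scores, negative_scores) -> float:
    """Approximate EER by sweeping thresholds over all observed scores.

    target_scores: scores of genuine target trials (should be accepted).
    negative_scores: scores of trials that should be rejected
        (assumed here: non-target and spoofed trials pooled together).
    """
    target = np.asarray(target_scores, dtype=float)
    negative = np.asarray(negative_scores, dtype=float)
    thresholds = np.sort(np.concatenate([target, negative]))
    # False rejection rate: targets scoring below the threshold.
    frr = np.array([(target < t).mean() for t in thresholds])
    # False acceptance rate: negatives scoring at or above the threshold.
    far = np.array([(negative >= t).mean() for t in thresholds])
    idx = np.argmin(np.abs(far - frr))             # closest crossing point
    return float((far[idx] + frr[idx]) / 2)
```

With few trials the estimate is coarse, since the crossing point is taken at an observed score without interpolation.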

Adversarial Speaker-Consistency Learning Using Untranscribed Speech Data for Zero-Shot Multi-Speaker Text-to-Speech

Oct 12, 2022

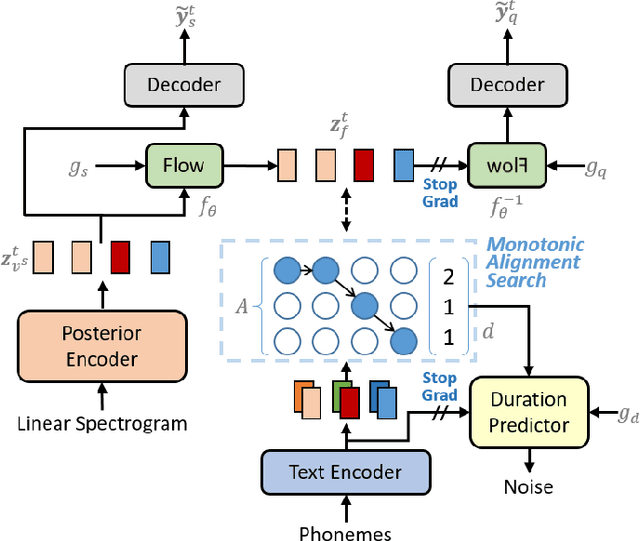

Abstract: Several recently proposed text-to-speech (TTS) models have succeeded in generating speech samples with human-level quality in single-speaker and multi-speaker TTS scenarios with a set of pre-defined speakers. However, synthesizing a new speaker's voice from a single reference audio, commonly known as zero-shot multi-speaker text-to-speech (ZSM-TTS), is still a very challenging task. The main challenge of ZSM-TTS is the speaker domain shift problem that arises when generating speech for a new speaker. To mitigate this problem, we propose adversarial speaker-consistency learning (ASCL). The proposed method first generates additional speech of a query speaker using external untranscribed datasets at each training iteration. Then, the model learns to consistently generate a speech sample of the same speaker as the corresponding speaker embedding vector by employing an adversarial learning scheme. The experimental results show that the proposed method is effective compared to the baseline in terms of quality and speaker similarity in ZSM-TTS.

Fully Unsupervised Training of Few-shot Keyword Spotting

Oct 07, 2022

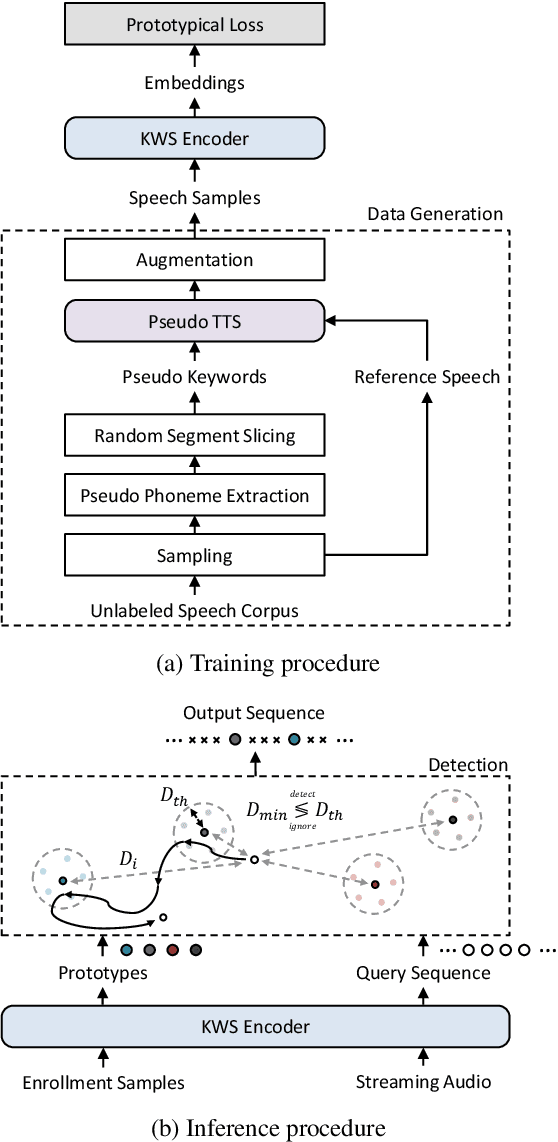

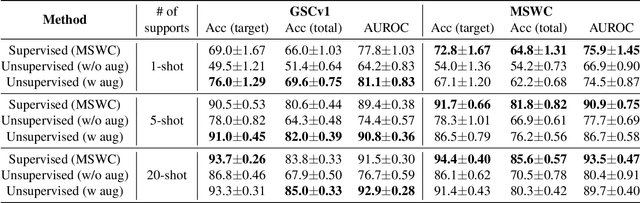

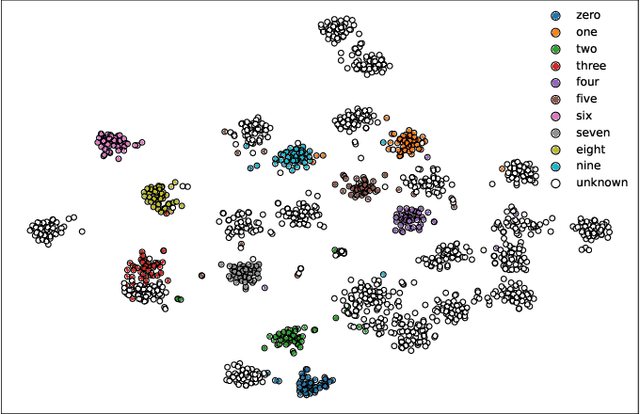

Abstract: For training a few-shot keyword spotting (FS-KWS) model, a large labeled dataset containing a massive number of target keywords has been known to be essential for generalizing to arbitrary target keywords with only a few enrollment samples. To alleviate the cost of collecting and labeling such data, in this paper we propose a novel FS-KWS system trained only on synthetic data. The proposed system is based on metric learning, enabling target keywords to be detected using distance metrics. Exploiting a speech synthesis model that generates speech from pseudo phonemes instead of text, we easily obtain a large collection of multi-view samples with the same semantics. These samples are sufficient for training, considering that metric learning does not intrinsically require labeled data. None of the components in our framework requires any supervision, making our method unsupervised. Experimental results on real datasets show that our proposed method is competitive even without any labeled or real datasets.
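The detection step described above, comparing a query against a few enrollment samples with a distance metric, can be sketched as follows. This is an assumption-laden illustration: the embeddings are taken as given, cosine similarity is one possible metric, and the names `detect_keyword` and `threshold` are hypothetical rather than taken from the paper.

```python
import numpy as np

def cosine_similarity(a: np.ndarray, b: np.ndarray) -> float:
    """Cosine similarity between two 1-D vectors."""
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b) + 1e-12))

def detect_keyword(enroll_embeddings, query_embedding, threshold=0.7):
    """Decide whether a query utterance matches an enrolled keyword.

    enroll_embeddings: (num_enroll, dim) embeddings of the few enrollment samples.
    query_embedding: (dim,) embedding of the utterance to test.
    threshold: hypothetical decision threshold, tuned on held-out data in practice.
    """
    enroll = np.asarray(enroll_embeddings, dtype=float)
    query = np.asarray(query_embedding, dtype=float)
    prototype = enroll.mean(axis=0)                # average the enrollment samples
    score = cosine_similarity(prototype, query)
    return score >= threshold, score
```

Because the decision depends only on distances in the embedding space, new keywords can be enrolled without retraining the embedding model.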

Disentangled Speaker Representation Learning via Mutual Information Minimization

Aug 17, 2022

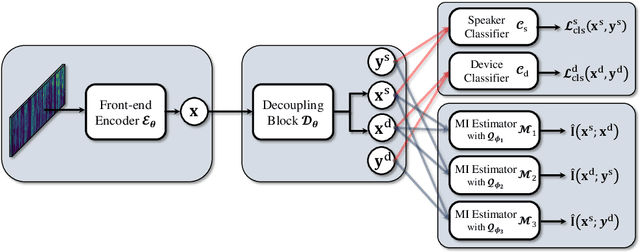

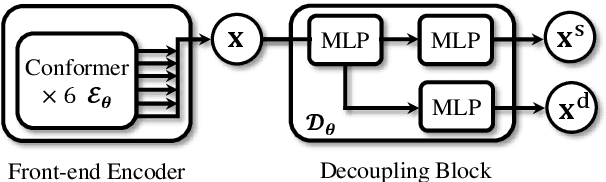

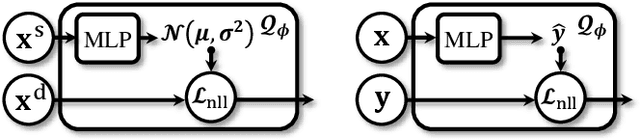

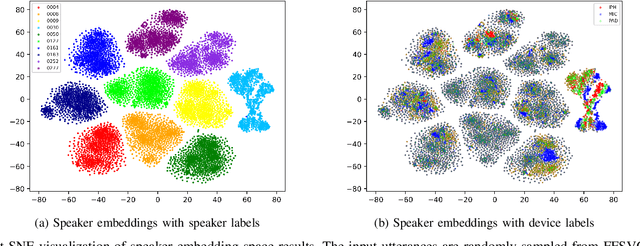

Abstract: The domain mismatch problem caused by speaker-unrelated features has been a major topic in speaker recognition. In this paper, we propose an explicit disentanglement framework to unravel speaker-relevant features from speaker-unrelated features via mutual information (MI) minimization. To achieve our goal of minimizing MI between speaker-related and speaker-unrelated features, we adopt a contrastive log-ratio upper bound (CLUB), which exploits the upper bound of MI. Our framework is constructed in a 3-stage structure. First, in the front-end encoder, input speech is encoded into a shared initial embedding. Next, in the decoupling block, the shared initial embedding is split into separate speaker-related and speaker-unrelated embeddings. Finally, disentanglement is conducted by MI minimization in the last stage. Experiments on the Far-Field Speaker Verification Challenge 2022 (FFSVC2022) demonstrate that our proposed framework is effective for disentanglement. Also, to utilize domain-unknown datasets containing numerous speakers, we pre-trained the front-end encoder with the VoxCeleb datasets. We then fine-tuned the speaker embedding model in the disentanglement framework with the FFSVC2022 dataset. The experimental results show that fine-tuning an existing pre-trained model with a disentanglement framework is valid and can further improve performance.
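For reference, the CLUB estimator is usually written as below. Here $x$ and $y$ would stand for the speaker-related and speaker-unrelated embeddings; that mapping, and the use of a variational network $q_\theta$, are assumptions about how the bound is applied in this work.

$$
I_{\mathrm{CLUB}}(x; y) = \mathbb{E}_{p(x,y)}\big[\log p(y \mid x)\big] - \mathbb{E}_{p(x)}\,\mathbb{E}_{p(y)}\big[\log p(y \mid x)\big] \;\geq\; I(x; y)
$$

When $p(y \mid x)$ is unknown, it is typically replaced by a learned approximation $q_\theta(y \mid x)$, giving the sample estimate over a batch of $N$ pairs:

$$
\hat{I}_{\mathrm{vCLUB}} = \frac{1}{N} \sum_{i=1}^{N} \Big[ \log q_\theta(y_i \mid x_i) - \frac{1}{N} \sum_{j=1}^{N} \log q_\theta(y_j \mid x_i) \Big]
$$

With the variational approximation, the upper-bound property holds only to the extent that $q_\theta$ approximates the true conditional well.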

Selective Kernel Attention for Robust Speaker Verification

Apr 03, 2022

Abstract: Recent state-of-the-art speaker verification architectures adopt multi-scale processing and frequency-channel attention techniques. However, their full potential may not have been exploited because the receptive fields of these techniques are fixed: most convolutional layers operate with specified kernel sizes such as 1, 3, or 5. We aim to further improve this line of research by introducing a selective kernel attention (SKA) mechanism. The SKA mechanism allows each convolutional layer to adaptively select the kernel size in a data-driven fashion, based on an attention mechanism that exploits both the frequency and channel domains using the previous layer's output. We propose three module variants using the SKA mechanism, whereby two modules are applied in front of an ECAPA-TDNN model and the other is combined with the Res2Net backbone block. Experimental results demonstrate that our proposed model consistently outperforms the conventional counterpart on three different evaluation protocols in terms of both equal error rate and minimum detection cost function. In addition, we present a detailed analysis that helps explain how the SKA module works.
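The idea of selecting among kernel sizes with attention can be illustrated with a simplified, channel-only sketch. It assumes the outputs of several convolution branches (one per kernel size) are already computed. The projection tensor `proj` and the function `selective_kernel_fuse` are hypothetical, and the frequency-domain attention described in the abstract is not modeled here.

```python
import numpy as np

def softmax(x: np.ndarray, axis: int) -> np.ndarray:
    """Numerically stable softmax along the given axis."""
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def selective_kernel_fuse(branches: np.ndarray, proj: np.ndarray):
    """Fuse K convolution branches with per-channel attention over kernel sizes.

    branches: (K, C, T) outputs of K branches with different kernel sizes.
    proj: (K, C, C) hypothetical learned projections, one per branch.
    Returns the fused (C, T) feature map and the (K, C) attention weights.
    """
    descriptor = branches.sum(axis=0).mean(axis=-1)        # (C,) global summary
    logits = proj @ descriptor                             # (K, C) branch scores
    weights = softmax(logits, axis=0)                      # sums to 1 over branches
    fused = (weights[:, :, None] * branches).sum(axis=0)   # (C, T) weighted sum
    return fused, weights
```

Each channel thus receives its own soft choice of kernel size, driven by the input rather than fixed in advance.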

Bootstrap Equilibrium and Probabilistic Speaker Representation Learning for Self-supervised Speaker Verification

Dec 24, 2021

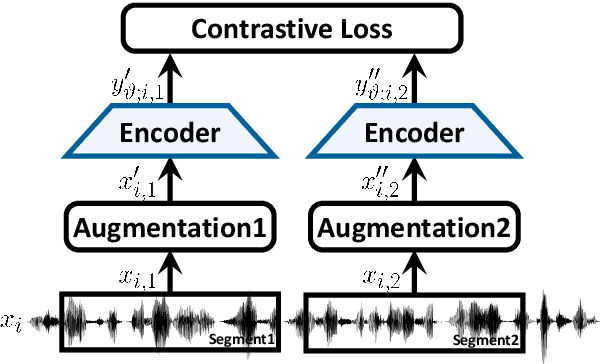

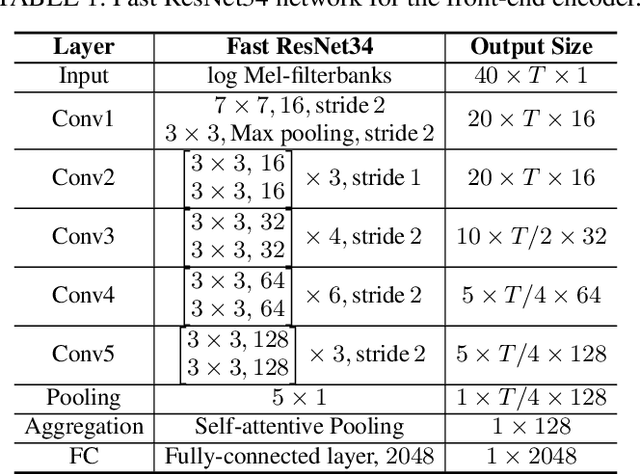

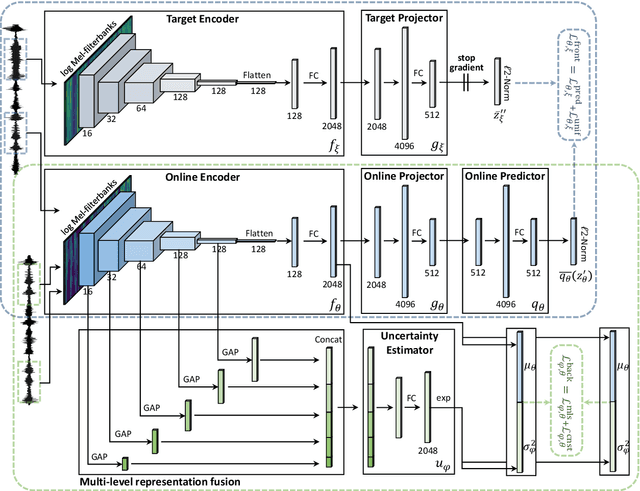

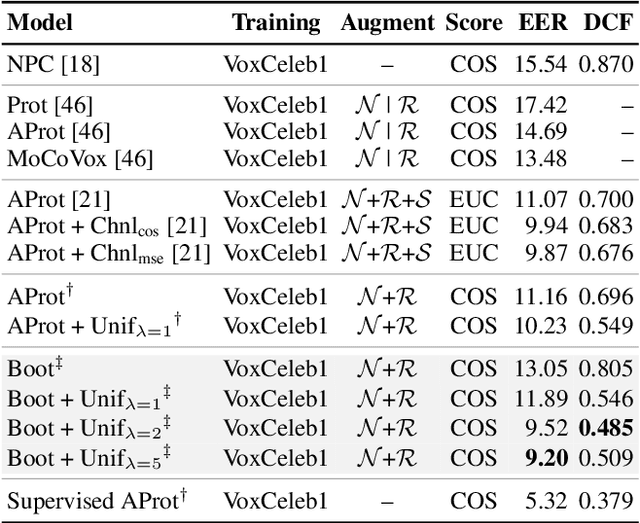

Abstract: In this paper, we propose self-supervised speaker representation learning strategies, which comprise bootstrap equilibrium speaker representation learning in the front-end and uncertainty-aware probabilistic speaker embedding training in the back-end. In the front-end stage, we learn the speaker representations via a bootstrap training scheme with a uniformity regularization term. In the back-end stage, the probabilistic speaker embeddings are estimated by maximizing the mutual likelihood score between speech samples belonging to the same speaker, which provides not only speaker representations but also data uncertainty. Experimental results show that the proposed bootstrap equilibrium training strategy can effectively help learn the speaker representations and outperforms conventional methods based on contrastive learning. Also, we demonstrate that the integrated two-stage framework further improves speaker verification performance on the VoxCeleb1 test set in terms of EER and MinDCF.
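As an illustration of the back-end objective, the sketch below computes a mutual likelihood score between two probabilistic embeddings. It assumes each embedding is a Gaussian with a mean vector and a diagonal variance vector, which is the form commonly used for this score. Whether the paper uses exactly this parameterization is an assumption.

```python
import numpy as np

def mutual_likelihood_score(mu_i, var_i, mu_j, var_j) -> float:
    """Log-likelihood that two diagonal-Gaussian embeddings share a latent code.

    mu_*: (dim,) mean vectors; var_*: (dim,) positive variance vectors.
    Higher scores mean the two samples are more likely from the same speaker.
    """
    mu_i, var_i, mu_j, var_j = (
        np.asarray(a, dtype=float) for a in (mu_i, var_i, mu_j, var_j)
    )
    var_sum = var_i + var_j                        # combined uncertainty per dim
    dim = mu_i.shape[-1]
    distance_term = np.sum((mu_i - mu_j) ** 2 / var_sum + np.log(var_sum))
    return float(-0.5 * distance_term - 0.5 * dim * np.log(2 * np.pi))
```

Dimensions with large variance contribute less to the distance term, which is how the score accounts for data uncertainty.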
