Stefan Rass

RobotPerf: An Open-Source, Vendor-Agnostic, Benchmarking Suite for Evaluating Robotics Computing System Performance

Sep 17, 2023

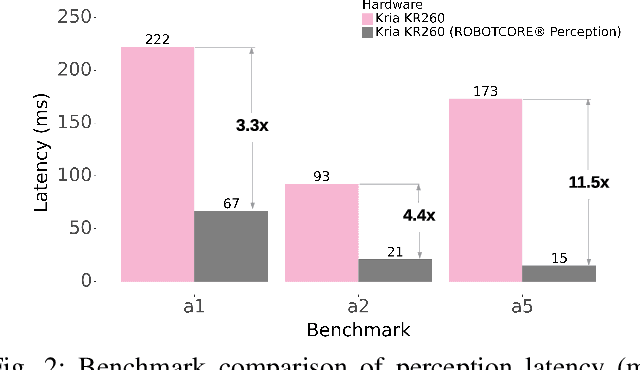

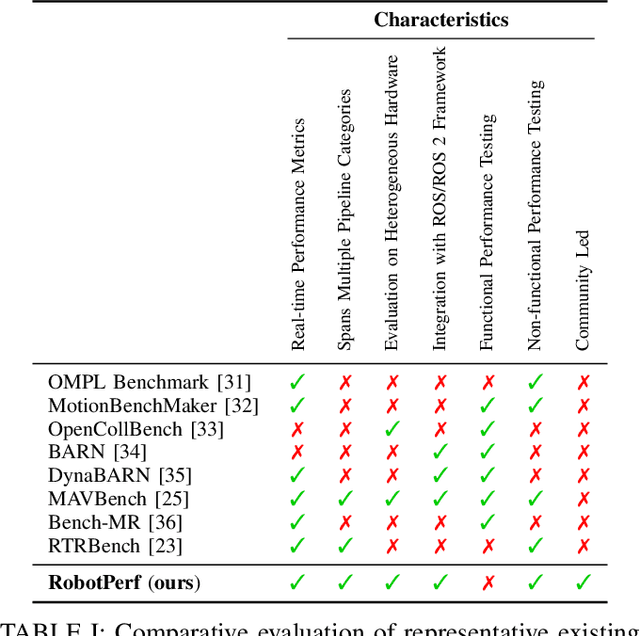

Abstract: We introduce RobotPerf, a vendor-agnostic benchmarking suite designed to evaluate robotics computing performance across a diverse range of hardware platforms using ROS 2 as its common baseline. The suite encompasses ROS 2 packages covering the full robotics pipeline and integrates two distinct benchmarking approaches: black-box testing, which measures performance by eliminating upper layers and replacing them with a test application, and grey-box testing, an application-specific measure that observes internal system states with minimal interference. Our benchmarking framework provides ready-to-use tools and is easily adaptable for the assessment of custom ROS 2 computational graphs. Drawing from the knowledge of leading robot architects and system architecture experts, RobotPerf establishes a standardized approach to robotics benchmarking. As an open-source initiative, RobotPerf remains committed to evolving with community input to advance the future of hardware-accelerated robotics.

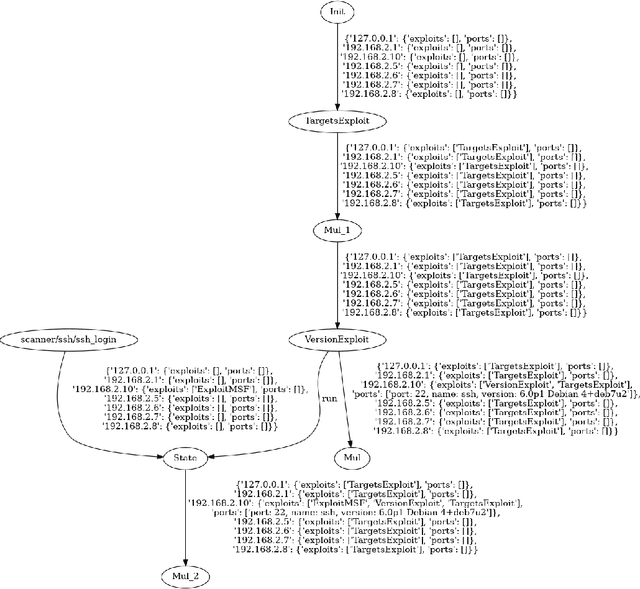

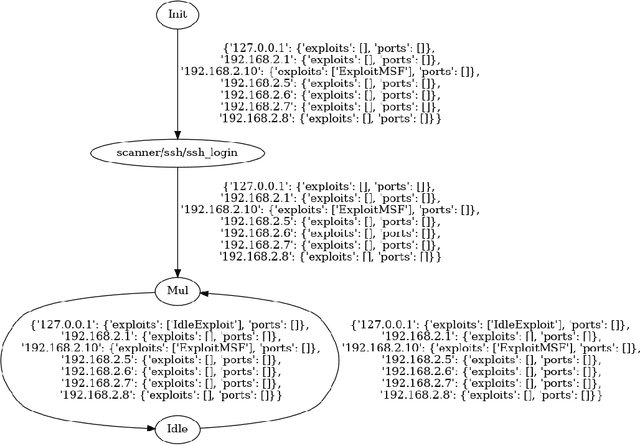

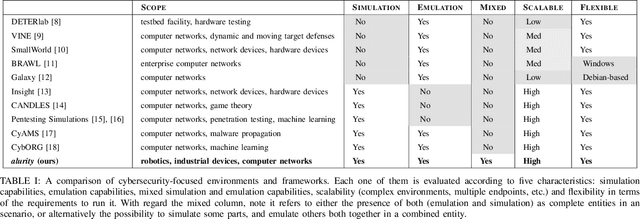

ExploitFlow, cyber security exploitation routes for Game Theory and AI research in robotics

Aug 04, 2023

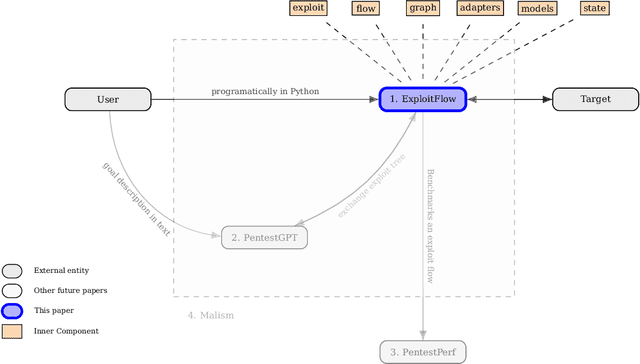

Abstract: This paper addresses the prevalent lack of tools to facilitate and empower Game Theory and Artificial Intelligence (AI) research in cybersecurity. The primary contribution is the introduction of ExploitFlow (EF), an AI and Game Theory-driven modular library designed for cyber security exploitation. EF aims to automate attacks, combining exploits from various sources, and capturing system states post-action to reason about them and understand potential attack trees. The motivation behind EF is to bolster Game Theory and AI research in cybersecurity, with robotics as the initial focus. Results indicate that EF is effective for exploring machine learning in robot cybersecurity. An artificial agent powered by EF, using Reinforcement Learning, outperformed both brute-force and human expert approaches, laying the path for using ExploitFlow for further research. Nonetheless, we identified several limitations in EF-driven agents, including a propensity to overfit, the scarcity and production cost of datasets for generalization, and challenges in interpreting networking states across varied security settings. To leverage the strengths of ExploitFlow while addressing identified shortcomings, we present Malism, our vision for a comprehensive automated penetration testing framework with ExploitFlow at its core.

Metricizing the Euclidean Space towards Desired Distance Relations in Point Clouds

Nov 07, 2022

Abstract: Given a set of points in the Euclidean space $\mathbb{R}^\ell$ with $\ell>1$, the pairwise distances between the points are determined by their spatial location and the metric $d$ that we endow $\mathbb{R}^\ell$ with. Hence, the distance $d(\mathbf x,\mathbf y)=\delta$ between two points is fixed by the choice of $\mathbf x$ and $\mathbf y$ and $d$. We study the related problem of fixing the value $\delta$, and the points $\mathbf x,\mathbf y$, and ask if there is a topological metric $d$ that computes the desired distance $\delta$. We demonstrate this problem to be solvable by constructing a metric to simultaneously give desired pairwise distances between up to $O(\sqrt\ell)$ many points in $\mathbb{R}^\ell$. We then introduce the notion of an $\varepsilon$-semimetric $\tilde{d}$ to formulate our main result: for all $\varepsilon>0$, for all $m\geq 1$, for any choice of $m$ points $\mathbf y_1,\ldots,\mathbf y_m\in\mathbb{R}^\ell$, and all chosen sets of values $\{\delta_{ij}\geq 0: 1\leq i<j\leq m\}$, there exists an $\varepsilon$-semimetric $\tilde{d}:\mathbb{R}^\ell\times \mathbb{R}^\ell\to\mathbb{R}$ such that $\tilde{d}(\mathbf y_i,\mathbf y_j)=\delta_{ij}$, i.e., the desired distances are accomplished, irrespective of the topology that the Euclidean or other norms would induce. We showcase our results by using them to attack unsupervised learning algorithms, specifically $k$-Means and density-based (DBSCAN) clustering algorithms. These have manifold applications in artificial intelligence, and letting them run with externally provided distance measures, constructed in the way shown here, can make clustering algorithms produce results that are pre-determined and hence malleable. This demonstrates that the results of clustering algorithms may not generally be trustworthy, unless there is a standardized and fixed prescription to use a specific distance function.
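The effect described in this abstract can be illustrated with a toy sketch. It is not the construction from the paper: the six points, the `desired` labels, the `crafted` distance table and the simple threshold clustering (which links any two points closer than `eps`, similar in spirit to DBSCAN with a minimum neighbourhood size of one) are all assumptions chosen for illustration. The same points fall into different clusters once the distance function is supplied by an adversary.

```python
from itertools import combinations


def threshold_clusters(n, dist, eps):
    """Connected components of the graph linking i and j when dist(i, j) <= eps."""
    parent = list(range(n))

    def find(i):
        # Union-find lookup with path halving.
        while parent[i] != i:
            parent[i] = parent[parent[i]]
            i = parent[i]
        return i

    for i, j in combinations(range(n), 2):
        if dist(i, j) <= eps:
            parent[find(i)] = find(j)

    groups = {}
    for i in range(n):
        groups.setdefault(find(i), []).append(i)
    return sorted(groups.values())


# Hypothetical data: two visually obvious groups on the real line.
points = [0.0, 1.0, 2.0, 10.0, 11.0, 12.0]


def euclidean(i, j):
    return abs(points[i] - points[j])


# Hypothetical attacker goal: pair each point with its far-away counterpart.
desired = [0, 1, 2, 0, 1, 2]


def crafted(i, j):
    """A hand-made distance table that ignores the geometry of the points."""
    if i == j:
        return 0.0
    return 0.1 if desired[i] == desired[j] else 5.0


natural = threshold_clusters(len(points), euclidean, eps=1.5)
forced = threshold_clusters(len(points), crafted, eps=1.5)
print(natural)  # [[0, 1, 2], [3, 4, 5]]
print(forced)   # [[0, 3], [1, 4], [2, 5]]
```

The crafted table still satisfies the metric axioms on this finite set, which is why a clustering routine that accepts an external distance function has no way to reject it.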

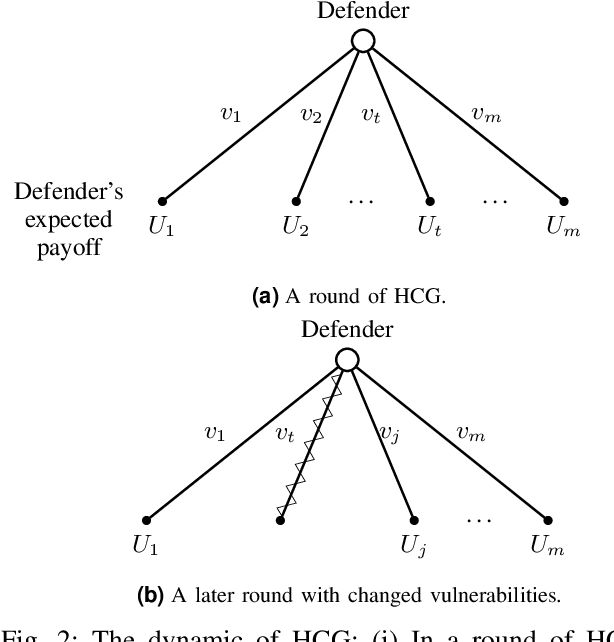

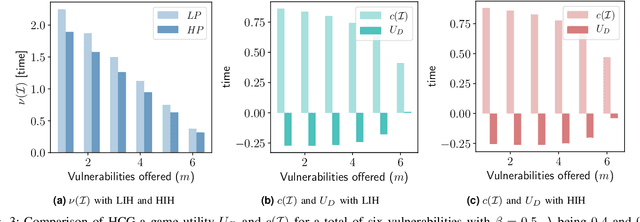

HoneyCar: A Framework to Configure Honeypot Vulnerabilities on the Internet of Vehicles

Nov 03, 2021

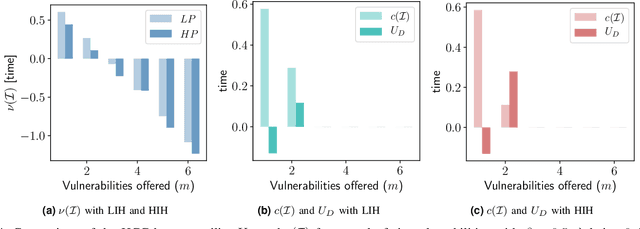

Abstract: The Internet of Vehicles (IoV), whereby interconnected vehicles communicate with each other and with road infrastructure on a common network, has promising socio-economic benefits but also poses new cyber-physical threats. Data on vehicular attackers can be realistically gathered through cyber threat intelligence using systems like honeypots. Admittedly, configuring honeypots introduces a trade-off between the level of honeypot-attacker interactions and any incurred overheads and costs for implementing and monitoring these honeypots. We argue that effective deception can be achieved through strategically configuring the honeypots to represent components of the IoV and engage attackers to collect cyber threat intelligence. In this paper, we present HoneyCar, a novel decision support framework for honeypot deception in IoV. HoneyCar builds upon a repository of known vulnerabilities of autonomous and connected vehicles found in the Common Vulnerabilities and Exposures (CVE) data within the National Vulnerability Database (NVD) to compute optimal honeypot configuration strategies. By taking a game-theoretic approach, we model the adversarial interaction as a repeated imperfect-information zero-sum game in which the IoV network administrator chooses a set of vulnerabilities to offer in a honeypot and a strategic attacker chooses a vulnerability of the IoV to exploit under uncertainty. Our investigation is substantiated by examining two different versions of the game, with and without the re-configuration cost, to empower the network administrator to determine optimal honeypot configurations. We evaluate HoneyCar in a realistic use case to support decision makers in determining optimal honeypot configuration strategies for strategic deployment in IoV.
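To make the zero-sum framing concrete, here is a minimal sketch of a one-shot 2x2 matrix game solved in closed form. It is far simpler than the repeated imperfect-information game in the abstract, and everything in it is an assumption for illustration: the two vulnerabilities, the payoff numbers and the helper `solve_2x2_zero_sum` are hypothetical and not taken from HoneyCar.

```python
from fractions import Fraction


def solve_2x2_zero_sum(payoff):
    """Return (p, q, value) for a 2x2 zero-sum game.

    payoff[r][c] is the row player's gain; p is the probability of row 0,
    q is the probability of column 0, value is the game value.
    """
    (a, b), (c, d) = [[Fraction(x) for x in row] for row in payoff]
    maximin = max(min(a, b), min(c, d))
    minimax = min(max(a, c), max(b, d))
    if maximin == minimax:
        # A pure-strategy saddle point exists; no mixing is needed.
        row = 0 if min(a, b) >= min(c, d) else 1
        col = 0 if max(a, c) <= max(b, d) else 1
        return Fraction(1 - row), Fraction(1 - col), maximin
    # No saddle point: both players mix so the opponent is indifferent.
    denom = a - b - c + d
    p = (d - c) / denom
    q = (d - b) / denom
    value = (a * d - b * c) / denom
    return p, q, value


# Hypothetical payoffs to the administrator (row player).
# Rows: honeypot offers vulnerability A or B.
# Columns: attacker exploits vulnerability A or B.
# Positive entries: the attacker hits the honeypot and intelligence is gained.
# Negative entries: the attacker exploits a vulnerability the honeypot does not cover.
payoff = [[3, -1],
          [-2, 2]]

p, q, value = solve_2x2_zero_sum(payoff)
print(p, q, value)  # 1/2 3/8 1/2
```

With these made-up numbers the administrator would offer each vulnerability half of the time, and the attacker would target A with probability 3/8. Larger games of this kind are usually solved by linear programming rather than a closed formula.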

Supervised Machine Learning with Plausible Deniability

Jun 08, 2021

Abstract: We study the question of how well machine learning (ML) models trained on a certain data set provide privacy for the training data, or equivalently, whether it is possible to reverse-engineer the training data from a given ML model. While this is easy to answer negatively in the most general case, it is interesting to note that the protection extends over non-recoverability towards plausible deniability: Given an ML model $f$, we show that one can take a set of purely random training data, and from this define a suitable ``learning rule'' that will produce an ML model that is exactly $f$. Thus, any speculation about which data has been used to train $f$ is deniable upon the claim that any other data could have led to the same results. We corroborate our theoretical finding with practical examples, and open source implementations of how to find the learning rules for a chosen set of training data.
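The idea of fitting a learning rule to arbitrary data can be sketched on a deliberately tiny case. This is not the paper's construction: the one-parameter model $f(x)=wx$, the ridge-style loss with a movable prior centre, and the helpers `ridge_fit` and `deniable_centre` are all assumptions made for illustration. For any random data set, one hyperparameter of the rule is chosen so that training on that data returns exactly the given slope.

```python
import random


def ridge_fit(xs, ys, centre, lam=1.0):
    """Minimise sum((w*x - y)^2) + lam*(w - centre)^2 over w (closed form)."""
    sxx = sum(x * x for x in xs)
    sxy = sum(x * y for x, y in zip(xs, ys))
    return (sxy + lam * centre) / (sxx + lam)


def deniable_centre(xs, ys, target_w, lam=1.0):
    """Pick the prior centre so that ridge_fit on (xs, ys) returns target_w."""
    sxx = sum(x * x for x in xs)
    sxy = sum(x * y for x, y in zip(xs, ys))
    return (target_w * (sxx + lam) - sxy) / lam


target_w = 2.5  # the given model f(x) = 2.5 * x (hypothetical)
rng = random.Random(0)

recovered = []
for _ in range(3):
    # Purely random "training data", unrelated to the target model.
    xs = [rng.uniform(-1, 1) for _ in range(20)]
    ys = [rng.uniform(-1, 1) for _ in range(20)]
    centre = deniable_centre(xs, ys, target_w)
    recovered.append(ridge_fit(xs, ys, centre))

print(recovered)  # each entry equals target_w up to floating-point rounding
```

Each of the three random data sets comes with its own learning rule (its own `centre`), yet all of them train to the same model, so the model alone does not reveal which data set was used.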

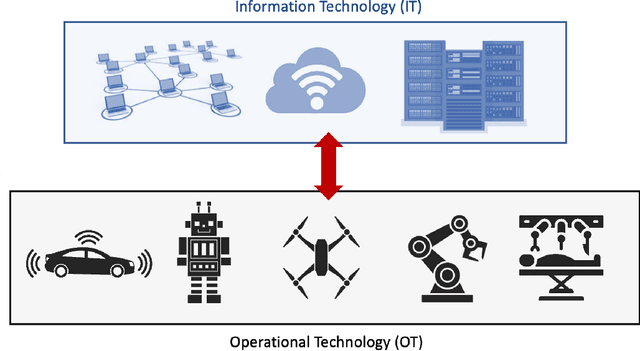

Cybersecurity in Robotics: Challenges, Quantitative Modeling, and Practice

Mar 16, 2021

Abstract: Robotics is becoming more and more ubiquitous, but the pressure to bring systems to market occasionally comes at the cost of neglecting security mechanisms during development, deployment or while in production. As a result, contemporary robotic systems are vulnerable to diverse attack patterns, and an a posteriori hardening is at least challenging, if not impossible. This book aims to stipulate the inclusion of security in robotics from the earliest design phases onward and with a special focus on the cost-benefit tradeoff that can otherwise be an inhibitor for the fast development of affordable systems. We advocate quantitative methods of security management and design, covering vulnerability scoring systems tailored to robotic systems, and accounting for the highly distributed nature of robots as an interplay of potentially very many components. A powerful quantitative approach to model-based security is offered by game theory, providing a rich spectrum of techniques to optimize security against various kinds of attacks. Such a multi-perspective view on security is necessary to address the heterogeneity and complexity of robotic systems. This book is intended as an accessible starter for the theoretician and practitioner working in the field.

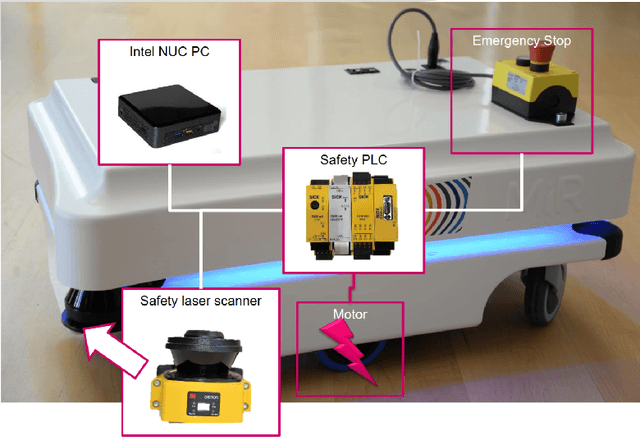

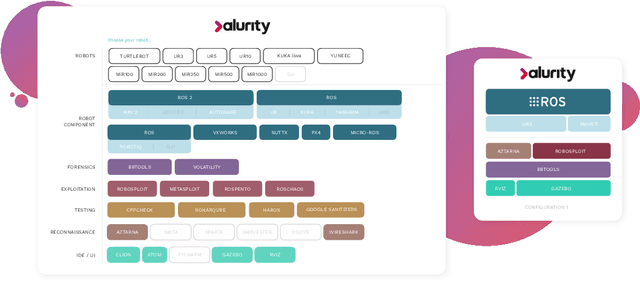

alurity, a toolbox for robot cybersecurity

Oct 16, 2020

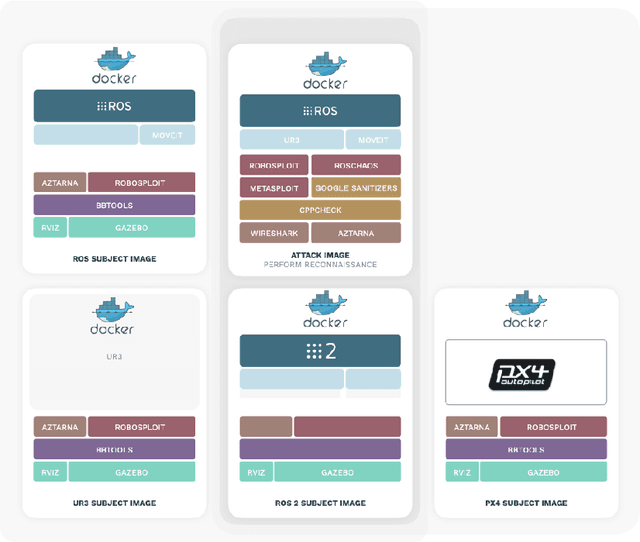

Abstract: The reuse of technologies and inherent complexity of most robotic systems are increasingly leading to robots with wide attack surfaces and a variety of potential vulnerabilities. Given their growing presence in public environments, security research is increasingly becoming more important than in any other area, especially due to the safety implications that robot vulnerabilities could have for humans. We argue that security triage in robotics is still immature and that new tools must be developed to accelerate the testing-triage-exploitation cycle, necessary for prioritizing and accelerating the mitigation of flaws. The present work tackles the current lack of offensive cybersecurity research in robotics by presenting a toolbox and the results obtained with it through several use cases conducted over a one-year period. We propose a modular and composable toolbox for robot cybersecurity: alurity. By ensuring that both roboticists and security researchers working on a project have a common, consistent and easily reproducible development environment, alurity aims to facilitate cybersecurity research and collaboration across teams.

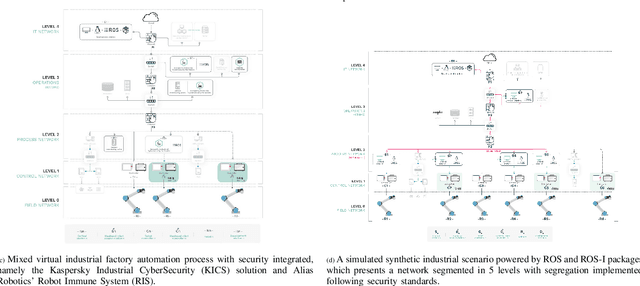

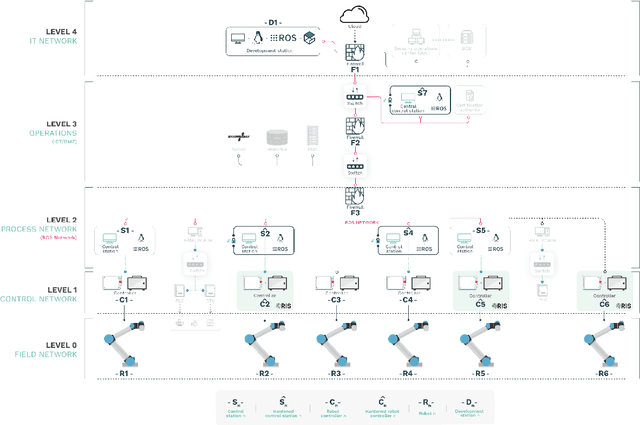

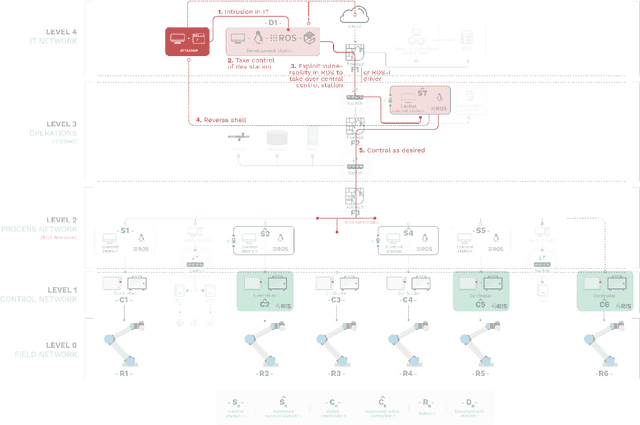

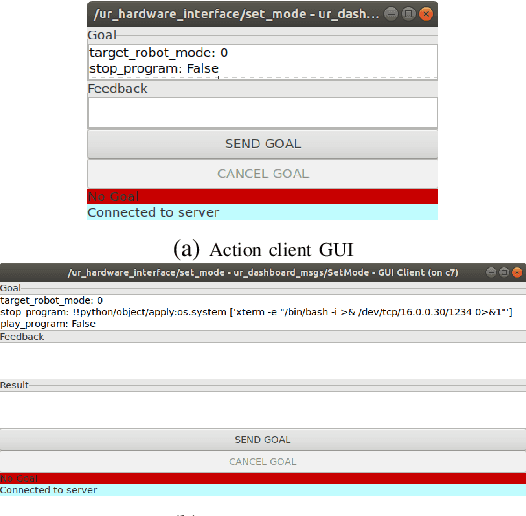

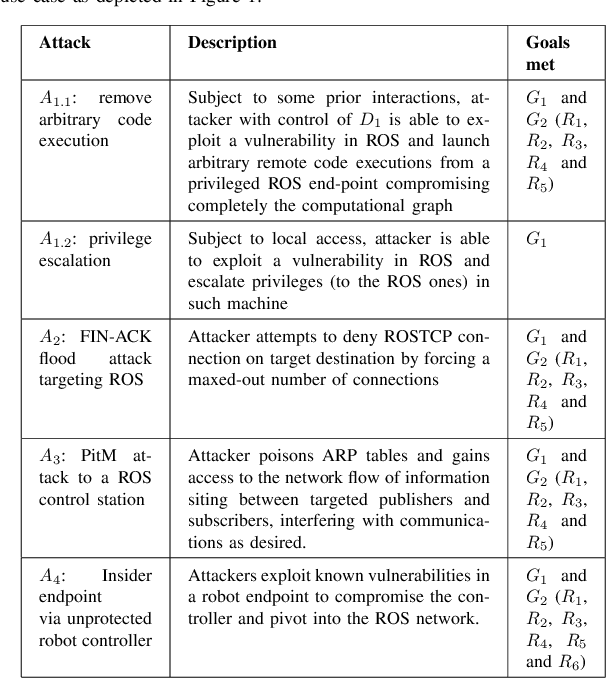

Can ROS be used securely in industry? Red teaming ROS-Industrial

Sep 17, 2020

Abstract: With its growing use in industry, ROS is rapidly becoming a standard in robotics. While developments in ROS 2 show promise, the slow adoption cycles in industry will push widespread ROS 2 industrial adoption years from now. ROS will prevail in the meantime, which raises the question: can ROS be used securely for industrial use cases even though its origins didn't consider it? The present study analyzes this question experimentally by performing a targeted offensive security exercise in a synthetic industrial use case involving ROS-Industrial and ROS packages. Our exercise results in four groups of attacks which manage to compromise the ROS computational graph, and all except one take control of most robotic endpoints at will. To the best of our knowledge and given our setup, results do not favour the secure use of ROS in industry today; however, we managed to confirm that the security of certain robotic endpoints holds and remain optimistic about securing ROS industrial deployments.
