Emmanouil Panaousis

MULTI-FLGANs: Multi-Distributed Adversarial Networks for Non-IID distribution

Jun 24, 2022

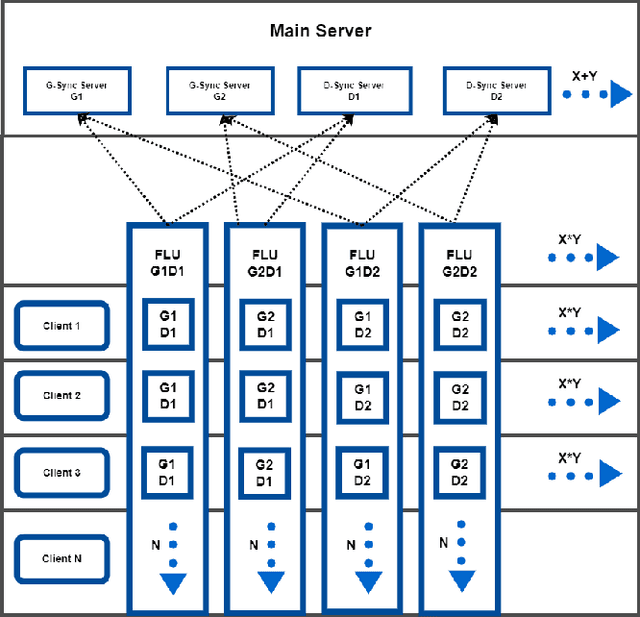

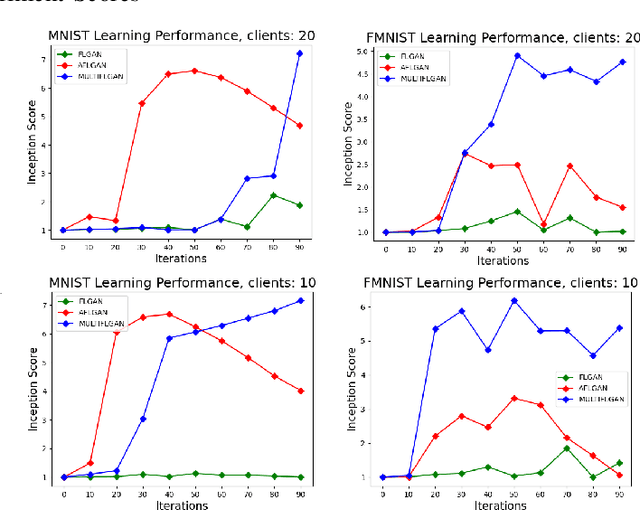

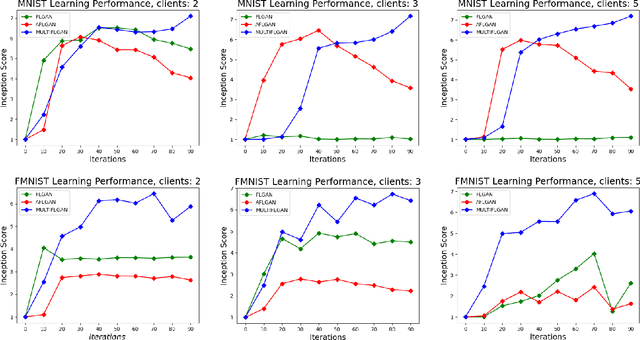

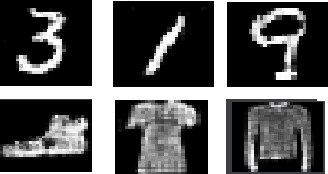

Abstract: Federated learning is an emerging concept in distributed machine learning. It has enabled GANs to benefit from rich distributed training data while preserving privacy. However, in a non-IID setting, current federated GAN architectures are unstable, struggle to learn distinct features, and are vulnerable to mode collapse. In this paper, we propose a novel architecture, MULTI-FLGAN, to address low-quality images, mode collapse, and instability on non-IID datasets. Our results show that MULTI-FLGAN is four times as stable and performant (i.e., high inception score) on average over 20 clients compared to the baseline FLGAN.

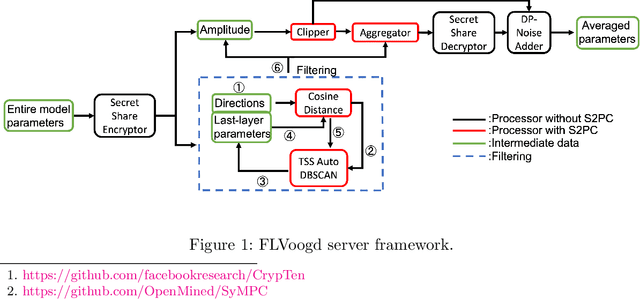

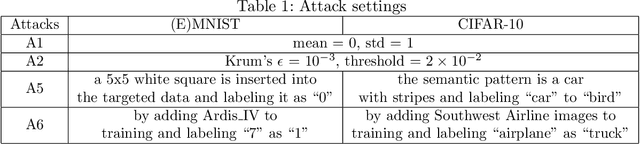

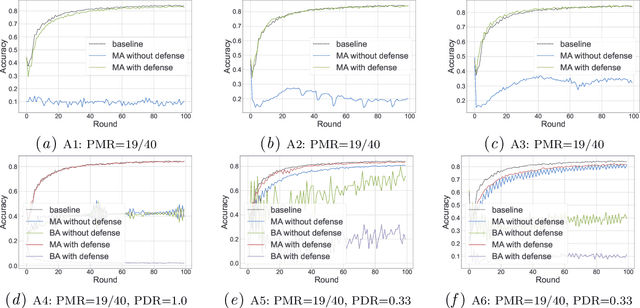

FLVoogd: Robust And Privacy Preserving Federated Learning

Jun 24, 2022

Abstract: In this work, we propose FLVoogd, an updated federated learning method in which servers and clients collaboratively eliminate Byzantine attacks while preserving privacy. In particular, servers use automatic Density-based Spatial Clustering of Applications with Noise (DBSCAN) combined with S2PC to cluster the benign majority without acquiring sensitive personal information. Meanwhile, clients build dual models and perform test-based distance control to adjust their local models toward the global one, thereby achieving personalization. Our framework is automatic and adaptive, so servers and clients do not need to tune parameters during training. In addition, our framework leverages Secure Multi-party Computation (SMPC) operations, including multiplications, additions, and comparisons; costly operations such as division and square root are not required. Evaluations are carried out on conventional image classification datasets. The results show that FLVoogd can effectively reject malicious uploads in most scenarios while avoiding data leakage on the server side.
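As a rough illustration of the clustering idea in this abstract, the sketch below runs a plain-text DBSCAN over client update vectors and keeps the largest cluster as the presumed benign majority. It is not the FLVoogd protocol: there is no S2PC or SMPC layer, the `eps` and `min_pts` values are fixed by hand rather than chosen automatically, and the function names (`dbscan`, `benign_majority`) and toy `updates` are assumptions made for this example. Distances are compared in squared form, so the sketch needs no square root.

```python
from collections import Counter


def sq_dist(a, b):
    """Squared Euclidean distance (no square root needed for comparisons)."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def dbscan(points, eps, min_pts):
    """Label each point with a cluster id (0, 1, ...) or -1 for noise."""
    eps_sq = eps * eps
    n = len(points)
    # Each point's neighborhood includes the point itself.
    neighbors = [
        [j for j in range(n) if sq_dist(points[i], points[j]) <= eps_sq]
        for i in range(n)
    ]
    labels = [None] * n
    cluster = -1
    for i in range(n):
        if labels[i] is not None:
            continue
        if len(neighbors[i]) < min_pts:
            labels[i] = -1  # provisionally noise; may become a border point
            continue
        cluster += 1
        labels[i] = cluster
        queue = list(neighbors[i])
        while queue:
            j = queue.pop()
            if labels[j] == -1:
                labels[j] = cluster  # noise reachable from a core point: border
            if labels[j] is not None:
                continue
            labels[j] = cluster
            if len(neighbors[j]) >= min_pts:
                queue.extend(neighbors[j])  # only core points expand the cluster
    return labels


def benign_majority(updates, eps, min_pts):
    """Return indices of the updates in the largest cluster (hypothetical helper)."""
    labels = dbscan(updates, eps, min_pts)
    counts = Counter(label for label in labels if label != -1)
    if not counts:
        return []
    biggest, _ = counts.most_common(1)[0]
    return [i for i, label in enumerate(labels) if label == biggest]


# Toy data: five similar updates and two outliers standing in for Byzantine uploads.
updates = [
    [0.10, 0.20], [0.12, 0.19], [0.09, 0.21], [0.11, 0.22], [0.10, 0.18],
    [5.0, -4.0], [-6.0, 7.0],
]
accepted = benign_majority(updates, eps=0.1, min_pts=3)
print(accepted)
```

In this toy run the five tightly grouped updates form one cluster and the two distant vectors are labeled as noise, so only the first five indices would be passed on to aggregation.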

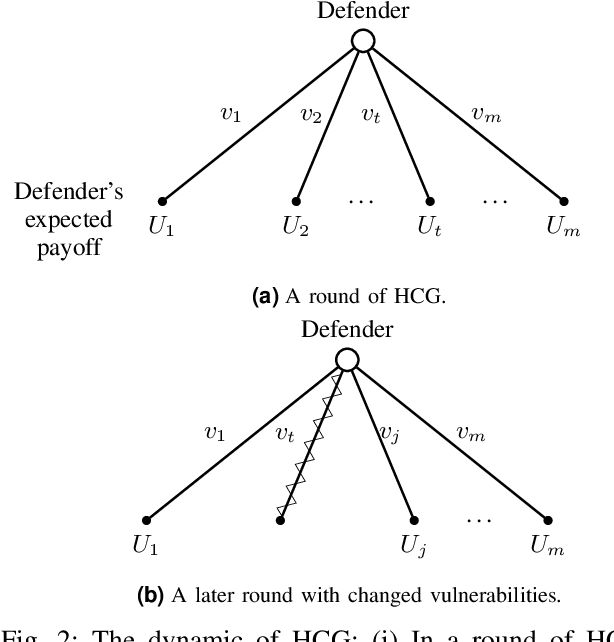

HoneyCar: A Framework to Configure Honeypot Vulnerabilities on the Internet of Vehicles

Nov 03, 2021

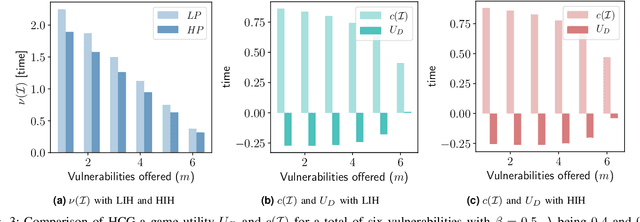

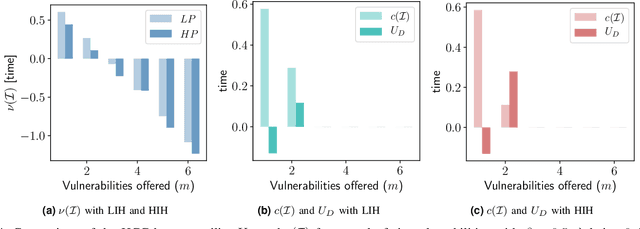

Abstract: The Internet of Vehicles (IoV), whereby interconnected vehicles communicate with each other and with road infrastructure on a common network, has promising socio-economic benefits but also poses new cyber-physical threats. Data on vehicular attackers can be realistically gathered through cyber threat intelligence using systems like honeypots. Admittedly, configuring honeypots introduces a trade-off between the level of honeypot-attacker interaction and the overheads and costs incurred in implementing and monitoring these honeypots. We argue that effective deception can be achieved by strategically configuring the honeypots to represent components of the IoV and engage attackers to collect cyber threat intelligence. In this paper, we present HoneyCar, a novel decision support framework for honeypot deception in IoV. HoneyCar builds upon a repository of known vulnerabilities of autonomous and connected vehicles found in the Common Vulnerabilities and Exposures (CVE) data within the National Vulnerability Database (NVD) to compute optimal honeypot configuration strategies. Taking a game-theoretic approach, we model the adversarial interaction as a repeated imperfect-information zero-sum game in which the IoV network administrator chooses a set of vulnerabilities to offer in a honeypot and a strategic attacker chooses a vulnerability of the IoV to exploit under uncertainty. Our investigation is substantiated by examining two versions of the game, with and without the re-configuration cost, to empower the network administrator to determine optimal honeypot configurations. We evaluate HoneyCar in a realistic use case to support decision makers in determining optimal honeypot configuration strategies for strategic deployment in IoV.
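To make the zero-sum framing concrete, here is a minimal sketch that approximates a mixed strategy for a one-shot matrix game using fictitious play. This is a deliberate simplification and not the HoneyCar model: the abstract describes a repeated imperfect-information game, the solution method here is swapped in for illustration, and the 2x2 payoff values are hypothetical (rows stand for honeypot configurations, columns for the vulnerability the attacker exploits, entries for the administrator's gain).

```python
def fictitious_play(payoff, rounds=20000):
    """Approximate a zero-sum matrix game; payoff[i][j] is the row player's gain.

    Returns the empirical row and column strategies plus a lower and an upper
    bound that bracket the value of the game.
    """
    m, n = len(payoff), len(payoff[0])
    row_counts = [0] * m
    col_counts = [0] * n
    row_gain = [0.0] * m  # cumulative gain of each row against the column history
    col_loss = [0.0] * n  # cumulative gain conceded by each column to the row history
    i, j = 0, 0
    for _ in range(rounds):
        row_counts[i] += 1
        col_counts[j] += 1
        for r in range(m):
            row_gain[r] += payoff[r][j]
        for c in range(n):
            col_loss[c] += payoff[i][c]
        # Each side best-responds to the opponent's empirical play so far.
        i = max(range(m), key=lambda r: row_gain[r])
        j = min(range(n), key=lambda c: col_loss[c])
    row_strategy = [count / rounds for count in row_counts]
    col_strategy = [count / rounds for count in col_counts]
    lower = min(col_loss) / rounds  # what the row player's empirical mix guarantees
    upper = max(row_gain) / rounds  # the most the column player's empirical mix concedes
    return row_strategy, col_strategy, lower, upper


# Hypothetical payoffs to the administrator (not taken from the paper).
payoff = [
    [2, -1],
    [-1, 1],
]
row_strategy, col_strategy, lower, upper = fictitious_play(payoff)
print(row_strategy, col_strategy, lower, upper)
```

For this toy matrix the exact solution can be worked out by hand: the administrator mixes the two configurations with probabilities 0.4 and 0.6, and the value of the game is 0.2. The returned `lower` and `upper` bounds always bracket that value, and the gap between them shrinks as `rounds` grows.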
