Akash Amalan

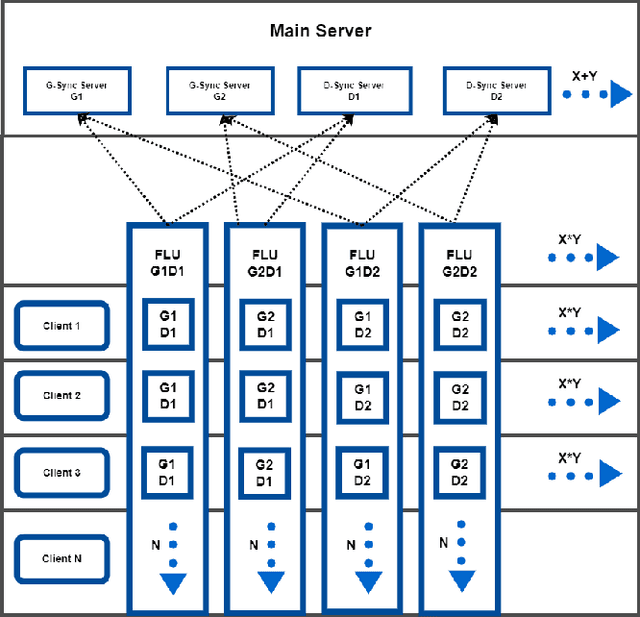

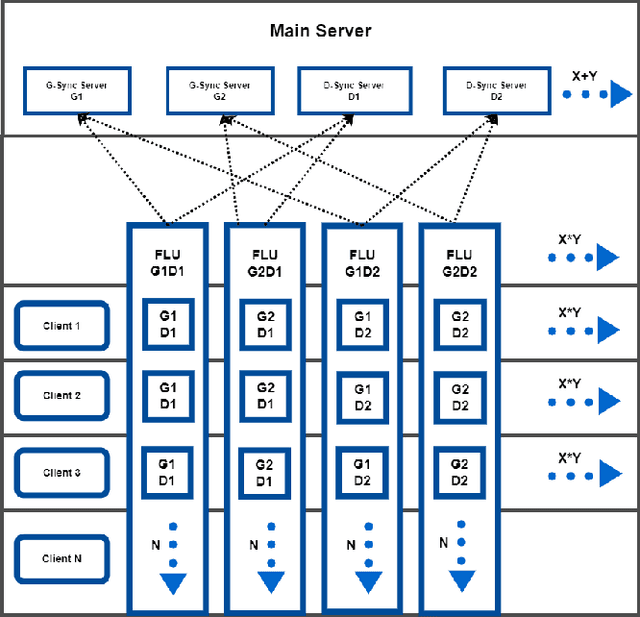

MULTI-FLGANs: Multi-Distributed Adversarial Networks for Non-IID distribution

Jun 24, 2022

Figures and Tables:

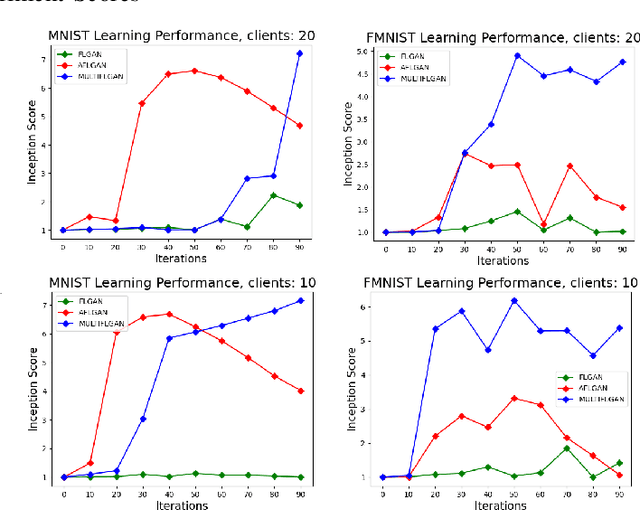

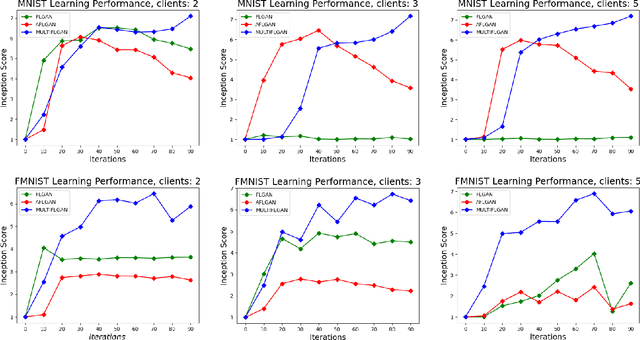

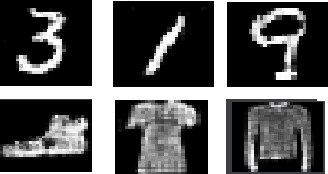

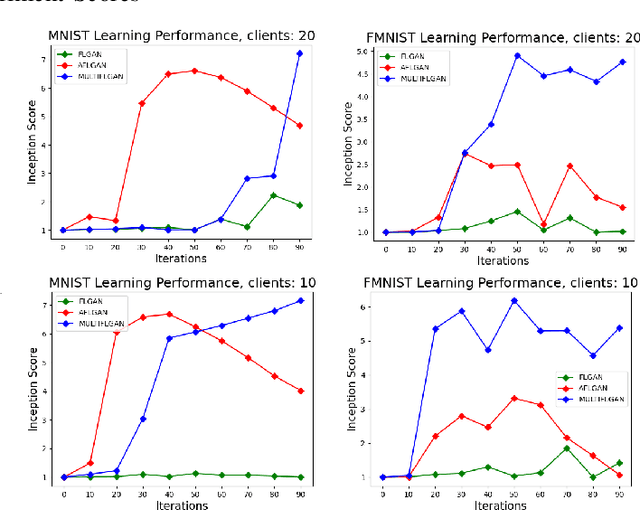

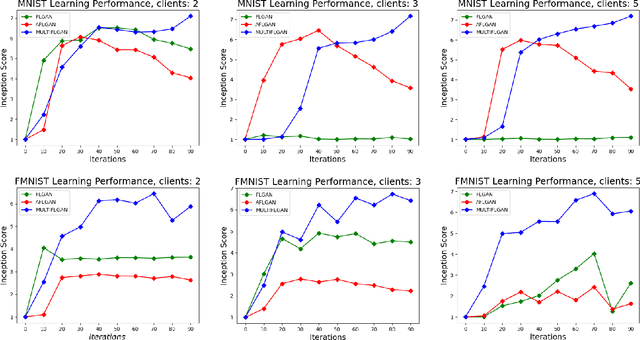

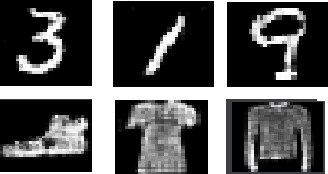

Abstract: Federated learning is an emerging concept in distributed machine learning. It has enabled GANs to benefit from rich distributed training data while preserving privacy. However, in a non-IID setting, current federated GAN architectures are unstable: they struggle to learn distinct features and are vulnerable to mode collapse. In this paper, we propose a novel architecture, MULTI-FLGAN, to address the problems of low-quality images, mode collapse, and instability on non-IID datasets. Our results show that MULTI-FLGAN is four times as stable and performant (i.e., high inception score) on average over 20 clients compared to the baseline FLGAN.
